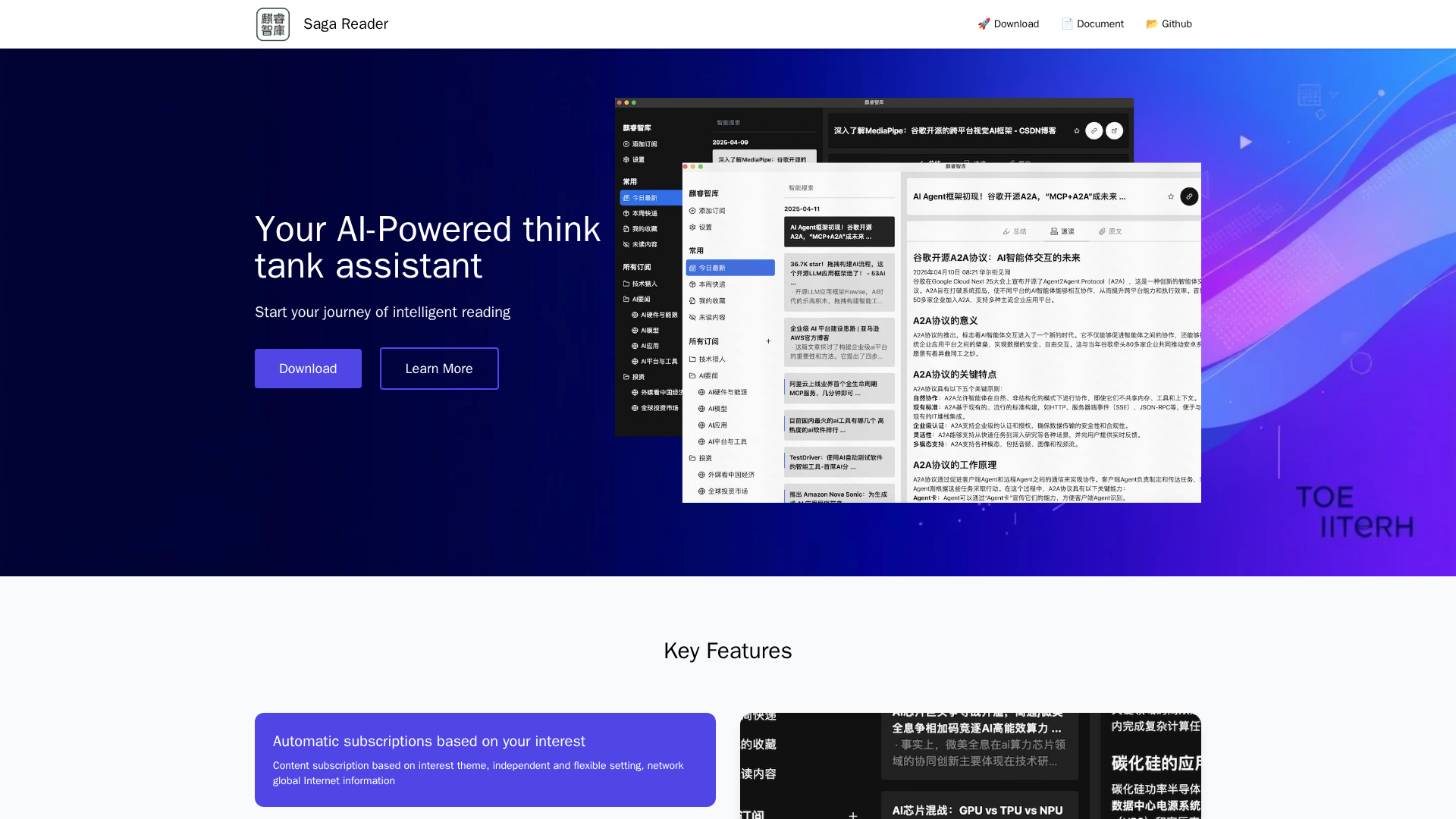

Overview

Saga Reader (Chinese name: 麒睿智库) is an open-source, cross-platform, AI-powered internet reader designed to act as a personal think-tank assistant. It automatically retrieves and curates content based on user-defined topics and keyword subscriptions, summarizes and translates articles, and provides an interactive AI companion for discussing and exploring reading material in depth. The app emphasizes privacy (local-first storage), performance (built with Rust, Tauri, and Svelte), and flexibility: it can run with cloud LLMs or entirely locally (for example, with local models served through Ollama). Saga Reader supports live search, RSS, and background updates, offers a lightweight UI, and targets users who want a private, customizable research and reading workflow.

Key Features

Personalized Topic Subscriptions

Users define keywords/topics; Saga Reader automatically finds, fetches, and updates content matching those interests.
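As a rough illustration of keyword-based subscriptions, the sketch below filters fetched articles against a user's keyword list. It is not Saga Reader's actual implementation: the `Article` type and the `matches_subscription` and `filter_articles` helpers are hypothetical names, and simple case-insensitive substring matching is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical minimal article record, for illustration only.
    title: str
    body: str


def matches_subscription(article: Article, keywords: list[str]) -> bool:
    """Return True if any non-blank keyword occurs in the title or body."""
    text = f"{article.title} {article.body}".casefold()
    return any(kw.casefold() in text for kw in keywords if kw.strip())


def filter_articles(articles: list[Article], keywords: list[str]) -> list[Article]:
    """Keep only the articles that match at least one subscribed keyword."""
    return [a for a in articles if matches_subscription(a, keywords)]
```

A real reader would likely combine this kind of filter with search queries and feed polling; the substring check only shows the matching step.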

Summarization & Translation

AI-generated article summaries and high-quality translations to make foreign-language content feel native.

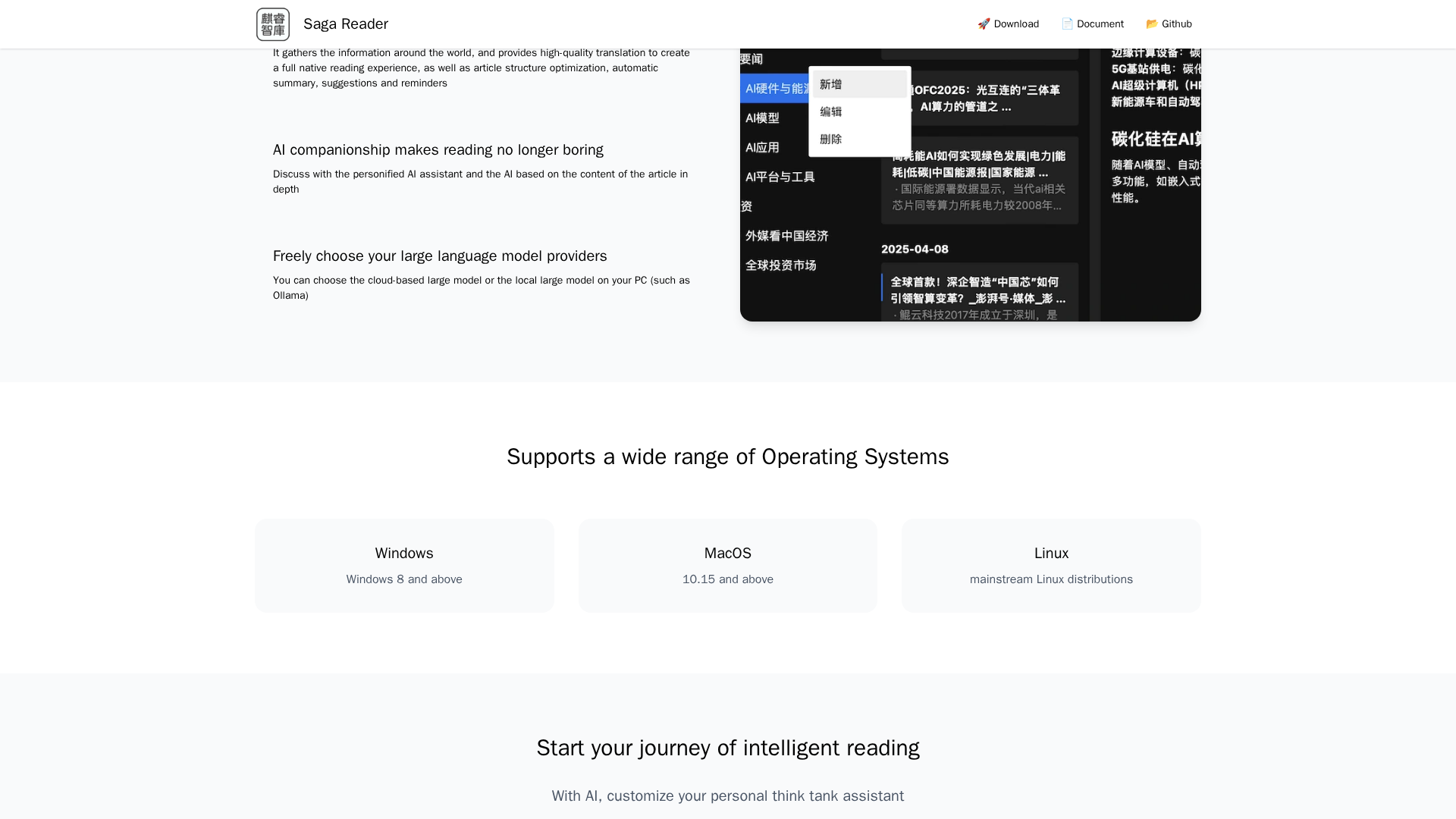

Local and Cloud LLM Support

Works with cloud LLMs or local LLMs (e.g., via Ollama), giving users control over data and latency.
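To give a sense of what talking to a local model involves, here is a minimal sketch that asks a locally running Ollama server for a summary through its `/api/generate` endpoint. It does not reflect how Saga Reader itself calls Ollama; the model name `llama3`, the prompt wording, and the helper names `build_summary_request` and `summarize` are assumptions.

```python
import json
import urllib.request

# Default address of a local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_summary_request(article_text: str, model: str = "llama3") -> dict:
    """Build the JSON payload for a non-streaming generate call."""
    return {
        "model": model,  # assumed model name; use any model you have pulled
        "prompt": f"Summarize the following article in three sentences:\n\n{article_text}",
        "stream": False,  # ask for one complete JSON response
    }


def summarize(article_text: str, model: str = "llama3") -> str:
    """Send the request to the local server and return the generated text."""
    payload = json.dumps(build_summary_request(article_text, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Calling `summarize(text)` requires Ollama to be running locally with the chosen model available. Because the request never leaves the machine, the article text stays private.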

Interactive AI Companion

Conversational AI agent to discuss content, suggest follow-ups, and help with deeper understanding.

Cross‑Platform Desktop App (Rust/Tauri/Svelte)

Lightweight, performant desktop application for Windows, macOS, and Linux, with the option to view articles in-app or in an external browser.

Privacy-first Local Storage

Data is stored locally on the user’s machine by default; no mandatory telemetry or third‑party tracking.

Who Can Use This Tool?

- Researchers: Automate literature tracking, summarization, and multilingual reading workflows.

- Developers: Integrate or extend the open-source reader and run local LLMs for private experiments.

- Knowledge Workers: Stay updated with curated topics and digest complex information quickly.

- Privacy-conscious Users: Run local models and keep all reading data stored privately on their machine.

Pricing Plans

No paid plans are listed. The project is open source under the MIT license and free to use.

Pros & Cons

✓ Pros

- ✓ Open-source and free to use (MIT license)

- ✓ Local-first architecture preserves privacy and offers offline/local model operation

- ✓ Supports both local and cloud LLM providers (flexible deployment)

- ✓ Lightweight, high-performance stack (Rust backend, Tauri desktop, Svelte frontend)

- ✓ Automatic topic/keyword-based subscriptions and background updates

- ✓ Built-in summarization, translation, and an interactive AI companion for deeper engagement

✗ Cons

- ✗ New project; ecosystem and third-party integrations still maturing

- ✗ Setup complexity for non-technical users (local LLMs and Ollama integration require configuration)

- ✗ No official hosted/cloud SaaS pricing or managed service (users must self-host or use cloud LLMs)

- ✗ Limited dedicated commercial support (primarily community / GitHub issues)
