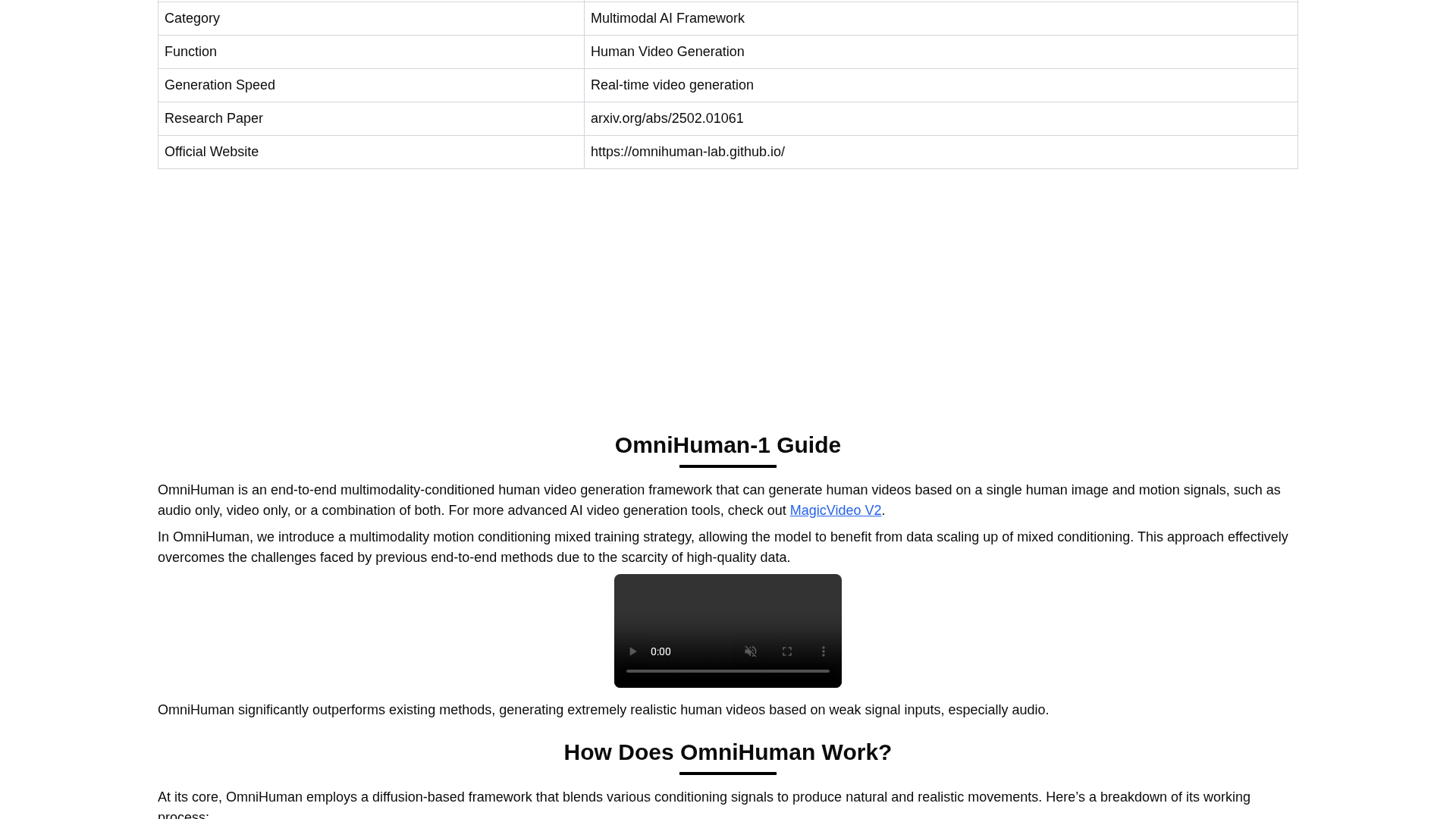

Overview

OmniHuman-1 is a multimodal human animation system developed by ByteDance. It uses a diffusion-transformer backbone to generate realistic human videos from a single reference image plus a motion signal (audio or a driving video). It supports portrait, half-body, and full-body outputs at arbitrary aspect ratios, and it can animate stylized subjects such as cartoons, animals, and objects. The official site highlights real-time lip-sync and gesture realism, and it mentions Seedance and Dreamina.

The project is documented across a Home page, a Demo Gallery, a Text-to-Video article, use-case pages, a technical review, and a follow-up OmniHuman-1.5 page. The arXiv paper and lab pages provide architecture and evaluation context.

Training is described as Omni-Condition training, which mixes weak and strong conditioning signals to scale data efficiently. The reported training data totals about 18.7k hours.

ByteDance has not publicly released official weights or download links, so reproducibility appears to rely on the materials at omnihuman-lab.github.io. The text-to-video feature may not be working, pricing is not listed, and access appears limited to demos or private use. Open questions remain about licensing, API access, and dataset transparency.
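The idea of mixing weak and strong conditioning signals during training can be illustrated with a toy sampler. This is a minimal sketch, not ByteDance's implementation: the condition names, the assumption that text is the weakest signal and pose the strongest, and the keep-probabilities are all hypothetical values chosen for illustration. It only shows how a training loop might activate stronger conditions less often, so that clips lacking them still contribute.

```python
import random

# Hypothetical keep-probabilities (illustrative values, not from the source).
# Assumption: weaker signals are kept more often than stronger ones.
KEEP_PROB = {"text": 0.9, "audio": 0.5, "pose": 0.25}


def sample_active_conditions(available, rng):
    """Pick which of a clip's available conditions to feed the model this step."""
    return {name for name in available if rng.random() < KEEP_PROB[name]}


def count_usage(num_steps, seed=0):
    """Count how often each condition is active over many simulated steps."""
    rng = random.Random(seed)
    counts = {name: 0 for name in KEEP_PROB}
    for _ in range(num_steps):
        for name in sample_active_conditions(["text", "audio", "pose"], rng):
            counts[name] += 1
    return counts


if __name__ == "__main__":
    # Prints per-condition activation counts; text should dominate, pose should be rarest.
    print(count_usage(10_000))
```

In a real pipeline the sampled set would decide which condition embeddings are passed to the model and which are dropped for that step; the actual ratios used for OmniHuman-1 are not given in this listing.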

Key Features

Single-reference input

Generates videos from a single reference image plus a motion signal (audio or a driving video).

Full-body support

Outputs include portrait, half-body, and full-body videos.

Real-time lip-sync and motion fidelity

The official site highlights real-time lip-sync with accurate mouth movement and convincing body motion.

Gesture realism

Captures natural gestures and body language.

Stylization options

Supports stylized subjects such as cartoons, animals, and objects.

Seedance/Dreamina mentions

The official site mentions Seedance and Dreamina; how they relate to OmniHuman-1 is not explained.

Who Can Use This Tool?

- Creators: generate realistic human videos from a reference image and motion signals

- Marketers and virtual spokespeople: produce promotional or virtual spokesperson videos

- Education and entertainment professionals: build educational or entertainment content with human-like agents

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- ✓ Strong lip-sync and motion fidelity

- ✓ Multi-modal conditioning support (image, motion, text, pose)

- ✓ Portrait to full-body output with flexible aspect ratios

- ✓ Active research presence and public papers

✗ Cons

- ✗ Official model weights and download links not publicly released

- ✗ Limited public access (demo-only at the time observed)

- ✗ Text-to-video feature may be non-functional

- ✗ No explicit licensing or safety policy published