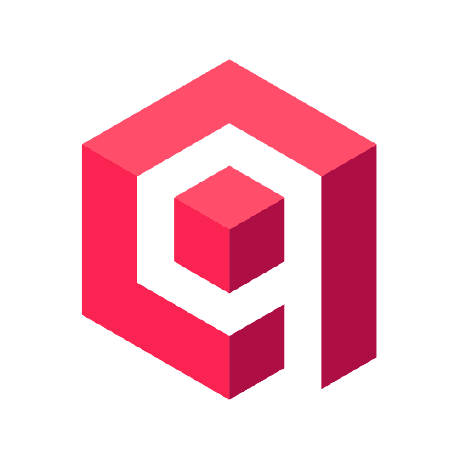

Topic Overview

This topic examines the practical ecosystem around safety- and alignment-oriented LLMs, typified by Claude Opus 4.5 and its contemporary competitors, and how operators combine agent observability, on-device inference, and knowledge-base connectors to reduce risk and improve reliability. By 2025 the focus has shifted from raw capability to controllability: systems are instrumented with Model Context Protocol (MCP) servers, semantic memory layers, and secure tool sandboxes so that models can be monitored, constrained, and grounded in up-to-date sources.

Key components include mentor or metacognitive layers (e.g., Vibe Check) that supply external “vibe” signals to steer agent behavior; secure code-execution endpoints (pydantic-ai/mcp-run-python) that sandbox Python via Deno and Pyodide; production hybrid memory services (mcp-memory-service) that combine fast local reads with cloud sync; and semantic search backends such as Qdrant for vectorized memory and retrieval. On-prem RAG solutions (Minima) and content connectors (obsidian-mcp, Wikipedia MCP, Scholarly) let teams keep grounding data private and auditable. Observability integrations (the Prometheus and Dynatrace MCP servers) feed runtime metrics and traces back into the loop so teams can detect drift, failures, or misalignment.

Together these elements form interoperable stacks in which safety properties are enforced through tooling (mentor signals, sandboxed tool calls, verifiable memory, and metric-driven observability) rather than by model architecture alone. For practitioners evaluating Claude Opus 4.5 against alternatives, the immediate question is therefore not only model accuracy but how well the model integrates with MCP-based connectors, local inference options, and production memory and monitoring systems. This ecosystem view is now central to deploying aligned, auditable LLM applications.
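MCP messages are JSON-RPC 2.0, and a client invokes a server tool with a `tools/call` request that carries the tool `name` and its `arguments`. The minimal, standard-library-only sketch below serializes such a request and unpacks the text content of a response, treating both a protocol-level `error` and a result flagged `isError` as failures. The tool name `run_python_code` and its `code` argument are hypothetical placeholders; a real server advertises its tools and input schemas through `tools/list`, and the sketch deliberately omits the initialization handshake and the transport layer.

```python
import json
from itertools import count
from typing import Any, Dict

# JSON-RPC 2.0 matches each response to its request through the "id" field.
_request_ids = count(1)

def make_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP "tools/call" request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)

def read_tool_result(raw: str) -> str:
    """Return the text parts of a tools/call response, raising on either kind of failure."""
    message = json.loads(raw)
    if "error" in message:
        # Protocol-level failure, e.g. an unknown tool or invalid parameters.
        raise RuntimeError(message["error"].get("message", "unknown error"))
    result = message["result"]
    texts = [part["text"] for part in result.get("content", []) if part.get("type") == "text"]
    if result.get("isError"):
        # The tool ran but reported a failure; surface it instead of treating it as data.
        raise RuntimeError("tool error: " + " ".join(texts))
    return "\n".join(texts)

# Hypothetical tool name and argument; a real server lists its tools via "tools/list".
print(make_tool_call("run_python_code", {"code": "print(1 + 1)"}))
```

Keeping the two failure paths separate matters for monitoring: a protocol error usually points at a client or configuration bug, while `isError` means the sandboxed tool itself failed.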
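The idea of enforcing safety through tooling rather than by the model alone can be illustrated with a small policy layer at the tool boundary: a call is dispatched only if the tool is on an allowlist, and every outcome is counted so it can be exported to a monitoring system. This is a hypothetical sketch; `ToolGate` and the metric names are invented for illustration and do not describe how any of the servers listed here work.

```python
from collections import Counter
from typing import Any, Callable, Dict, Set

class ToolGate:
    """Hypothetical policy layer: only allowlisted tools run, and every decision is counted."""

    def __init__(self, tools: Dict[str, Callable[..., Any]], allowed: Set[str]) -> None:
        self.tools = tools
        self.allowed = allowed
        # Outcome counters, e.g. for export to a monitoring system.
        self.metrics: Counter = Counter()

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self.allowed or name not in self.tools:
            self.metrics["tool_calls_blocked"] += 1
            raise PermissionError(f"tool {name!r} is not allowed")
        try:
            result = self.tools[name](**kwargs)
        except Exception:
            # Count the failure, then let the caller see the original exception.
            self.metrics["tool_calls_failed"] += 1
            raise
        self.metrics["tool_calls_ok"] += 1
        return result

gate = ToolGate({"add": lambda a, b: a + b, "delete_all": lambda: None}, allowed={"add"})
print(gate.call("add", a=2, b=3))  # 5
try:
    gate.call("delete_all")
except PermissionError as exc:
    print(exc)
print(dict(gate.metrics))
```

The design choice here is that a blocked call is a counted event rather than a silent no-op, so a spike in `tool_calls_blocked` becomes an observable signal that an agent is attempting actions outside its policy.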

MCP Server Rankings – Top 10

Vibe Check: Plug-and-play mentor layer for MCP servers to prevent overengineering and align LLMs.

pydantic-ai/mcp-run-python: Run Python code in a secure sandbox via MCP tool calls, powered by Deno and Pyodide.

mcp-memory-service: Production-ready MCP memory service with zero locks, a hybrid backend, and semantic memory search.

Qdrant: Implements a semantic memory layer on top of the Qdrant vector search engine.

Minima: An MCP server for RAG (retrieval-augmented generation) on local files.

obsidian-mcp (by Steven Stavrakis): An MCP server for Obsidian.md with tools for searching, reading, writing, and organizing notes.

Scholarly: An MCP server to search for scholarly and academic articles.

Wikipedia MCP: Access and search Wikipedia articles via MCP for AI-powered information retrieval.

Prometheus: Query and analyze Prometheus, the open-source monitoring system.

Dynatrace: Manage and interact with the Dynatrace Platform for real-time observability and monitoring.
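The semantic memory entries in the list above (mcp-memory-service and the layer built on the Qdrant vector search engine) retrieve stored items by vector similarity rather than exact keywords. A minimal brute-force sketch of that idea follows, assuming embeddings are produced elsewhere by some embedding model; `SemanticMemory` is a hypothetical in-process stand-in, not Qdrant's client API, and a production vector engine would use an approximate index instead of scoring every item.

```python
import math
from typing import List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class SemanticMemory:
    """Hypothetical stand-in for a vector store: brute-force cosine search over all items."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def store(self, text: str, vector: List[float]) -> None:
        self.items.append((text, vector))

    def search(self, query: List[float], k: int = 3) -> List[Tuple[str, float]]:
        # Score every stored item, then keep the k most similar.
        scored = [(text, cosine(query, vec)) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

# Toy 3-dimensional vectors stand in for real embeddings.
memory = SemanticMemory()
memory.store("deploys use a blue-green rollout", [0.9, 0.1, 0.0])
memory.store("the on-call rota is weekly", [0.0, 0.2, 0.9])
print(memory.search([1.0, 0.0, 0.0], k=1))  # top hit is the deployment note
```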
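The hybrid memory backend described in the overview, which combines fast local reads with cloud sync, can be sketched as a local store plus a write-behind queue: writes land locally and are queued, reads never wait on the network, and a separate `sync` step pushes queued writes upstream. The `HybridMemory` class and its `push` callback are hypothetical; this is not mcp-memory-service's implementation, and the remote side is simulated with a plain dictionary.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional, Tuple

class HybridMemory:
    """Hypothetical hybrid backend: reads hit a local dict; writes are queued for cloud sync."""

    def __init__(self, push: Callable[[str, str], None]) -> None:
        self.local: Dict[str, str] = {}
        self.pending: Deque[Tuple[str, str]] = deque()
        self.push = push  # stand-in for a remote upload call

    def put(self, key: str, value: str) -> None:
        self.local[key] = value            # visible to reads immediately
        self.pending.append((key, value))  # uploaded later, off the hot path

    def get(self, key: str) -> Optional[str]:
        return self.local.get(key)         # never touches the network

    def sync(self) -> int:
        """Flush queued writes in order; if push raises, the unsent item stays queued."""
        sent = 0
        while self.pending:
            key, value = self.pending[0]
            self.push(key, value)          # an exception here leaves the item in the queue
            self.pending.popleft()
            sent += 1
        return sent

cloud: Dict[str, str] = {}  # simulated remote store
mem = HybridMemory(lambda k, v: cloud.__setitem__(k, v))
mem.put("pref:theme", "dark")
print(mem.get("pref:theme"), cloud)  # dark {}
print(mem.sync(), cloud)             # 1 {'pref:theme': 'dark'}
```

Peeking at the head of the queue and removing it only after a successful push gives at-least-once delivery: a failed sync can be retried without losing writes, at the cost of possibly re-sending one item.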
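Independently of any particular MCP server, Prometheus exposes an HTTP API whose instant-query endpoint is `GET /api/v1/query`, with a PromQL expression in the `query` parameter; a `vector` result lists each series' labels under `metric` and a `[timestamp, "value"]` pair under `value`. The sketch below builds such a URL and parses a response body into a label-to-value map, for example to flag scrape targets whose `up` metric is 0. The base URL (Prometheus listens on port 9090 by default) and the sample body are illustrative assumptions, and no network request is made.

```python
import json
from urllib.parse import urlencode

def instant_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL (GET /api/v1/query)."""
    query_string = urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{query_string}"

def parse_vector(body: str) -> dict:
    """Map each series' sorted label pairs to its sample value for a 'vector' result."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        # Error responses carry "errorType" and "error" fields.
        raise RuntimeError(payload.get("error", "query failed"))
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']!r}")
    values = {}
    for series in data["result"]:
        labels = tuple(sorted(series["metric"].items()))
        # Each sample is [unix_timestamp, "value"]; the value arrives as a string.
        values[labels] = float(series["value"][1])
    return values

print(instant_query_url("http://localhost:9090", "up"))  # http://localhost:9090/api/v1/query?query=up

# Illustrative body shaped like a 'vector' response; not captured from a real server.
sample = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "job": "prometheus"}, "value": [1700000000.0, "1"]},
            {"metric": {"__name__": "up", "job": "node"}, "value": [1700000000.0, "0"]},
        ],
    },
})
down = [dict(labels) for labels, value in parse_vector(sample).items() if value == 0.0]
print(down)  # [{'__name__': 'up', 'job': 'node'}]
```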