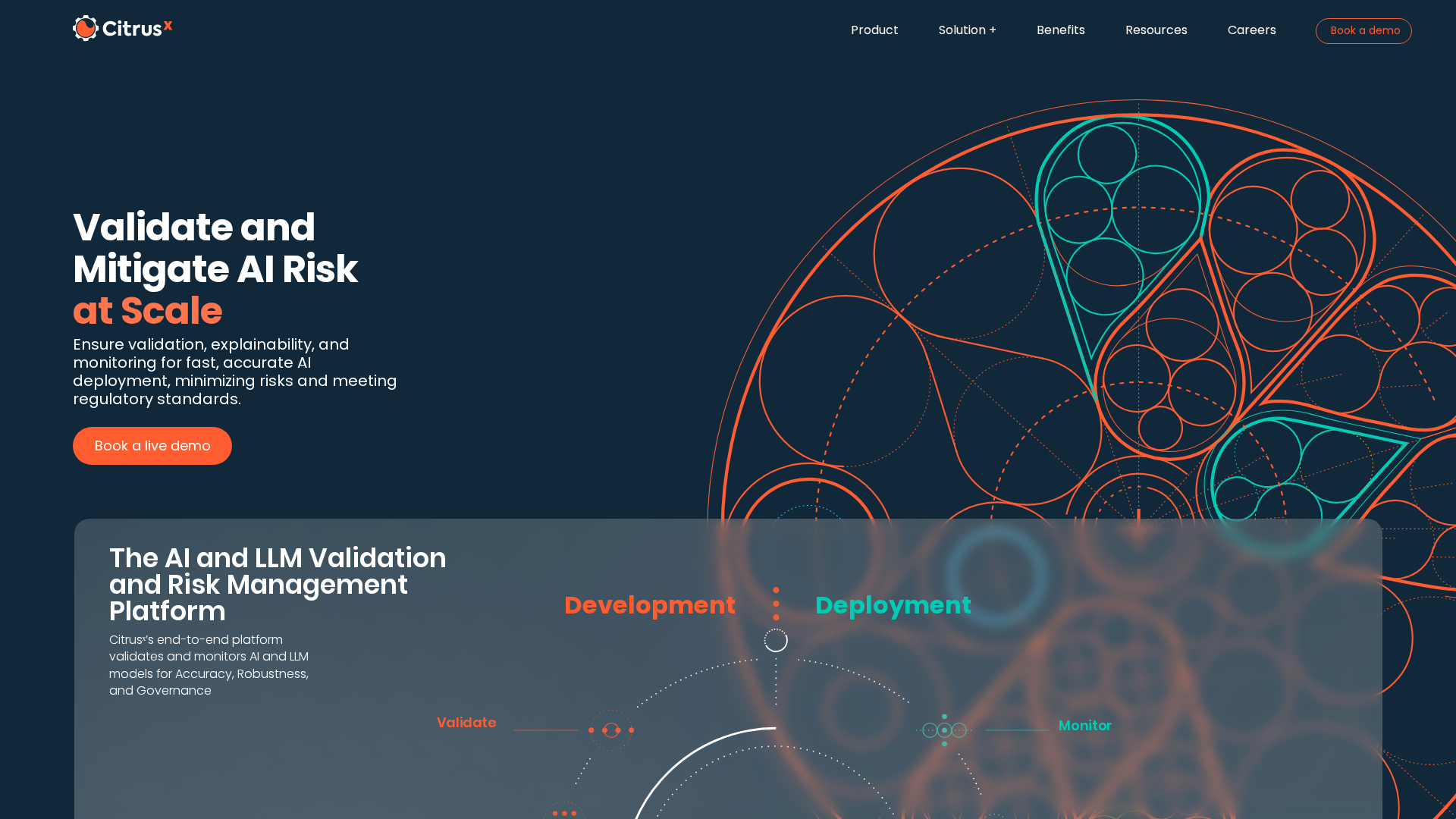

Overview

Citrusˣ is an enterprise-focused platform that validates, explains, monitors, and governs AI and LLM models at scale. It provides high-resolution performance and robustness assessment, real-time monitoring with live alerts, bias and vulnerability detection, and automated reporting and approval workflows to support regulatory compliance. The site mentions alignment with the EU AI Act and ISO 42001, as well as the implications of U.S. SB 53. The product emphasizes explainability at the global, local, cluster, and segment levels, and offers proprietary metrics and methods that surface model vulnerabilities and give actionable mitigation guidance for production readiness. The vendor claims that its proprietary, patent-pending metrics produce higher-resolution performance maps than common explainability baselines.

No public pricing, pricing tiers, or detailed platform, SDK, or API documentation was found on the website; visitors are asked to book a demo or contact the vendor for pricing and integration details. The resources section also appears limited. (Source: citrusx.ai pages: homepage, building-models, ensuring-compliance, book-a-demo.)

Key Features

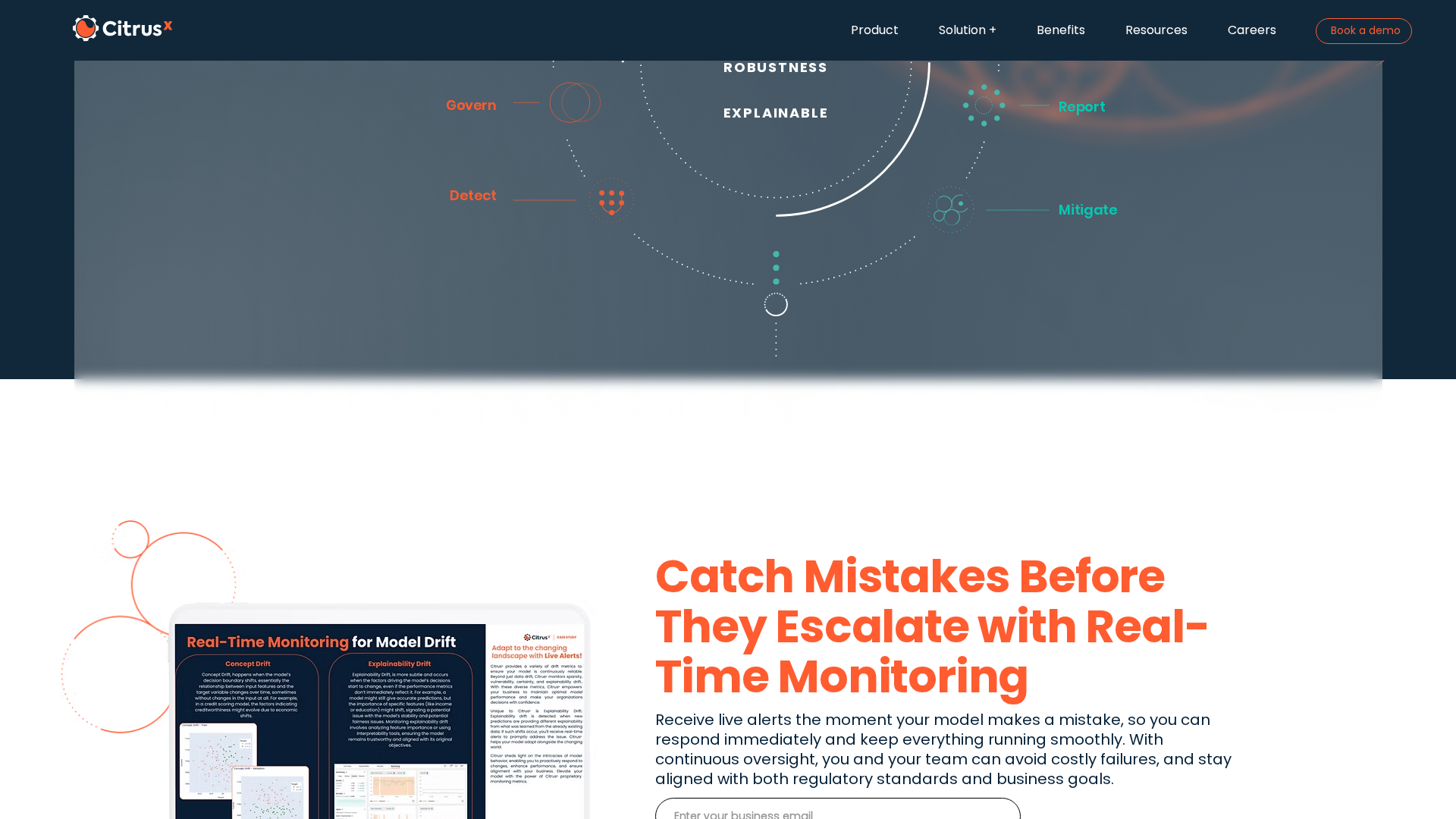

Real-time Validation & Monitoring

Continuously assesses models in production and issues live alerts when anomalies, accuracy drops, or unsafe outputs occur.

Explainability at Multiple Levels

Provides local (per-prediction), global, cluster, and segment-level explainability to help stakeholders understand model decisions.

Bias & Fairness Detection

Detects fairness issues, surfaces hidden risks, and lets teams set tolerance thresholds and run mitigation workflows.

Compliance Reporting & Governance

Generates customizable, cross-domain reports and supports the documentation needed for audits and regulatory alignment. The site cites the EU AI Act and ISO standards.

Drift Detection & Anomaly Identification

Tracks data and performance drift with alerting and recommended remediation steps.

Automated Model Approval Workflows

Streamlines validation-to-production handoffs with automated risk assessment and approval trails.

Who Can Use This Tool?

- Data Scientists: Validate model performance, investigate failures, and improve production reliability.

- Risk & Compliance Officers: Monitor model risk, demonstrate regulatory alignment, and generate audit-ready reports.

- ML Engineers / SREs: Detect drift, receive real-time alerts, and operationalize mitigations for production models.

- Executives & Business Leaders: Assess model safety and business risk to support deployment decisions and stakeholder reporting.

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- ✓ Real-time monitoring and live alerts for model failures and drift.

- ✓ Built-in explainability at multiple granularities (global, local, cluster, and segment levels).

- ✓ Compliance- and governance-focused features (automated reports, audit documentation, alignment with the EU AI Act and ISO standards).

- ✓ Automated bias detection, mitigation guidance, and model approval workflows.

✗ Cons

- ✗ No public pricing or tier information is available on the website.

- ✗ Little developer-facing technical documentation is publicly available.

- ✗ The resources section appears minimal, with placeholder content and few downloadable resources visible.

- ✗ Integration details, supported platforms, and API details are not publicly documented on the site.

Compare with Alternatives

| Feature | Citrusˣ (CitrusX) | Holistic AI | RagaAI |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.2/10 | 8.3/10 | 8.2/10 |
| Real-time Validation | Yes | Yes | Yes |
| Explainability Depth | Multi-level explainability | Governance-focused explainability | Step-level traceability |
| Bias & Fairness | Yes | Partial | Partial |
| Agent Tracing | Partial | No | Yes |
| Compliance Evidence | Yes | Yes | Partial |
| Drift Sensitivity | Yes | Partial | Yes |
| Integration Surface | Limited integrations | Broad integrations and connectors | Developer SDK and APIs |
| Approval Automation | Yes | Partial | No |

Related Articles

- ACAMS NY hosts a 4-hour forum on financial crime prevention, regulators, AI risk modeling, and networking.

- A practical 2025 roadmap to meet the EU AI Act’s staggered deadlines and build robust AI governance.

- Explores Unilever’s move from policy to automated Responsible AI governance and the need for scalable assurance platforms.