Overview

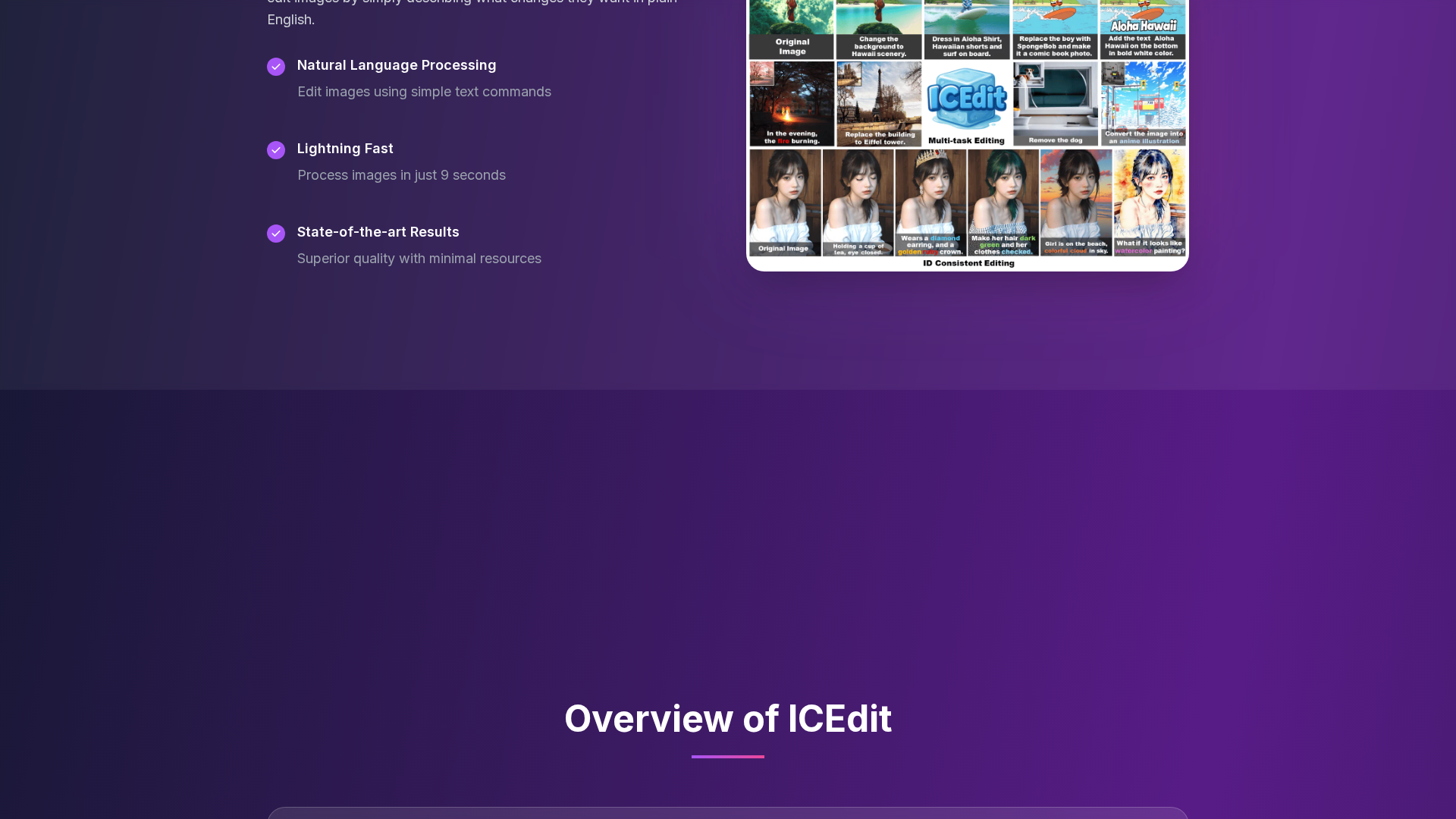

ICEdit AI (In-Context Edit) is an open-source, instruction-driven image editing framework built on large-scale diffusion transformers that performs robust edits from plain-English instructions. It combines three components: an in-context editing scheme that processes the instruction alongside the source image, a LoRA-MoE hybrid tuning strategy for parameter-efficient adaptation, and an early-filter inference-time scaling method that selects better initial noise to improve edit quality. ICEdit is designed to be lightweight and accessible. Training uses only a small fraction of the data and parameters required by prior methods, and inference runs on modest hardware: the project's examples report roughly 9 seconds per image and deployment in 4 GB VRAM environments via ComfyUI. The project provides Gradio/Hugging Face demo Spaces, downloadable checkpoints, and detailed ComfyUI workflows for local use and experimentation.

Key Features

In-Context Instructional Editing

Plain-English edit instructions are processed alongside the source image, so the model follows them without any changes to its architecture.
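A minimal sketch of how the in-context inputs can be laid out, assuming a side-by-side (diptych) formulation: the source image occupies the left half of a double-width canvas, the right half is masked for the model to generate, and the instruction is wrapped in a prompt describing both panels. The function name, the prompt wording, and the box convention are illustrative assumptions, not the project's API.

```python
from dataclasses import dataclass

# Hypothetical prompt template (assumption); the project's exact wording may differ.
DIPTYCH_TEMPLATE = (
    "A diptych with two side-by-side images of the same scene. "
    "On the right, the scene is exactly the same as on the left but {instruction}"
)


@dataclass(frozen=True)
class InContextInputs:
    prompt: str
    canvas_size: tuple[int, int]           # (width, height) of the double-width canvas
    source_box: tuple[int, int, int, int]  # (x0, y0, x1, y1) where the source is pasted
    mask_box: tuple[int, int, int, int]    # (x0, y0, x1, y1) region the model must fill


def build_incontext_inputs(width: int, height: int, instruction: str) -> InContextInputs:
    """Lay out a (width x height) source image as the left panel of a diptych."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    instruction = instruction.strip()
    if not instruction:
        raise ValueError("instruction must not be empty")
    return InContextInputs(
        prompt=DIPTYCH_TEMPLATE.format(instruction=instruction),
        canvas_size=(2 * width, height),
        source_box=(0, 0, width, height),        # left half: unchanged source
        mask_box=(width, 0, 2 * width, height),  # right half: generated by the model
    )


inputs = build_incontext_inputs(512, 768, "the cat is wearing a red hat.")
print(inputs.canvas_size)  # (1024, 768)
print(inputs.mask_box)     # (512, 0, 1024, 768)
```

Pasting the source into `source_box` and handing the canvas, mask, and prompt to an inpainting-capable diffusion pipeline would then let the model generate the edited right panel; that pipeline call is outside this sketch.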

LoRA-MoE Hybrid Tuning

Combines Low-Rank Adaptation (LoRA) with Mixture-of-Experts (MoE) routing, keeping fine-tuning efficient and adaptable with only a small set of trainable parameters.
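To make the idea concrete, here is a pure-Python sketch of one generic way to combine LoRA with MoE routing: a frozen base weight plus several low-rank experts, where a small router scores the experts for each input and only the top-k contribute. This is a textbook-style illustration; the expert count, routing rule, and scaling ICEdit actually uses are assumptions not taken from the source.

```python
import math


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: list[float]) -> list[float]:
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class MoELoRALinear:
    """Frozen linear layer W plus a routed mixture of LoRA experts.

    y = W x + (alpha / r) * sum_{i in top-k} g_i * B_i (A_i x)
    Only the expert matrices (A_i, B_i) and the router would be trained.
    """

    def __init__(self, base, experts, router, top_k=1, alpha=1.0):
        self.base = base        # d_out x d_in, frozen pretrained weight
        self.experts = experts  # list of (A, B): A is r x d_in, B is d_out x r
        self.router = router    # n_experts x d_in, one logit per expert
        self.top_k = top_k
        self.rank = len(experts[0][0])  # r = number of rows in A
        self.scale = alpha / self.rank

    def __call__(self, x):
        y = matvec(self.base, x)
        gates = softmax(matvec(self.router, x))
        # Keep only the top-k experts and renormalize their gate weights.
        chosen = sorted(range(len(gates)), key=lambda i: gates[i], reverse=True)[: self.top_k]
        norm = sum(gates[i] for i in chosen)
        for i in chosen:
            a, b = self.experts[i]
            delta = matvec(b, matvec(a, x))  # low-rank update B_i (A_i x)
            weight = self.scale * gates[i] / norm
            y = [yj + weight * dj for yj, dj in zip(y, delta)]
        return y


layer = MoELoRALinear(
    base=[[1.0, 0.0], [0.0, 1.0]],
    experts=[([[1.0, 0.0]], [[1.0], [0.0]]), ([[0.0, 1.0]], [[0.0], [1.0]])],
    router=[[5.0, 0.0], [0.0, 5.0]],
    top_k=1,
)
print(layer([2.0, 1.0]))  # [4.0, 1.0]: router picks expert 0
print(layer([1.0, 3.0]))  # [1.0, 6.0]: router picks expert 1
```

The parameter savings come from the shapes: each expert adds r × (d_in + d_out) trainable values instead of d_in × d_out, and routing lets different experts specialize in different kinds of edits.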

Early-Filter Inference-Time Scaling

Uses a vision-language model to screen candidate initial noise seeds and keep the most promising one, improving final edit quality and robustness.
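The early-filter idea can be sketched as a best-of-N search over seeds, assuming (as the name suggests) that candidates are judged after only a few denoising steps: each seed gets a cheap preview, a scorer (a vision-language model in ICEdit's case) rates it against the instruction, and only the winner receives the full sampling budget. `preview_fn`, `score_fn`, and the step counts are hypothetical placeholders, not the project's implementation.

```python
from typing import Any, Callable, Sequence, Tuple

Image = Any  # placeholder for whatever image type the pipeline returns


def early_filter_seed(
    seeds: Sequence[int],
    preview_fn: Callable[[int, int], Image],  # (seed, num_steps) -> rough image
    score_fn: Callable[[Image], float],       # higher = better match to the instruction
    preview_steps: int = 4,
) -> Tuple[int, float]:
    """Return the seed whose short-run preview scores best, plus that score."""
    if not seeds:
        raise ValueError("need at least one candidate seed")
    best_seed, best_score = seeds[0], float("-inf")
    for seed in seeds:
        preview = preview_fn(seed, preview_steps)  # cheap: only a few steps
        score = score_fn(preview)
        if score > best_score:
            best_seed, best_score = seed, score
    return best_seed, best_score


def edit_with_early_filter(seeds, preview_fn, score_fn, preview_steps=4, full_steps=28):
    """Pick a seed from cheap previews, then run full sampling for that seed only."""
    seed, _ = early_filter_seed(seeds, preview_fn, score_fn, preview_steps)
    return preview_fn(seed, full_steps)


# Toy stand-ins: the "image" is a string and the scorer prefers seed 7.
calls = []


def fake_preview(seed, steps):
    calls.append((seed, steps))
    return f"img-{seed}-{steps}"


def fake_score(img):
    return 1.0 if img.startswith("img-7-") else 0.0


result = edit_with_early_filter([3, 7, 11], fake_preview, fake_score)
print(result)  # img-7-28
```

With N candidate seeds, this loop runs about N × preview_steps + full_steps denoising steps, versus N × full_steps for naively sampling every seed to completion and picking the best afterwards.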

Low-Resource Deployment

Workflows and checkpoints optimized to run on modest hardware (examples indicate workable 4 GB VRAM setups via ComfyUI).

ComfyUI & Gradio Integration

Official ComfyUI nodes/workflows and a Hugging Face Gradio Space support interactive demos and node-based pipeline construction.
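ComfyUI workflows can also be driven from a script. ComfyUI's built-in server accepts a POST to `/prompt` (port 8188 by default) whose JSON body carries a workflow exported in API format. The sketch below builds such a request with the standard library; the node ID `"6"` and the `CLIPTextEncode` fragment stand in for whatever the actual ICEdit workflow contains and are hypothetical, as are the helper names.

```python
import json
import urllib.request
from typing import Optional


def build_queue_request(workflow: dict, host: str = "127.0.0.1", port: int = 8188,
                        client_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST /prompt request that queues an API-format workflow."""
    payload = {"prompt": workflow}
    if client_id is not None:
        payload["client_id"] = client_id
    return urllib.request.Request(
        f"http://{host}:{port}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def set_instruction(workflow: dict, node_id: str, text: str) -> dict:
    """Return a copy of the workflow with one node's prompt text replaced."""
    updated = json.loads(json.dumps(workflow))  # deep copy for JSON-style data
    updated[node_id]["inputs"]["text"] = text
    return updated


# Hypothetical one-node fragment of an API-format workflow.
workflow = {"6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["11", 0]}}}
req = build_queue_request(set_instruction(workflow, "6", "make the sky purple"))
print(req.full_url, req.get_method())  # http://127.0.0.1:8188/prompt POST
# urllib.request.urlopen(req) would submit it to a running ComfyUI server.
```

Copying the workflow before editing keeps the exported template reusable, so the same file can be queued repeatedly with different instructions.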

Research-Grade Open-Source Ecosystem

A full GitHub repository with example workflows, Colab notebooks, reproducible instructions, and community contributions, plus an accompanying arXiv paper (arXiv:2504.20690).

Who Can Use This Tool?

- Researchers: Study and extend instruction-based image editing algorithms and models.

- Developers: Integrate text-driven editing into apps and build ComfyUI/Gradio pipelines.

- Designers/Creators: Quickly prototype image edits and creative iterations using natural-language instructions.

- Hobbyists/Enthusiasts: Run demos locally or via the Hugging Face Space to experiment with AI image editing.

Pricing Plans

Free and open-source; runs locally with fast processing and full customization.

- ✓ Open-source, free to use

- ✓ Image editing driven by text instructions

- ✓ Runs locally and is customizable

- ✓ Typical processing time of about 9 seconds per image

- ✓ Smaller training-data and parameter footprint than prior methods

Pros & Cons

✓ Pros

- ✓ Open-source and reproducible, with a full repository, demos, and workflows

- ✓ Fast inference (typically ~9 s per image) and low VRAM requirements (deployable on ~4 GB with ComfyUI setups)

- ✓ Instruction-driven editing via natural-language prompts, with strong identity (ID) preservation

- ✓ Efficient training and tuning (LoRA-MoE hybrid) using far less data and far fewer parameters than prior state-of-the-art methods

- ✓ Multiple ways to run: Gradio/Hugging Face Space, local Conda/ComfyUI workflows, and example Colab notebooks

✗ Cons

- ✗ Not perfect: known failure cases and limited precision on complex edits

- ✗ Some input restrictions apply (e.g., a recommended 512 px input width to reduce memory issues)

- ✗ Certain MoE checkpoints or weights may carry distribution or permission caveats

- ✗ Requires some technical comfort for local installation and model handling
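The recommended 512 px input width noted in the cons above can be applied before inference with a small helper that fixes the width and scales the height to keep the aspect ratio. Rounding the height to a multiple of 16 is an assumption (a common requirement for latent diffusion transformers), and `fit_to_width` is a hypothetical name.

```python
def fit_to_width(width: int, height: int, target_width: int = 512,
                 multiple: int = 16) -> tuple[int, int]:
    """Return (new_width, new_height) with a fixed width and aspect-preserving height.

    The height is rounded to the nearest multiple of `multiple` (never below one multiple).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if target_width % multiple:
        raise ValueError("target_width should itself be a multiple of `multiple`")
    scaled = height * target_width / width  # height after proportional scaling
    new_height = max(multiple, round(scaled / multiple) * multiple)
    return target_width, new_height


print(fit_to_width(1024, 768))   # (512, 384)
print(fit_to_width(1920, 1080))  # (512, 288)
print(fit_to_width(600, 1000))   # (512, 848)
```

The returned size can be passed to an image library's resize call (for example Pillow's `Image.resize`); rounding may distort the aspect ratio by a few pixels at most.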