Overview

Knostic (knostic.ai) is an enterprise-focused GenAI Knowledge Security Platform founded in 2023. The platform aims to prevent AI/LLM oversharing and knowledge-layer leaks by combining pre-deployment simulation, ongoing monitoring, and real-time controls.

Core capabilities described on the site include a Copilot Readiness Assessment (CRA) for discovery and remediation, a simulation engine that runs persona-based prompt patterns and yields a readiness score (0–100) with prioritized remediation, need-to-know IAM for LLMs, a real-time AI firewall and DLP gateway that inspects prompts and responses, continuous surveillance for policy drift with audit trails and dashboards, and adversarial testing/red-team tooling including a free RAG Security Training Simulator. The company calls out integrations and targets such as Microsoft 365 Copilot, Glean, Salesforce Einstein AI, Gemini, and enterprise LLM/RAG setups.

Resources on the site include the free RAG Security Training Simulator, webinars, white papers, blog posts, a video library, and security toolkits. Legal documents available on the site include a Data Processing Agreement (DPA) (Customer = Controller, Knostic = Processor), a privacy policy, and Terms of Service; the DPA and site mention subprocessors, cross-border transfers, retention/deletion options, and notification/cooperation obligations.

Public pricing is not published (the /pricing URL returned a 404), and no self-serve pricing or free trial was found; the site recommends contacting sales via the contact form or [email protected] for pricing, pilot costs, or trial options.

Public sources and the site indicate an announced $11M funding raise (March 2025), leadership including founder/CEO Gadi Evron and co-founder/CTO Sounil Yu, and a LinkedIn listing of 11–50 employees with a U.S. address in Herndon, VA.

Key Features

Copilot Readiness Assessment (CRA)

A discovery-first program that finds likely oversharing exposures, suggests labels automatically, and profiles roles and departments, with prioritized remediation.

Simulation Engine & Readiness Scoring

Runs many prompt patterns per persona to reveal inference/aggregation exposures and yields a readiness score (0–100) with prioritized remediation actions.

Need-to-know IAM for LLMs

Enforces granular, role-based access controls for AI assistants and copilots to restrict knowledge access by role/persona.
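To make the need-to-know idea concrete, here is a minimal sketch of role-based filtering applied to documents before they reach an assistant. It is not Knostic's implementation: the `Document` type, the `allowed_roles` field, and the `filter_by_need_to_know` function are hypothetical names chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A knowledge item tagged with the roles allowed to see it (hypothetical model)."""
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


def filter_by_need_to_know(docs: list[Document], role: str) -> list[Document]:
    """Return only the documents whose allowed roles include the given role."""
    return [d for d in docs if role in d.allowed_roles]


# Illustrative data only.
docs = [
    Document("handbook", "PTO policy ...", frozenset({"support", "finance", "hr"})),
    Document("payroll", "Salary bands ...", frozenset({"finance", "hr"})),
]

# A support persona should see the handbook but not payroll data.
visible_to_support = filter_by_need_to_know(docs, "support")
print([d.doc_id for d in visible_to_support])
```

Filtering before retrieval, rather than after the model has answered, keeps restricted text out of the model's context entirely; whether a given product works this way is an assumption here.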

Real-time AI Firewall & DLP Gateway

Inspects prompts and responses in real time to block sensitive data from leaving applications.
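As a rough illustration of prompt/response inspection, the sketch below scans text against a small set of regular expressions and blocks it when a match is found. This is a simplified assumption about how such a gateway could behave, not a description of Knostic's product; the pattern set and the `inspect` and `gate` functions are hypothetical.

```python
import re

# Hypothetical detection patterns; a real gateway would use far richer detection.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def inspect(text: str) -> list[str]:
    """Return the sorted names of all patterns that match the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


def gate(text: str) -> str:
    """Pass clean text through unchanged; replace flagged text with a block notice."""
    findings = inspect(text)
    if findings:
        return "[blocked: " + ", ".join(findings) + "]"
    return text


# The same gate can be applied to an outgoing prompt and to the model's response.
print(gate("What is our refund policy?"))
print(gate("Customer SSN is 123-45-6789"))
```

Blocking the whole message is the simplest policy; redacting only the matched span is a common alternative when partial answers are still useful.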

Continuous Surveillance & Governance

Monitors for policy drift and provides audit trails, board-ready dashboards, and governance controls.

Adversarial Testing / Red-team Tooling

Simulation and red-team tooling, including a free RAG Security Training Simulator that teaches prompt-injection defenses.

Who Can Use This Tool?

- Enterprises: Prevent AI/LLM oversharing, run readiness assessments, and enforce knowledge-layer security controls across the organization.

- Security & Risk Teams: Adversarial testing, continuous surveillance, insider-risk checks, and governance controls for AI assistants and copilots.

- Customer Support / CS Teams: Enable safe Copilot/assistant usage for CS/support teams and reduce risk of sensitive data exposure.

- Executives / Legal / M&A: Use readiness scoring, audit trails, and due-diligence simulations for executive-access monitoring and M&A assessments.

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- ✓ Focused, enterprise-oriented GenAI knowledge security capabilities (simulation, IAM, DLP, monitoring).

- ✓ Provides a Copilot Readiness Assessment and simulation-based readiness scoring (0–100) with remediation guidance.

- ✓ Offers a free RAG Security Training Simulator and a library of educational resources.

- ✓ Legal artifacts available (DPA, privacy policy, Terms) with subprocessors and retention options documented.

✗ Cons

- ✗ No public pricing or self-serve trial information available; /pricing returned 404.

- ✗ Limited public detail on commercial pricing models and pilot costs; requires contact with sales.

- ✗ Logo URL and certain platform/technical integration details not enumerated on the public site.

Compare with Alternatives

| Feature | Knostic | Enkrypt AI | Holistic AI |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.1/10 | 8.2/10 | 8.3/10 |
| Simulation Fidelity | High-fidelity simulation engine | Threat-focused simulations | Governance-oriented testing |
| Need-to-know IAM | Yes | Partial | Partial |
| Real-time DLP | Yes | Yes | Yes |
| Adversarial Testing | Yes | Yes | Yes |
| Continuous Monitoring | Yes | Yes | Yes |
| Compliance Reporting | Partial | Yes | Yes |
| Integration Depth | Enterprise AI tool integrations | APIs and deployment integrations | Broad connectors and API integrations |

Related Articles (6)

Researchers expose ShadowMQ deserialization flaws in major AI frameworks, enabling remote code execution and widespread risk.

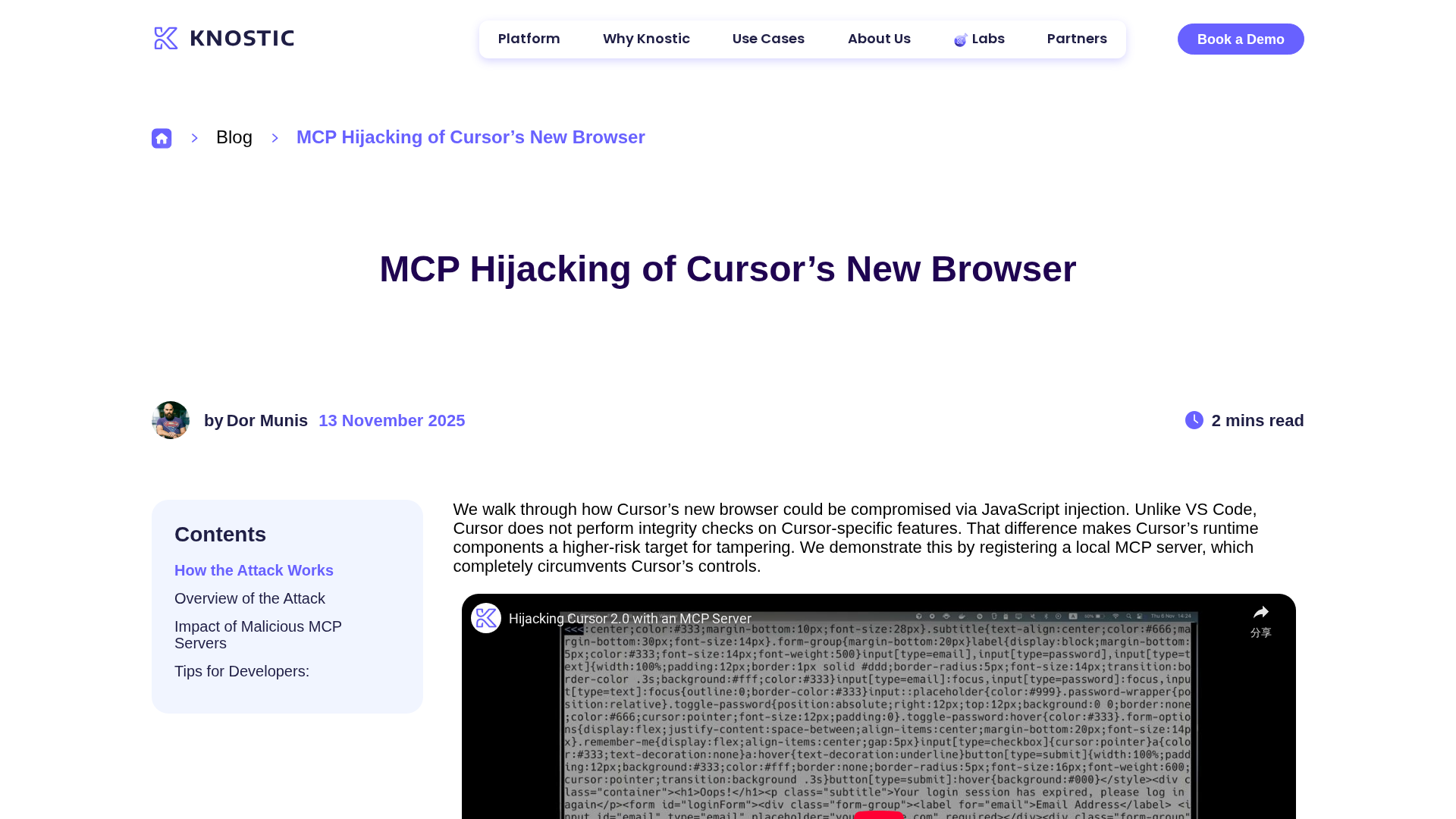

Shows how malicious MCP servers can hijack Cursor's embedded browser to steal credentials and threaten workstations, with defensive guidance.

A data-driven look at 20 AI governance stats for 2025, revealing maturity gaps, leadership gaps, and a path to safer GenAI deployments.

Security leaders must instrument the IDE, enforce the Rule of Two, and shift from SBOMs to outcomes in a world of agentic AI.

Researchers show rogue MCP servers can inject JavaScript into Cursor’s built-in browser to harvest credentials and potentially take over the workstation.