Overview

LangChain is a developer-first framework and commercial platform that helps teams build, test, and deploy LLM-powered agents and applications. The open-source SDKs provide a standard model interface, hundreds of provider and integration connectors, pre-built agent abstractions, and the ability to compose chains and workflows quickly. For more advanced orchestration and deterministic workflows, LangGraph exposes lower-level primitives while remaining interoperable with LangChain agents. LangChain’s commercial product suite centers on LangSmith, which adds tracing, evals, monitoring, and scalable deployment capabilities (dev vs production deployments, retention tiers for traces, and usage billing). The platform emphasizes observability, durable execution, and a framework-agnostic approach so teams can keep preferred models and infrastructure while gaining debugging and governance tooling.
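Composing a chain means piping small steps together so that each step's output becomes the next step's input. LangChain's own expression language does this with the `|` operator (for example `prompt | model | parser`). The sketch below does not use LangChain. It defines a hypothetical `Step` class and uses a stubbed `fake_model` in place of a real LLM call, only to show how piping yields one callable chain.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Step:
    """Hypothetical stand-in for a composable chain component (not LangChain's Runnable)."""
    fn: Callable[[Any], Any]

    def __or__(self, other: Step) -> Step:
        # Piping returns a new Step that feeds this step's output into the next one.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Prompt step: fills the question into a fixed instruction.
prompt = Step(lambda question: f"Answer briefly: {question}")

# Stubbed "model" step: a real chain would call an LLM provider here.
fake_model = Step(lambda text: f"[model reply to '{text}']")

# Output parser step: strips the brackets the fake model adds.
parser = Step(lambda reply: reply.strip("[]"))

chain = prompt | fake_model | parser
print(chain.invoke("What is LangChain?"))
```

Each step stays independently testable. The composed `chain` exposes the same `invoke` method as its parts, so chains can be nested inside larger chains.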

Key Features

Agent Framework and Prebuilt Components

Provides high-level agent abstractions for quickly assembling LLM-driven agents that keep memory, call tools, and orchestrate multi-step reasoning.
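At their core, agent abstractions like these wrap a loop. The model either requests a tool call or returns a final answer. The runtime executes any requested tool and feeds the result back to the model. The sketch below illustrates that loop with a scripted, hypothetical `scripted_model` in place of a real LLM and a hypothetical `add` tool. It is not LangChain's agent API, and it omits memory.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelTurn:
    """One model response: either a tool request or a final answer."""
    tool_name: Optional[str] = None
    tool_args: dict = field(default_factory=dict)
    final_answer: Optional[str] = None


def add(a: int, b: int) -> int:
    """Hypothetical tool the agent may call."""
    return a + b


TOOLS: dict[str, Callable[..., object]] = {"add": add}


def scripted_model(history: list[str]) -> ModelTurn:
    """Stub model: requests the add tool once, then answers using its result."""
    tool_results = [h for h in history if h.startswith("tool:")]
    if not tool_results:
        return ModelTurn(tool_name="add", tool_args={"a": 2, "b": 3})
    return ModelTurn(final_answer=f"The sum is {tool_results[-1].split(':', 1)[1]}")


def run_agent(question: str, max_steps: int = 5) -> str:
    history = [f"user:{question}"]
    for _ in range(max_steps):
        turn = scripted_model(history)
        if turn.final_answer is not None:
            return turn.final_answer
        # Execute the requested tool and record its result for the next model turn.
        result = TOOLS[turn.tool_name](**turn.tool_args)
        history.append(f"tool:{result}")
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 2 + 3?"))
```

The `max_steps` cap guards against a model that keeps requesting tools without ever producing a final answer.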

LangGraph (Orchestration Runtime)

Low-level primitives for building durable, deterministic workflows and custom agent orchestration for complex production scenarios.
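LangGraph models a workflow as a graph of nodes that read and update shared state. Durable execution persists that state so a run can resume after a failure. The toy below sketches the idea in plain Python. A hypothetical `run_graph` executes nodes in a fixed order, saves a checkpoint after each node, and resumes from the last checkpoint. It is not LangGraph's `StateGraph` API, and it keeps checkpoints in an in-memory dict rather than a real persistence backend.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], State]
CALLS: list[str] = []  # records which nodes actually executed


def fetch(state: State) -> State:
    # Hypothetical node: pretend to fetch a document about the topic.
    CALLS.append("fetch")
    return {"doc": f"text about {state['topic']}"}


def summarize(state: State) -> State:
    # Hypothetical node: pretend to summarize the fetched document.
    CALLS.append("summarize")
    return {"summary": state["doc"].upper()}


NODES: list[tuple[str, Node]] = [("fetch", fetch), ("summarize", summarize)]


def run_graph(state: State, checkpoints: dict, fail_at: Optional[str] = None) -> State:
    """Run nodes in order, checkpointing after each one; resume from the last checkpoint."""
    start = checkpoints.get("next_index", 0)
    state = dict(checkpoints.get("state", state))
    for index in range(start, len(NODES)):
        name, node = NODES[index]
        if name == fail_at:
            raise RuntimeError(f"simulated crash in {name}")
        state.update(node(state))  # merge the node's partial update into shared state
        checkpoints["state"] = dict(state)
        checkpoints["next_index"] = index + 1
    return state


saved: dict = {}
try:
    run_graph({"topic": "agents"}, saved, fail_at="summarize")
except RuntimeError:
    pass  # the fetch result survives in `saved`
final = run_graph({"topic": "agents"}, saved)
print(final["summary"], CALLS)
```

On the second call, `fetch` is not re-executed because its output was restored from the checkpoint. Only the node that crashed runs again.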

LangSmith Observability & Evals

Tracing, state capture, streaming, annotation queues, online/offline evaluators, and monitoring to debug and continuously evaluate agent behavior.
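Tracing records each step of a run, including its inputs, outputs, timing, and nesting, so you can inspect what an agent actually did. LangSmith's Python SDK exposes this through a `traceable` decorator. The stdlib-only sketch below is a hypothetical `trace` decorator that mimics the idea. Instead of sending spans to LangSmith, it appends nested span records to an in-memory list.

```python
import functools
import time
from typing import Any, Callable

SPANS: list[dict[str, Any]] = []  # in-memory stand-in for a tracing backend
_open_spans: list[int] = []  # indices of currently open spans, used for parent links


def trace(fn: Callable) -> Callable:
    """Hypothetical decorator: records name, inputs, output or error, parent, and duration."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        span = {
            "name": fn.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "parent": _open_spans[-1] if _open_spans else None,
        }
        SPANS.append(span)
        _open_spans.append(len(SPANS) - 1)
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        except Exception as exc:
            span["error"] = repr(exc)
            raise
        finally:
            span["duration_s"] = time.perf_counter() - start
            _open_spans.pop()
    return wrapper


@trace
def retrieve(query: str) -> list[str]:
    return [f"doc for {query}"]


@trace
def answer(query: str) -> str:
    docs = retrieve(query)  # nested call becomes a child span
    return f"Answer based on {len(docs)} doc(s)"


answer("pricing")
for span in SPANS:
    print(span["name"], "parent:", span["parent"], "output:", span["output"])
```

The parent index is what turns a flat log into a tree. A trace viewer uses that tree to show which retrieval or tool call fed each answer.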

Standard Model Interface & Multi-provider Support

Unified model interface to swap providers and avoid lock-in (OpenAI, Anthropic, Google Vertex AI, Ollama, and more).
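With a unified model interface, application code depends on a single method signature while the provider behind it can change. LangChain's chat models share methods such as `invoke`. The sketch below imitates that pattern with a hypothetical `ChatModel` protocol and two stub providers, `EchoProvider` and `UpperProvider`, which stand in for real SDK clients. Swapping providers then changes one lookup key, not the calling code.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface that every provider adapter implements."""
    def invoke(self, prompt: str) -> str: ...


class EchoProvider:
    """Stub standing in for one vendor's SDK."""
    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperProvider:
    """Stub standing in for a different vendor's SDK."""
    def invoke(self, prompt: str) -> str:
        return prompt.upper()


PROVIDERS: dict[str, type] = {"echo": EchoProvider, "upper": UpperProvider}


def get_model(name: str) -> ChatModel:
    # Look up the adapter by name; real code would also pass API keys and model IDs.
    return PROVIDERS[name]()


def summarize(model: ChatModel, text: str) -> str:
    # Application code depends only on the ChatModel interface.
    return model.invoke(f"summarize: {text}")


for provider in ("echo", "upper"):
    print(provider, "->", summarize(get_model(provider), "hello"))
```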

Integrations Ecosystem

Hundreds of provider integrations (LLMs, vector databases, search, and tools) to build end-to-end applications quickly.

Deployment & Runtime Controls

Deployment options with development and production tiers, uptime-based billing, auto-scaling, and security features for long-running agent workloads.

Who Can Use This Tool?

- Developers: Rapidly prototype and build LLM-powered agents, chains, and workflows using SDKs and integrations.

- Teams: Collaborate, observe, and deploy agent workloads using LangSmith, shared workspaces, and seats.

- Startups: Get early-stage credits and startup pricing to ship agent-driven products and validate use cases.

- Enterprises: Run secure, audited deployments with custom hosting, SSO/RBAC, SLAs, and procurement terms.

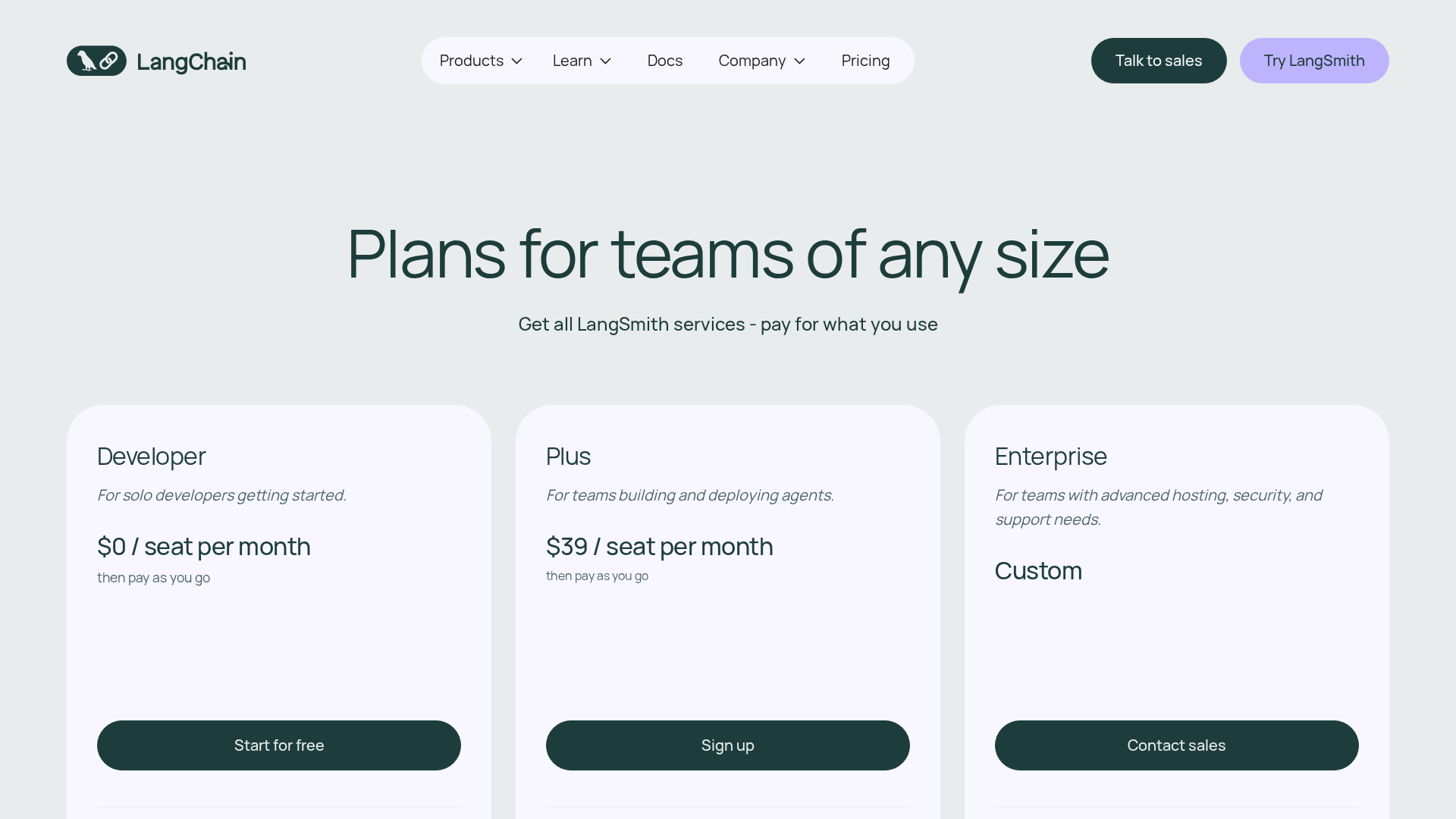

Pricing Plans

Developer

For solo developers; includes tracing, evals, Prompt Hub, and community support.

- ✓1 free seat (maximum of 1 seat)

- ✓Up to 5k base traces per month included, then pay-as-you-go

- ✓Tracing to debug agent execution

- ✓Online and offline evals

- ✓Prompt Hub, Playground, and Canvas for prompt iteration

- ✓Annotation queues for human feedback

- ✓Monitoring and alerting

- ✓Community support

Plus

For teams; includes up to 10k base traces per month, 1 free dev deployment, up to 10 seats, and email support.

- ✓Everything in the Developer plan

- ✓Up to 10k base traces per month included, then pay-as-you-go

- ✓1 dev-sized agent deployment included (free)

- ✓Email support

- ✓Up to 10 seats

- ✓Up to 3 workspaces

- ✓Additional deployments and usage billed separately (agent runs, uptime)

- ✓Usage pricing: $0.005 per agent run; deployment uptime billed at $0.0007 per minute (Development) or $0.0036 per minute (Production)
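The estimator below shows how the per-run and per-minute uptime charges listed in this plan combine. It is a rough sketch with several simplifying assumptions. It assumes a 30-day month with the deployment running continuously, treats the listed rates as exact, and ignores seat fees, trace overages, and the included free dev deployment. `monthly_cost` is a hypothetical helper, not part of any LangSmith tool.

```python
from decimal import Decimal

# Rates as listed in the pricing section (USD).
PER_AGENT_RUN = Decimal("0.005")
UPTIME_PER_MINUTE = {"development": Decimal("0.0007"), "production": Decimal("0.0036")}

MINUTES_PER_MONTH = 60 * 24 * 30  # assumption: 30-day month, always-on deployment


def monthly_cost(agent_runs: int, deployments: dict[str, int]) -> Decimal:
    """Estimate agent-run plus uptime charges; ignores seats and trace overages."""
    run_cost = PER_AGENT_RUN * agent_runs
    uptime_cost = sum(
        (UPTIME_PER_MINUTE[tier] * MINUTES_PER_MONTH * count
         for tier, count in deployments.items()),
        Decimal("0"),
    )
    return run_cost + uptime_cost


# Example: 20,000 agent runs and one production deployment running all month.
print(monthly_cost(20_000, {"production": 1}))
```

Under these assumptions, 20,000 runs plus one always-on production deployment come to about $255.52 for the month. That total is $100.00 in runs and $155.52 in uptime. A development-sized deployment running the same 43,200 minutes would cost $30.24 in uptime, so the deployment tier dominates the bill at moderate run volumes.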

Enterprise

For teams needing advanced hosting, security, and support; pricing is custom and arranged through sales.

- ✓Everything in the Plus plan

- ✓Alternative hosting options: Cloud, Hybrid, or Self-Hosted (data can remain in your VPC)

- ✓Custom SSO and Role-Based Access Control (RBAC)

- ✓Dedicated deployed engineering team and support SLA

- ✓Team trainings and architectural guidance

- ✓Custom seats, workspaces, and procurement terms

- ✓Annual invoicing and custom contract/infosec review options

Pros & Cons

✓ Pros

- ✓Very large ecosystem and integrations with many model providers and vector DBs.

- ✓Fast prototyping: pre-built agents let you assemble simple agents in under ten lines of code.

- ✓Powerful observability and evaluation tooling via LangSmith (traces, evals, monitoring, alerts).

- ✓Strong open-source community and adoption (very high PyPI downloads and GitHub stars).

- ✓Flexible deployment options (cloud, hybrid, self-hosted), and enterprise controls.

✗ Cons

- ✗Operational complexity and learning curve for large-scale/advanced workflows.

- ✗Users report dependency-management friction and occasional integration fragility.

- ✗Costs can grow with traces, long retention, and heavy production agent-run usage.

- ✗Enterprise pricing and contractual terms are custom; no fixed enterprise tiers publicly listed.
