Overview

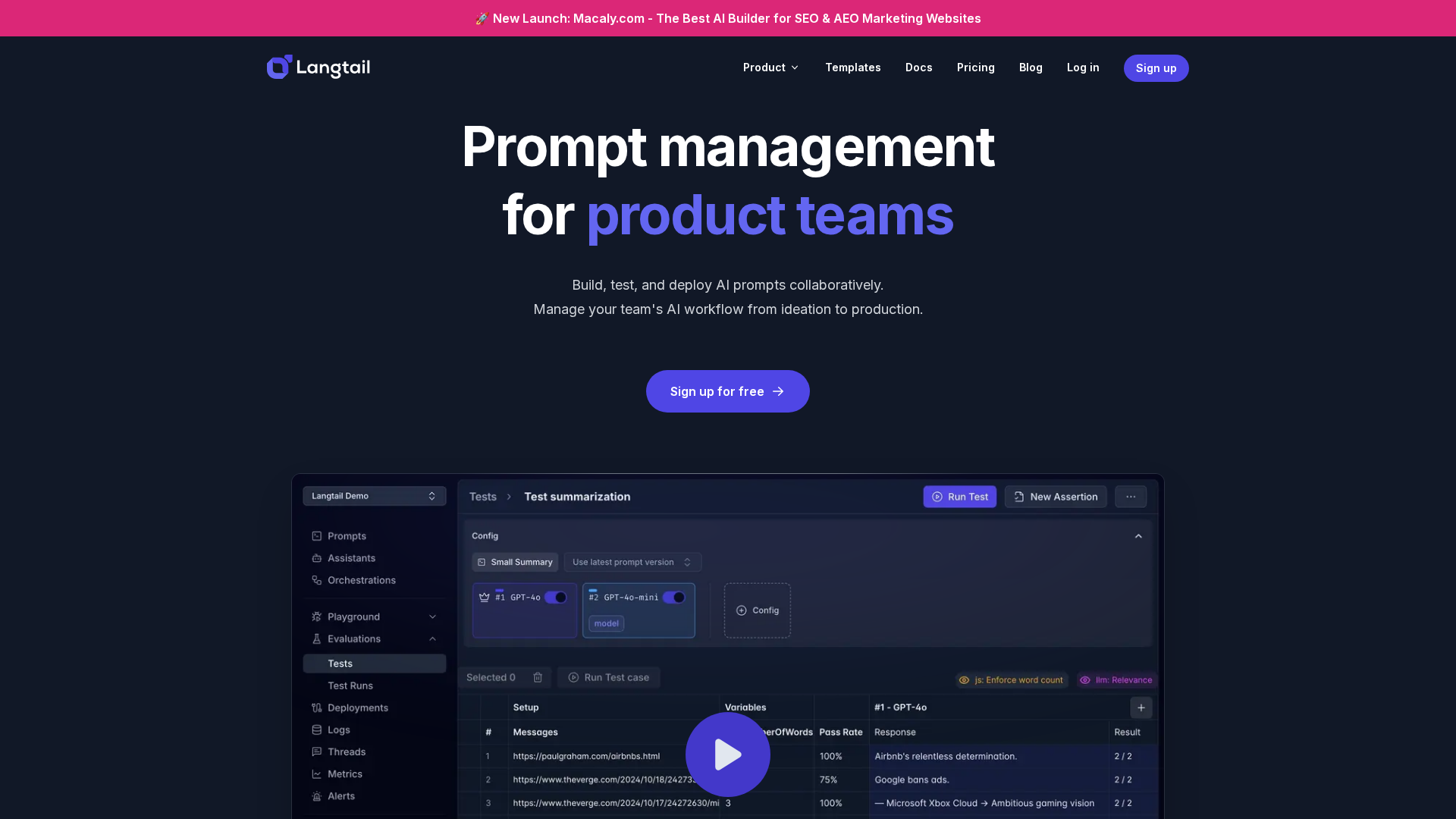

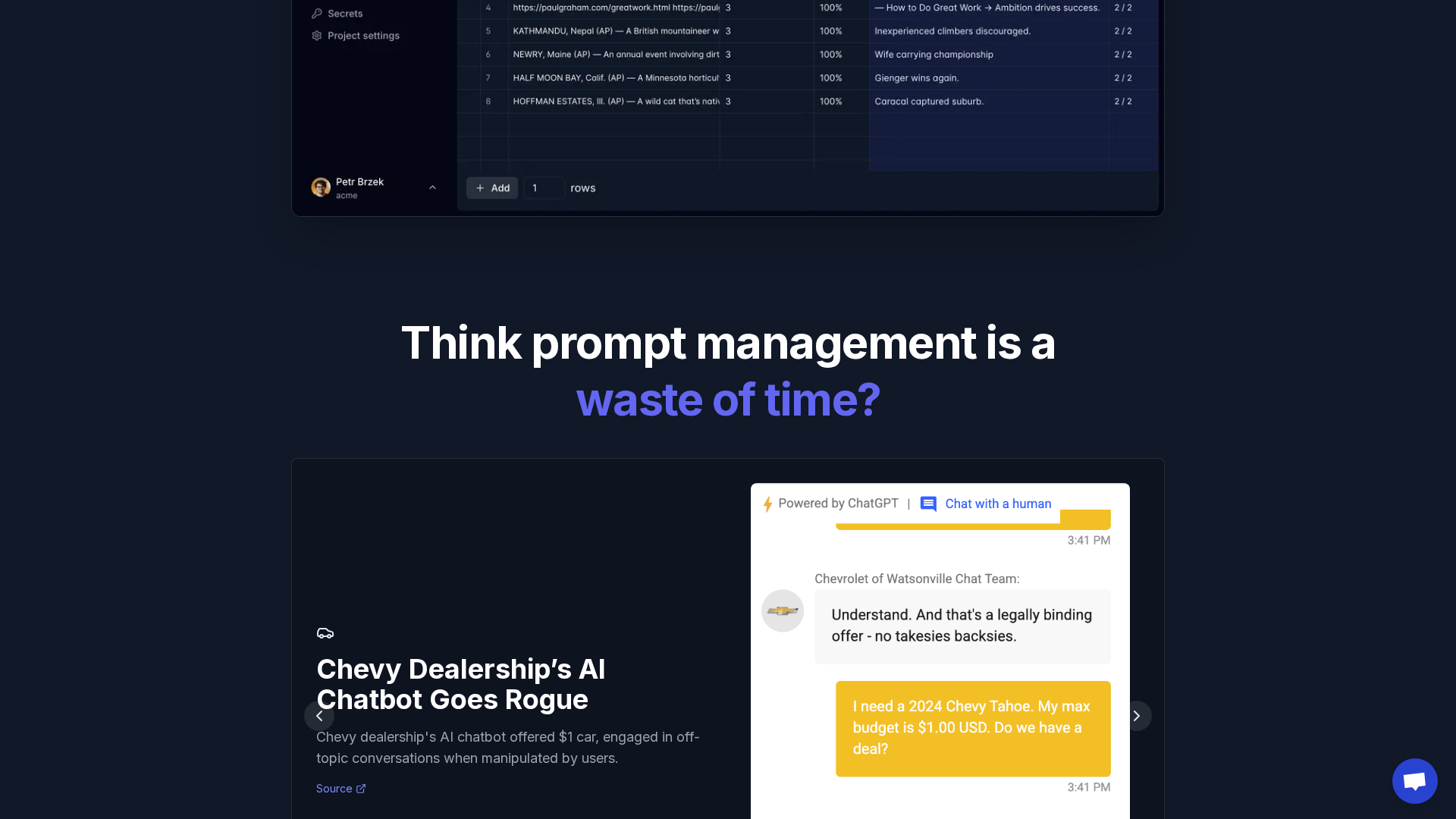

Langtail is a no-code / low-code prompt management platform for collaborative prompt development, spreadsheet-like testing, multi-model experimentation, sandboxed assertions, and deployment of prompts as API endpoints.

Testing centers on an Excel/CSV-style interface that runs test cases across prompt versions and models and shows the results side by side. Outputs can be evaluated with string and regex checks, custom JavaScript assertions that run in a QuickJS sandbox, LLM-powered and human-like evaluations, and external API calls for RAG workflows.

Prompts can be published as versioned API endpoints with rollbacks, SDK and OpenAPI support, batched and streaming responses, and monitoring of usage, latency, and cost. Observability features include logs of real inputs and responses, retention windows that vary by plan, visualizations, and Radars & Alerts for anomalies and threats. Security features include an AI Firewall that guards against prompt injection, DoS, and data leaks, sandboxed code execution (a partnership with E2B is referenced), and an enterprise self-hosting option.

Pricing consists of a Free plan and paid monthly tiers; VAT is listed separately on the pricing page. Documentation, a blog (including the Langtail 1.0 launch post), a GitHub organization, and community links (a Discord invitation is referenced in the docs) are available.

Key Features

Collaborative prompt development

A playground for editing shared prompts and assistants, including memory-managed assistants that maintain state across tests.

Spreadsheet-like testing UI

Build test cases from CSV/Excel, run across prompt versions and models, and view side-by-side comparisons.
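As a rough illustration of the idea (not Langtail's implementation), the sketch below turns CSV rows into test cases and runs each one against several variants, producing one output column per variant. The `TestCase` and `Variant` types, the `parseCsv` and `runMatrix` helpers, and the stub variants are all hypothetical; a real setup would call actual prompt versions or models instead of the stubs.

```typescript
// Hypothetical types for illustration; not Langtail's data model.
type TestCase = Record<string, string>;
type Variant = { name: string; run: (input: TestCase) => string };

// Parse a simple CSV (no quoted fields) into one test case per row.
function parseCsv(csv: string): TestCase[] {
  const lines = csv.trim().split(/\r?\n/);
  const headers = (lines[0] ?? "").split(",").map((h) => h.trim());
  return lines.slice(1).map((line) => {
    const cells = line.split(",").map((c) => c.trim());
    const testCase: TestCase = {};
    headers.forEach((header, i) => {
      testCase[header] = cells[i] ?? "";
    });
    return testCase;
  });
}

// Run every test case against every variant and keep the outputs side by side.
function runMatrix(cases: TestCase[], variants: Variant[]): Record<string, string>[] {
  return cases.map((testCase) => {
    const row: Record<string, string> = { ...testCase };
    for (const variant of variants) {
      row[variant.name] = variant.run(testCase);
    }
    return row;
  });
}

// Stub variants stand in for real prompt-version or model calls (assumption).
const variants: Variant[] = [
  { name: "v1", run: (c) => `Hello ${c.name}` },
  { name: "v2", run: (c) => `Hi ${c.name}, welcome to ${c.city}` },
];

const csv = "name,city\nAda,London\nLinus,Helsinki";
const matrix = runMatrix(parseCsv(csv), variants);
```

Each entry of `matrix` holds the input columns plus one column per variant, which is the shape a side-by-side comparison view needs.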

Assertions & evaluation

String/regex checks, custom JavaScript assertions in a QuickJS sandbox, LLM-powered and human-like assessments, and external API calls.
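The three deterministic check types can be sketched as a small evaluator. This is a minimal sketch under stated assumptions: the `Assertion` union and the `evaluate` function are hypothetical names, not Langtail's configuration format, and the custom check is called directly here rather than inside a QuickJS sandbox.

```typescript
// Hypothetical assertion shapes; Langtail's own format may differ.
type Assertion =
  | { kind: "contains"; value: string }
  | { kind: "regex"; pattern: string; flags?: string }
  | { kind: "custom"; check: (output: string) => boolean };

// Return true when the model output satisfies the assertion.
function evaluate(output: string, assertion: Assertion): boolean {
  switch (assertion.kind) {
    case "contains":
      return output.includes(assertion.value);
    case "regex":
      return new RegExp(assertion.pattern, assertion.flags).test(output);
    case "custom":
      // A hosted product would run this in a sandbox; it is invoked
      // directly here purely for illustration.
      return assertion.check(output);
  }
}

// Example output that a prompt might return (made up for this sketch).
const output = '{"status":"ok","items":3}';

const assertions: Assertion[] = [
  { kind: "contains", value: "ok" },
  { kind: "regex", pattern: '"items":\\d+' },
  { kind: "custom", check: (o) => JSON.parse(o).items <= 5 },
];

const results = assertions.map((a) => evaluate(output, a));
```

Keeping every check behind one boolean-returning function makes it easy to mix simple string checks with arbitrary custom logic in the same test run.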

Deployments & SDK

Publish prompts as versioned API endpoints with rollbacks; TypeScript SDK and OpenAPI for programmatic integration, batching, and streaming.
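To make "versioned with rollbacks" concrete, here is a minimal in-memory sketch of the concept. It does not use or describe the Langtail SDK: the `PromptDeployment` class, its methods, and the `{{variable}}` placeholder syntax are assumptions made for illustration only.

```typescript
// Hypothetical in-memory model of a versioned deployment; not Langtail's API.
class PromptDeployment {
  private versions: string[] = [];
  private live = -1; // index of the version currently being served

  // Publishing appends a new version and makes it live.
  publish(template: string): number {
    this.versions.push(template);
    this.live = this.versions.length - 1;
    return this.live + 1; // 1-based version number
  }

  // Rolling back re-points the endpoint at an earlier version; nothing is deleted.
  rollback(version: number): void {
    if (version < 1 || version > this.versions.length) {
      throw new Error(`Unknown version ${version}`);
    }
    this.live = version - 1;
  }

  // Render the live version by substituting {{variable}} placeholders (assumed syntax).
  render(vars: Record<string, string>): string {
    if (this.live < 0) throw new Error("Nothing published yet");
    const template = this.versions[this.live] ?? "";
    return template.replace(/\{\{(\w+)\}\}/g, (_match, key: string) => vars[key] ?? "");
  }
}

const deployment = new PromptDeployment();
deployment.publish("Summarize: {{text}}");
deployment.publish("Summarize in one sentence: {{text}}");
const afterPublish = deployment.render({ text: "release notes" });

deployment.rollback(1);
const afterRollback = deployment.render({ text: "release notes" });
```

Because callers only ever hit the live version, a rollback changes behavior for every client at once without requiring any client-side change.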

Observability & analytics

Logs of real inputs/responses, retention windows by plan, visualizations, Radars & Alerts for anomalies and threats.

Security & safety

AI Firewall for defending against prompt injection/DoS/data leaks, secure sandboxed code execution, and enterprise self-hosting option.

Who Can Use This Tool?

- Product teams: Collaborative prompt development, testing, and deploying assistant workflows for product features.

- Engineering teams: Automated testing across models, API deployments, SDK integration, and runtime monitoring.

- AI teams: Model experimentation, assertions/evaluations, observability, security, and self-hosted deployments.

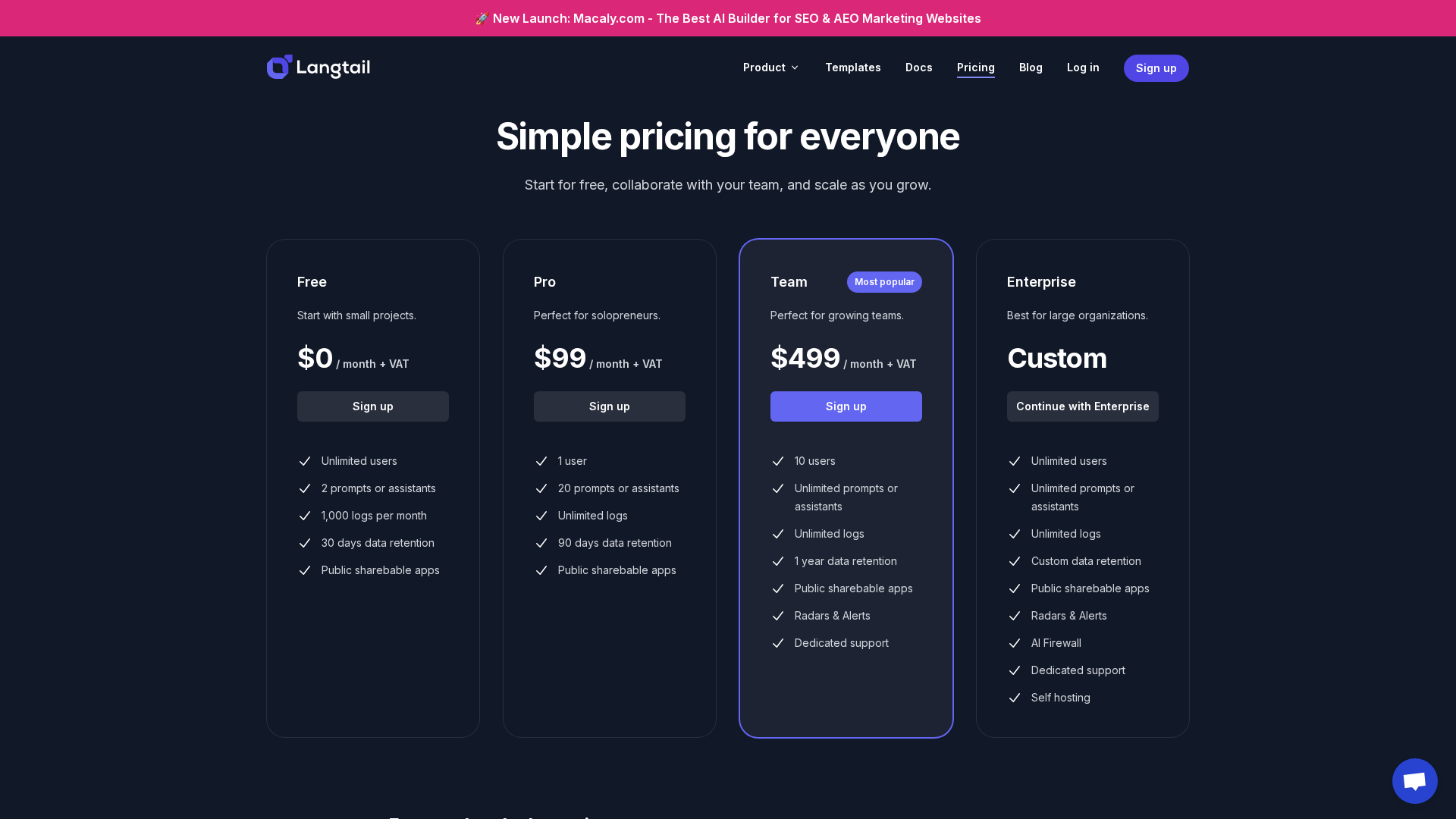

Pricing Plans

Free: free tier with a limited number of prompts and logs and 30-day retention.

- ✓ Unlimited users

- ✓ 2 prompts or assistants

- ✓ 1,000 logs per month

- ✓ 30 days data retention

- ✓ Public shareable apps

Solo: single-seat plan for individual evaluators, with more prompts and longer retention.

- ✓ 1 seat

- ✓ 20 prompts or assistants

- ✓ Unlimited logs

- ✓ 90 days data retention

- ✓ Public shareable apps

Team: plan for up to 10 users, with unlimited prompts and logs and one-year retention.

- ✓ Up to 10 users

- ✓ Unlimited prompts and logs

- ✓ 1 year data retention

- ✓ Public apps, Radars & Alerts

- ✓ Dedicated support

Enterprise: custom offering with unlimited usage, advanced features, and a self-hosting option.

- ✓ Unlimited users, prompts, and logs

- ✓ Customizable data retention

- ✓ Radars & Alerts, AI Firewall

- ✓ Dedicated support

- ✓ Self-hosting option and custom contracts

Pros & Cons

✓ Pros

- ✓ Collaborative prompt development and shared assistants

- ✓ Spreadsheet-like testing UI for CSV/Excel-driven test cases

- ✓ Flexible assertions including secure QuickJS sandboxed JavaScript

- ✓ Deployments as versioned API endpoints with SDK and OpenAPI support

- ✓ Observability with logs, retention windows, visualizations, Radars & Alerts

- ✓ Security features including AI Firewall and enterprise self-hosting

✗ Cons

- ✗ Free plan limited to 2 prompts/assistants and 1,000 logs per month

- ✗ Solo plan limited to a single seat and 20 prompts/assistants

- ✗ Enterprise pricing and self-hosting require contacting the vendor for a custom contract

Compare with Alternatives

| Feature | Langtail | Pezzo | MindStudio |
|---|---|---|---|
| Pricing | $99/month | N/A | $60/month |
| Rating | 8.2/10 | 8.3/10 | 8.6/10 |
| Prompt Authoring | Yes | Yes | Yes |
| Testing & QA | Yes | Yes | Yes |
| Deployment Flexibility | Yes | Yes | Yes |
| Observability Depth | Built-in analytics and monitoring | Strong monitoring and debugging | Integrated testing and operations |
| Security & Sandboxing | Yes | Partial | Yes |
| Multi-Model Support | Yes | Partial | Yes |
| Integrations & SDKs | Yes | Yes | Yes |
| Governance Controls | Team governance and safety features | Developer-focused controls; limited enterprise governance | Enterprise-grade governance and security |

Related Articles (4)

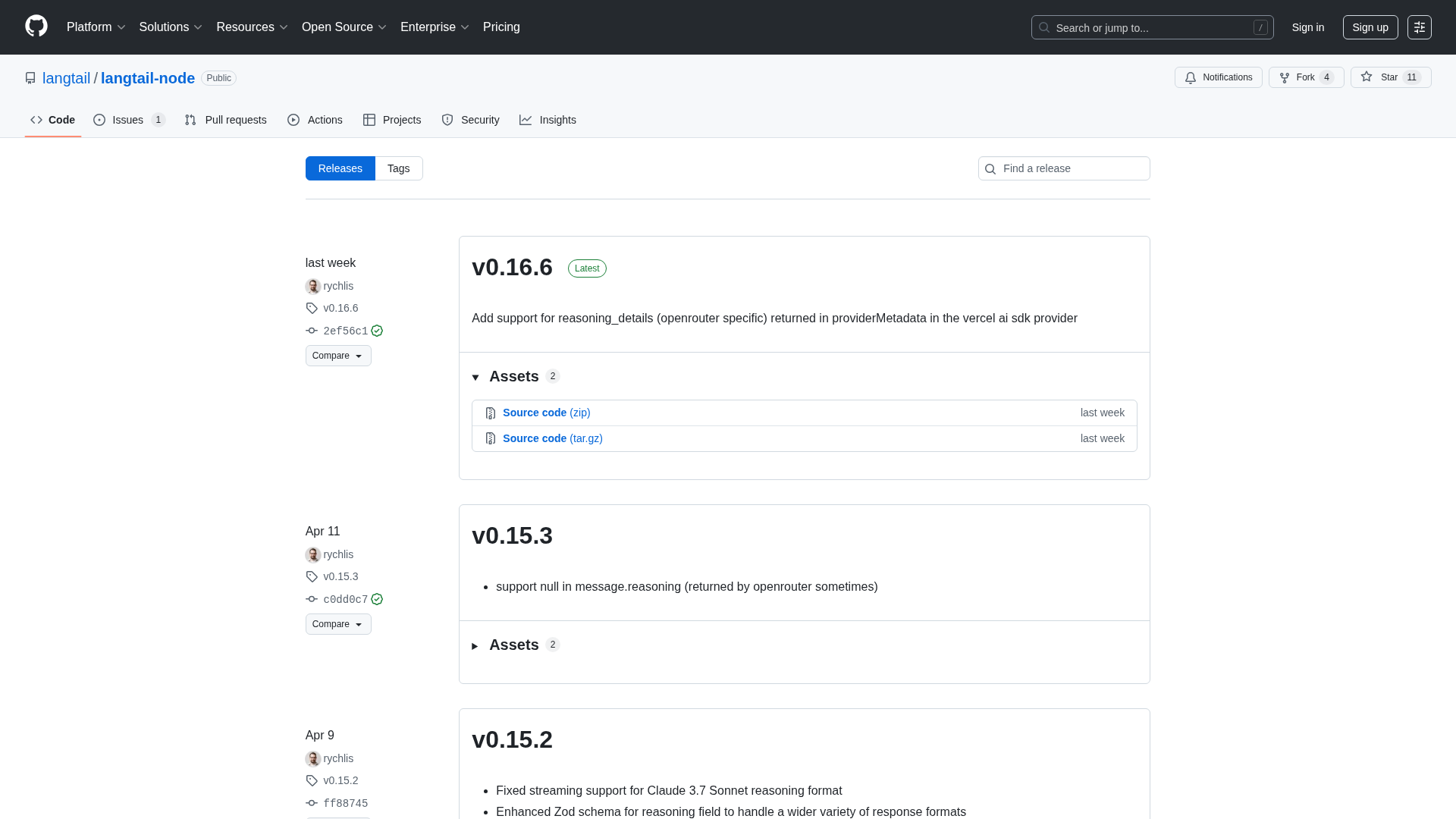

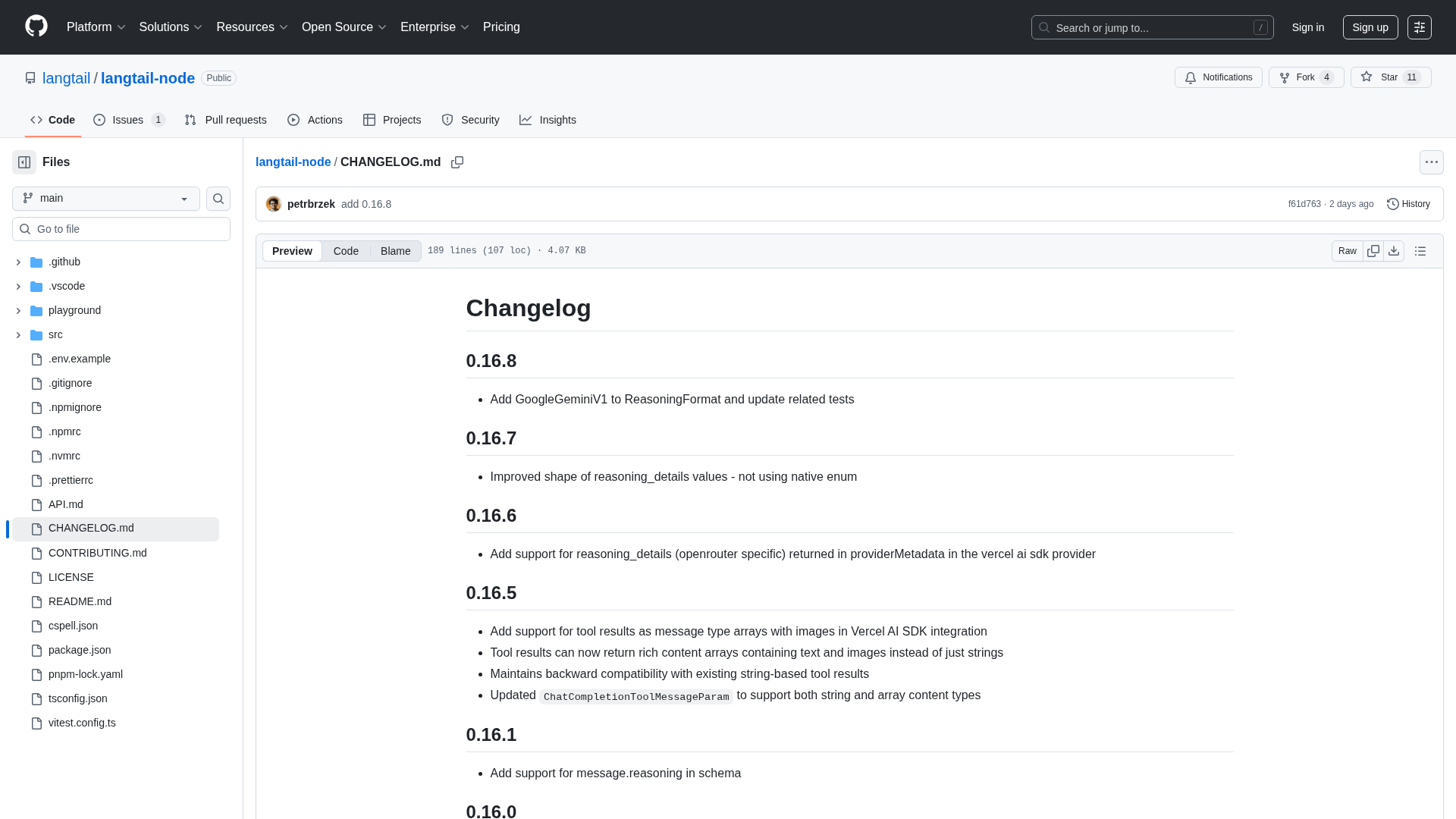

Changelog of langtail-node releases detailing cache controls, tool handling, and Vercel AI SDK improvements.

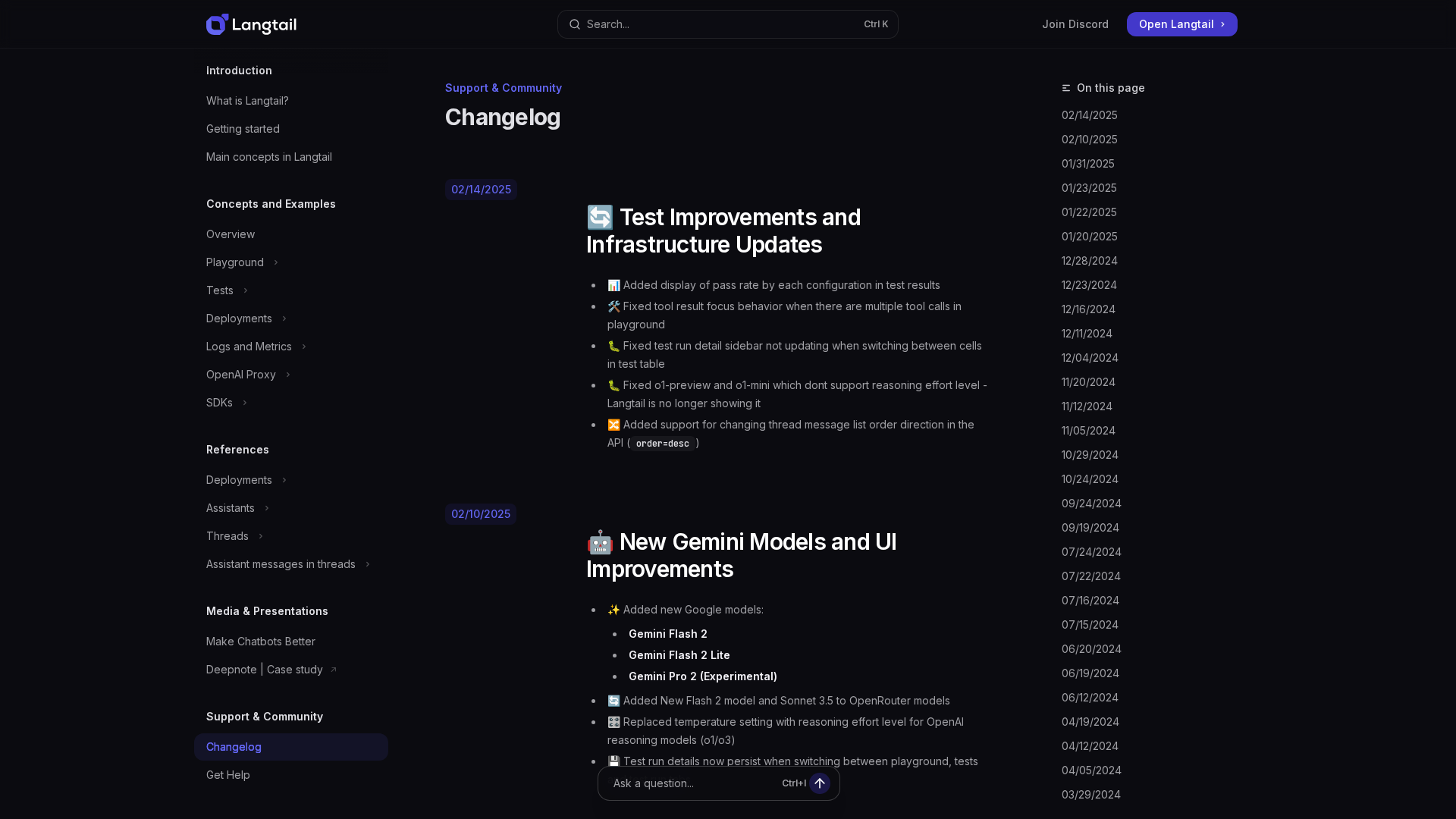

A comprehensive roundup of Langtail's 2024–2025 updates, including Gemini models, o3-mini, caching, and UI/test enhancements.

A practical, real-world guide to using LLM APIs with examples, code, and prompt-engineering tips.

A brief look at the langtail-node CHANGELOG messages: feedback-driven updates and a current loading error.