Overview

OpenPipe is a managed platform for collecting LLM interaction data, fine-tuning models, and hosting inference. It provides a unified SDK to capture request/response logs, prepare datasets, fine-tune models (including reward model support), evaluate models (head-to-head and comparisons vs base models), and host resulting models with caching and optimizations. The platform supports RL-based agent workflows via tooling such as ART (Agent Reinforcement Trainer) and RULER (reward tooling), and includes features like automatic request logs, pruning rules to reduce fine-tuning dataset size, and deployment options (serverless, hourly compute units, and dedicated single-tenant deployments). OpenPipe publishes per-token training pricing by model size, per-token and hourly compute unit (CU) hosted inference billing models, and offers enterprise/custom pricing (volume discounts, on-prem options, dedicated support/SLAs).

Key Features

Unified SDK for data capture

Capture request/response logs, apply pruning rules, and prepare datasets for fine-tuning.

Fine-tuning pipeline

Fine-tune models at scale with support for reward models and RL workflows.

Hosted inference with caching

Host fine-tuned and base models with caching and optimizations to reduce latency and cost.

Evaluation tooling

Head-to-head evaluations and comparisons vs base models with custom criteria.

RL tooling

Supports ART (Agent Reinforcement Trainer) and RULER for reward tooling and RL-based workflows.

Multiple deployment modes

Serverless endpoints, hourly CU-based deployments, and dedicated single-tenant deployments.

Who Can Use This Tool?

- ML engineers:Build, fine-tune, evaluate, and deploy models with integrated data capture and RL tooling.

- Product teams:Replace prompt-heavy workflows with fine-tuned models and host scalable inference endpoints.

- Enterprises:Use dedicated deployments, on-prem options, and enterprise SLAs for latency-sensitive workloads.

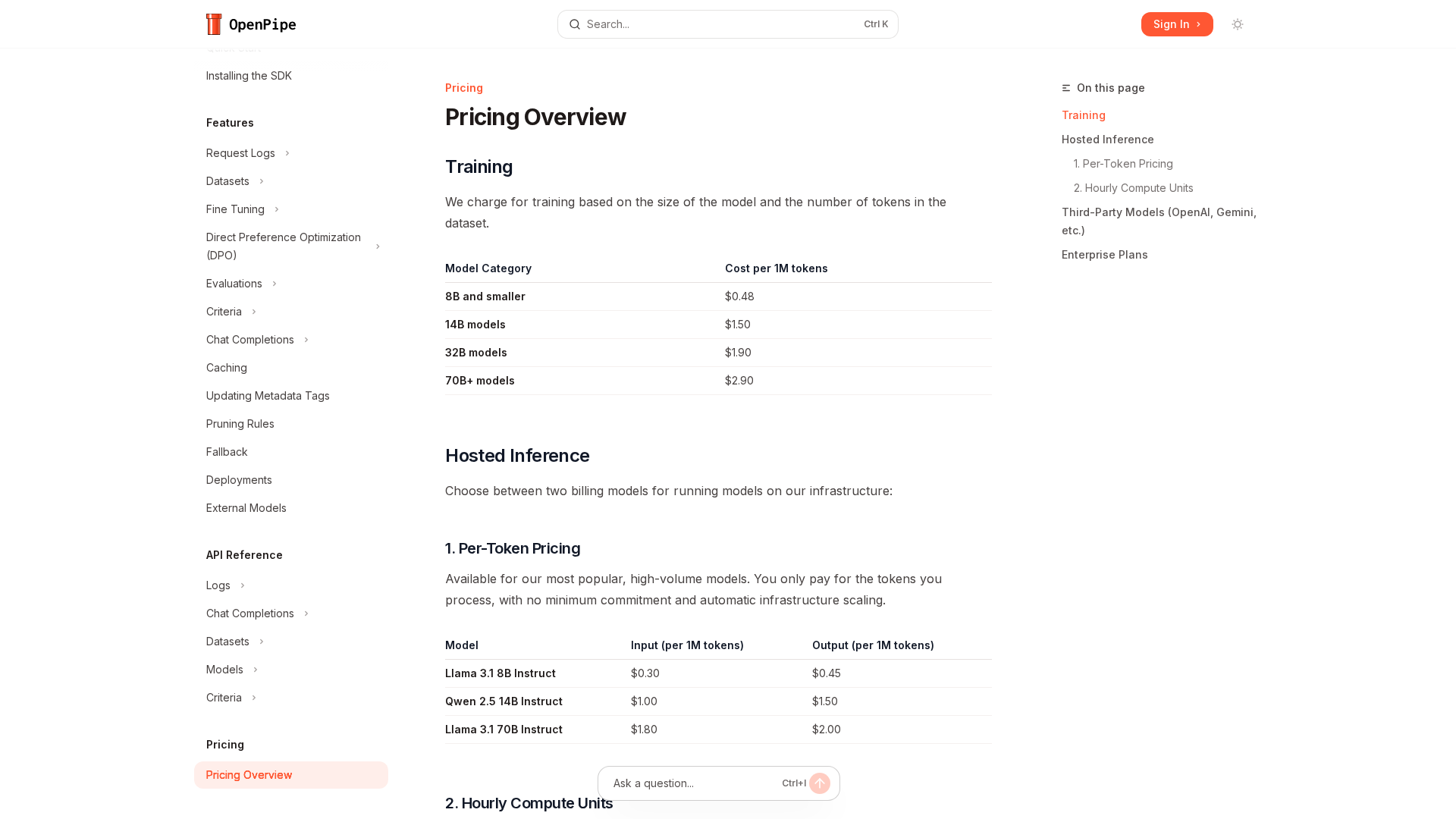

Pricing Plans

Training cost per 1M tokens for 8B and smaller models ($0.48 / 1M tokens).

- ✓Training: $0.48 per 1M tokens

Training cost per 1M tokens for 14B models ($1.50 / 1M tokens).

- ✓Training: $1.50 per 1M tokens

Training cost per 1M tokens for 32B models ($1.90 / 1M tokens).

- ✓Training: $1.90 per 1M tokens

Training cost per 1M tokens for 70B+ models ($2.90 / 1M tokens).

- ✓Training: $2.90 per 1M tokens

Per-token hosted inference example: Llama 3.1 8B Instruct. Input $0.30 / 1M tokens; output $0.45 / 1M tokens. No minimum commitment; automatic scaling.

- ✓Input: $0.30 per 1M tokens

- ✓Output: $0.45 per 1M tokens

- ✓No minimum commitment; automatic scaling

Per-token hosted inference example: Qwen 2.5 14B Instruct. Input $1.00 / 1M tokens; output $1.50 / 1M tokens.

- ✓Input: $1.00 per 1M tokens

- ✓Output: $1.50 per 1M tokens

Per-token hosted inference example: Llama 3.1 70B Instruct. Input $1.80 / 1M tokens; output $2.00 / 1M tokens.

- ✓Input: $1.80 per 1M tokens

- ✓Output: $2.00 per 1M tokens

Hourly compute unit billing example: Llama 3.1 8B at $1.50 per CU-hour. Billed precise to the second; CUs remain active 60s after spikes.

- ✓Price: $1.50 per CU-hour

- ✓Billed precise to the second; CUs stay active 60s after spikes

Hourly compute unit billing example: Mistral Nemo 12B at $1.50 per CU-hour.

- ✓Price: $1.50 per CU-hour

Hourly compute unit billing example: Qwen 2.5 32B Coder at $6.00 per CU-hour.

- ✓Price: $6.00 per CU-hour

Hourly compute unit billing example: Qwen 2.5 72B at $12.00 per CU-hour.

- ✓Price: $12.00 per CU-hour

Hourly compute unit billing example: Llama 3.1 70B at $12.00 per CU-hour.

- ✓Price: $12.00 per CU-hour

Third-party models (OpenAI, Google, etc.) are billed as pass-through; you pay the provider's standard rates with no OpenPipe markup.

- ✓Pass-through billing to third-party providers

Enterprise/custom pricing with volume discounts, on-prem options, dedicated support and SLAs. Contact [email protected] for details.

- ✓Custom pricing and volume discounts

- ✓On-premises options and dedicated support/SLAs

Pros & Cons

✓ Pros

- ✓Unified SDK covering data capture, dataset prep, fine-tuning, evaluation, and hosting.

- ✓Supports RL tooling (ART, RULER) and reward model workflows.

- ✓Flexible deployment options: serverless, CU-hour, and dedicated deployments.

- ✓Transparent published pricing for training and hosted inference; third-party models billed pass-through.

✗ Cons

- ✗No publicly listed free trial in docs (as of the provided information).

- ✗Hourly CU deployments can have cold starts if model idle (~5 minutes) which may increase latency.

- ✗Enterprise/custom pricing requires contacting sales; not fully self-service for large dedicated deployments.

Compare with Alternatives

| Feature | OpenPipe | AgentOps | Together AI |

|---|---|---|---|

| Pricing | N/A | $40/month | N/A |

| Rating | 8.2/10 | 8.2/10 | 8.4/10 |

| Data Capture Depth | Unified SDK deep session and token capture | Token-level traces and full session observability | Limited data capture tooling |

| Fine-tuning Workflow | Yes | No | Yes |

| Hosted Inference | Yes | No | Yes |

| Evaluation & RL | Yes | No | Partial |

| Observability & Replay | Partial | Yes | No |

| Deployment Flexibility | Multiple deployment modes managed and serverless | Self-hosting and enterprise deployment options | Scalable GPU cloud and private cluster deployments |

| Model Ownership | Yes | Yes | Yes |

| Infrastructure Scale | Managed scale with caching and enterprise options | Developer-focused not large GPU infrastructure | Large GPU cloud and private cluster capacity |

Related Articles (14)

Meta to lease 500 MW Visakhapatnam data centre capacity from Sify and land Waterworth submarine cable.

Meta plans a 500MW AI data center in Visakhapatnam with Sify, linked to the Waterworth subsea cable.

OpenPipe accelerates reinforcement learning workflows to empower autonomous agents.

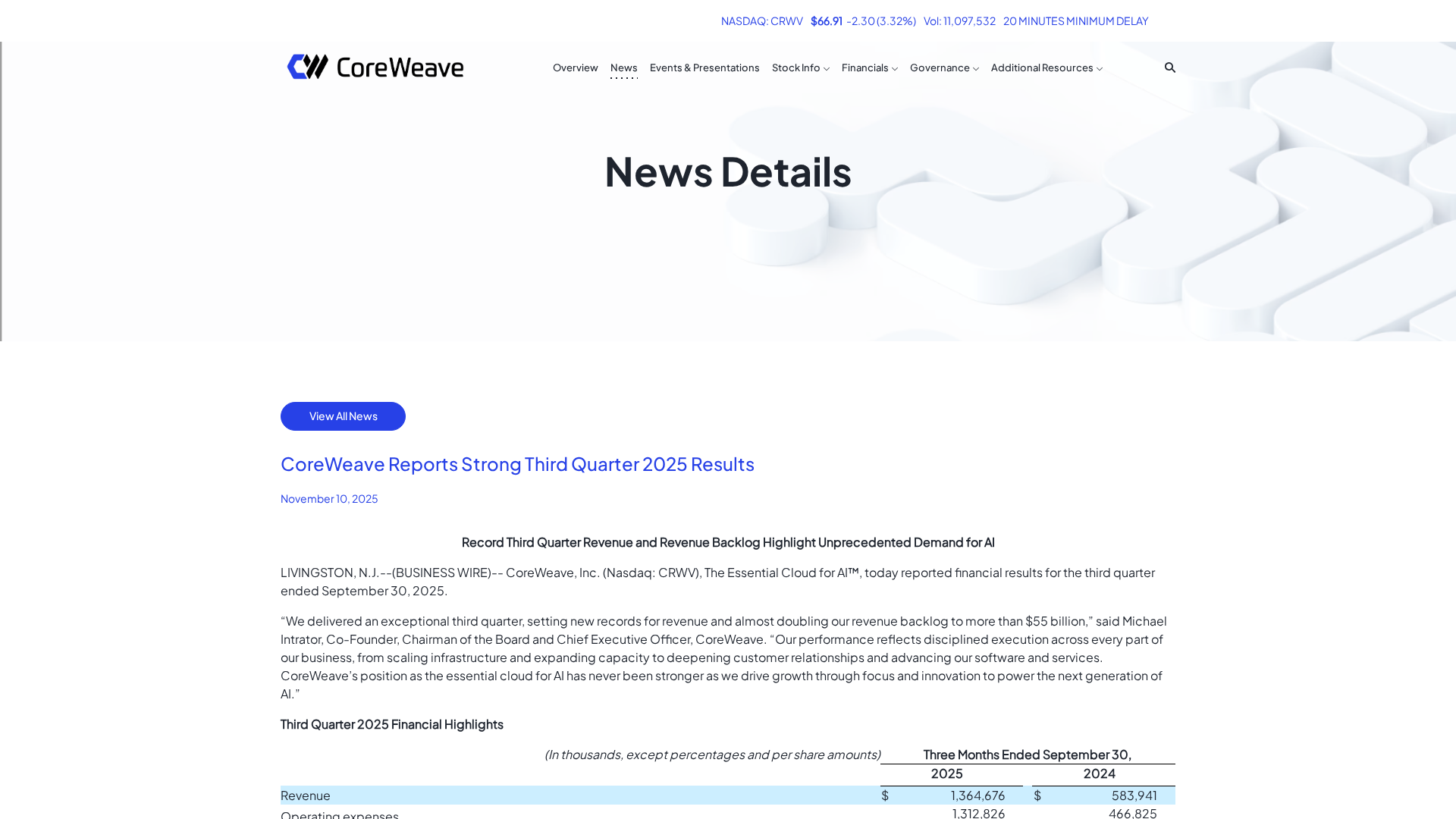

CoreWeave reports record Q3 2025 revenue and a $55.6B backlog, with major AI partnerships and rapid infrastructure expansion.

CoreWeave reports record Q3 2025 revenue, $55.6B backlog, major AI deals, and aggressive capacity expansion.