Overview

This summary is based only on the public pages and docs referenced. The platform's stated goal is to enable an “open superintelligence” / decentralized AI ecosystem by combining a multi-cloud compute marketplace (Compute Cloud / Compute Exchange), an Environments Hub for RL tasks, and decentralized training infrastructure and protocols (INTELLECT series, PRIME protocol).

Compute: aggregates GPUs across major clouds into a single interface, with multi-node clusters (advertised scale of 64+ H100 multi-node), Docker-ready workloads, 1-click deploy, and cross-cloud GPU price/availability scoring. Marketing claims “no extra fees” (pay as if direct to clouds).

Decentralized training & protocols: public INTELLECT initiatives (INTELLECT-1, INTELLECT-2, etc.) and a PRIME protocol testnet are referenced, with claims of decentralized training of 10B-parameter models and ongoing scaling.

Environments Hub: community-driven repository for RL environments, verifiers, sandboxes, and tooling for training/evaluation.

Datasets & research: references to SYNTHETIC-2 and collaborative datasets/reasoning-trace projects.

Documentation and developer resources: cross-cloud GPU discovery, an inference quickstart (API, CLI, OpenAI-compatible API, token-based billing), environments packaging/publishing guides, and contribution guidelines for compute contributors (self-hosting, worker software, integrity rules, slashing/bans). Public announcements cover the platform introduction, a compute deep-dive, a fundraise (~$15M round announced in a blog post), and the Environments Hub launch.

Authentication: API keys are generated in Account → API Keys; the CLI is recommended; team accounts use the X-Prime-Team-ID header for team credits.

Community: Discord and other social channels are referenced.

Security: vulnerability reporting via a dedicated email address (obfuscated in the reviewed copy) and a PGP public key; canonical security pages are linked.

Pricing notes: inference uses usage/token-based billing (charged for input and output tokens), but the inference overview page reviewed shows no explicit per-token rates. Compute marketing claims competitive pricing and “no extra fees,” but no canonical public per-GPU hourly price sheet was found on the pages reviewed.

Gaps identified: no complete public compute price sheet, no explicit per-token rates on the inference overview page, some live API examples not collected, and exact GitHub repo URLs not copied. Links and next-step actions were collected and are included separately. This description is limited to the public information and links provided in the source text; no additional facts or unverifiable claims were added.

Key Features

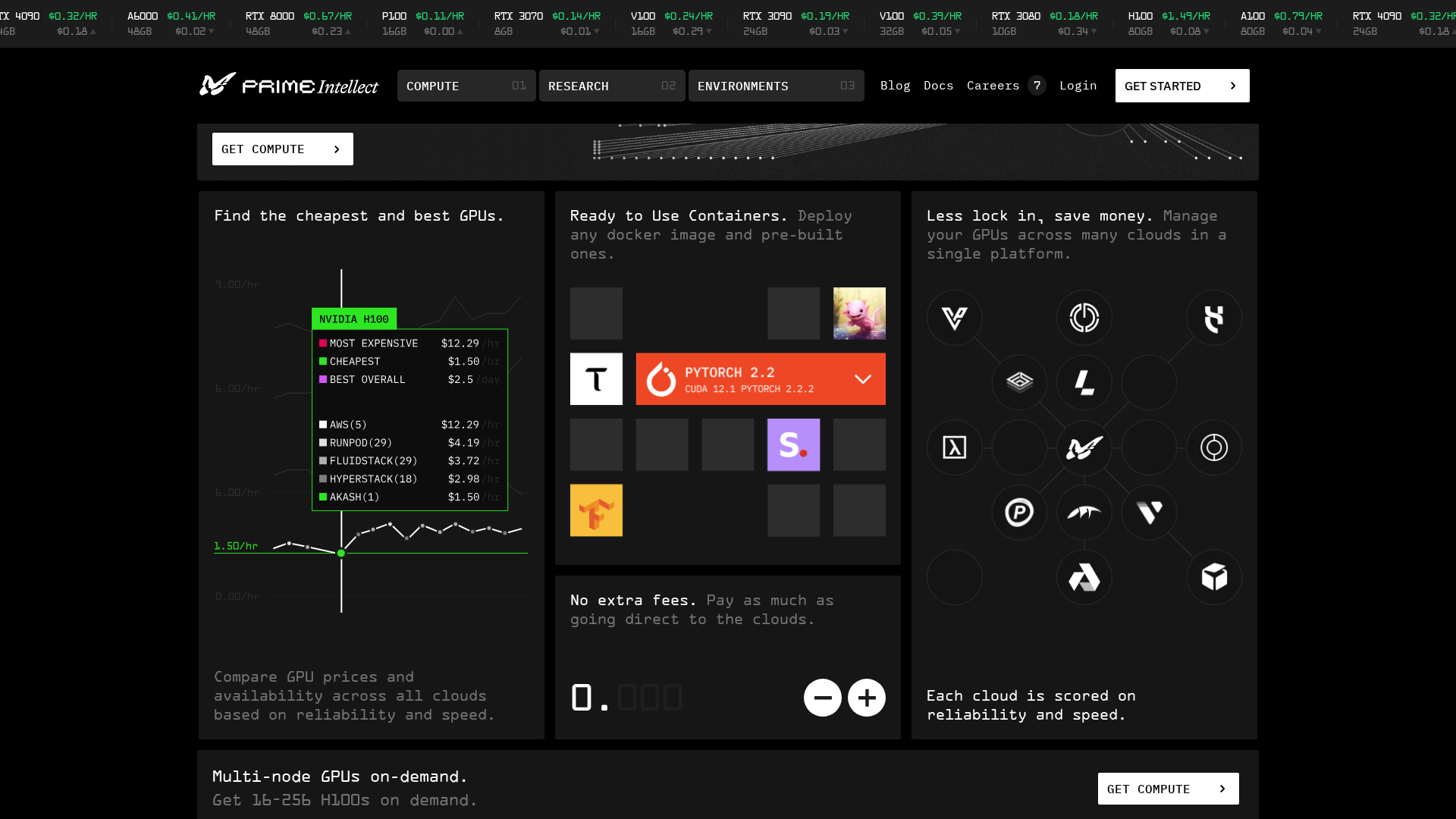

Multi-cloud Compute Marketplace (Compute Cloud / Compute Exchange)

Aggregates GPUs across major clouds into one interface, offers multi-node clusters, Docker-ready workloads, 1-click deploy, and cross-cloud price/availability scoring; marketing claims “no extra fees.”
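The pages reviewed do not describe how the cross-cloud price/availability scoring works, so the following is only a minimal sketch of the general idea: filter offers to those that can satisfy a request, then order them by price. The `GpuOffer` type, the `rank_offers` function, the provider names, and every price below are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOffer:
    """One provider's listing for a GPU type (all fields are illustrative)."""
    provider: str
    gpu_type: str
    hourly_usd: float  # price per GPU-hour
    available: int     # GPUs currently free


def rank_offers(offers, gpu_type, gpus_needed):
    """Return offers that can fill the request, cheapest first.

    Ties on price are broken in favor of the provider with more free GPUs.
    This ordering rule is an assumption, not the platform's documented scoring.
    """
    candidates = [
        o for o in offers
        if o.gpu_type == gpu_type and o.available >= gpus_needed
    ]
    return sorted(candidates, key=lambda o: (o.hourly_usd, -o.available))


# Made-up sample data; not real providers or prices.
SAMPLE_OFFERS = [
    GpuOffer("cloud-a", "H100", 3.10, 8),
    GpuOffer("cloud-b", "H100", 2.75, 4),
    GpuOffer("cloud-c", "H100", 2.90, 16),
    GpuOffer("cloud-c", "A100", 1.60, 32),
]

if __name__ == "__main__":
    for offer in rank_offers(SAMPLE_OFFERS, "H100", gpus_needed=8):
        print(offer.provider, offer.hourly_usd, offer.available)
```

In this sketch the cheapest listing (`cloud-b`) is skipped for an 8-GPU request because it has only 4 GPUs free, which is the kind of trade-off a combined price/availability score has to handle.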

Decentralized Training & Protocols

Public INTELLECT initiatives (INTELLECT-1, INTELLECT-2, etc.) and a PRIME protocol testnet that demonstrate decentralized training efforts, including claimed training of 10B+ parameter models.

Environments Hub for RL

Community-driven repository and tooling for RL environments, verifiers, sandboxes, and integrated training/evaluation workflows to standardize open RL tasks.

Inference API & CLI

OpenAI-compatible API and CLI quick-starts; API keys created via account; team header (X-Prime-Team-ID) for team credits; billing is token-based (input/output tokens).
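As a rough illustration of how these pieces fit together, the sketch below assembles (but does not send) an OpenAI-style chat completion request. Only the `X-Prime-Team-ID` header name comes from the source. The base URL is a deliberately invalid placeholder, the `Authorization: Bearer` scheme and the `/chat/completions` path are assumptions based on the usual OpenAI-compatible convention, and `build_chat_request` is a hypothetical helper.

```python
import json

# Placeholder only: the real base URL must be taken from the platform docs.
BASE_URL = "https://api.example.invalid/v1"


def build_chat_request(api_key, model, prompt, team_id=None):
    """Assemble the URL, headers, and JSON body for a chat completion call.

    Nothing is sent over the network; the returned dict can be handed to
    any HTTP client.
    """
    headers = {
        # Assumed bearer-token scheme, as is conventional for
        # OpenAI-compatible APIs.
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    if team_id is not None:
        # Header named in the source for billing against team credits.
        headers["X-Prime-Team-ID"] = team_id

    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    })
    return {
        "url": f"{BASE_URL}/chat/completions",  # assumed path
        "headers": headers,
        "body": body,
    }


if __name__ == "__main__":
    request = build_chat_request(
        api_key="YOUR_API_KEY",       # created under Account → API Keys
        model="some-model-id",        # hypothetical model name
        prompt="Hello",
        team_id="YOUR_TEAM_ID",
    )
    print(request["url"])
    print(sorted(request["headers"]))
```

Omitting `team_id` leaves the team header out entirely, which in this sketch stands in for a request made on a personal account.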

Datasets & Research Projects

References to collaborative datasets such as SYNTHETIC-2 and reasoning-trace projects used for benchmarking and research efforts.

Contribution & Integrity Guidelines

Guidance for compute contributors including self-hosting, worker software, integrity rules, and slashing/bans for violations.

Who Can Use This Tool?

- Developers: Build and deploy models using multi-cloud GPUs, CLI and API integrations, and contribute environments or tooling.

- Researchers: Run decentralized training experiments, access collaborative datasets, and use Environments Hub for benchmarking.

- Compute providers: Join the compute marketplace, self-host worker software, and follow contribution/integrity guidelines to earn from supplying resources.

Pricing Plans

Usage- and token-based inference billing charged for input and output tokens (no per-token rates published on overview page).

- ✓ Token-based billing for input/output tokens

- ✓ OpenAI-compatible API and CLI support

- ✓ Team header (X-Prime-Team-ID) for team credits
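Because input and output tokens are billed separately and no per-token rates were published on the page reviewed, a cost estimate has to take the rates as inputs. The sketch below shows the arithmetic only; `estimate_cost` is a hypothetical helper, quoting rates per million tokens is an assumption, and the example rates are invented rather than the platform's prices.

```python
TOKENS_PER_MILLION = 1_000_000


def estimate_cost(input_tokens, output_tokens,
                  input_rate_per_million, output_rate_per_million):
    """Estimate the charge for one request under token-based billing.

    Rates are expressed in USD per one million tokens (an assumed unit).
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / TOKENS_PER_MILLION * input_rate_per_million
    output_cost = output_tokens / TOKENS_PER_MILLION * output_rate_per_million
    return input_cost + output_cost


if __name__ == "__main__":
    # Invented rates for illustration only.
    cost = estimate_cost(
        input_tokens=1_000_000,
        output_tokens=500_000,
        input_rate_per_million=1.00,
        output_rate_per_million=2.00,
    )
    print(f"Estimated cost: ${cost:.2f}")
```

With these made-up rates, the input and output sides each contribute $1.00, so a request that generates half as many tokens as it reads costs the same on both sides when output is priced at twice the input rate.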

Compute marketplace with cross-cloud GPU aggregation and marketing claims of competitive pricing. No canonical public per-GPU hourly price sheet was found; contact sales or use the app/dashboard for quotes.

- ✓ Aggregated GPUs across major clouds

- ✓ Multi-node clusters (advertised scale to 64+ H100 multi-node)

- ✓ Docker-ready workloads and 1-click deploy

Access to Environments Hub, tooling, and community resources; grants and contributor programs are referenced.

- ✓ Environment publishing and verifiers

- ✓ Community grants and contributor programs

- ✓ Tools: Prime CLI, Verifiers, prime-rl referenced

Pros & Cons

✓ Pros

- ✓ Open, decentralized platform vision combining compute, training, and environments

- ✓ Multi-cloud GPU aggregation with multi-node cluster support and ready-to-use Docker workloads

- ✓ Public decentralized-training initiatives and a protocol testnet (INTELLECT series, PRIME protocol)

- ✓ Community-driven Environments Hub to standardize and scale RL tasks

- ✓ Inference API and CLI with OpenAI-compatible interface and team-account support

- ✓ Security contact and PGP key for vulnerability reporting

✗ Cons

- ✗ No canonical, complete public per-GPU hourly price sheet found in the reviewed pages

- ✗ Inference overview page referenced token billing but did not publish explicit per-token rates

- ✗ Exact GitHub repository URLs for referenced tools (Prime CLI, Verifiers, prime-rl) were not copied

- ✗ Some live API examples or detailed payloads were not collected on the overview pages reviewed

Compare with Alternatives

| Feature | Prime Intellect | Together AI | PublicAI |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.3/10 | 8.4/10 | 8.0/10 |
| Compute Marketplace Depth | Multi-cloud compute marketplace | Scalable GPU cloud, no marketplace | No compute marketplace, data-marketplace focused |
| Training Decentralization | Yes | No | Partial |
| RL Environments Hub | Yes | No | No |
| Fractional GPU Support | Partial | Partial | No |
| Data Marketplace Presence | Partial | No | Yes |
| Research & Pretraining Tools | Datasets and open research projects | Pretraining and research tooling | HITL and model evaluation tooling |
| Governance & Incentives | Partial | No | Yes |

Related Articles (4)

How DePIN, data marketplaces, and zkML could fix AI’s governance, privacy, and compute bottlenecks through blockchain-enabled collaboration.

Prime Intellect now offers permissionless Akash GPU access, including H100 and A100, via an integrated, user-friendly platform.

Open, community-powered platform unifying multi-cloud compute, open RL environments, and decentralized training for frontier AI.

Last week, it was tariffs. This week? $6B gone, no thanks to Mantra. The RWA-f...