Overview

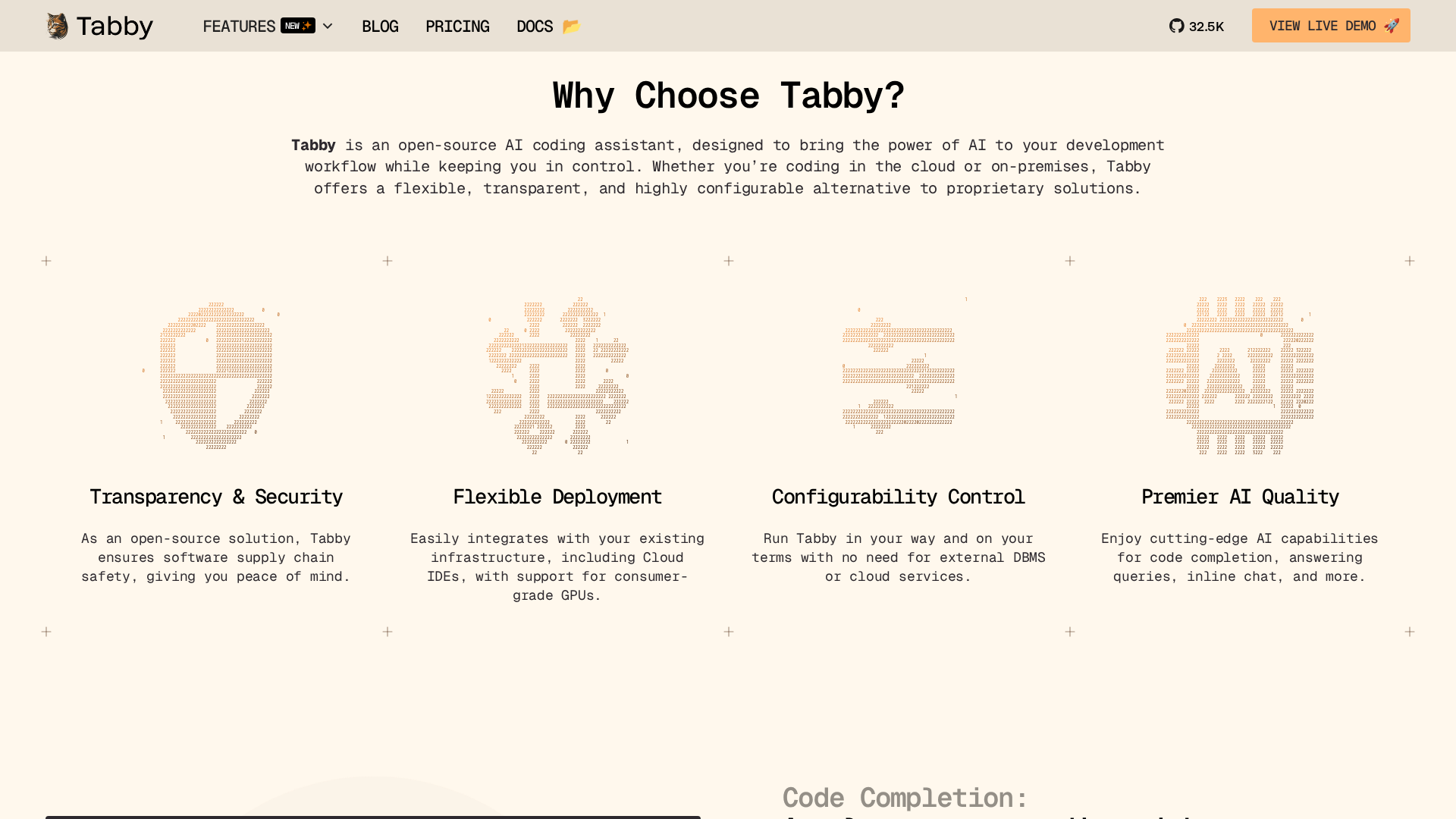

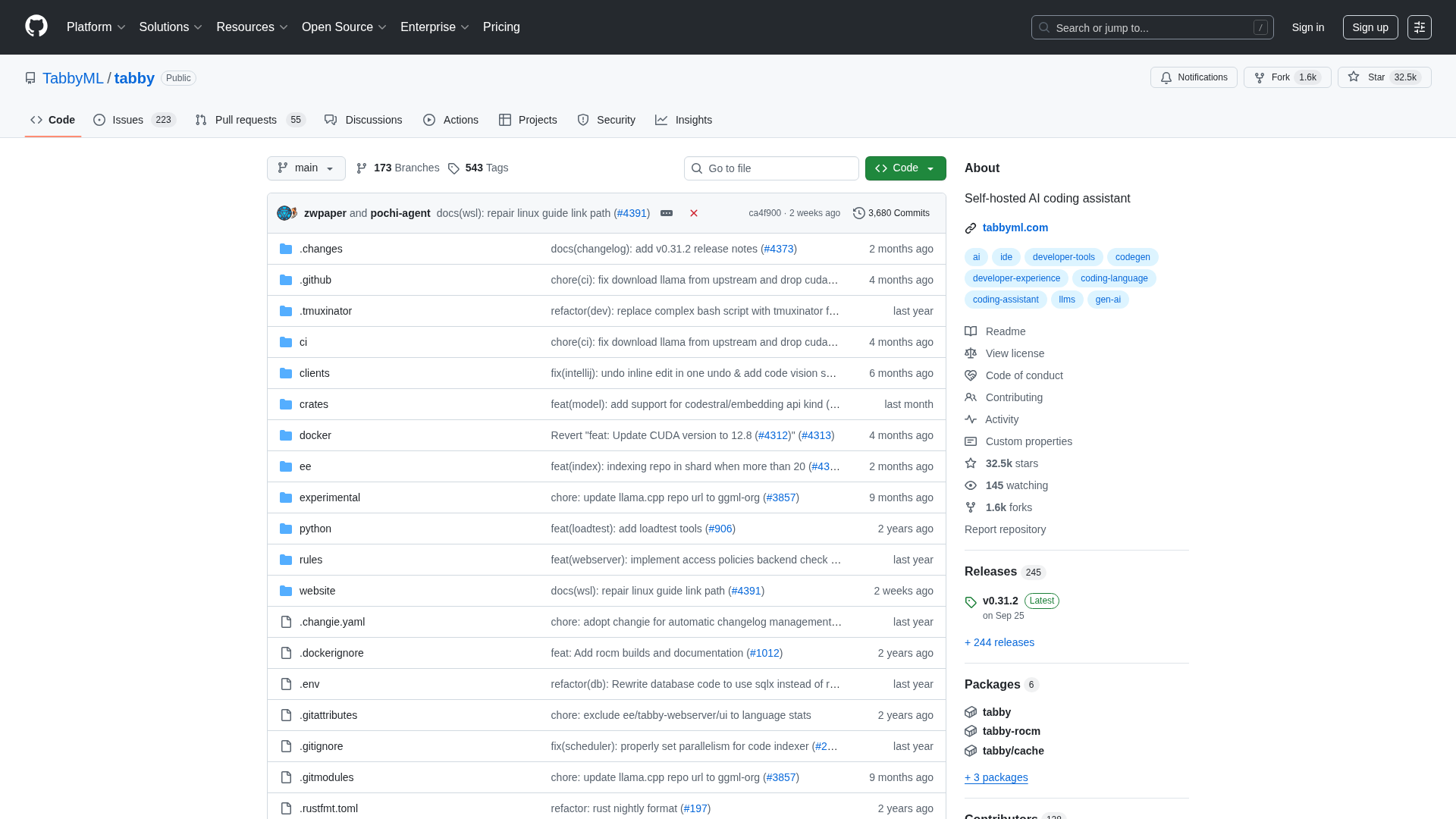

Tabby is an open-source, self-hosted AI coding assistant that provides IDE extensions and model serving. Key capabilities described in the documentation and on the marketing site include fast, context-aware code completion; an in-IDE Answer Engine and Inline Chat; Data Connectors/Context Providers that ingest project docs, configs, and APIs; model interoperability (with references to CodeLlama, StarCoder, CodeGen, and others); and EngOps tooling for deployment, backups, and telemetry controls. The project emphasizes transparency and local-first operation (self-hosted or on-prem) while also offering flexible cloud deployment options and a usage-based Tabby Cloud service.

The documentation includes quick-start installation notes (including a CUDA 11+ requirement for GPU acceleration on Windows), cloud-deployment guides (Modal, BentoML, SkyPilot) that mention supported GPUs (T4, L4, A100), an administration backup page noting the default SQLite database location ($HOME/.tabby/ee/db.sqlite), and a models registry with a public benchmark leaderboard.

Marketing and pricing content lists four tiers: Community (free, local-first), Team (per-user subscription; the marketing page shows an example of about $19/user/month), Enterprise (custom pricing, SSO, and advanced security), and Tabby Cloud (usage-based billing with some free monthly credits and budget controls). The pricing page hosted on the docs site returned a 404 error, and the marketing pricing page contains some inconsistent or garbled text, so verify exact figures with sales before relying on them.

Key Features

Context-aware code completion

Fast, context-aware code completion delivered through IDE extensions to improve developer productivity.

In-IDE Answer Engine and Inline Chat

An Answer Engine and Inline Chat let developers ask questions inside the IDE and receive context-aware responses.

Data Connectors / Context Providers

Connectors and context providers to pull project documentation, configuration files, and API specs into the model context.

Model interoperability

Supports multiple models for flexible model choice; the marketing site and docs reference CodeLlama, StarCoder, CodeGen, and others.

IDE extensions + model serving

Architecture includes IDE extensions and model-serving components for self-hosted or cloud deployment.

EngOps and administration tooling

Tools and documentation for deployment, backups, telemetry controls, and operational management.
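The overview notes that the administration backup page gives the default SQLite database location as `$HOME/.tabby/ee/db.sqlite`. As a minimal sketch, assuming that path and assuming a SQLite-level snapshot is an acceptable backup for your Tabby version (check the official backup page first), Python's standard-library `sqlite3` online backup API can copy the database consistently even while another process has it open. The helper name `backup_tabby_db` and the `DEFAULT_DB` constant are hypothetical, not part of Tabby.

```python
import sqlite3
from pathlib import Path

# Assumed default location (from the backup page cited above); adjust for your install.
DEFAULT_DB = Path.home() / ".tabby" / "ee" / "db.sqlite"


def backup_tabby_db(src: Path, dest: Path) -> Path:
    """Snapshot the SQLite database at `src` into `dest` (hypothetical helper).

    Uses SQLite's online backup API (sqlite3.Connection.backup), which yields a
    consistent copy rather than a possibly torn file-level copy.
    """
    src = src.expanduser().resolve()
    if not src.is_file():
        raise FileNotFoundError(f"database not found: {src}")
    dest.parent.mkdir(parents=True, exist_ok=True)

    # Open the source read-only; the backup only needs to read it.
    src_conn = sqlite3.connect(f"{src.as_uri()}?mode=ro", uri=True)
    try:
        dest_conn = sqlite3.connect(dest)
        try:
            src_conn.backup(dest_conn)  # copies all pages in a single step
        finally:
            dest_conn.close()
    finally:
        src_conn.close()
    return dest
```

For example, `backup_tabby_db(DEFAULT_DB, Path("backups") / "db.sqlite")` writes a snapshot into a local `backups/` directory. The online backup API is used instead of a plain file copy because copying a database file while it is being written can capture an inconsistent state. Restoring is not covered here; follow Tabby's backup documentation for that step.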

Who Can Use This Tool?

- Individual developers: a local-first AI coding assistant; the free Community plan is referenced as supporting up to 5 users.

- Teams: small to medium teams seeking paid, per-user collaboration features with cloud or self-hosted deployment.

- Enterprises: large organizations requiring SSO, advanced security, custom contracts, and operational controls.

Pricing Plans

Community

Free, local-first Community plan intended for individuals and small teams. The marketing page references up to 5 users for this plan.

- ✓ Local-first / self-hosted use

- ✓ Free community access

- ✓ Up to 5 users referenced on the marketing page

Team

Paid, per-user Team plan. The marketing page shows an example price of about $19/user/month, but the pricing page and plan labels contain inconsistencies, so verify with sales.

- ✓ Per-user billing

- ✓ Collaboration features (as marketed)

- ✓ Hosted or self-hosted options implied

Enterprise

Custom Enterprise offering with unlimited users, SSO, and advanced security. Contact sales for details and official pricing.

- ✓ Unlimited users (marketing claim)

- ✓ SSO and advanced security

- ✓ Custom contract and support

Tabby Cloud

Tabby Cloud is a usage-based cloud offering with some free monthly credits and budget controls, as described on the marketing page. Confirm exact billing details with sales.

- ✓ Usage-based billing

- ✓ Free monthly credits referenced

- ✓ Budget controls

Pros & Cons

✓ Pros

- ✓ Open source and self-hosted, allowing local-first and on-prem deployments

- ✓ IDE extensions with context-aware code completion and Inline Chat/Answer Engine

- ✓ Supports multiple models (CodeLlama, StarCoder, CodeGen, and others are referenced) for flexibility

- ✓ Documentation covers installation, backups, cloud deployments, and model benchmarks

- ✓ Cloud deployment guides (Modal, BentoML, SkyPilot) that list supported GPUs (T4, L4, A100)

✗ Cons

- ✗ The docs-subdomain pricing page returned a 404, and the marketing pricing page contains inconsistent or garbled text; verify pricing with sales

- ✗ Self-hosted setups require operational work (e.g., GPU/CUDA requirements for acceleration; the Windows install notes require CUDA 11+)

- ✗ Some marketing content appears inconsistent; do not rely on the example prices shown without confirmation

- ✗ No explicit mobile app information was found in the inspected pages

Compare with Alternatives

| Feature | Tabby | Aider | Cline |
|---|---|---|---|
| Pricing | ~$19/user/month (Team, unverified) | N/A | N/A |
| Rating | 8.4/10 | 8.3/10 | 8.1/10 |
| Deployment Flexibility | Yes | Partial | Partial |
| Local-First Support | Yes | Partial | Yes |
| Model Interoperability | Yes | Yes | Yes |
| IDE Integration Depth | Deep IDE extensions and inline chat | Editor and CLI integration | Limited IDE integration |
| Agent Automation | Partial | Yes | Yes |
| Context Connectors | Yes | Yes | Yes |
| EngOps Tooling | Yes | Partial | Yes |
| Observability & Audit | Yes | Partial | Yes |

Related Articles (5)

Overview of TabbyML's Tabby, a self-hosted AI coding assistant, and its place in a growing ecosystem of local-first AI tools.

A chronological roundup of Tabby’s latest features, integrations, and deployment updates from 2023–2024.

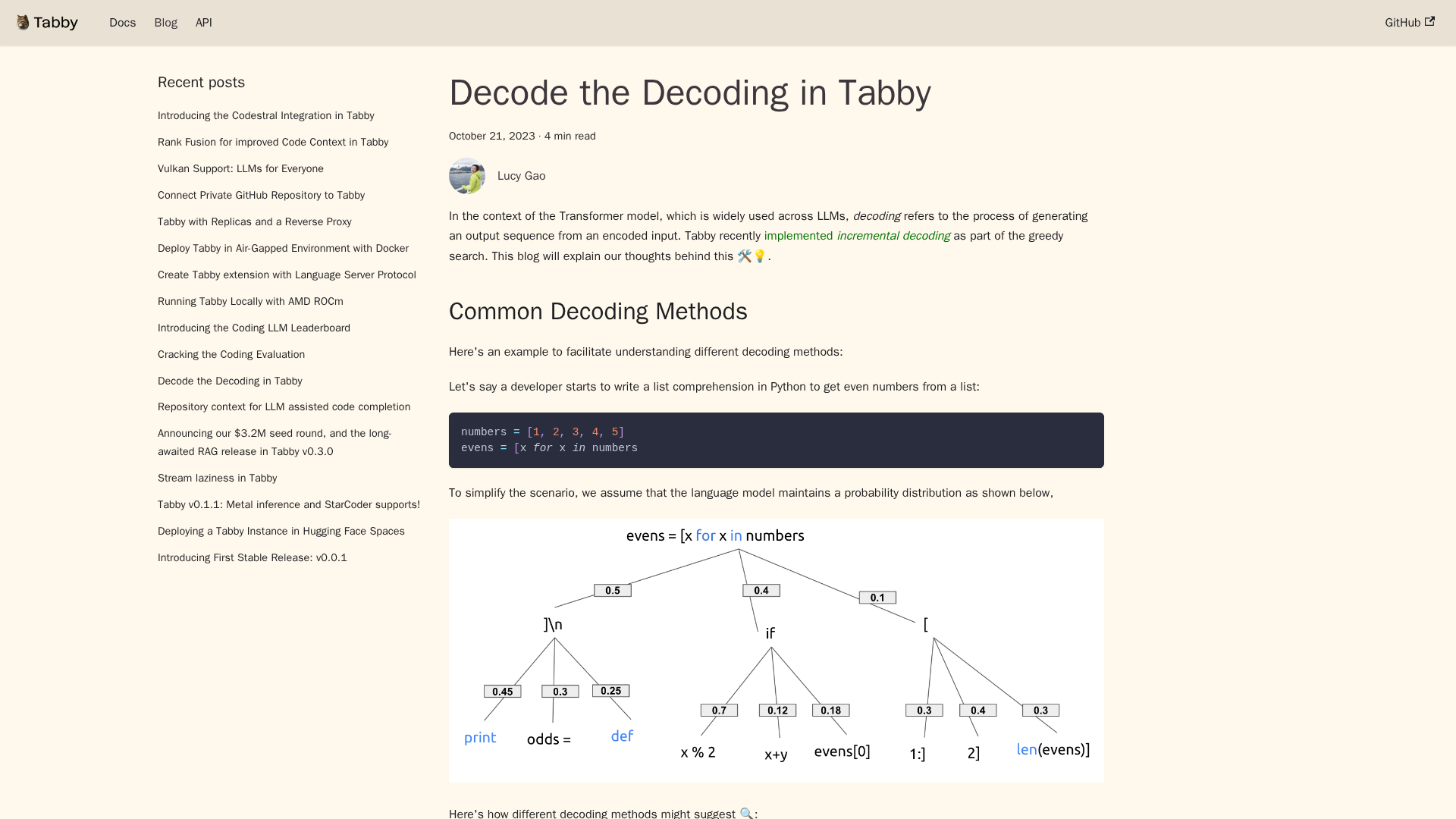

Incremental decoding, streaming, and retrieval-augmented coding in Tabby, with deployment tips and roadmap.

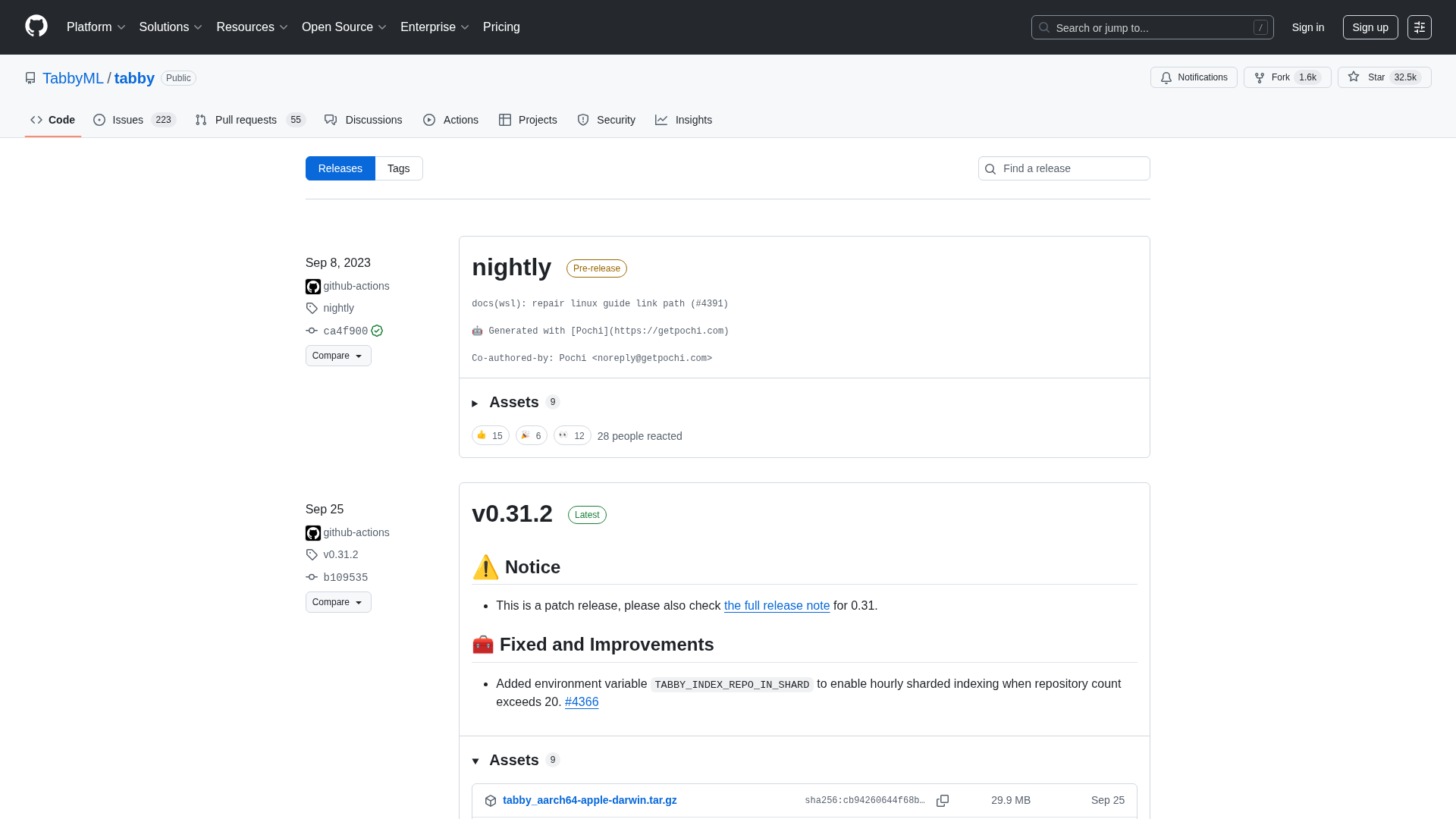

GitHub release notes for TabbyML/tabby, featuring a new nightly build and 0.31.x patches with sharded indexing and SQLite improvements.

Tabby is an open-source, self-hosted AI coding assistant with OpenAPI, GPU support, and Docker-based quickstart.
