Topic Overview

This topic covers the landscape of AI inference and deployment platforms (managed services and hardware-optimized servers that host models in production) and how they integrate with cloud and MCP (Model Context Protocol) deployment tooling. It includes managed inference platforms such as Baseten, vendor-optimized inference servers like Red Hat AI Inference Server tuned for AWS Trainium and Inferentia, and accelerator integrations with Cerebras hardware.

Relevance (2026-01-22): production AI workloads continue to demand higher throughput, tighter latency control, better cost efficiency, and compliance. That has driven the convergence of three trends: specialized accelerators (Trainium/Inferentia, Cerebras WSE) and vendor stacks for higher performance; managed inference and MLOps platforms for lifecycle management and scaling; and standardized integration layers (MCP) that connect LLMs, agents, and external systems consistently across clouds and edge platforms.

Key tools and their roles: Baseten provides managed model hosting and deployment workflows; Red Hat AI Inference Server on Trainium/Inferentia is a server optimized for AWS accelerator hardware, aimed at lower latency and cost at scale; Cerebras integrations provide wafer-scale engine support for very large models and high-throughput workloads. Complementary MCP-compatible deployment tooling includes Google Cloud Run and Cloudflare MCP servers for serverless and edge-hosted agent integration, Firebase’s experimental MCP server, Daytona and YepCode for sandboxed execution of LLM-generated code, and other MCP servers that standardize context passing and secure runtime orchestration.

Taken together, these platforms and integrations let teams choose among managed convenience, hardware-optimized performance, and secure, standardized deployment patterns. Key considerations are latency vs. cost, model size and parallelism needs, runtime safety (sandboxing), and the operational tooling needed to manage context, scaling, and cross-cloud deployments.
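As a rough illustration of what the standardized integration layer mentioned above looks like on the wire: MCP clients invoke server tools with JSON-RPC 2.0 messages using the `tools/call` method. The sketch below only builds such a request in Python. The tool name `deploy_service` and its arguments are hypothetical and do not come from any of the servers listed here; a real server advertises its own tool names and argument schemas.

```python
import json
from itertools import count

# Simple incrementing request ids, as JSON-RPC expects a unique id per request.
_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool name and arguments, for illustration only.
message = make_tool_call("deploy_service", {"service": "demo", "region": "us-central1"})
print(message)
```

Because every MCP server accepts the same request shape, an agent can talk to a deployment server or a sandbox server by changing only the tool name and arguments.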

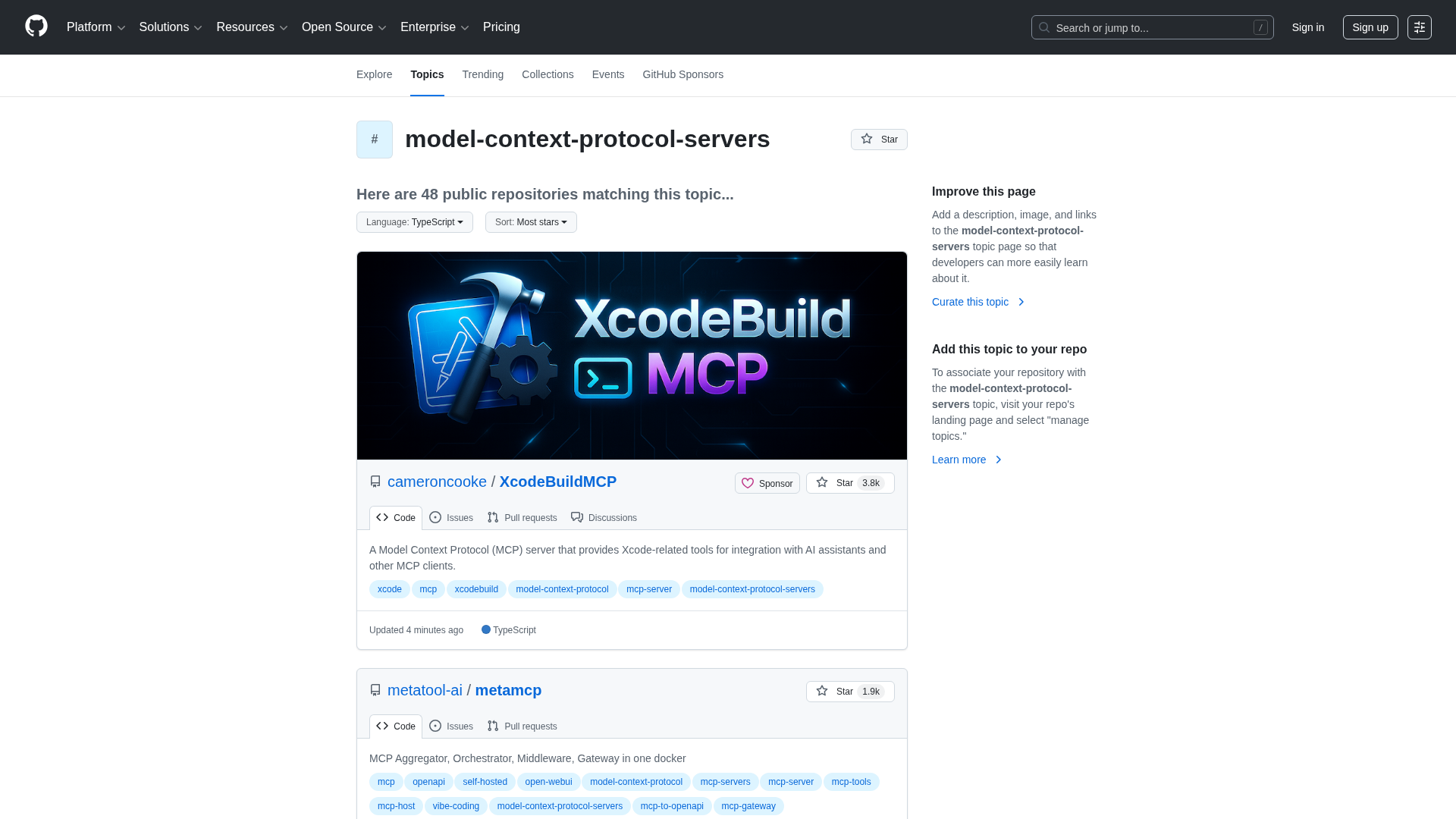

MCP Server Rankings – Top 5

Deploy code to Google Cloud Run

Deploy, configure & interrogate your resources on the Cloudflare developer platform (e.g. Workers/KV/R2/D1)

Fast and secure execution of your AI generated code with Daytona sandboxes

Firebase's experimental MCP Server to power your AI Tools

An MCP server that runs YepCode processes securely in sandboxed environments for AI tooling.