Topic Overview

Decentralized AI compute marketplaces and GPU rental platforms connect model owners and developers with on-demand accelerator capacity, ranging from peer-to-peer GPU spot offers to managed cloud GPU fleets. As of 2026-01-22, this space is driven by growing model scale, heterogeneous accelerator demand, and cost pressure, which make rental marketplaces (e.g., Argentum AI, iExec) and centralized providers (e.g., Render) increasingly relevant for inference, fine-tuning, and batch training.

Key technical themes: interoperability with existing cloud platforms and hypervisors; secure execution of LLM-generated code; and standardized control interfaces. Tools in this ecosystem address those needs: Daytona and YepCode provide sandboxed runtimes for safe execution of AI-generated code; Cloudflare, Google Cloud Run, and Grafbase host Model Context Protocol (MCP) servers that let agents deploy, configure, and query external resources; and marketplace platforms broker GPU access and billing. Together they enable hybrid workflows where decentralized capacity is orchestrated alongside managed cloud instances and hypervisor-backed VMs.

Why it matters now: continued shortages and price volatility for GPUs, regulatory emphasis on data locality, and the rise of agentic workflows have increased demand for flexible, composable compute procurement. Marketplaces offer cost arbitrage and geographic diversity, while MCP-compatible integrations and sandboxing reduce operational and security risk when agents manage remote resources. Practitioners evaluating these platforms should weigh latency, reliability, and governance (identity, billing, provenance), plus how well a marketplace integrates with MCP servers, sandbox runtimes, and existing cloud/hypervisor stacks to support secure, reproducible AI workloads.
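One way to make that evaluation concrete is a simple weighted score across candidate offers. The sketch below is illustrative only: the `GpuOffer` fields, the `rank_offers` helper, the weight names, and every number are hypothetical and do not come from any marketplace's API. It assumes lower price and lower latency are better, and that reliability is expressed as an uptime fraction between 0 and 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOffer:
    """Hypothetical description of one rental offer (all fields are assumptions)."""
    provider: str
    price_per_gpu_hour: float  # USD, assumed positive
    latency_ms: float          # round-trip latency to the workload's region
    uptime: float              # observed reliability as a fraction in [0, 1]
    has_mcp_server: bool       # whether an MCP integration is available


def rank_offers(offers, weights):
    """Return offers sorted best-first by a weighted score.

    Price and latency are normalized against the worst (largest) value in the
    candidate set, so the cheapest and fastest offers score closest to 1.
    """
    if not offers:
        return []
    max_price = max(o.price_per_gpu_hour for o in offers) or 1.0
    max_latency = max(o.latency_ms for o in offers) or 1.0

    def score(o):
        return (
            weights["cost"] * (1 - o.price_per_gpu_hour / max_price)
            + weights["latency"] * (1 - o.latency_ms / max_latency)
            + weights["reliability"] * o.uptime
            + weights["integration"] * (1.0 if o.has_mcp_server else 0.0)
        )

    return sorted(offers, key=score, reverse=True)


# Made-up offers for illustration; not real quotes.
p2p = GpuOffer("p2p-spot", 1.0, 200.0, 0.95, False)
managed = GpuOffer("managed-cloud", 2.0, 50.0, 0.999, True)

balanced = {"cost": 0.4, "latency": 0.2, "reliability": 0.3, "integration": 0.1}
cost_only = {"cost": 1.0, "latency": 0.0, "reliability": 0.0, "integration": 0.0}

best_balanced = rank_offers([p2p, managed], balanced)[0].provider
best_cheapest = rank_offers([p2p, managed], cost_only)[0].provider
```

The weights encode priorities rather than facts: a latency-sensitive inference service and an overnight batch fine-tune would reasonably pick different values, and governance criteria such as identity or provenance would need their own fields.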

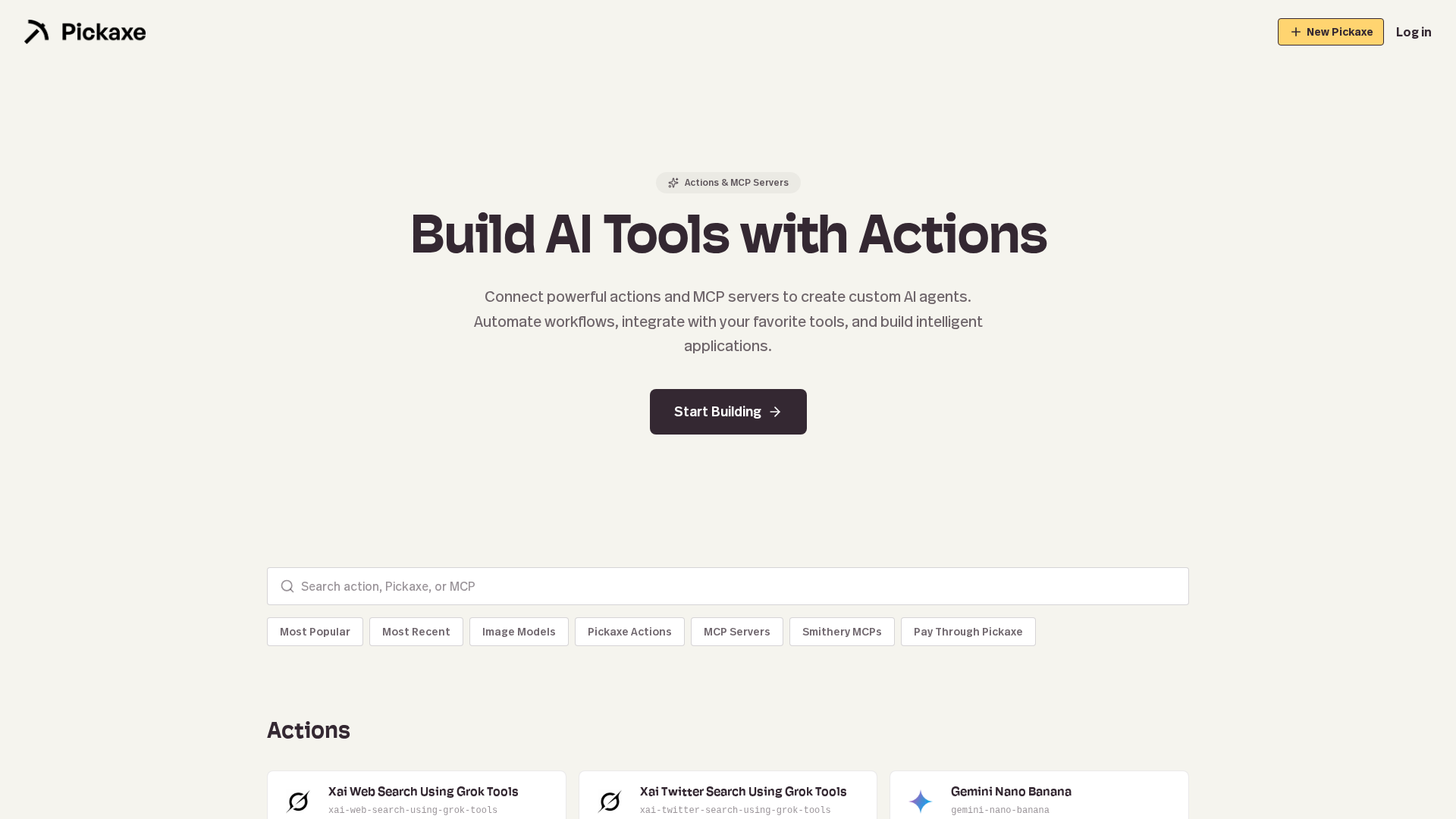

MCP Server Rankings – Top 5

Fast and secure execution of your AI-generated code with Daytona sandboxes

An MCP server that runs YepCode processes securely in sandboxed environments for AI tooling.

Deploy, configure & interrogate your resources on the Cloudflare developer platform (e.g. Workers/KV/R2/D1)

Deploy code to Google Cloud Run

Turn your GraphQL API into an efficient MCP server with schema intelligence in a single command.
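All of the servers listed above speak MCP, which frames tool invocations as JSON-RPC 2.0 messages using the `tools/call` method. The sketch below shows roughly what an agent-side request might look like. The tool name `run_code` and its arguments are hypothetical placeholders, not the actual tool schema of any server listed here; a real client would first discover the available tools with `tools/list`.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC uses the id to match responses to requests.
_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message (dict)."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    "run_code",
    {"language": "python", "code": "print(2 + 2)"},
)

# Serialized form, as it would be sent over the transport (stdio or HTTP).
wire_message = json.dumps(request)
```

Because the envelope is the same for every server, an agent can route calls to a sandbox runtime, a cloud deployment tool, or a GraphQL-backed server by changing only the tool name and arguments.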