Topic Overview

This topic covers best practices and provider patterns for hosting high-performance large language models on modern accelerators (H200/H800) across cloud and on-prem footprints, with a focus on hypervisor, cloud platform, and Kubernetes integrations. Demand for LLM inference and fine-tuning at scale has pushed teams to combine hardware-aware orchestration (GPU passthrough, device partitioning, SR-IOV/MIG) with platform tooling that exposes infrastructure to model workflows in a safe, auditable way.

Key tooling trends include MCP (Model Context Protocol) servers and Kubernetes integrations that standardize how models and LLM-driven agents discover and operate on cluster and cloud resources. Examples: an MCP Server for Kubernetes provides a unified MCP-based interface to manage pods, deployments, and services; mcp-k8s-go is a Golang MCP server for browsing pods, logs, events, and namespaces; an AWS MCP server exposes S3 and DynamoDB operations through MCP to enable LLM-powered resource operations; and the Azure MCP Hub curates MCP servers and guidance for Azure deployments. These tools make it easier to integrate LLMs with orchestration layers while preserving least-privilege access and traceability.

As of 2025-12-14, organizations are balancing performance (low latency, high throughput) against cost, data residency, and compliance. That trade-off is driving mixed-cloud deployments, on-prem GPU farms, and hypervisor-based VM models alongside Kubernetes-native deployments. Practical considerations include accelerator selection, cluster networking, node sizing, operator support for model parallelism and quantized runtimes, and the use of MCP patterns to enable controlled LLM interactions with infrastructure. This ecosystem-oriented view helps teams choose deployment architectures that align performance goals with operational controls and provider capabilities.
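To make the accelerator selection and node sizing considerations concrete, here is a rough back-of-the-envelope sketch of how weight precision affects the number of GPUs needed to hold a model. The per-GPU memory figures (141 GB for H200, 80 GB for H800), the 20% overhead factor, and the function name `gpus_needed` are assumptions for illustration; real sizing also depends on KV cache, batch size, and the runtime in use.

```python
import math

# Per-GPU memory in GB. Assumed from public spec sheets; verify for your SKU.
GPU_MEMORY_GB = {"H200": 141, "H800": 80}

# Bytes per parameter for common weight formats (quantized runtimes use fewer).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def gpus_needed(params_billion, dtype, gpu, overhead=1.2):
    """Estimate the minimum GPU count to hold the weights plus headroom.

    `overhead` is an assumed multiplier covering activations and runtime
    buffers; it is a placeholder, not a measured value.
    """
    # 1e9 params * bytes per param / 1e9 bytes per GB cancels to this product.
    weights_gb = params_billion * BYTES_PER_PARAM[dtype]
    required_gb = weights_gb * overhead
    return math.ceil(required_gb / GPU_MEMORY_GB[gpu])


if __name__ == "__main__":
    for dtype in ("fp16", "int8", "int4"):
        for gpu in ("H200", "H800"):
            print(f"70B {dtype} on {gpu}: {gpus_needed(70, dtype, gpu)} GPU(s)")
```

Under these assumptions a 70B-parameter model in fp16 needs at least two H200s or three H800s for weights alone, while a 4-bit quantized copy fits on a single device of either type. That gap is one reason quantized runtimes figure so heavily in node sizing.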

MCP Server Rankings – Top 4

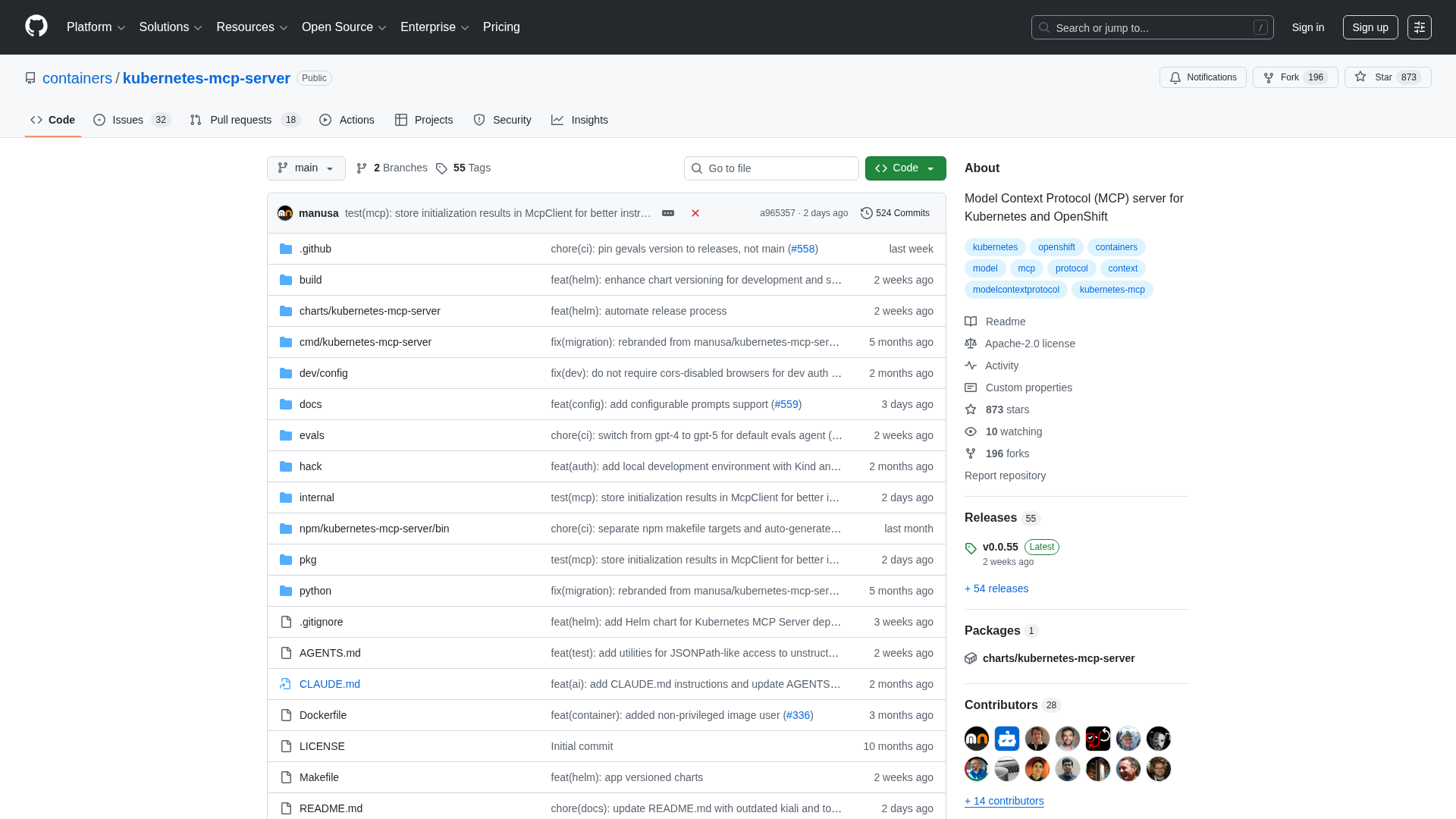

Connect to a Kubernetes cluster and manage pods, deployments, and services.

Golang-based MCP server to browse Kubernetes pods, logs, events, and namespaces.

Perform operations on your AWS resources using an LLM.

A curated list of all MCP servers and related resources for Azure developers by
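
The servers listed above expose infrastructure operations as MCP tools, which clients invoke with JSON-RPC 2.0 `tools/call` requests. As a minimal sketch of the least-privilege and traceability idea, the snippet below builds such a request only for tools on an allowlist and records every attempt. The tool names (`list_pods`, `get_pod_logs`, `list_events`, `delete_pod`) and the helper `build_tool_call` are hypothetical; a real server advertises its own tool names through `tools/list`.

```python
import itertools
import json

# Hypothetical read-only tool names; real servers publish theirs via "tools/list".
ALLOWED_TOOLS = {"list_pods", "get_pod_logs", "list_events"}

_request_ids = itertools.count(1)
audit_log = []  # in-memory trail of every attempted call, allowed or not


def build_tool_call(tool, arguments):
    """Return an MCP "tools/call" JSON-RPC request if the tool is allowlisted."""
    allowed = tool in ALLOWED_TOOLS
    audit_log.append({"tool": tool, "allowed": allowed})
    if not allowed:
        raise PermissionError(f"tool {tool!r} is not on the allowlist")
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


if __name__ == "__main__":
    request = build_tool_call("list_pods", {"namespace": "default"})
    print(json.dumps(request, indent=2))
    try:
        build_tool_call("delete_pod", {"name": "web-0"})
    except PermissionError as err:
        print("blocked:", err)
    print(audit_log)
```

Gating on the client side like this complements, rather than replaces, server-side controls such as Kubernetes RBAC or cloud IAM policies scoped to the MCP server's credentials.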