Topic Overview

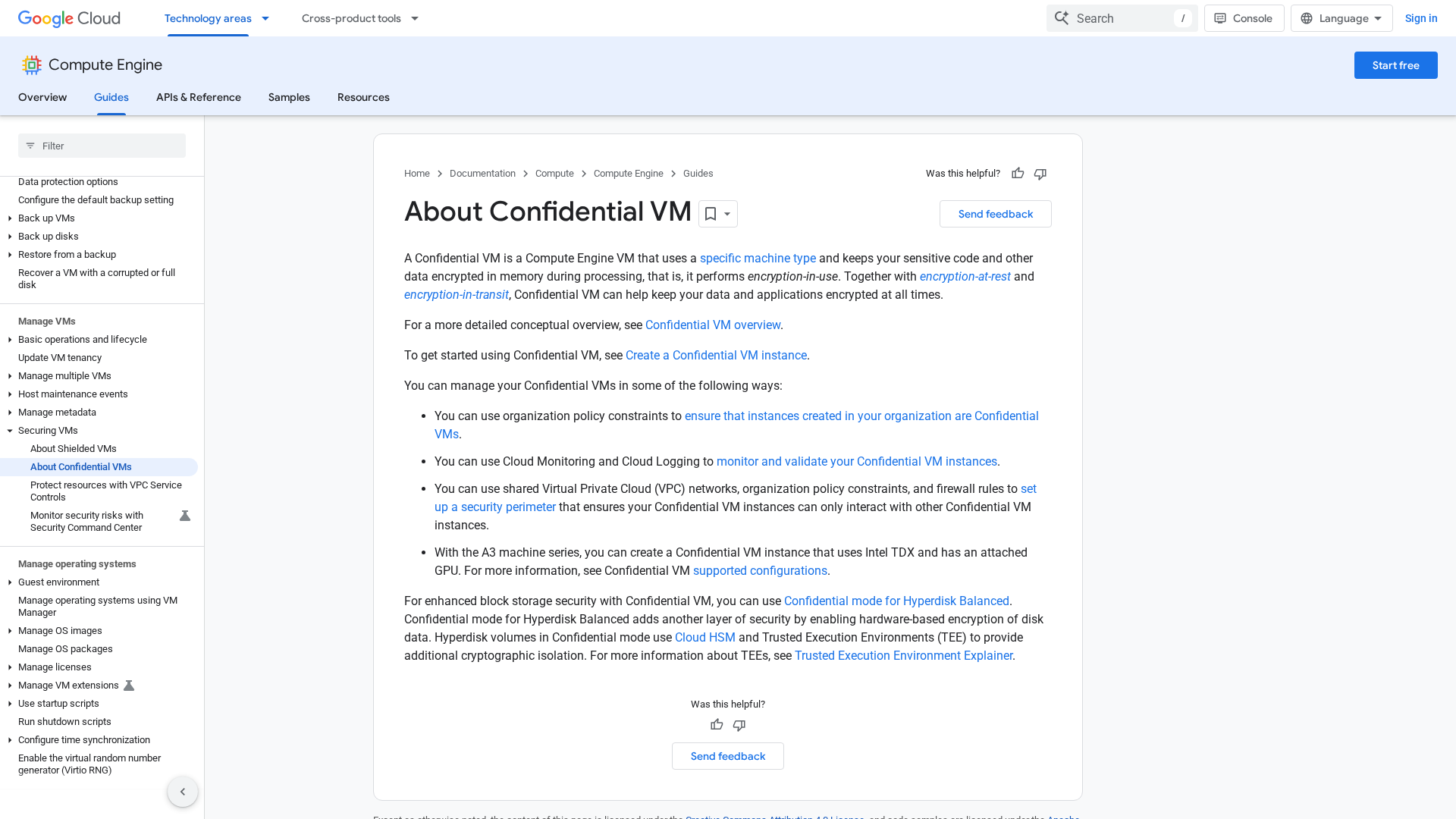

This topic covers techniques and toolkits for running AI workloads while minimizing data exposure: trusted execution environments (TEEs), confidential VMs and subnets, and privacy-preserving inference methods such as multiparty computation (MPC) and homomorphic encryption. It focuses on where secure computation primitives meet the platforms and governance controls needed to deploy agentic and generative AI in regulated or high-risk contexts.

Its relevance in 2026 stems from three converging trends: broader regulatory pressure on data residency and model transparency; the proliferation of agentic and automated workflows that interact with many services; and the growing deployment of inference at the edge and in multi-party environments. Organizations must balance performance and developer ergonomics against the need for attestation, auditability, and provable data-handling guarantees.

Key tools and categories in this space include infrastructure and governance platforms (Xilos, for visibility into agentic AI activity and service connections; Together AI, for end-to-end acceleration and serverless inference that can be paired with confidential compute backends); no-code/low-code agent platforms (StackAI, Lindy) that simplify secure agent deployment and policy controls; code and SDLC governance tools (Qodo, CodeRabbit) that automate context-aware code review, test generation, and security checks; and edge-friendly models (Stable Code) that reduce data egress by enabling private, on-device inference.

Practical adoption requires combining runtime protections (TEEs, confidential VMs, attestation), cryptographic techniques for specific workflows, and lifecycle tooling for reproducibility and audit. The trade-offs include throughput and latency limits, integration complexity, and the need for clear governance over attestation and provenance.
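Of the privacy-preserving inference methods mentioned above, multiparty computation is easiest to see on a single linear layer. The sketch below is a minimal illustration, not how any of the listed platforms implement MPC: it uses two-party additive secret sharing over a prime field. The client splits its input into random shares, two non-colluding servers each apply the weight matrix to their own share, and the client adds the output shares to recover W·x. The modulus P, the function names, and the assumption that the servers know the weights (so only the input is protected) are illustrative choices; non-linear layers need interactive protocols and are out of scope here.

```python
import secrets

# Prime modulus for the finite field; shares and results live in Z_P.
# A Mersenne prime is used here purely for convenience (assumption).
P = 2**61 - 1


def share(x: int, n_parties: int = 2) -> list[int]:
    """Split x into n additive shares that sum to x mod P."""
    shares = [secrets.randbelow(P) for _ in range(n_parties - 1)]
    shares.append((x - sum(shares)) % P)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine additive shares into the original value mod P."""
    return sum(shares) % P


def server_linear(weights: list[list[int]], x_share: list[int]) -> list[int]:
    """One server applies the weight matrix to its share locally.

    Linear maps commute with addition, so no communication is needed here.
    """
    return [sum(w * s for w, s in zip(row, x_share)) % P for row in weights]


def private_linear_layer(weights: list[list[int]], x: list[int]) -> list[int]:
    # Client: secret-share each input element between two servers.
    per_element = [share(v) for v in x]
    share_a = [s[0] for s in per_element]
    share_b = [s[1] for s in per_element]
    # Servers: each sees only a uniformly random share, never x itself.
    out_a = server_linear(weights, share_a)
    out_b = server_linear(weights, share_b)
    # Client: add the output shares to recover W @ x mod P.
    return [reconstruct([a, b]) for a, b in zip(out_a, out_b)]


W = [[1, 2, 3], [4, 5, 6]]
x = [7, 8, 9]
print(private_linear_layer(W, x))  # [50, 122]
```

Negative values are represented as large residues mod P, so a real deployment would also need a fixed-point encoding for weights and activations.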
For teams building privacy‑sensitive AI, the current best practice is to treat secure compute, model lifecycle controls, and developer tooling as an integrated stack rather than isolated components.
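The attestation piece of that integrated stack often takes the form of evidence-gated key release: a verifier hands a secret (for example, a data-decryption key) to a workload only after checking that its attestation report is authentic, fresh, and describes approved code. The sketch below simulates that flow with the Python standard library under loudly stated assumptions: HARDWARE_KEY, Quote, make_quote, and release_key_if_trusted are hypothetical names, and the HMAC "signature" stands in for the vendor-certified asymmetric signature that a real TEE or confidential-VM quote carries.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL stand-in for the hardware root of trust. Real quotes are
# signed with vendor-certified asymmetric keys, not a shared HMAC secret.
HARDWARE_KEY = secrets.token_bytes(32)


@dataclass
class Quote:
    measurement: str  # hash of the code/config loaded into the TEE
    nonce: bytes      # verifier-supplied challenge, proves freshness
    signature: bytes


def _payload(measurement: str, nonce: bytes) -> bytes:
    return measurement.encode() + b"|" + nonce


def make_quote(workload_image: bytes, nonce: bytes) -> Quote:
    """Simulate the TEE measuring its workload and signing the result."""
    measurement = hashlib.sha256(workload_image).hexdigest()
    sig = hmac.new(HARDWARE_KEY, _payload(measurement, nonce), hashlib.sha256).digest()
    return Quote(measurement, nonce, sig)


def release_key_if_trusted(quote: Quote, expected_nonce: bytes,
                           allowed_measurements: set[str],
                           data_key: bytes) -> Optional[bytes]:
    """Release the data key only to a fresh quote from an approved workload."""
    expected_sig = hmac.new(HARDWARE_KEY, _payload(quote.measurement, quote.nonce),
                            hashlib.sha256).digest()
    if not hmac.compare_digest(expected_sig, quote.signature):
        return None  # not signed by the (simulated) hardware
    if not hmac.compare_digest(quote.nonce, expected_nonce):
        return None  # replayed or stale quote
    if quote.measurement not in allowed_measurements:
        return None  # unapproved code or configuration
    return data_key


# Demo with a hypothetical workload image and allowlist.
approved_image = b"inference-server v1.2"
allowlist = {hashlib.sha256(approved_image).hexdigest()}
nonce = secrets.token_bytes(16)
key = secrets.token_bytes(32)

good = make_quote(approved_image, nonce)
print(release_key_if_trusted(good, nonce, allowlist, key) == key)  # True
bad = make_quote(b"tampered image", nonce)
print(release_key_if_trusted(bad, nonce, allowlist, key) is None)  # True
```

Keeping the allowlist of measurements under change control is where the governance over attestation and provenance described above becomes concrete: approving a new model server build means adding its measurement, and everything else is refused by default.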

Tool Rankings – Top 6

Intelligent Agentic AI Infrastructure

A full-stack AI acceleration cloud for fast inference, fine-tuning, and scalable GPU training.

End-to-end no-code/low-code enterprise platform for building, deploying, and governing AI agents that automate work on…

Quality-first AI coding platform for context-aware code review, test generation, and SDLC governance across multi-repo, team…

Edge-ready code language models for fast, private, and instruction‑tuned code completion.

No-code/low-code AI agent platform to build, deploy, and govern autonomous AI agents.

Latest Articles (67)

OpenAI’s bypass moment underscores the need for governance that survives inevitable user bypass and hardens system controls.

A call to enable safe AI use at work via sanctioned access, real-time data protections, and frictionless governance.

Baseten launches an AI training platform to compete with hyperscalers, promising simpler, more transparent ML workflows.

Explores the human role behind AI automation and how Bell Cyber tackles AI hallucinations in security operations.

A real-world look at AI in SOCs, debunking myths and highlighting the human role behind automation with Bell Cyber experts.