Overview

LlamaIndex is a developer-first platform for turning unstructured content into production-grade AI document agents and retrieval-augmented generation (RAG) applications. The offering centers on three products: LlamaCloud (enterprise document parsing, extraction, and indexing), Workflows (an async-first orchestration engine for multi-step agents), and the LlamaIndex framework/SDK (core building blocks such as state, memory, and connectors). It supports layout-aware, multimodal parsing across 90+ file types, extraction with page citations and confidence scores, and connectors to major LLMs and vector stores. The platform targets enterprise use cases in finance, insurance, healthcare, and manufacturing, with support for compliance requirements, private (VPC) deployments, and scalable SaaS usage, plus developer SDKs in Python and TypeScript. The public site does not list a launch year.

Pricing mechanics: LlamaIndex uses a credit-based usage model. Each action (parsing, indexing, extraction) and each parsing mode consumes a different number of credits; for example, Standard indexing costs 1 credit per page, while extraction modes vary widely. The developer docs break down per-page and per-minute costs by mode. The dollar price of credits depends on region: about $1.00 per 1,000 credits in North America (NA) and $1.50 per 1,000 credits in the EU. Caching reduces costs for repeated operations. Detailed per-mode and per-region rates are listed in the developer pricing docs.
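To make the credit arithmetic concrete, here is a minimal Python sketch that uses only the figures quoted in this overview: Standard indexing at 1 credit per page, and $1.00 (NA) or $1.50 (EU) per 1,000 credits. The function names and the rate table are illustrative assumptions, not part of any LlamaIndex SDK; other modes and regions would need rates from the official pricing docs, and the sketch does not model caching discounts.

```python
from decimal import Decimal

# Illustrative rate table (USD per 1,000 credits), using only the
# NA and EU examples quoted in the text; other regions are not modeled.
USD_PER_1000_CREDITS = {
    "NA": Decimal("1.00"),
    "EU": Decimal("1.50"),
}


def credits_for_pages(pages: int, credits_per_page: int) -> int:
    """Credits consumed by a per-page action, e.g. Standard indexing at 1 credit/page."""
    if pages < 0 or credits_per_page < 0:
        raise ValueError("pages and credits_per_page must be non-negative")
    return pages * credits_per_page


def credits_to_usd(credits: int, region: str) -> Decimal:
    """Convert a credit count to US dollars with the region's rate (KeyError if unknown)."""
    return Decimal(credits) * USD_PER_1000_CREDITS[region] / Decimal(1000)


# Example: index 5,000 pages in Standard mode and price the job in each region.
used = credits_for_pages(pages=5_000, credits_per_page=1)
print(used, credits_to_usd(used, "NA"), credits_to_usd(used, "EU"))  # 5000 5.00 7.50
```

Using `Decimal` rather than `float` keeps the dollar amounts exact, which matters when small per-page charges are summed over large document sets.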

Key Features

Layout-aware Document Parsing

Parses complex page layouts including nested tables, headers/footers, and handwriting to preserve structure and context.

Multimodal Extraction

Extracts structured data from images, charts, tables, audio, and scanned PDFs with citations and confidence scores.

LlamaCloud Indexing

Chunking and embedding pipelines optimized for accurate RAG and retrieval at scale.
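Chunking is the step that splits parsed text into overlapping pieces before each piece is embedded. The sketch below shows the general idea with a word-based sliding window; it is a generic illustration, not LlamaCloud's own pipeline, whose internals are not described here. The `chunk_words` name and its word-count parameters are assumptions; LlamaIndex's own node parsers (such as `SentenceSplitter`) size chunks in tokens rather than words.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into windows of `chunk_size` words; each window repeats the
    last `overlap` words of the previous one so context spans chunk borders."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```

The overlap trades a little storage for retrieval quality: a sentence cut at a chunk boundary still appears whole in at least one neighboring chunk.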

Workflows Orchestration

Async-first, event-driven framework to construct multi-step, stateful AI agents with human-in-the-loop support.
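In an async-first, event-driven design, each step reacts to one event type and emits the next event until a terminal event ends the run. The standard-library sketch below illustrates that pattern only: `MiniWorkflow`, the `Start`/`Parsed`/`Done` event classes, and the `step` decorator are hypothetical names, not the LlamaIndex Workflows API, and the sketch omits shared state, concurrent steps, and human-in-the-loop pauses.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, Dict


@dataclass
class Start:
    document: str


@dataclass
class Parsed:
    text: str


@dataclass
class Done:
    result: str


Handler = Callable[[Any], Awaitable[Any]]


class MiniWorkflow:
    """Routes each event to the step registered for its type until a Done event appears."""

    def __init__(self) -> None:
        self._steps: Dict[type, Handler] = {}

    def step(self, event_type: type) -> Callable[[Handler], Handler]:
        def register(fn: Handler) -> Handler:
            self._steps[event_type] = fn
            return fn
        return register

    async def run(self, event: Any, max_steps: int = 100) -> str:
        for _ in range(max_steps):
            if isinstance(event, Done):
                return event.result
            handler = self._steps[type(event)]  # KeyError if no step handles this event
            event = await handler(event)
        raise RuntimeError("workflow did not emit Done within max_steps")


wf = MiniWorkflow()


@wf.step(Start)
async def parse(ev: Start) -> Parsed:
    # Stand-in for a parsing step: just trim whitespace.
    return Parsed(text=ev.document.strip())


@wf.step(Parsed)
async def finish(ev: Parsed) -> Done:
    # Stand-in for an extraction or summary step.
    return Done(result=ev.text.upper())


print(asyncio.run(wf.run(Start(document="  invoice 42  "))))  # INVOICE 42
```

Because steps are coroutines keyed by event type, adding a stage means registering one more handler rather than rewriting a fixed call chain.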

Connector Library

Built-in connectors for major LLMs, vector stores, and data sources (OpenAI, Anthropic, Weaviate, S3, Google Drive, etc.).

SDKs and Extensibility

Python and TypeScript SDKs, plus a community hub (LlamaHub) for data loaders and custom tools.

Who Can Use This Tool?

- Developers: Build RAG apps and AI agents using Python/TypeScript SDKs and connectors.

- Data Teams: Automate document ingestion, parsing, and extraction for analytics and search.

- Enterprises: Deploy secure, scalable document workflows and private VPC solutions for regulated data.

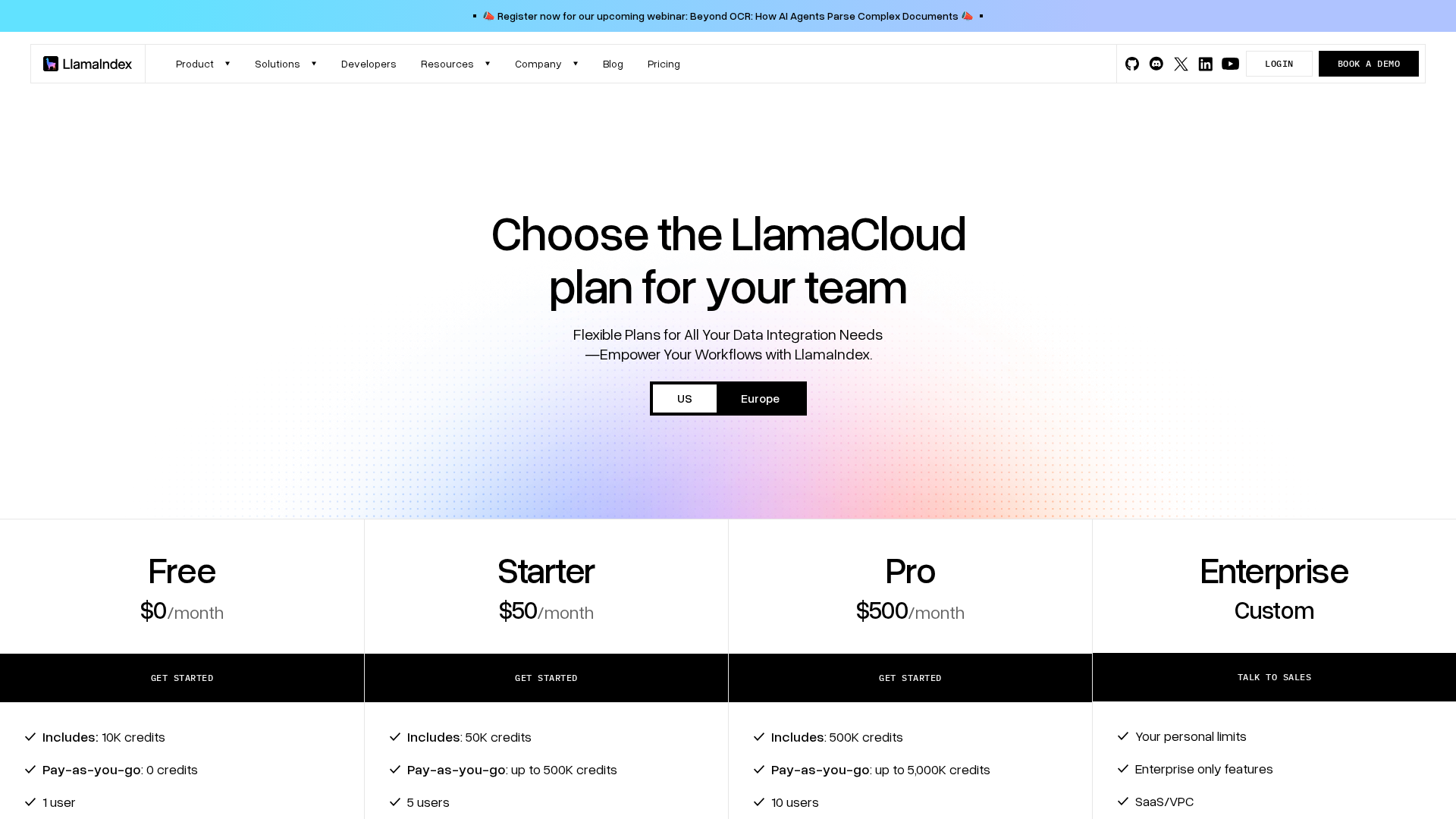

Pricing Plans

Entry tier with limited credits and single-user access for evaluation.

- ✓ Includes: 10,000 credits

- ✓ 1 user

- ✓ File upload capability

- ✓ Basic support

- ✓ Pay-as-you-go credits: none

- ✓ Access to core parsing and indexing features (limited)

- ✓ Free to start, with sandbox access

Low-cost team tier with moderate credits and basic integrations.

- ✓ Includes: 50,000 credits

- ✓ Up to 500,000 pay-as-you-go credits

- ✓ Up to 5 users

- ✓ Up to 5 external data sources

- ✓ Basic support

- ✓ Integrations with common data sources (S3, Google Drive, etc.)

- ✓ File upload and project management

Production-ready plan for larger teams with higher credits and expanded integrations.

- ✓ Includes: 500,000 credits

- ✓ Up to 5,000,000 pay-as-you-go credits

- ✓ Up to 10 users

- ✓ Up to 25 external data sources

- ✓ Slack support

- ✓ Higher project/index limits and concurrency

- ✓ Access to advanced parsing modes and connectors

Custom enterprise plan with tailored limits, dedicated support, and private deployment options.

- ✓ Custom credits and limits

- ✓ Enterprise-only features and integrations

- ✓ SaaS or private VPC deployment options

- ✓ Dedicated support and customer success

- ✓ Increased concurrency and scale for large workloads

- ✓ Marketplace availability (AWS, Azure) for LlamaCloud
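The credit allowances in these plans can be checked against expected monthly usage. This Python sketch picks the smallest listed tier whose included credits plus pay-as-you-go cap cover a given volume. The tier labels are hypothetical (plan names are not given here), and it assumes the pay-as-you-go limit is counted on top of the included credits; the official pricing page is the authority on how overages are actually billed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str              # hypothetical label
    included_credits: int
    max_payg_credits: int  # assumed: extra credits allowed beyond the included ones


# Credit figures from the plan lists; the enterprise plan is custom, so it is omitted.
TIERS = [
    Tier("entry", 10_000, 0),
    Tier("team", 50_000, 500_000),
    Tier("production", 500_000, 5_000_000),
]


def payg_needed(tier: Tier, monthly_credits: int) -> int:
    """Credits beyond the tier's included allocation (0 when usage fits)."""
    return max(0, monthly_credits - tier.included_credits)


def smallest_fitting_tier(monthly_credits: int) -> Optional[Tier]:
    """First tier (ordered by size) whose pay-as-you-go cap covers the overage."""
    for tier in TIERS:
        if payg_needed(tier, monthly_credits) <= tier.max_payg_credits:
            return tier
    return None  # beyond every listed cap: a custom enterprise plan


for usage in (8_000, 120_000, 600_000, 6_000_000):
    tier = smallest_fitting_tier(usage)
    print(usage, tier.name if tier else "enterprise (custom)")
```

With these figures, 8,000 credits fit the entry tier, 120,000 need the team tier, 600,000 need the production tier, and 6,000,000 exceed every listed cap.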

Pros & Cons

✓ Pros

- ✓ Enterprise-grade document parsing and extraction (90+ formats, layout-aware)

- ✓ Strong developer tooling: Python and TypeScript SDKs with many connectors

- ✓ Open-source components (Framework and Workflows) plus a commercial cloud service (LlamaCloud)

- ✓ Flexible deployment: SaaS and private VPC options for enterprises

- ✓ Fine-grained, credit-based pricing allows usage-based cost control

✗ Cons

- ✗ Credit-based pricing is complex and may require careful cost planning

- ✗ Some pages (e.g., /features) returned a 404 at review time and may have been moved or removed

- ✗ Pricing varies by mode and region, and the many options can confuse new users

- ✗ Launch year and some corporate details are not listed on the marketing site

Compare with Alternatives

| Feature | LlamaIndex | RagaAI | StackAI |
|---|---|---|---|
| Pricing | $50/month | N/A | N/A |
| Rating | 8.8/10 | 8.2/10 | 8.4/10 |
| Parsing Fidelity | Layout-aware multimodal parsing | Multimodal but not layout-focused | Multimodal handling, layout parsing unclear |
| Indexing & Storage | Cloud indexing with scalable vector stores | Limited indexing, focus on evaluation and tracing | Enterprise RAG indexing with on-prem options |
| Workflow Orchestration | Yes | Yes | Yes |
| Agent Observability | No | Yes | Yes |
| Connector Ecosystem | Yes | Partial | Yes |
| SDK Extensibility | Yes | Yes | Partial |
| Governance & Security | Yes | Yes | Yes |
| Deployment Flexibility | API and browser, cloud and local library support | Web/API with enterprise deployment options | On-prem, VPC, and cloud with enterprise compliance |

Related Articles (9)

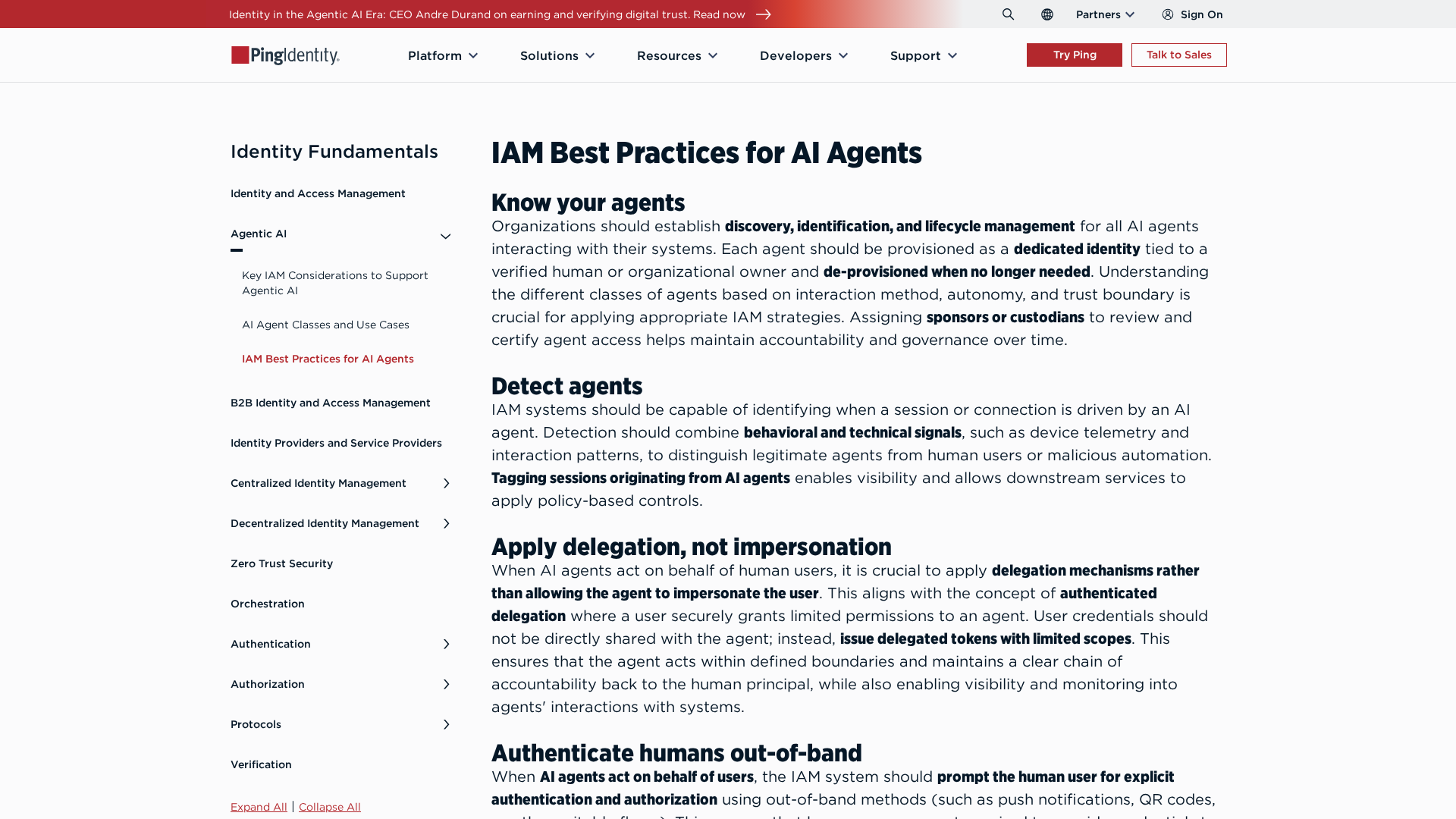

Best-practices for securing AI agents with identity management, delegated access, least privilege, and human oversight.

Google expands Canvas travel planning, global Flight Deals, and agentic booking to handle travel research and reservations inside Search AI Mode.

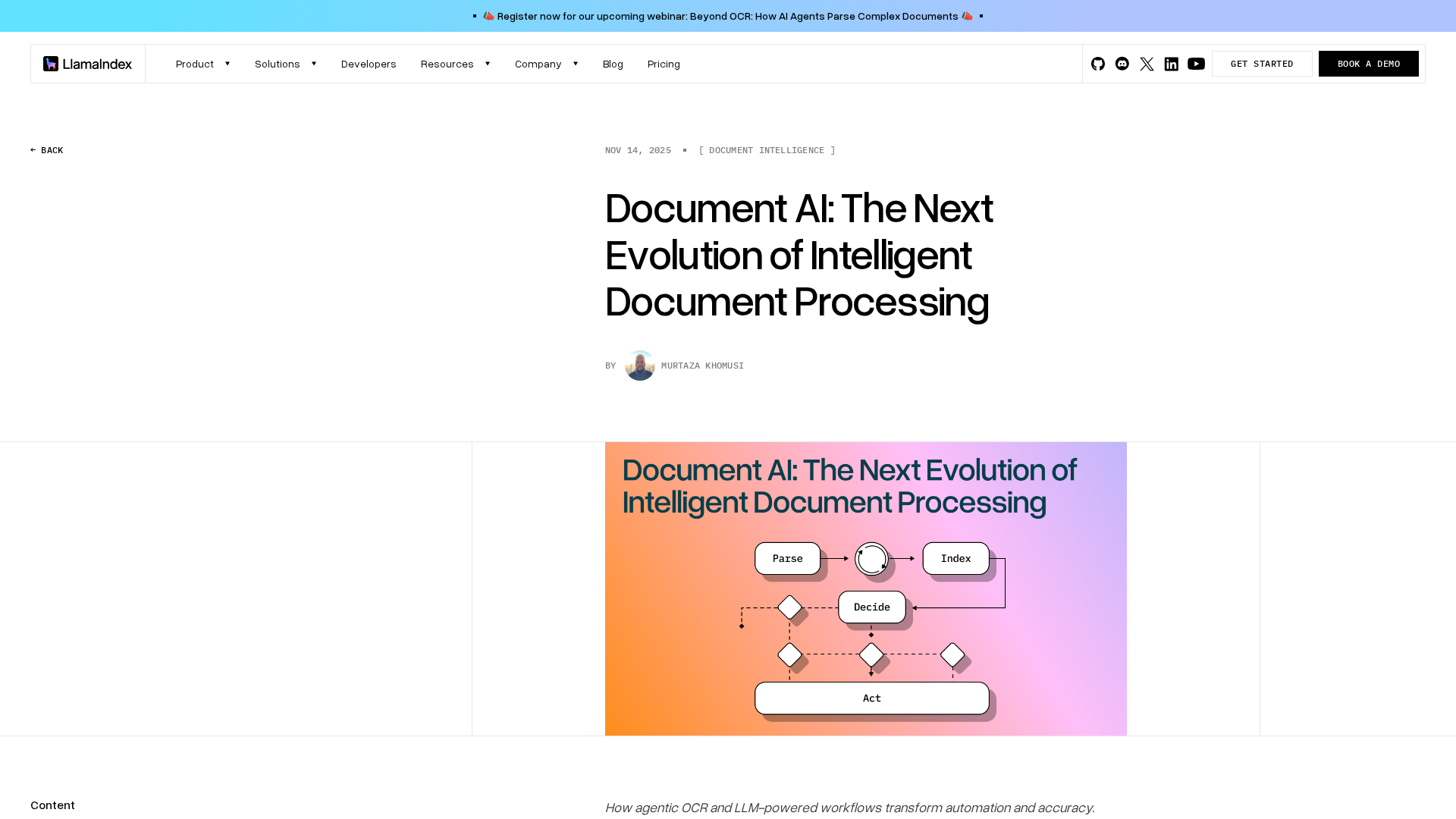

Explains how agentic OCR and LLM-powered workflows enable autonomous, high-accuracy document processing with the LlamaIndex stack.

A roundup of AI agent workflows, enterprise partnerships, case studies, and events from LlamaIndex.

A lightweight PyPI package pairing LlamaIndex embeddings with Ollama, with both source and wheel distributions available for easy installation.