Overview

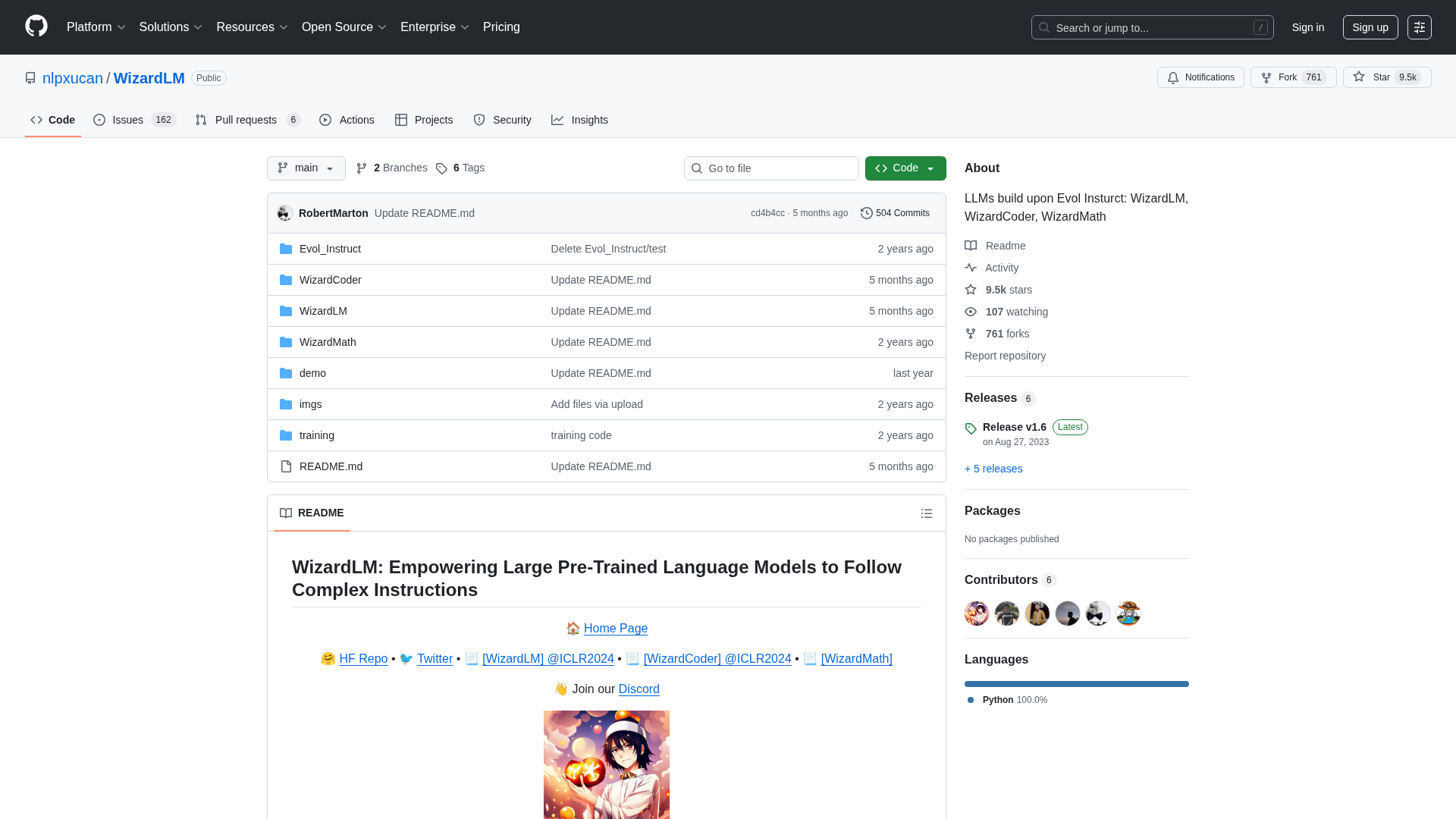

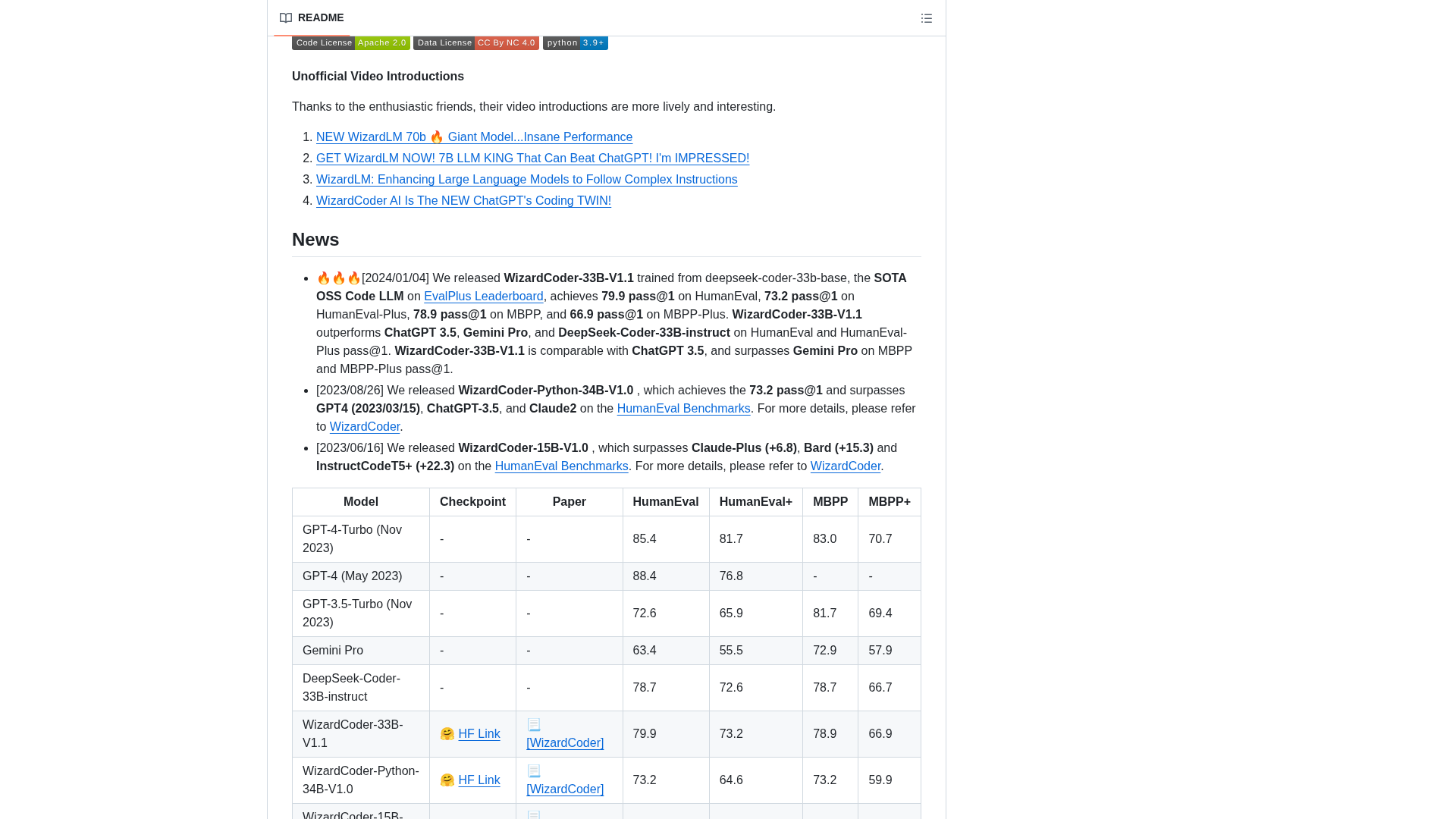

Repository: nlpxucan/WizardLM hosts a family of open-source instruction-following large language models (WizardLM, WizardCoder, WizardMath) trained with the Evol-Instruct paradigm. The README and releases describe the model family, the Evol-Instruct method, the model variants, benchmark results, prompt recipes, and reproducibility guidance.

Key released models: The README and Hugging Face (HF) model cards list WizardCoder-33B-V1.1 (reported HumanEval pass@1 of 79.9), WizardCoder-34B and other WizardCoder variants, WizardMath-70B-V1.0 and 7B variants (reported strong GSM8K and MATH results), and WizardLM-70B/30B/13B/7B variants with reported improvements on GSM8K, HumanEval, and other benchmarks. Releases document iterative improvements across versions (e.g., v1.1 → v1.6).

Weights & distribution: Several released weights are available on Hugging Face (example: https://huggingface.co/WizardLMTeam/WizardCoder-33B-V1.1). Community quantized conversions are noted (e.g., TheBloke repositories hosting GGUF/AWQ/GPTQ variants). Some releases link to the corresponding HF model cards.

Licensing & usage restrictions: The canonical LICENSE file could not be fetched at the raw path (the request returned 404). The README and release notes explicitly restrict the resources, data, and weights to academic research and rule out commercial use, so the distribution conditions go beyond a standard permissive license. Confirm the legal terms before any commercial use: check the repository root (LICENSE, README, CONTRIBUTING) for license statements, check the license field of each HF model card, verify the terms of every distribution source (including community conversions), and contact the maintainers.

Data provenance & contamination: Training data notes (as reported) include applying Code Evol-Instruct to CodeAlpaca-20k for WizardCoder-33B-V1.1. The authors report contamination checks and deduplication efforts to reduce leakage; the README and HF model card include notes on contamination checks and reproducibility.

Usage, prompts, reproducibility: Reported prompt formats include Vicuna-style chat prompts for WizardLM, instruction-format (Evol-Instruct) prompts for WizardCoder and WizardMath, and CoT/Copy-Then-Think variants for the math models. The README and HF model card provide commands and guidance for reproducing evaluations (HumanEval, HumanEval-Plus, MBPP, MBPP-Plus), list dependencies (e.g., transformers 4.36.2, vLLM 0.2.5), and give example system prompts. Examples for running with and without vLLM are provided in the README/HF card.

Key Features

Model family variety

Multiple model lines (WizardLM, WizardCoder, WizardMath) covering instruction following, coding, and math reasoning.

Evol-Instruct training paradigm

Models are built using the Evol-Instruct method; README describes the training approach and variants.

Reported strong benchmarks

Reported strong results on HumanEval/MBPP (code) and GSM8K/MATH (math) for various released checkpoints (as stated in README/HF).
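For readers unfamiliar with the pass@1 figures quoted for HumanEval and MBPP, the sketch below shows the commonly used unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), where n samples are drawn per problem and c of them pass the tests. It is a minimal illustration only; the function names are hypothetical, and the repository's own evaluation scripts may compute the score differently (for example, with a single greedy sample per problem, where pass@1 reduces to the fraction of problems solved).

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed the tests, k: budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # math.comb(n - c, k) is 0 when k > n - c, so the estimate is then 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical outcome: four problems, one greedy sample each, three solved.
    print(mean_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 1)], k=1))
```

With k = 1 the estimator is simply c / n per problem, which is why a single-sample evaluation reports the share of problems whose one completion passes.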

Weights on Hugging Face

Several released weights are available on Hugging Face with model cards (example: WizardCoder-33B-V1.1).

Prompting & reproducibility guidance

README and HF model cards include example prompts, reproduction commands, dependencies, and instructions for running evaluations with and without vLLM.
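As a rough illustration of the two prompt styles mentioned here, the sketch below assembles an instruction-format prompt and a Vicuna-style multi-turn chat prompt as plain strings. The template wording, the `</s>` end-of-turn token, and the helper names are assumptions modelled on the widely used Alpaca-style and Vicuna-style formats; confirm the exact text against the model card of the checkpoint you use.

```python
# Assumed templates (not copied from the repository); verify before use.
INSTRUCTION_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)

VICUNA_SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the "
    "user's questions."
)


def build_instruction_prompt(instruction: str) -> str:
    """Single-turn instruction-format prompt (code and math style models)."""
    return INSTRUCTION_TEMPLATE.format(instruction=instruction.strip())


def build_chat_prompt(turns, system: str = VICUNA_SYSTEM, eos: str = "</s>") -> str:
    """Vicuna-style chat prompt.

    turns is a list of (user_message, assistant_reply) pairs; use None as
    the reply of the last pair to leave the prompt open for generation.
    """
    parts = [system, " "]
    for user, assistant in turns:
        parts.append(f"USER: {user.strip()} ASSISTANT:")
        if assistant is not None:
            parts.append(f" {assistant.strip()}{eos}")
    return "".join(parts)


if __name__ == "__main__":
    print(build_instruction_prompt("Write a function that reverses a string."))
    print(build_chat_prompt([("Hi", "Hello."), ("Who are you?", None)]))
```

Keeping prompt construction in small pure functions makes it easy to diff the generated string against the template on the model card before spending time on inference.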

Community quantized builds

Community conversions (GGUF/AWQ/GPTQ) are available in third-party HF repos (e.g., TheBloke) linked from releases or community pages.

Who Can Use This Tool?

- Researchers: Research on instruction-following LLMs, reproducibility experiments, and benchmark evaluation under research-only terms.

- Developers: Evaluating code generation and reasoning models for experimentation and non-commercial projects; check the license before commercial use.

- ML practitioners: Reproducing benchmark results, fine-tuning experiments, and testing prompt strategies with the provided reproduction commands.
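When reproducing reported numbers, it can help to confirm that the local environment matches the dependency versions named in the README/HF card (transformers 4.36.2, vLLM 0.2.5) before running anything. The following is a minimal sketch using only the standard library; the function name and the `lookup` parameter are illustrative, and the PyPI distribution names `transformers` and `vllm` are assumed.

```python
from importlib import metadata

# Versions as named in the text above; any other pin would be an assumption.
EXPECTED = {"transformers": "4.36.2", "vllm": "0.2.5"}


def check_versions(expected=EXPECTED, lookup=metadata.version):
    """Return {package: (expected, installed_or_None)} for every mismatch."""
    mismatches = {}
    for name, wanted in expected.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in check_versions().items():
        print(f"{name}: expected {wanted}, found {found}")
```

An empty result means the installed versions match; anything else is worth recording alongside the benchmark output so that score differences can be traced back to the environment.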

Pricing Plans

Models and resources are provided under research/non-commercial usage restrictions as stated in README/release notes; verify license before commercial use.

- ✓ Model weights available on Hugging Face for research use (where provided)

- ✓ Reproducibility instructions and prompt templates included

- ✓ Community quantized variants available separately

Third-party community quantized conversions (GGUF/AWQ/GPTQ) available; check each source for its license/terms.

- ✓ Community-hosted downloads (e.g., TheBloke on HF)

- ✓ May have different terms or redistribution restrictions
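Because terms can differ per source, one practical check is to read the `license:` entry in the YAML front matter at the top of each Hugging Face model card (the card's README.md). The sketch below parses that field from card text you have already downloaded; the helper names, the permissive-license list, and the sample card are hypothetical and say nothing about what the actual WizardLM or community cards declare.

```python
import re


def card_license(card_text: str):
    """Return the `license:` value from a model card's YAML front matter."""
    match = re.match(r"\A---\s*\n(.*?)\n---\s*(?:\n|\Z)", card_text, re.DOTALL)
    if not match:
        return None  # no front matter block found
    for line in match.group(1).splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "license":
            return value.strip().strip("'\"") or None
    return None


def needs_review(license_id, permissive=("apache-2.0", "mit", "bsd-3-clause")):
    """Flag cards with no license field or a license outside the given list."""
    return license_id is None or license_id.lower() not in permissive


if __name__ == "__main__":
    # Hypothetical card text, for illustration only.
    sample = "---\nlicense: other\ntags:\n- code\n---\n# Example model\n"
    found = card_license(sample)
    print(found, needs_review(found))
```

A flagged card is only a prompt to read the full terms and, where needed, contact the maintainers; the metadata field alone does not settle whether commercial use is allowed.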

Pros & Cons

✓ Pros

- ✓ Comprehensive model family covering instruction following, code, and math

- ✓ Detailed README and HF model cards with reproducibility instructions and prompt recipes

- ✓ Weights available on Hugging Face for several released checkpoints

- ✓ Community support for quantized builds (GGUF/AWQ/GPTQ)

✗ Cons

- ✗ Canonical LICENSE file at the repository raw path appears missing (raw LICENSE returned 404)

- ✗ README and release notes state research/non-commercial restrictions, not a permissive commercial license

- ✗ License ambiguity requires confirmation before commercial use

- ✗ Community conversions may carry different or unclear licensing/redistribution terms

Compare with Alternatives

| Feature | nlpxucan/WizardLM | Code Llama | StarCoder |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.6/10 | 8.8/10 | 8.7/10 |
| Instruction Alignment | Yes | Partial | Partial |
| Code Specialization | Partial | Yes | Yes |
| Math Reasoning | Yes | No | Partial |
| Context Window | Varied context window across models | Large context variants available | Long-context support |
| Local Inference | Yes | Yes | Yes |
| Model Variety | Yes | Yes | Partial |
| Weights & Reproducibility | Yes | Yes | Yes |
