Topic Overview

This topic covers the practical trade‑offs and integration patterns between private OLLM compute providers (often labeled AILO/OLLm) and other confidential‑compute solutions used to run AI workloads without exposing sensitive data. It examines how enterprises and decentralized AI projects combine hardware attestation, enclave‑style isolation, self‑hosted models, and governance tooling to meet data‑privacy, IP‑protection, and compliance requirements.

Relevance (2025): regulatory pressure, growing adoption of open‑weight models, and increasing client demand for provenance and auditability have accelerated deployments that keep model execution and data inside controlled boundaries. At the same time, hardware advances (confidential VMs, TEEs on Intel/AMD/ARM), cryptographic techniques (MPC, split‑compute), and self‑hosted stacks make multiple viable architectures available.

Key tool roles: Tabnine and Tabby illustrate coding assistants optimized for private or self‑hosted deployments; Continue and LangChain represent open frameworks for embedding and orchestrating models and agents in developer pipelines; MindStudio and Anakin are no‑/low‑code platforms that bring enterprise controls and workflow automation to model usage; Harvey shows domain‑specific deployments requiring strict confidentiality; Cline demonstrates client‑side/local agents that minimize server exposure. These tools typically integrate with private OLLM providers, on‑prem inference, or confidential cloud runtimes, depending on risk and performance needs.

Takeaways: choose based on trust boundary (who must not see data), audit/attestation needs, latency and cost, and developer ecosystem support. Private OLLM providers simplify managed model hosting under enclave guarantees, while self‑hosted and decentralized approaches maximize control and can better satisfy bespoke governance, though they often require additional orchestration and evaluation via platforms such as LangChain, Continue, or no‑code governance layers.
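The selection criteria in the takeaways can be sketched as a small decision helper. This is only an illustration under stated assumptions: the `Requirements` fields, the `Deployment` categories, and the rule ordering are hypothetical and not taken from any vendor or from the tools listed here. Latency, cost, and ecosystem support are left out to keep the sketch short.

```python
from dataclasses import dataclass
from enum import Enum


class Deployment(Enum):
    # Hypothetical categories mirroring the options named in the overview.
    PRIVATE_PROVIDER = "managed private OLLM provider (enclave-backed)"
    CONFIDENTIAL_CLOUD = "confidential cloud runtime (TEE / confidential VM)"
    SELF_HOSTED = "self-hosted / on-prem inference"


@dataclass
class Requirements:
    operator_may_see_data: bool  # trust boundary: may the host operator see plaintext?
    needs_attestation: bool      # is hardware attestation / audit evidence required?
    bespoke_governance: bool     # are custom governance controls required?
    can_operate_infra: bool      # can the team run its own orchestration?


def suggest_deployment(req: Requirements) -> Deployment:
    """Return a starting point for discussion, not a definitive answer."""
    # Assumed rule 1: bespoke governance favors maximum control,
    # provided the team can carry the extra orchestration work.
    if req.bespoke_governance and req.can_operate_infra:
        return Deployment.SELF_HOSTED
    # Assumed rule 2: a strict trust boundary or an attestation requirement
    # points to enclave-style isolation; managed if the team cannot run it.
    if req.needs_attestation or not req.operator_may_see_data:
        if req.can_operate_infra:
            return Deployment.CONFIDENTIAL_CLOUD
        return Deployment.PRIVATE_PROVIDER
    # Assumed rule 3: otherwise default to the simplest managed option.
    return Deployment.PRIVATE_PROVIDER


if __name__ == "__main__":
    req = Requirements(
        operator_may_see_data=False,
        needs_attestation=True,
        bespoke_governance=False,
        can_operate_infra=False,
    )
    print(suggest_deployment(req).value)
```

In practice the rules would be weighted against latency and cost budgets, and the outcome reviewed by whoever owns compliance rather than applied mechanically.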

Tool Rankings – Top 6

A no-code AI platform with 1000+ built-in AI apps for content generation, document search, automation, batch processing,

No-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

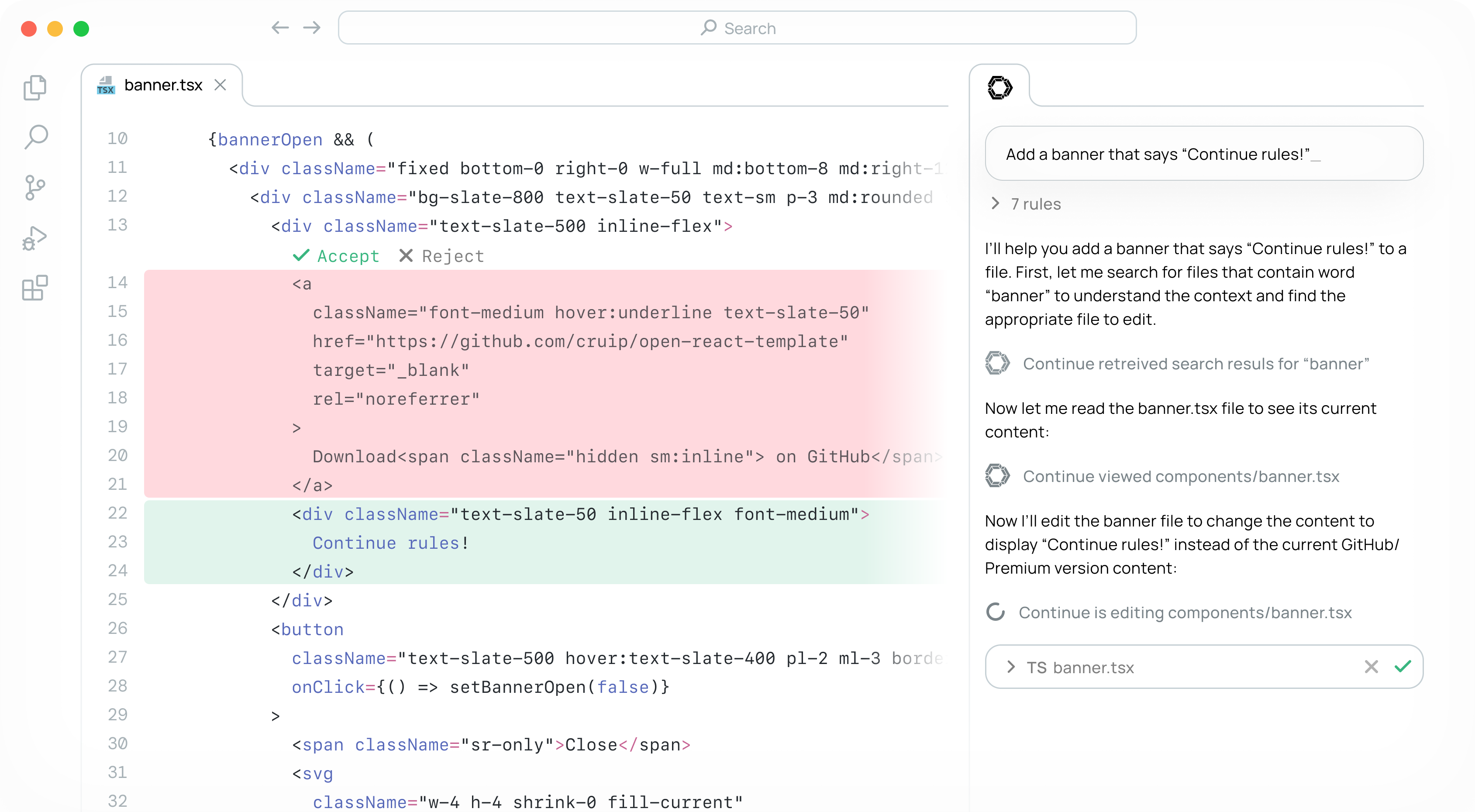

Continue: "Ship faster with Continuous AI". Open-source platform to automate developer workflows with configurable AI/

Enterprise-focused AI coding assistant emphasizing private/self-hosted deployments, governance, and context-aware code.


Open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first/cloud deployment.

Domain-specific AI platform delivering Assistant, Knowledge, Vault, and Workflows for law firms and professional services

Latest Articles (42)

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.


A quick preview of POE-POE's pros and cons as seen in G2 reviews.

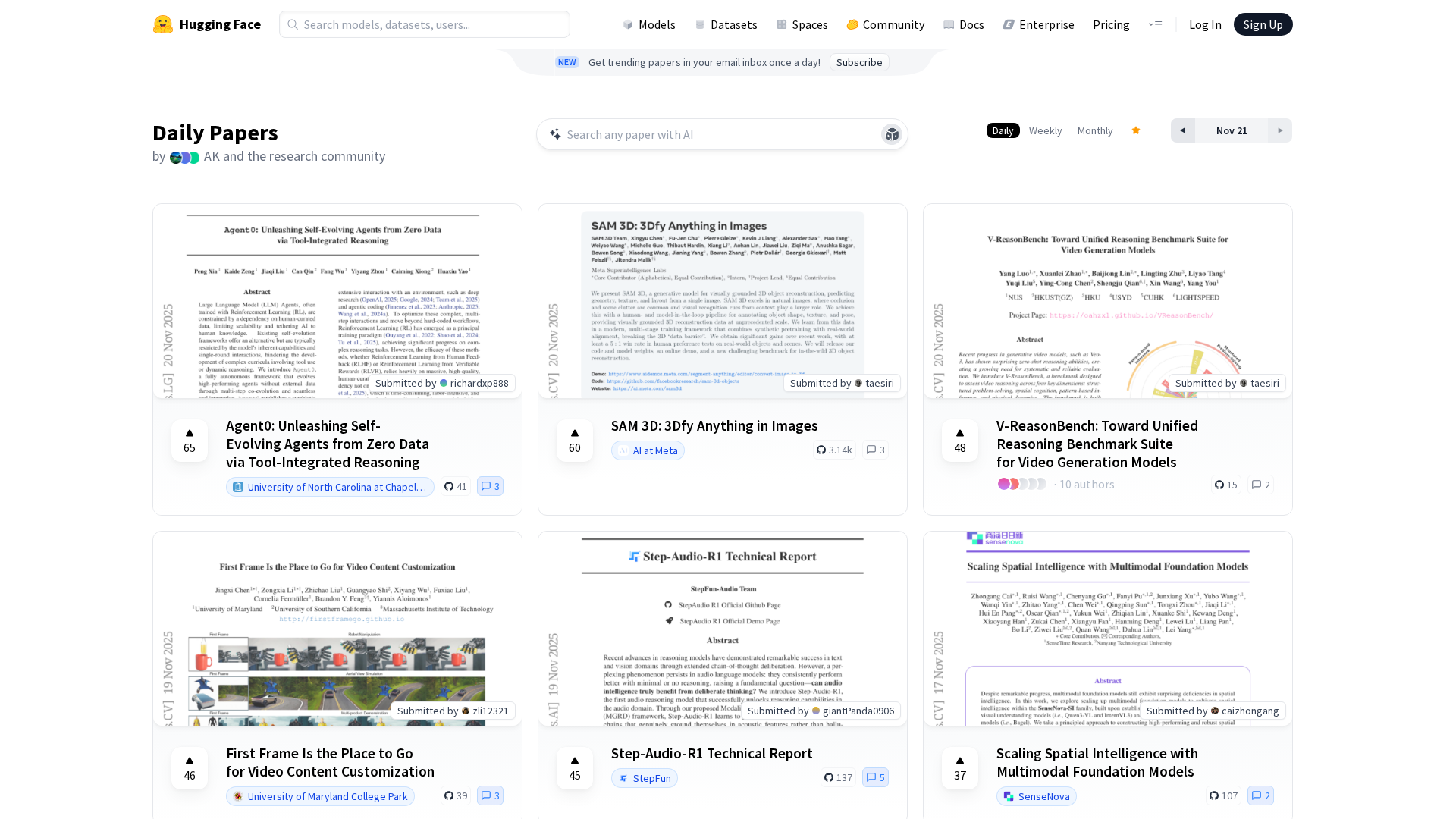

Get daily, curated trending ML papers delivered straight to your inbox.

Dell unveils 20+ advancements to its AI Factory at SC25, boosting automation, GPU-dense hardware, storage and services for faster, safer enterprise AI.