Overview

Summary based on https://www.tabnine.com and https://docs.tabnine.com/main/getting-started/install.

Tabnine is presented as an enterprise-focused AI coding assistant that emphasizes private and self-hosted deployments, governance, and context-aware code suggestions (Org‑Native Agents / Enterprise Context Engine). Headline capabilities include AI code completions (single- and multi-line), context-aware chat inside the IDE, workflow AI agents (test generation, code review, Jira automation, optional autonomous agents), and Model Context Protocol (MCP) support for connecting LLMs to tools (Git, linters, CI/CD, Jira, Confluence, databases, Docker, etc.). Tabnine supports multiple LLM providers (Anthropic, OpenAI, Google, Meta, Mistral) as well as customer‑provided models, and describes an Enterprise Context Engine that learns organization-specific context and standards.

Integrations listed include unlimited connections to Bitbucket, GitHub, GitLab, Perforce, Atlassian Jira Cloud & Data Center, Confluence, and similar tools. Deployment options described on the site are SaaS, VPC, on‑premises, and fully air‑gapped (self‑hosted). Security and compliance items include zero code retention (for hosted flows where stated), end‑to‑end encryption/TLS, SSO, audit trails, and governance/usage controls.

The official install/activation guide (docs.tabnine.com) covers plugin installation for IDEs, notes on SaaS versus private installation, and activation checks (the VS Code status bar, the Tabnine Hub in Visual Studio).

Company history, per public sources referenced in the summary (press writeups and a Tabnine blog post): Tabnine was created by Jacob Jackson in 2018 and acquired by Codota in late 2019. The site's current company information lists leadership including Dror Weiss.

Pricing: the public pricing page lists an enterprise offering at $59 per user/month on annual billing and directs customers to contact sales for custom or enterprise setups. The page also references token‑quota pricing for Tabnine‑hosted LLMs and “unlimited” usage when customers supply their own LLMs. The site shows a “Get started for free” prompt, but the pricing page reviewed did not clearly enumerate consumer/individual plan names or prices.

Reviews/ratings: older summaries of third‑party aggregator pages (G2, Capterra) showed reviewer ratings of roughly 4.0–4.3 out of 5. Live review pages were not checked for this summary.

Collection notes: the pricing page carries a long list of feature bullets, and an automated extraction hit a validation limit when parsing the full list. The Tabnine features page returned a teaser/404 in some earlier checks, while the home, pricing, and docs pages were accessible.

Not covered in this summary: the full pricing table with exact plan names and currencies, up‑to‑date G2/Capterra counts and ratings, an 8-bullet enterprise feature list, and company timeline citations (Jacob Jackson’s post, Betakit, the Tabnine blog post about the Codota acquisition, Wikipedia).

Key Features

AI code completions

Single- and multi-line code completions driven by LLMs and organization context.

IDE chat

Context-aware chat inside IDEs to ask questions and get code help in-context.

Workflow AI agents

Agents for test generation, code review, Jira automation and optional autonomous agents.

Model Context Protocol (MCP)

Protocol to connect LLMs to developer tools (Git, linters, CI/CD, Jira, Confluence, DBs, Docker, etc.).
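
As a rough illustration of what connecting an LLM to a tool over MCP can look like, the sketch below builds two JSON-RPC 2.0 messages of the kind MCP uses: one to list a server's tools and one to call a tool. It is not taken from Tabnine's documentation. The tool name `git_log` and its `max_count` argument are hypothetical, and the helper `make_request` is introduced here only for illustration.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC uses the id to match a response to its request.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict (hypothetical helper for this sketch)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask an MCP server which tools it exposes.
list_tools = make_request("tools/list")

# Ask the server to run one tool. "git_log" and "max_count" are assumed
# names for illustration, not tools documented by Tabnine.
call_tool = make_request(
    "tools/call",
    {"name": "git_log", "arguments": {"max_count": 5}},
)

print(json.dumps(call_tool, indent=2))
```

In practice an MCP client library handles transport, initialization, and responses; the sketch only shows the request shape an assistant would send on the model's behalf.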

Multiple LLM provider support

Support for Anthropic, OpenAI, Google, Meta, Mistral, and customer-provided models.

Enterprise Context Engine

Learns organization-specific context and standards to improve code suggestions.

Who Can Use This Tool?

- Enterprise teams: Large engineering organizations needing self-hosted AI coding assistants, governance, and integrations.

- Developers: Individual and team developers seeking context-aware completions and IDE chat (public pricing unclear).

Pricing Plans

Enterprise per-user subscription; contact sales for custom setups.

- ✓ Enterprise-focused features

- ✓ Self-hosting and air-gapped options

- ✓ Governance and audit trails

- ✓ Priority support and training options
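
For budgeting, the enterprise price quoted in the Overview ($59 per user/month on annual billing) can be turned into a yearly seat cost with simple arithmetic. The sketch below assumes that list price applies uniformly to every seat and ignores token‑quota charges for Tabnine‑hosted LLMs, taxes, and any negotiated discounts. The function name `annual_cost` and the 25-seat team size are illustrative only.

```python
from decimal import Decimal

# List price quoted in the Overview: USD per user per month, billed annually.
PRICE_PER_USER_MONTH = Decimal("59")


def annual_cost(seats, price_per_user_month=PRICE_PER_USER_MONTH):
    """Return the yearly subscription cost for a number of seats.

    Assumes a flat per-seat price; excludes token-quota charges,
    taxes, and discounts.
    """
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return price_per_user_month * seats * 12


# Example: a hypothetical 25-developer team.
print(annual_cost(25))  # 59 * 25 * 12 = 17700
```

Actual quotes may differ, since the site directs enterprise customers to contact sales.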

The site shows a “Get started for free” prompt; exact consumer plan names and pricing are not listed.

- ✓ Entry access via the site prompt

- ✓ Limits and features need follow-up to confirm

Pros & Cons

✓ Pros

- ✓ Enterprise-focused with options for private/self-hosted and air-gapped deployments

- ✓ Emphasis on governance, audit trails, and organization-specific context

- ✓ Model Context Protocol to connect LLMs to developer tools

- ✓ Supports multiple LLM providers and customer-supplied models

- ✓ Broad integrations with Git hosts and Atlassian tooling

✗ Cons

- ✗ Public pricing page emphasizes enterprise/contact-sales; consumer plan names and pricing are unclear

- ✗ Automated extraction of the full pricing/features list hit validation limits during collection

- ✗ Features page returned a teaser/404 in some earlier checks (inconsistent availability)

Compare with Alternatives

Related Articles (5)

Dell unveils 20+ advancements to its AI Factory at SC25, boosting automation, GPU-dense hardware, storage and services for faster, safer enterprise AI.

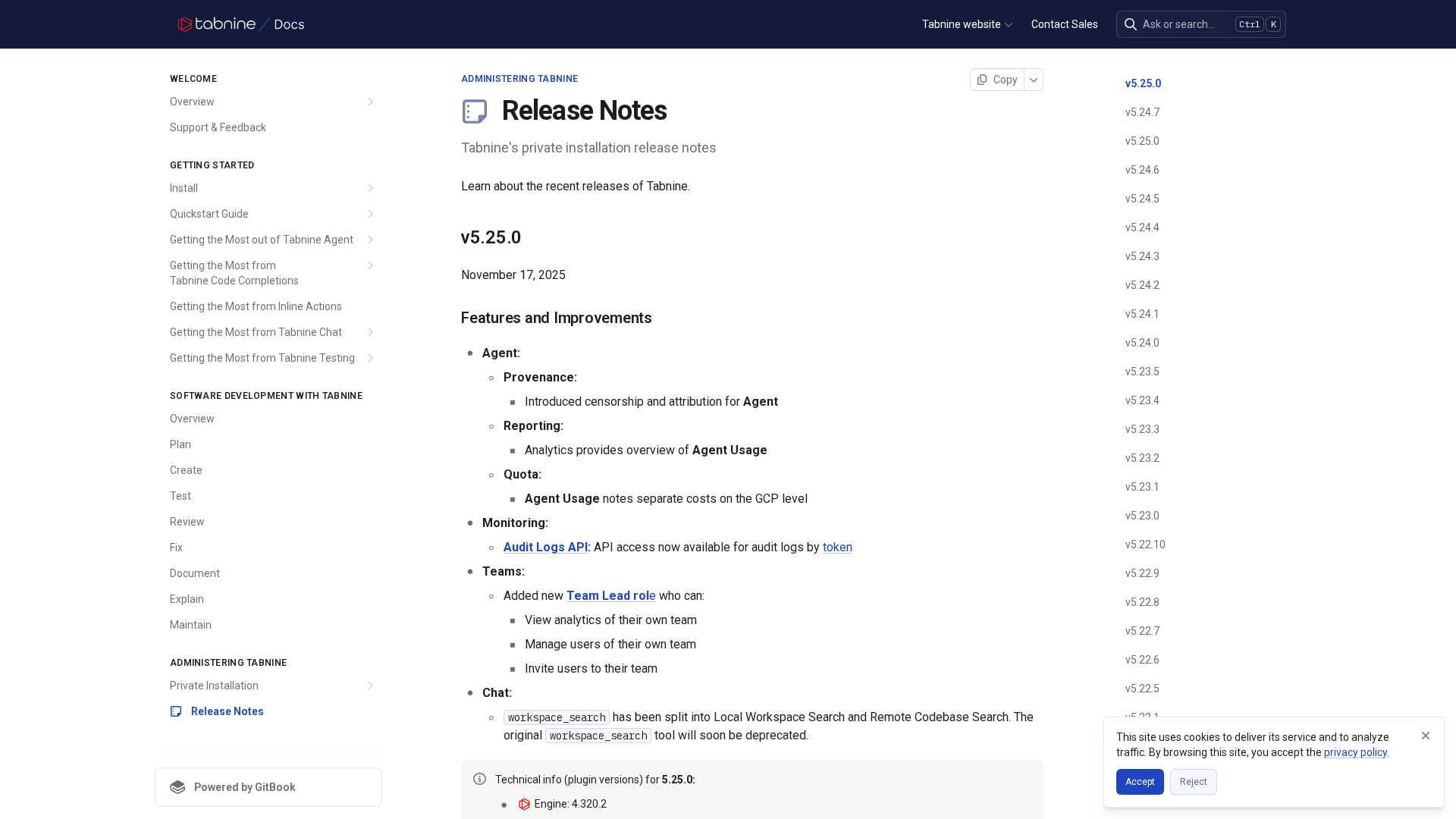

Comprehensive private-installation release notes detailing new features, improvements, and fixes across multiple Tabnine versions.

Dell expands its AI Factory with automated on-prem infrastructure, new PowerEdge servers, enhanced storage software, and scalable networking for enterprise AI.

Dell expands the AI Factory with automated, end-to-end on-prem AI solutions, data management enhancements, and scalable hardware.

Dell updates its AI Factory with automated tools, new AI-ready servers, and reinforced on-prem infrastructure.