Overview

EnCharge AI builds scalable, charge-domain analog in-memory-computing (IMC) accelerators and a software stack to enable highly efficient AI inference from edge devices to data centers. The company emphasizes validated silicon, measurable energy and cost advantages versus digital accelerators, support for multiple form factors (chiplets, ASICs, PCIe cards), and orchestration between on-device and cloud deployments.

Core value propositions include breakthrough energy efficiency, lower total cost of ownership, improved sustainability (claims of dramatically reduced CO2, power, and water use), privacy-preserving on-device inference, and integration with the existing semiconductor supply chain. Founded by experienced semiconductor and AI hardware leaders, EnCharge targets production commercialization of client-focused accelerators and maintains an emphasis on research-driven design and ecosystem partnerships. (Summary based on company website content.)

Notes and missing items:

- No public pricing, plans, or pricing models were listed on the site; the pricing page exists but contains no published plan data.

- No public developer documentation, SDK, API references, or downloadable datasheets were discovered on the site pages reviewed.

- Product availability timelines are discussed in news (targeting 2025 commercialization), but exact product SKUs, specifications, and purchase channels are not posted publicly.

Key Features

Charge-domain analog in-memory computation

Uses intrinsically precise metal capacitors for charge-domain IMC to reduce data movement and improve energy efficiency.
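As a rough illustration of the general charge-sharing idea behind charge-domain IMC, the sketch below models an idealized column of equal unit capacitors computing a binary dot product. It is a simplified textbook-style model and not EnCharge's actual circuit: the function names, the 1-bit inputs and weights, the `v_ref` parameter, and the noiseless ideal readout are all assumptions made for illustration.

```python
def charge_domain_dot(inputs, weights, v_ref=1.0):
    """Idealized charge-sharing dot product over two equal-length lists of 0/1 bits.

    Hypothetical model: each cell charges its unit capacitor to v_ref only when
    both its input bit and its stored weight bit are 1.
    """
    if len(inputs) != len(weights) or not inputs:
        raise ValueError("inputs and weights must be non-empty and equal in length")
    # Per-cell multiply: a 1-bit product is a logical AND.
    cell_voltages = [v_ref * (x & w) for x, w in zip(inputs, weights)]
    # Shorting N equal capacitors together conserves total charge, so the
    # shared node settles at the average of the individual cell voltages.
    return sum(cell_voltages) / len(cell_voltages)


def ideal_readout(shared_voltage, n_cells, v_ref=1.0):
    """Ideal (noise-free) conversion of the shared voltage back to an integer sum."""
    return round(shared_voltage / v_ref * n_cells)


if __name__ == "__main__":
    x = [1, 0, 1, 1]
    w = [1, 1, 0, 1]
    v = charge_domain_dot(x, w)
    print(v, ideal_readout(v, len(x)))
```

In this model the accumulation happens on the shared node rather than by moving operands to a separate arithmetic unit, which is the data-movement saving the description refers to. A real design would also have to handle multi-bit operands, capacitor mismatch, and converter resolution, none of which are modeled here.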

Scalable analog IMC architecture

Multiple generations of designs and measured silicon emphasize scalability across nodes and architectures.

Edge-to-cloud orchestration

Focus on seamless deployment and orchestration from on-device (edge) inference to cloud backends.

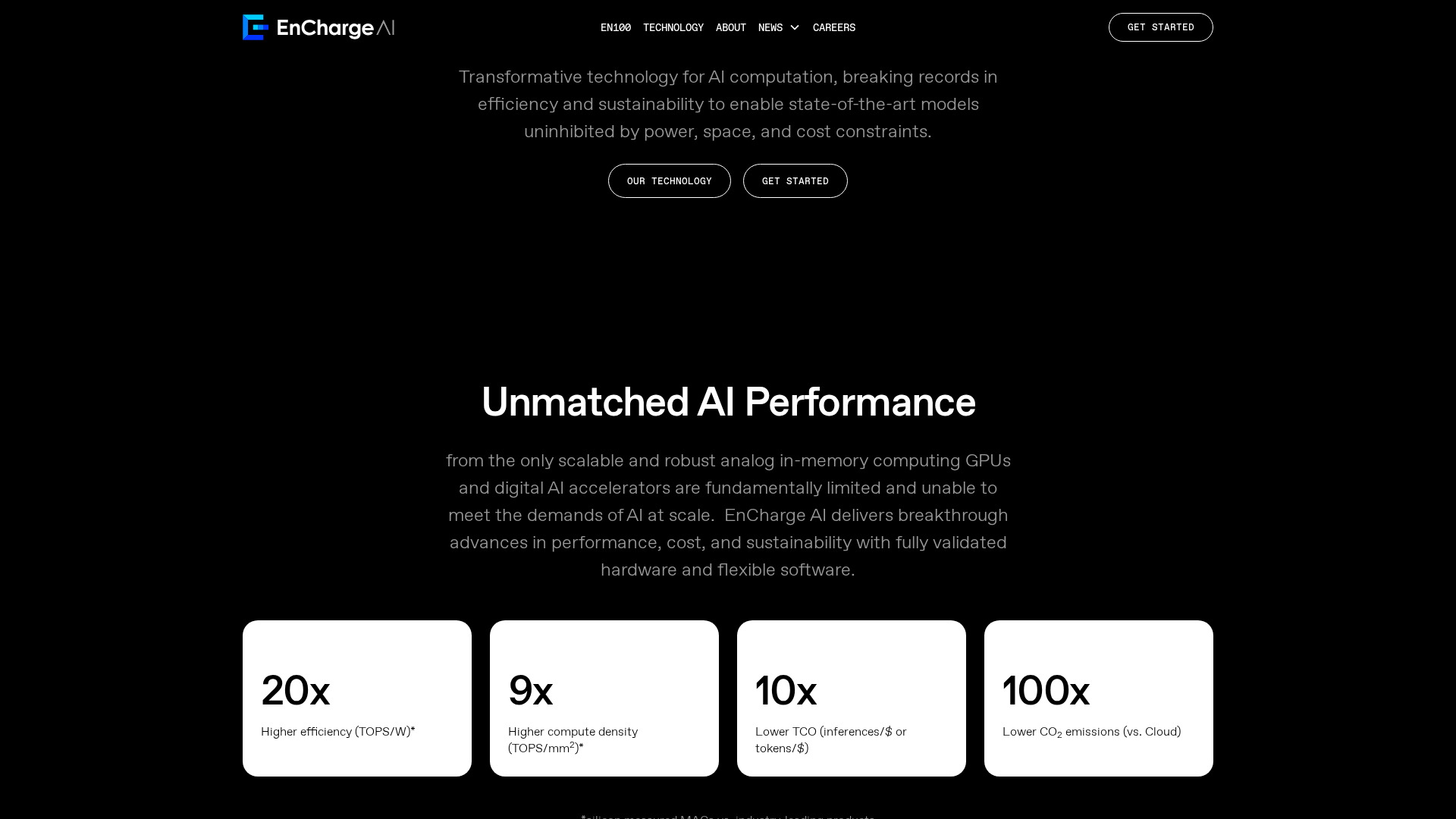

High-efficiency AI inference

Claims orders-of-magnitude improvements in energy per inference and TOPS/W compared to incumbent accelerators.
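To make the TOPS/W metric concrete: 1 TOPS/W equals 10^12 operations per joule, so energy per operation in picojoules is simply the reciprocal of the TOPS/W figure. The sketch below applies that conversion. The function names and the example numbers are hypothetical placeholders, not figures published by EnCharge or any other vendor.

```python
def picojoules_per_op(tops_per_watt):
    """Energy per operation in pJ; 1 TOPS/W corresponds to 1 pJ per operation."""
    if tops_per_watt <= 0:
        raise ValueError("tops_per_watt must be positive")
    return 1.0 / tops_per_watt


def joules_per_inference(ops_per_inference, tops_per_watt):
    """Compute-only energy for one inference, ignoring memory, I/O, and idle power."""
    return ops_per_inference * picojoules_per_op(tops_per_watt) * 1e-12


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    ops = 2e9  # operations in one forward pass of some model
    for efficiency in (1.0, 10.0, 100.0):  # TOPS/W
        print(efficiency, joules_per_inference(ops, efficiency))
```

Because the relationship is a reciprocal, a tenfold gain in TOPS/W implies a tenfold drop in compute energy per inference for the same model, assuming utilization and precision are held equal between the accelerators being compared.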

Programmability and software stack

Provides a software stack intended to integrate with common AI models and frameworks for production deployment.

Multiple form-factor support

Targeted deployment in chiplets, ASICs, PCIe cards, and other partner-configured hardware.

Who Can Use This Tool?

- Edge Device Manufacturers: Integrate ultra-efficient accelerators to enable on-device AI with lower power and cost.

- Enterprises & Cloud Providers: Deploy orchestrated edge-to-cloud inference platforms to lower TCO and emissions at scale.

- Defense & Aerospace: Adopt energy-efficient, resilient AI accelerators suited for constrained and mission-critical deployments.

- Industrial & Robotics: Enable real-time AI for manufacturing, logistics, and robotics with high-efficiency inference hardware.

- Researchers & Partners: Collaborate to advance analog IMC research and productize validated silicon for commercial systems.

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- ✓ Industry-leading efficiency claims (high TOPS/W and energy reductions versus digital accelerators).

- ✓ Strong founding team and advisory network with deep semiconductor and AI hardware experience.

- ✓ Validated silicon and published measured results cited on the site.

- ✓ Clear focus on sustainability, lower TCO, and privacy-preserving on-device inference.

- ✓ Significant funding (Series A and >$100M Series B) indicating investor confidence and runway.

✗ Cons

- ✗ No public pricing or commercial plans available on the website.

- ✗ Limited public product documentation or developer-facing resources (no obvious SDK/docs links).

- ✗ Product availability and detailed specifications for commercial customers are not clearly published.

- ✗ Website content is promotional; independent third-party benchmarks and broad availability details are limited.

Compare with Alternatives

| Feature | EnCharge AI | Hailo | FlexAI |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.1/10 | 8.2/10 | 8.1/10 |
| Compute Architecture | Charge-domain analog in-memory compute | Dataflow AI edge processors | Software-defined, hardware-agnostic orchestration |
| Inference Efficiency | High-efficiency, low-power inference | Low-power, high-performance inference | Optimizes compute placement for efficiency |
| Edge-Cloud Orchestration | Yes | No | Yes |
| Programmability Stack | Yes | Yes | Yes |
| Form-Factor Support | Yes | Yes | Partial |
| Privacy Preservation | Yes | Yes | No |
| Developer Ecosystem | Programmability-focused SDKs and tools | Model zoo, runtime, and dataflow tools | Blueprints, observability, and workload copilot |

Related Articles (3)

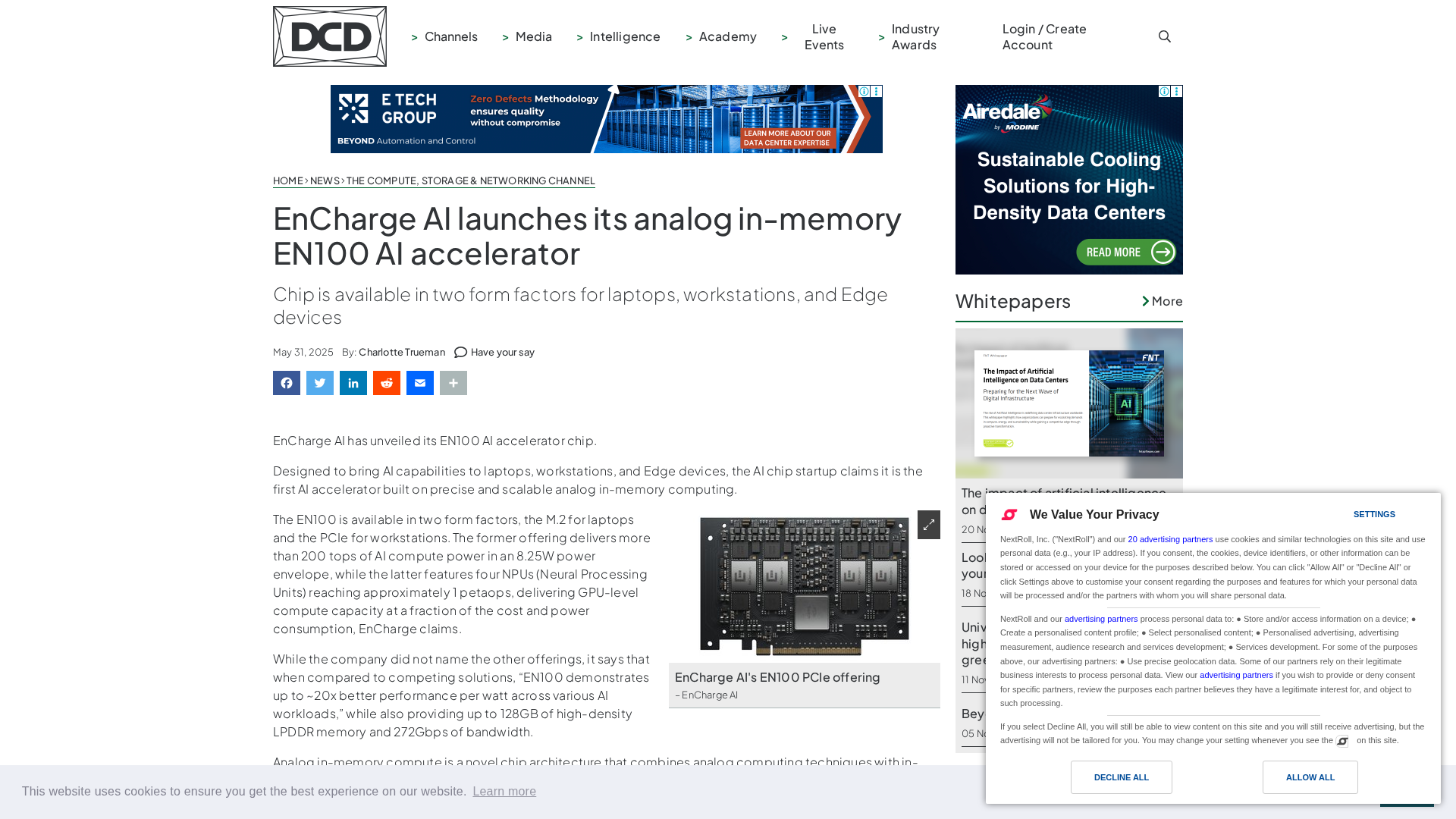

EnCharge AI unveils EN100, a first-of-its-kind accelerator for fast, power-efficient on-device AI.

EnCharge AI debuts the EN100 analog in-memory AI accelerator for laptops, workstations and edge devices, promising high efficiency and on-device AI inference.

EnCharge AI highlights its world-class experts at major events worldwide and invites readers to sign up for updates.