Overview

Hailo provides hardware and software solutions for high-performance deep learning inference on resource-constrained edge devices. Its portfolio includes the Hailo-8 family (high-efficiency, multi-model, multi-stream inference) and the Hailo-10H (a generative-AI-capable accelerator with a DDR interface for scaling to large models). The platform combines a structure-driven dataflow architecture with the Dataflow Compiler, the HailoRT runtime, and model zoos (Vision and GenAI) to simplify model porting, optimization, and deployment across x86 and ARM hosts and Linux, Windows, and Android targets. Hailo targets commercial, industrial, automotive, and personal-compute edge markets, emphasizing low latency, low power consumption, industrial operating ranges, and a mature software ecosystem with support for frameworks such as TensorFlow, PyTorch, and ONNX.

Notes & gaps: No public pricing page or self-serve pricing tiers were found on hailo.ai; pricing appears to be provided through sales or distributors. The company's launch or founding year was not found on the visited pages. No single canonical documentation domain or dedicated "docs" URL was located; documentation links and references appear on the product and software pages. No single support or contact page was identified on the visited pages.

Key Features

Hailo-10H Generative AI Acceleration

Enables LLMs, VLMs, and diffusion models on edge devices with up to 40 TOPS INT4 (20 TOPS INT8) performance and a DDR interface for scalable memory bandwidth.
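A back-of-the-envelope sketch of why a DDR interface matters for generative models: the weights alone occupy roughly parameters × bits-per-weight / 8 bytes, which reaches the gigabyte range for LLM-sized models, and moving from INT8 to INT4 halves that footprint. The 1.5-billion-parameter model and the `weight_bytes` helper are hypothetical, chosen only for illustration; the estimate ignores activations, KV cache, and runtime overhead, so it is a lower bound, not a Hailo sizing rule.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Lower-bound bytes needed to store model weights only.

    Ignores activations, KV cache, and runtime overhead, so real
    memory requirements are higher than this estimate.
    """
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    # Integer ceiling division, so a trailing partial byte
    # (e.g. an odd count of 4-bit weights) is still counted.
    return (num_params * bits_per_weight + 7) // 8


# Hypothetical 1.5B-parameter model, used only for illustration.
EXAMPLE_PARAMS = 1_500_000_000

if __name__ == "__main__":
    for bits in (8, 4):
        gb = weight_bytes(EXAMPLE_PARAMS, bits) / 1e9
        print(f"INT{bits} weights: {gb:.2f} GB")
```

Run as a script, it prints 1.50 GB for INT8 weights and 0.75 GB for INT4 weights for the hypothetical model.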

Hailo-8 Edge AI Accelerator

High-efficiency neural processor for real-time on-device inference: up to 26 TOPS at ~2.5W typical power, supporting multi-stream and multi-model inference without external memory.

Dataflow Compiler

Offline model translation and optimization that maps networks to Hailo’s structure-driven dataflow architecture for efficient execution and portability.

HailoRT Runtime

Lightweight, production-grade runtime with C/C++ and Python APIs for deploying and running models on host systems or dedicated Hailo hardware.

Model Zoos & Model Explorer

Pre-built Vision and GenAI model zoos with reference binaries, hardware benchmarks, and tools to browse and evaluate models on real hardware.

Multi-Platform & Framework Support

Interoperability with TensorFlow, TensorFlow Lite, PyTorch, Keras, and ONNX models and runtimes; runs on x86 and ARM hosts and Linux, Windows, and Android targets.

Who Can Use This Tool?

- Developers: Port, optimize, and deploy deep learning models to edge devices using the Hailo toolchain.

- OEMs & Integrators: Integrate Hailo modules and SoCs into products for low-latency, energy-efficient edge AI inference.

- Automotive & Industrial: Deploy robust on-device perception and generative AI solutions in constrained industrial or automotive environments.

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- ✓ High performance per watt (Hailo-8: up to 26 TOPS @ ~2.5W typical; Hailo-10H: up to 40 TOPS INT4 / 20 TOPS INT8, with ~2.5W typical power cited)

- ✓ Designed for multi-stream and multi-model inference (Hailo-8), with a scalable DDR interface for larger models (Hailo-10H)

- ✓ Mature software stack including the Dataflow Compiler, HailoRT runtime, model zoos, profilers, emulator, and examples

- ✓ Broad framework support (TensorFlow, TensorFlow Lite, PyTorch, Keras, ONNX) and host-OS support (Linux, Windows, Android)

- ✓ Industrial and automotive operating ranges, with applicable compliance noted where relevant
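A rough sketch of the performance-per-watt arithmetic, using only the figures quoted on this page: dividing peak TOPS by typical power gives a headline TOPS-per-watt ratio. This mixes a peak number with a typical one and says nothing about utilization on a real model, so treat the result as a spec-sheet ratio, not a measured efficiency. The `SPECS` table and `tops_per_watt` helper are illustrative names, not part of any Hailo tool.

```python
# Vendor "up to" TOPS and "typical" power figures, as quoted on this page.
SPECS = {
    "Hailo-8": (26.0, 2.5),
    "Hailo-10H (INT4)": (40.0, 2.5),
    "Hailo-10H (INT8)": (20.0, 2.5),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by typical power draw (spec-sheet ratio)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


if __name__ == "__main__":
    for name, (tops, watts) in SPECS.items():
        print(f"{name}: {tops_per_watt(tops, watts):.1f} TOPS/W")
```

Run as a script, it prints 10.4 TOPS/W for Hailo-8, 16.0 for Hailo-10H at INT4, and 8.0 for Hailo-10H at INT8.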

✗ Cons

- ✗ No public pricing or clear self-serve purchase tiers; product inquiries appear to go through sales or distributors

- ✗ Integration requires the specialized Dataflow Compiler and toolchain, which adds a learning curve for porting and optimization

- ✗ Not targeted at casual or consumer plug-and-play usage (primarily focused on OEMs, system integrators, and product teams)

Compare with Alternatives

| Feature | Hailo | EnCharge AI | Rebellions.ai |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.2/10 | 8.1/10 | 8.4/10 |
| Inference Efficiency | Low-power high-performance inference | Analog in-memory compute for very high efficiency | High-throughput energy-efficient inference for hyperscale |
| On-Device GenAI | Yes | Partial | No |
| Compiler & Runtime | Yes | Partial | Yes |
| Memory Architecture | No | Yes | Yes |
| Form-factor Support | Yes | Yes | Yes |
| Model Ecosystem | Yes | Partial | Yes |
| Industrial Readiness | Yes | Partial | Yes |

Related Articles (6)

ProteanTecs expands in Japan with a new office and Noritaka Kojima as GM Country Manager.

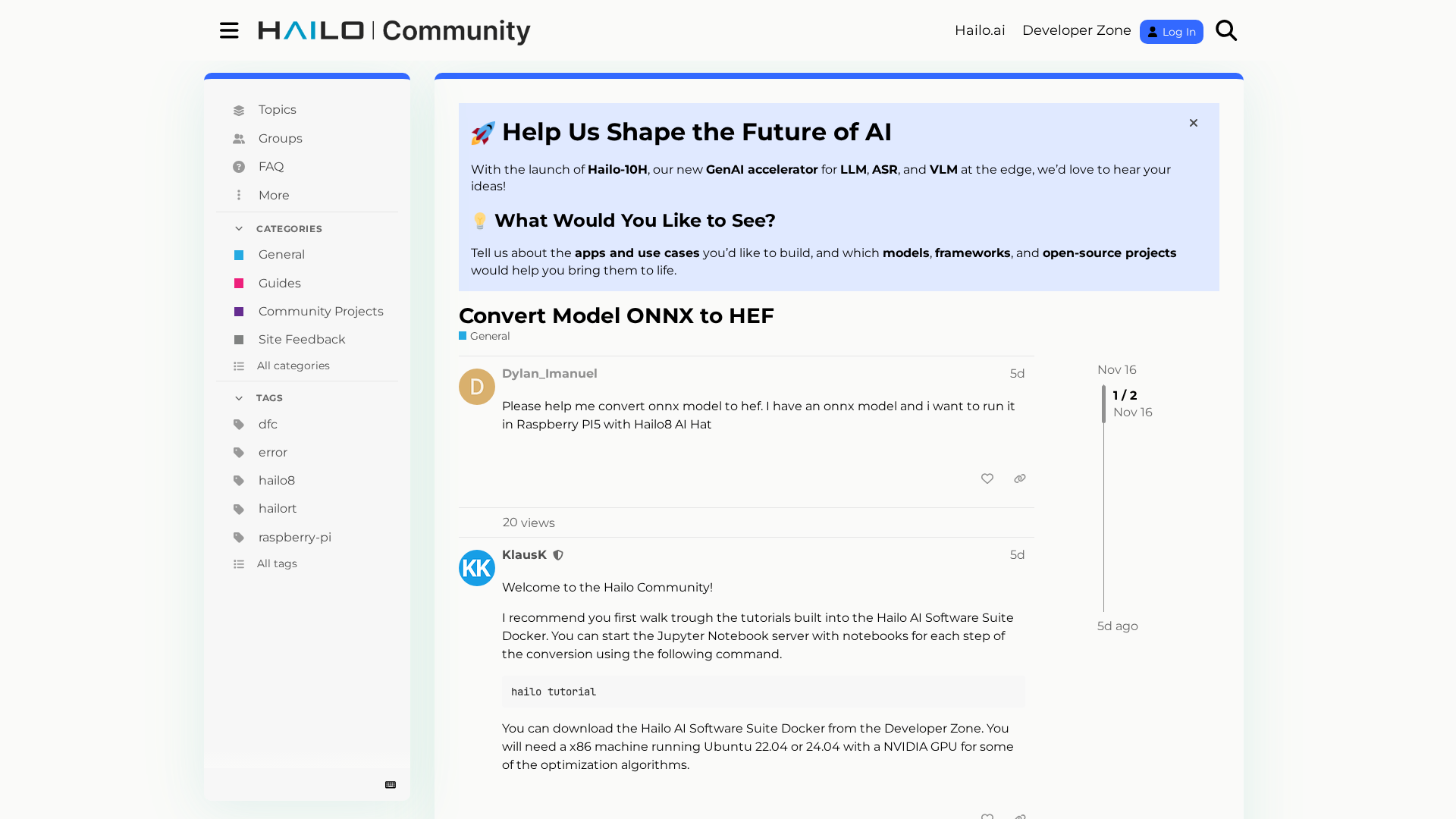

Convert ONNX models to HEF for Hailo-8 on Raspberry Pi 5 using Hailo's Docker tutorials.

Explains why the full Hailo AI Suite won’t run on Raspberry Pi 5 and how to use the runtime instead.

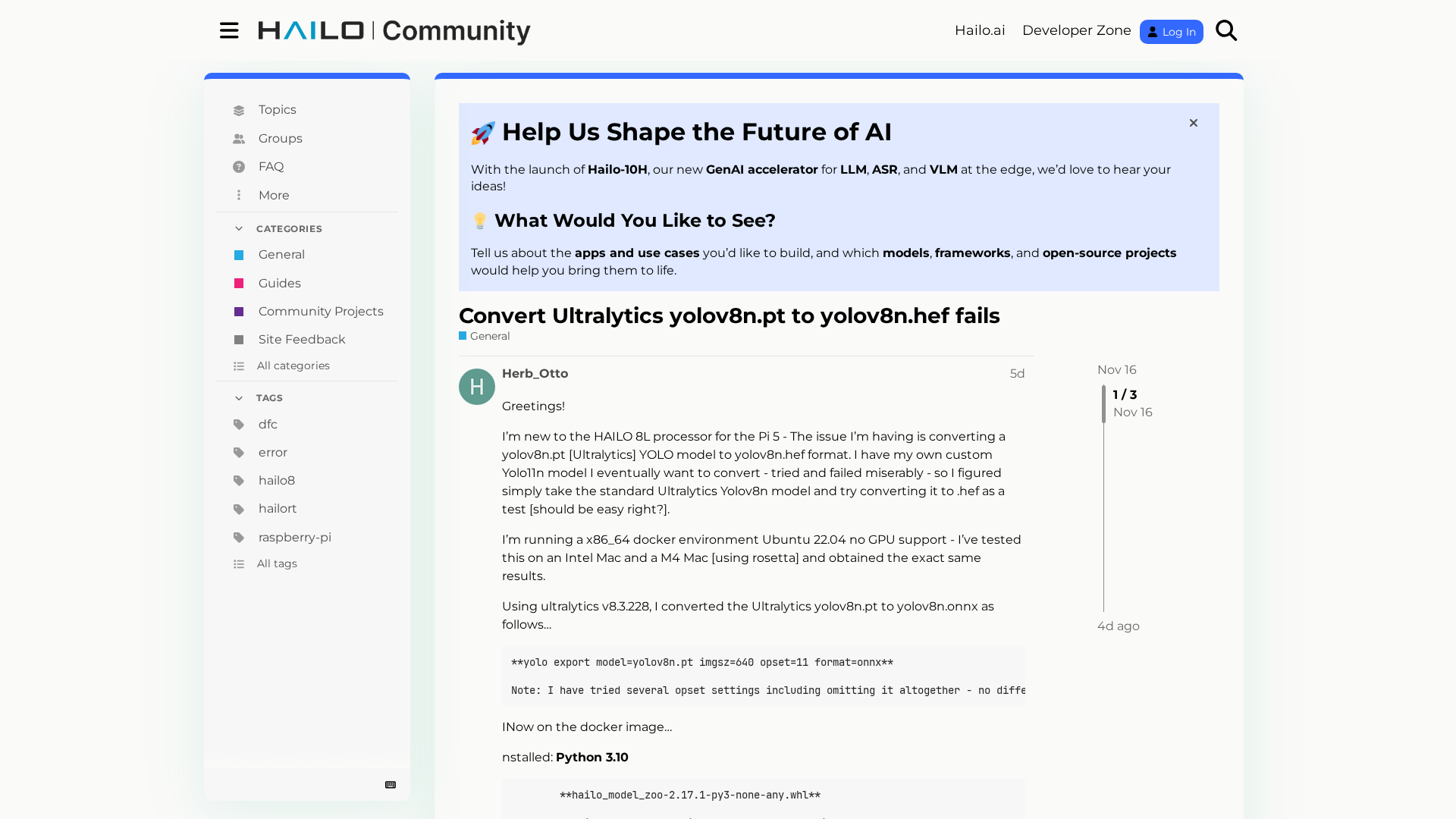

A user reports repeated failures converting Ultralytics yolov8n.pt to yolov8n.hef on Hailo-8L, with compilation failing on a TypeError.

Qualcomm debuts DragonWing IQ-X industrial SoCs with 8/12 cores, up to 45 TOPS AI, PCIe Gen4, and Windows LTSC support for factory/edge AI.