Overview

Rebellions.ai (Rebellions Inc.) develops purpose-built AI inference accelerators (chiplets, SoCs, and servers) and a GPU-class software stack for high-throughput, energy-efficient LLM and multimodal inference at hyperscale. The product family includes REBEL chiplets (REBEL-Quad), the ATOM SoC family, and ATOM-Max server/pod systems. These are paired with the RBLN (Rebellions) SDK, Model Zoo, and developer tools, which support production deployments, mixed-precision execution, and distributed rack-to-rack scaling. The company emphasizes UCIe-Advanced chiplet interconnects, HBM3E and GDDR6 memory options, and software compatibility with PyTorch, vLLM, Triton, and Hugging Face, so the hardware can slot into existing pipelines. Rebellions targets energy- and cost-sensitive data-center inference workloads by combining hardware efficiency with an integrated software stack. The public site lists neither per-unit pricing nor subscription tiers; pricing and procurement are handled through direct enterprise sales.

Key Features

Chiplet SoC Architecture

REBEL-Quad and REBEL chiplets use UCIe-Advanced to present multiple chiplets as a single virtual die, enabling high compute density and low-latency chiplet-to-chiplet communication.

High-Bandwidth Memory & Memory Subsystem

Offers HBM3E (144 GB, multi-TB/s effective bandwidth) as well as GDDR6-based ATOM-Max variants, delivering the memory throughput that long-context LLM inference requires.
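To see why memory throughput matters for long-context inference, here is a rough back-of-envelope sketch. It assumes single-stream decoding is memory-bound, so every generated token requires reading the full weights and the KV cache once. The model shape (a hypothetical 70B-parameter model with 80 layers, 8 KV heads, and a head dimension of 128) and the 4 TB/s bandwidth figure are illustrative assumptions, not Rebellions specifications.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, context_tokens, bytes_per_value):
    """Size of the KV cache for one sequence (keys + values across all layers)."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_value


def decode_tokens_per_second(weight_bytes, cache_bytes, bandwidth_bytes_per_s):
    """Upper bound on single-stream decode speed when memory-bound:
    each token reads all weights and the whole KV cache once."""
    return bandwidth_bytes_per_s / (weight_bytes + cache_bytes)


# Hypothetical workload (assumptions, not vendor data).
WEIGHT_BYTES = 70e9 * 1          # 70B parameters stored at 1 byte each (FP8)
BANDWIDTH = 4e12                 # assumed 4 TB/s effective memory bandwidth

for context in (8_000, 32_000, 128_000):
    cache = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128,
                           context_tokens=context, bytes_per_value=2)
    rate = decode_tokens_per_second(WEIGHT_BYTES, cache, BANDWIDTH)
    print(f"context={context:>7}  kv_cache={cache / 1e9:6.1f} GB  "
          f"max decode rate={rate:5.1f} tokens/s")
```

The bound falls as context grows because the KV cache adds to the bytes read per token. Batching, caching strategies, and compute limits all shift real numbers, so treat this only as a sizing aid.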

Mixed-Precision, High-Throughput Execution

A native mixed-precision pipeline (FP16, FP8, and narrower formats) delivers high TFLOPS/TOPS while keeping execution in a single pipeline and preserving kernel compatibility.
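One practical effect of narrower formats is a smaller weight footprint. The sketch below estimates whether a model's weights fit in the 144 GB HBM3E capacity listed in this profile at different precisions. The model size, the 20% headroom reserved for KV cache and runtime buffers, and the use of a 4-bit format to stand in for "narrower formats" are all assumptions for illustration.

```python
# Bytes per parameter for each storage format (int4 is an assumed example
# of a "narrower format"; the listing does not name specific ones).
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}

HBM_CAPACITY_GB = 144  # capacity figure quoted in the listing


def weight_footprint_gb(num_params, precision):
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(num_params, precision,
                   capacity_gb=HBM_CAPACITY_GB, headroom=0.2):
    """True if the weights fit while leaving `headroom` (a fraction of
    capacity) free for KV cache, activations, and runtime buffers."""
    return weight_footprint_gb(num_params, precision) <= capacity_gb * (1 - headroom)


# Hypothetical 70B-parameter model.
for precision in BYTES_PER_PARAM:
    size = weight_footprint_gb(70e9, precision)
    print(f"{precision}: {size:.0f} GB, fits={fits_in_memory(70e9, precision)}")
```

This ignores quantization scales and per-layer precision choices, which a real mixed-precision deployment would need to account for.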

Rebellions (RBLN) SDK and Model Zoo

GPU-class, PyTorch-native SDK with compiler/runtime, profiling, Triton backend, vLLM/Hugging Face integration, and >300 supported models for rapid onboarding and optimization.

Rack-to-Rack & Scalable Pod Designs

ATOM-Max Server/Pod and RDMA-friendly designs allow horizontal scaling from a single server to large clusters with orchestration and pod-level management.

Developer Tooling & Observability

Profiler, driver/firmware stack, runtime modules, system-management tools, and example integrations (Kubernetes, Docker, Ray) to support deployment and performance tuning.

Who Can Use This Tool?

- Developers: Build, optimize, and deploy LLM and multimodal models using the RBLN SDK and Model Zoo.

- Enterprises: Deploy energy-efficient inference at scale in data centers with ATOM-Max servers and REBEL-Quad appliances.

- Cloud & OEMs: Integrate chiplet-based accelerators into rack-scale and sovereign deployments for hyperscale inference.

Pricing Plans

Pricing is not published; contact Rebellions' enterprise sales for procurement details.

Pros & Cons

✓ Pros

- ✓ Strong focus on throughput per watt (energy-efficient inference hardware).

- ✓ Modern chiplet-based designs (UCIe-Advanced) and HBM3E support for high memory bandwidth.

- ✓ Full-stack approach (hardware, SDK, and Model Zoo) with strong developer tooling (PyTorch-native, Triton, vLLM integration).

- ✓ Product options for both single-server and rack/pod scale (ATOM-Max Server/Pod, REBEL-Quad).

- ✓ Global presence and strong industry partnerships (Arm, Samsung Foundry, SK Telecom, Pegatron, Marvell).

✗ Cons

- ✗ No public pricing or self-serve purchase flow; buyers must go through enterprise sales.

- ✗ The public site offers few detailed benchmarks or independent price/performance comparisons.

- ✗ Focused on data-center deployments; not aimed at hobbyists or consumer use.

Compare with Alternatives

| Feature | Rebellions.ai | EnCharge AI | Hailo |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.4/10 | 8.1/10 | 8.2/10 |
| Compute Architecture | Chiplet SoC architecture | Charge-domain analog IMC architecture | Dataflow accelerator architecture |
| Memory Bandwidth | HBM3E high-bandwidth memory | Analog IMC with limited external bandwidth | On-chip buffers, modest memory bandwidth |
| Precision & Throughput | Mixed-precision, high-throughput execution | High-efficiency analog IMC throughput | INT8-optimized, low-power throughput |
| Scalability & Pods | Yes | Partial | Partial |
| Developer SDK | Yes | Yes | Yes |
| Observability Tooling | Yes | Partial | Partial |
| Form Factor Support | Yes | Yes | Yes |
| Power Efficiency | Hyperscale, energy-efficient inference | High energy efficiency, sustainability-focused | Low-power, edge-optimized |

Related Articles (7)

ProteanTecs expands in Japan with a new office and Noritaka Kojima as GM Country Manager.

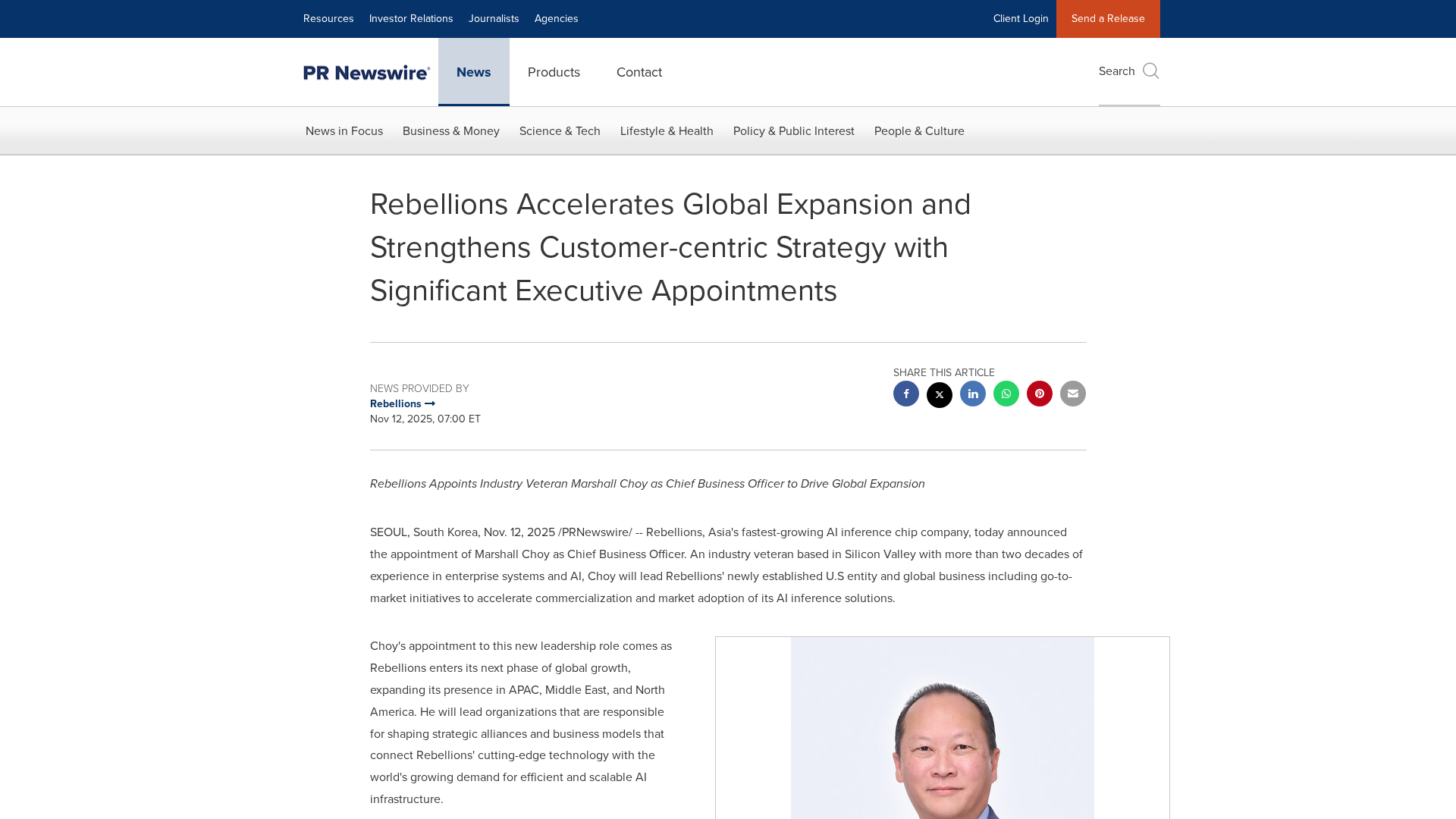

Rebellions names a new CBO and EVP to drive global expansion, while NST commends Qatar’s sustainability leadership.

Rebellions appoints Marshall Choy as CBO to drive global expansion and establish a U.S. market hub.

Expanded GPU cloud ratings across 84 providers with 10 criteria, exposing trends in SLURM-on-Kubernetes, rack-scale reliability, and InfiniBand security.

A comprehensive survey of private LLM inference hardware—GPUs, ASICs, and startups—driving on-prem enterprise AI.