Overview

Snorkel AI positions itself as a data-centric enterprise AI company that accelerates production-ready models through programmatic data development, expert-curated datasets, bespoke LLM fine-tuning, and domain-specific evaluations and benchmarks. Its core offerings are Snorkel Flow, an enterprise data development platform supporting programmatic labeling, an annotation studio, prompt development, guided error analysis, model evaluation, and end-to-end ML app workflows, and Expert Data-as-a-Service, in which white-glove teams create domain-specific datasets with multi-layer QA, rubrics, and traceability. Snorkel also provides Snorkel Custom for end-to-end co-development of domain-specific LLMs (data identification, knowledge capture, fine-tuning/alignment, evaluation, distillation, and deployment) and Snorkel Evaluate for custom LLM evaluations and benchmarks.

The platform emphasizes an evaluate → develop → deliver workflow, ingestion from diverse enterprise sources (databases, documents/PDFs, telemetry), integration with vector databases and feature stores, and connections to model serving and observability stacks. Product pages and documentation reference integrations with MLflow, AWS SageMaker, Google Vertex AI, and Databricks. The documentation hub (docs.snorkel.ai) includes getting-started tutorials, API references, and release notes.

Public information points to enterprise-grade QA, traceability, and evaluation/benchmarking (Terminal-Bench is referenced), and recent release notes (2024.R3) mention privacy and security features. The public site does not list pricing or trial terms; pricing appears to be custom and handled through sales engagement. The company traces its origins and research lineage to the Stanford AI Lab, and its leadership includes co-founder and CEO Alexander Ratner. Customer and partner references on public pages include Wayfair, an endorsement from Anthropic, and large enterprise customers featured in case studies.

Key Features

Snorkel Flow (Platform)

Enterprise AI data development platform supporting programmatic labeling, annotation studio, prompt builder, guided error analysis, model evaluation, and end-to-end ML app workflows.
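
To make "programmatic labeling" concrete, here is a minimal sketch of the general idea in plain Python: small heuristic functions each vote on a label or abstain, and the votes are combined into a training label. This is not Snorkel Flow's API. The function names, the ticket-urgency task, and the simple majority-vote combiner are all assumptions chosen for illustration; a production system would typically weight heuristics by estimated accuracy instead of counting votes equally.

```python
from collections import Counter
from typing import Callable, List

# Label values used by this illustrative example (hypothetical task:
# flagging urgent support tickets).
ABSTAIN, NOT_URGENT, URGENT = -1, 0, 1

LabelingFunction = Callable[[str], int]


# Hypothetical labeling functions: each encodes one heuristic and may abstain.
def lf_keyword_outage(text: str) -> int:
    return URGENT if "outage" in text.lower() else ABSTAIN


def lf_keyword_thanks(text: str) -> int:
    return NOT_URGENT if "thank" in text.lower() else ABSTAIN


def lf_exclamation(text: str) -> int:
    return URGENT if text.count("!") >= 2 else ABSTAIN


LFS: List[LabelingFunction] = [lf_keyword_outage, lf_keyword_thanks, lf_exclamation]


def majority_label(text: str, lfs: List[LabelingFunction]) -> int:
    """Combine votes by simple majority; abstain on no votes or a tie."""
    votes = [v for v in (lf(text) for lf in lfs) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


def label_all(texts: List[str]) -> List[int]:
    """Label a batch of texts with the labeling functions defined above."""
    return [majority_label(t, LFS) for t in texts]
```

Records where every heuristic abstains, or where the heuristics disagree evenly, stay unlabeled in this sketch; those are natural candidates for manual annotation or for writing an additional heuristic.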

Expert Data-as-a-Service

White-glove expert teams producing domain-specific, multi-modal datasets with multi-layer QA, rubrics, and traceability for complex data types.

Custom LLM Co-development (Snorkel Custom)

End-to-end co-development of domain-specific LLMs including data identification, fine-tuning/alignment, evaluation, distillation, and deployment; works with any foundation model.

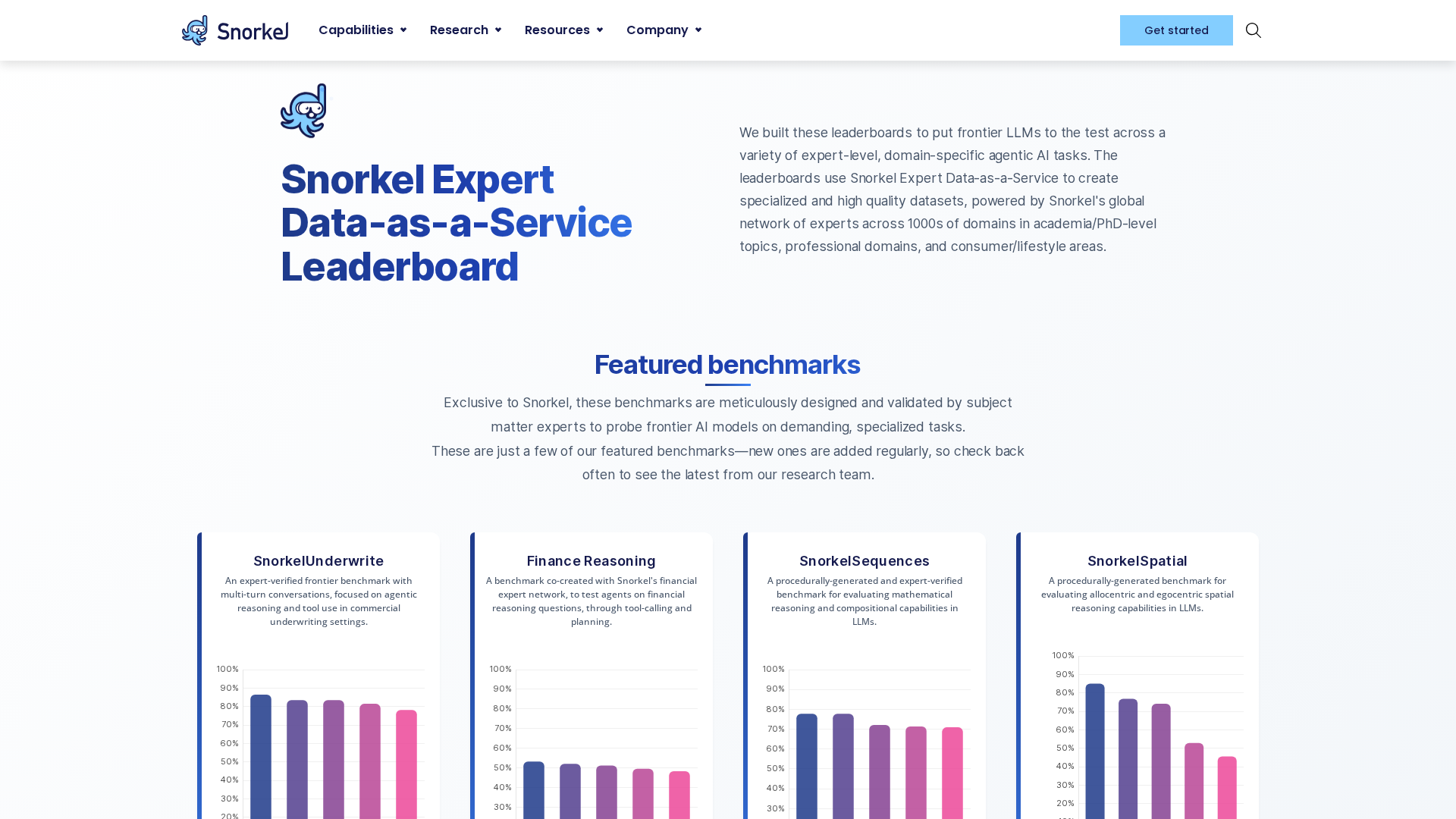

Custom Evaluation & Benchmarks (Snorkel Evaluate)

Purpose-built LLM evaluations and benchmarks tailored to business criteria, with segment diagnostics and bespoke test sets.

Integrations & Deployment

Integrates with common enterprise stacks and model serving tools (MLflow, SageMaker, Vertex AI, Databricks) and ingests enterprise sources (databases, docs, telemetry).

Emphasis on Evaluation and Governance

Strong focus on evaluation, benchmarking (Terminal-Bench referenced), rubrics, QA, traceability, and observability for production reliability.

Who Can Use This Tool?

- Enterprises: Enterprise teams seeking production-grade data pipelines, governance, and custom ML/LLM solutions.

- ML teams: Data scientists and ML engineers needing programmatic labeling, evaluation, and integration into deployment stacks.

- Domain experts: Subject-matter experts who contribute to or require high-quality, domain-aligned datasets and assessments.

Pricing Plans

Pricing information is not available yet.

Pros & Cons

✓ Pros

- Data-centric approach focusing on programmatic labeling and expert-curated datasets.

- Enterprise-oriented features: QA, traceability, rubrics, and observability.

- Offers both a platform (Snorkel Flow) and expert-managed data services (Expert Data-as-a-Service).

- Custom LLM co-development and bespoke evaluation/benchmarking capabilities.

- Integrations with common enterprise stacks (MLflow, SageMaker, Vertex AI, Databricks).

- Public documentation and tutorials available (docs.snorkel.ai).

✗ Cons

- No public pricing or plan tiers published on the website.

- No public free trial or self-serve subscription information visible.

- SLAs, compliance certifications (e.g., SOC 2/ISO), and data residency specifics are not explicitly listed on public pages.

- Detailed API limits, hosted pricing, and on-prem vs. cloud licensing terms are not publicly available.

Compare with Alternatives

| Feature | Snorkel AI | Ocular AI | OpenPipe |
|---|---|---|---|
| Pricing | N/A | N/A | N/A |
| Rating | 8.0/10 | 8.0/10 | 8.2/10 |
| Labeling Paradigm | Programmatic weak supervision | AI-assisted auto-labeling and manual annotation | Interaction capture and serverless model workflows |
| Custom Fine-tuning | Yes | Yes | Yes |
| Evaluation Rigor | Custom benchmarks and rubric-driven evaluations | Human-in-the-loop and RLHF evaluations | Automated evaluation and reward-model tooling |
| Governance & Traceability | Yes | Yes | Partial |
| Human-in-the-Loop | Partial | Yes | Partial |
| Deployment Modes | Partial | Yes | Yes |
| Data Lineage | Yes | Yes | Partial |
| Integration Surface | Enterprise integrations and SDKs | Integrations with lakehouse and governance tools | Unified SDK and API-first integrations |

Related Articles

- A snapshot of Snorkel's expert-curated benchmarks testing frontier LLMs on domain-specific tasks.

- An enterprise team slashes deployment time from hours to 20 minutes by migrating to Prefect 2.0 and Kubernetes.

- A snapshot of Snorkel AI's blog highlighting Terminal-Bench 2.0, rubric-driven evaluation, and enterprise AI benchmarks.

- Snorkel AI launches data-centric foundation model development to speed enterprise adoption with governance-friendly, production-ready datasets.

- Snorkel AI raises $85M Series C to scale its data-centric AI platform and achieve unicorn status at a $1B valuation.