Topic Overview

This topic covers the hardware and software ecosystems that run production AI inference workloads: large-scale accelerators (NVIDIA Blackwell, Groq), purpose-built inference silicon and chiplets, edge vision stacks, and emerging decentralized infrastructure. Demand for lower latency, reduced energy use, and data-sovereign processing has driven diversification away from a single accelerator model toward specialized silicon, modular software stacks, and distributed orchestration.

Key players and patterns: NVIDIA’s Blackwell-class GPUs remain a dominant datacenter inference platform and have absorbed adjacent tooling (e.g., the Deci.ai site now serving NVIDIA-branded content following a May 2024 acquisition), while vendors like Groq focus on minimal-latency, high-throughput inference. Startups such as Rebellions.ai are bringing GPU-class software and chiplet/SoC designs optimized for high-throughput, energy-efficient LLM and multimodal inference in hyperscale settings. On the other end, edge AI vision platforms prioritize compact, power-efficient stacks for on-device perception and real-time decisions. Parallel to silicon trends, projects like Tensorplex Labs explore decentralized AI infrastructure, combining model development workflows with blockchain/DeFi primitives (staking, cross-network coordination) to enable governance and incentive layers for distributed inference.

Why it matters now: By late 2025, cost, power, and latency pressures plus regulatory and data-locality requirements are pushing organizations to mix centralized and edge inference, adopt more energy-efficient hardware, and consider decentralized orchestration for resilience and governance. The market is therefore characterized by consolidation of software around dominant GPU vendors, increased specialization in inference silicon, and experimental decentralized stacks that aim to redistribute compute and economic control.

Tool Rankings – Top 3

Energy-efficient AI inference accelerators and software for hyperscale data centers.

Site audit of deci.ai showing NVIDIA takeover after May 2024 acquisition and absence of Deci-branded pricing.

Open-source, decentralized AI infrastructure combining model development with blockchain/DeFi primitives (staking, cross

Latest Articles (22)

AWS commits $50B to expand AI/HPC capacity for U.S. government, adding 1.3GW compute across GovCloud regions.

How AI agents can automate and secure decentralized identity verification on blockchain-enabled systems.

Passage cuts GPU cloud costs by up to 70% using Akash's open marketplace, enabling immersive Unreal Engine 5 events.

ProteanTecs expands in Japan with a new office and Noritaka Kojima as GM Country Manager.

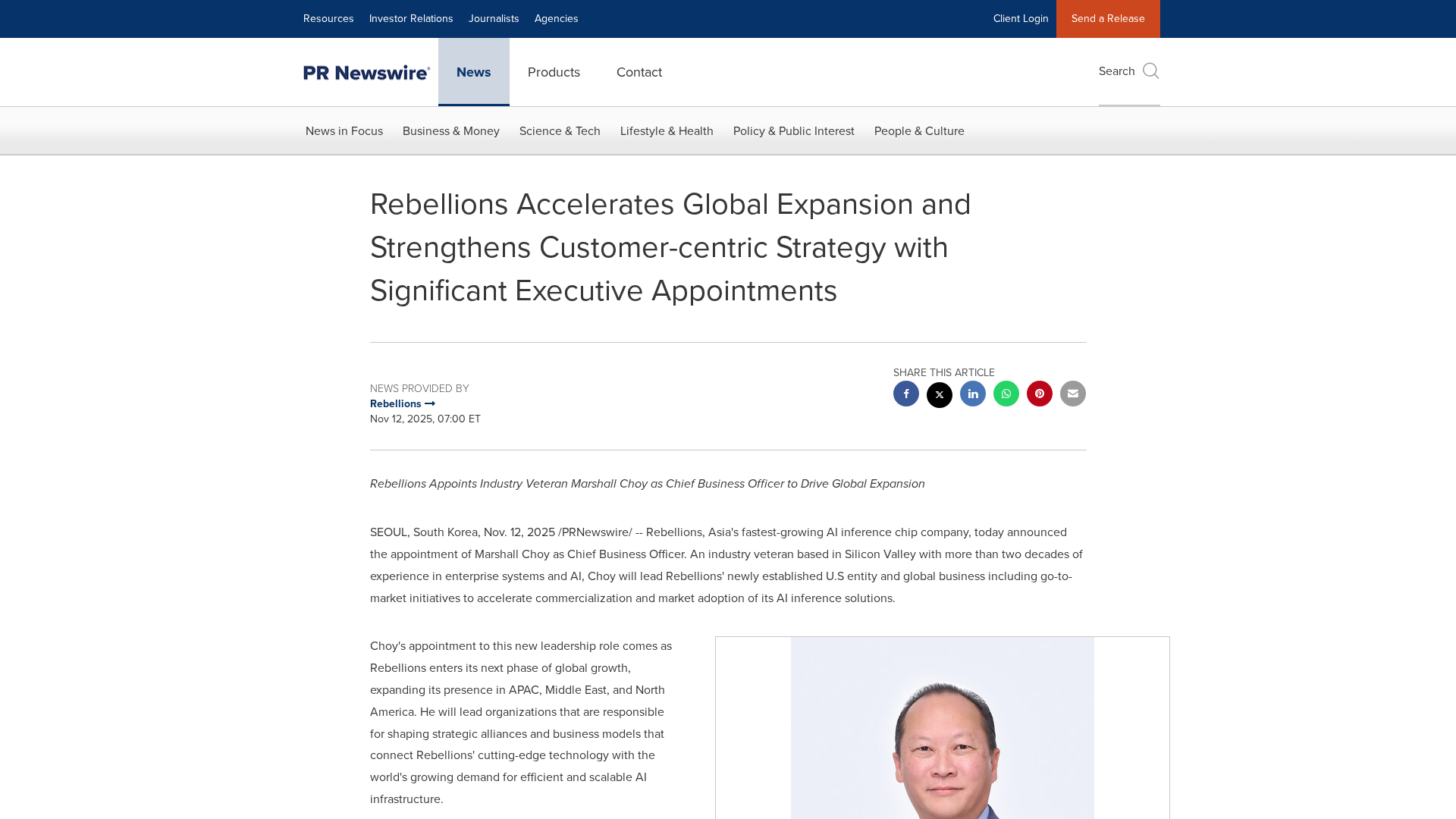

Rebellions appoints Marshall Choy as CBO to drive global expansion and establish a U.S. market hub.