Topic Overview

Bot copy trading platforms let users mirror algorithmic strategies or professional traders across exchanges. Comparing Bitget with its competitors therefore calls for systematic market and competitive intelligence. As of 2026-01-13, the space is defined by larger bot marketplaces, tighter regulatory scrutiny, cross-exchange liquidity challenges, and demand for transparent audit trails, all of which raise the need for automated monitoring, backtesting, and signal attribution.

Practical analysis pipelines combine agent frameworks, retrieval systems, private LLMs, and telemetry. LangChain and similar agent platforms enable stateful, testable agents that monitor APIs, scrape marketplaces, and orchestrate evaluation workflows. LlamaIndex turns unstructured docs, changelogs, and community posts into retrieval-augmented generation (RAG) agents for competitor research. Cohere supplies enterprise-grade models and embeddings for private summarization, similarity search, and alerting across trade logs. OpenPipe captures interaction logs and provides fine-tuning infrastructure to adapt models to domain-specific language (orderbook events, strategy names, audit evidence). Among developer tools, Warp (ADE) accelerates building and deploying monitoring agents, Cline runs client-side coding agents that plan and audit multi-step analysis, and CodeGeeX helps generate and maintain strategy-testing code.

Comparisons across fee models, API latency, bot marketplace governance, social graph integrity, slippage/arbitrage performance, and compliance features are best framed through automated agents that ingest on-chain and off-chain data, run reproducible backtests, and produce explainable reports. Positioning this work under Market Intelligence and Competitive Intelligence emphasizes continuous collection, model-backed synthesis, and operational tooling, enabling objective, reproducible comparisons between Bitget and its peers.
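One of the comparison dimensions named above, slippage plus fees on mirrored trades, can be made concrete with a small calculation. The following is a minimal sketch, not any platform's actual method: the `Fill` record, the function names, and the idea of ranking platforms by median all-in cost are illustrative assumptions, and any input values would have to come from real trade logs.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Fill:
    """One copied trade (hypothetical record layout, not a real exchange schema)."""
    side: str            # "buy" or "sell"
    signal_price: float  # price quoted by the strategy being mirrored
    fill_price: float    # price the copier actually received
    fee_rate: float      # fee as a fraction of notional, e.g. 0.001 = 10 bps


def slippage_bps(fill: Fill) -> float:
    """Signed slippage in basis points; positive means a worse price for the copier."""
    if fill.side not in ("buy", "sell"):
        raise ValueError(f"unknown side: {fill.side!r}")
    # Paying more on a buy, or receiving less on a sell, both count as positive cost.
    direction = 1.0 if fill.side == "buy" else -1.0
    return direction * (fill.fill_price - fill.signal_price) / fill.signal_price * 10_000


def total_cost_bps(fill: Fill) -> float:
    """Slippage plus fee, both expressed in basis points of notional."""
    return slippage_bps(fill) + fill.fee_rate * 10_000


def rank_platforms(fills_by_platform: dict[str, list[Fill]]) -> list[tuple[str, float]]:
    """Return (platform, median all-in cost in bps), cheapest first."""
    costs = {
        name: median(total_cost_bps(f) for f in fills)
        for name, fills in fills_by_platform.items()
        if fills  # skip platforms with no observed fills
    }
    return sorted(costs.items(), key=lambda item: item[1])
```

The median is used here rather than the mean so that a single outlier fill does not dominate the ranking; that is a design choice for the sketch, and a fuller report would likely show the whole distribution.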

Tool Rankings – Top 6

LangChain: Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

Warp: Agentic Development Environment (ADE), a modern terminal and IDE with built-in AI agents to accelerate developer flows.

Cline: Open-source, client-side AI coding agent that plans, executes, and audits multi-step coding tasks.

OpenPipe: Managed platform to collect LLM interaction data, fine-tune models, evaluate them, and host optimized inference.

Cohere: Enterprise-focused LLM platform offering private, customizable models, embeddings, retrieval, and search.

LlamaIndex: Developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across enterprises.

Latest Articles (64)

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.

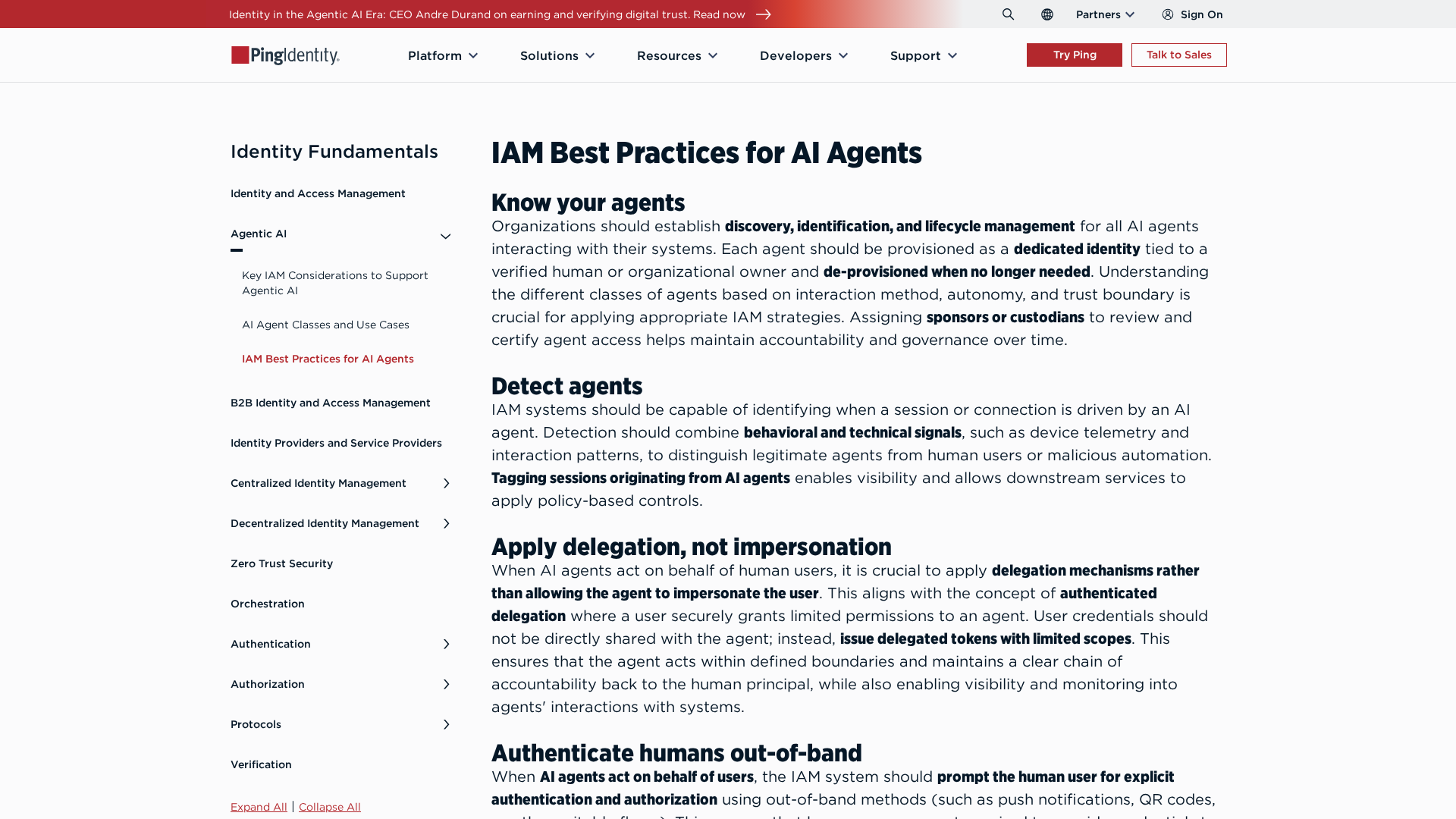

Best practices for securing AI agents with identity management, delegated access, least privilege, and human oversight.

A quick preview of POE-POE's pros and cons as seen in G2 reviews.

Meta may partner with Sify to lease a 500 MW Vishakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.