Topic Overview

This topic covers hardware, software and deployment patterns for sub‑millisecond to millisecond AI inference used in algorithmic trading, real‑time decisioning and edge vision. Demand for deterministic, low‑jitter inference has driven a mix of specialized accelerators, optimized compiler stacks, and edge and decentralized deployment models that prioritize latency, power efficiency and regulatory control.

Key players and categories: NVIDIA (enterprise GPU ecosystem and recent consolidation of optimization tooling after its May 2024 acquisition of Deci), Groq (deterministic, low‑latency inference accelerators), and alternative vendors such as Rebellions.ai, which focuses on energy‑efficient, GPU‑class chiplets/SoCs and server designs for high‑throughput inference. Complementary software and developer tools, exemplified by Warp’s Agentic Development Environment, accelerate model‑to‑production flows and reduce iteration time for latency tuning, profiling and observability.

Why it matters in 2026: trading and real‑time apps have tightened latency budgets while models have grown larger and more multimodal. That creates pressure to co‑design hardware, compilers and deployment topology (colocated on exchange‑proximate infrastructure, edge vision appliances, or decentralized clusters) to meet strict SLAs. Trends include accelerator heterogeneity, compiler and quantization advances, energy‑aware inference, and vendor consolidation of optimization stacks. Decentralized infrastructure is gaining attention for resilience and regulatory compliance, while edge vision platforms push some inference onto devices to avoid network hops.

Practitioners should weigh deterministic single‑chip latency, end‑to‑end jitter, power/throughput tradeoffs, and software ecosystem maturity when choosing among NVIDIA, Groq, Rebellions.ai and other alternatives for low‑latency trading and real‑time applications.
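One way to make the latency and jitter comparison concrete is to summarize measured end‑to‑end latencies as percentiles and check the tail against a budget. The sketch below is illustrative only: the function names (`percentile`, `summarize_latency`, `time_calls`), the choice of p99 minus p50 as the jitter figure, and the microsecond budget are assumptions made for this example, not definitions taken from any vendor or from the tools listed on this page.

```python
import math
import time
from typing import Callable, Dict, List


def percentile(sorted_samples: List[float], q: float) -> float:
    """Nearest-rank percentile (0 < q <= 100) of an ascending-sorted list."""
    if not sorted_samples:
        raise ValueError("no samples")
    rank = max(1, math.ceil(q * len(sorted_samples) / 100))
    return sorted_samples[rank - 1]


def summarize_latency(samples_us: List[float], budget_us: float) -> Dict[str, object]:
    """Summarize latency samples (microseconds) against a hypothetical p99 budget."""
    ordered = sorted(samples_us)
    p50 = percentile(ordered, 50)
    p99 = percentile(ordered, 99)
    return {
        "p50_us": p50,
        "p99_us": p99,
        "max_us": ordered[-1],
        # Assumption: jitter is reported here as the p99-to-median spread.
        "jitter_us": p99 - p50,
        "meets_budget": p99 <= budget_us,
    }


def time_calls(fn: Callable[[], object], n: int = 1000) -> List[float]:
    """Time n calls of fn and return per-call latency in microseconds."""
    samples = []
    for _ in range(n):
        start = time.perf_counter_ns()
        fn()
        samples.append((time.perf_counter_ns() - start) / 1000.0)
    return samples
```

In practice `fn` would wrap a full request path (input preparation, accelerator call, result handling), since single‑chip latency alone says little about end‑to‑end jitter. Running the same summary on each candidate platform under the same load gives comparable numbers.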

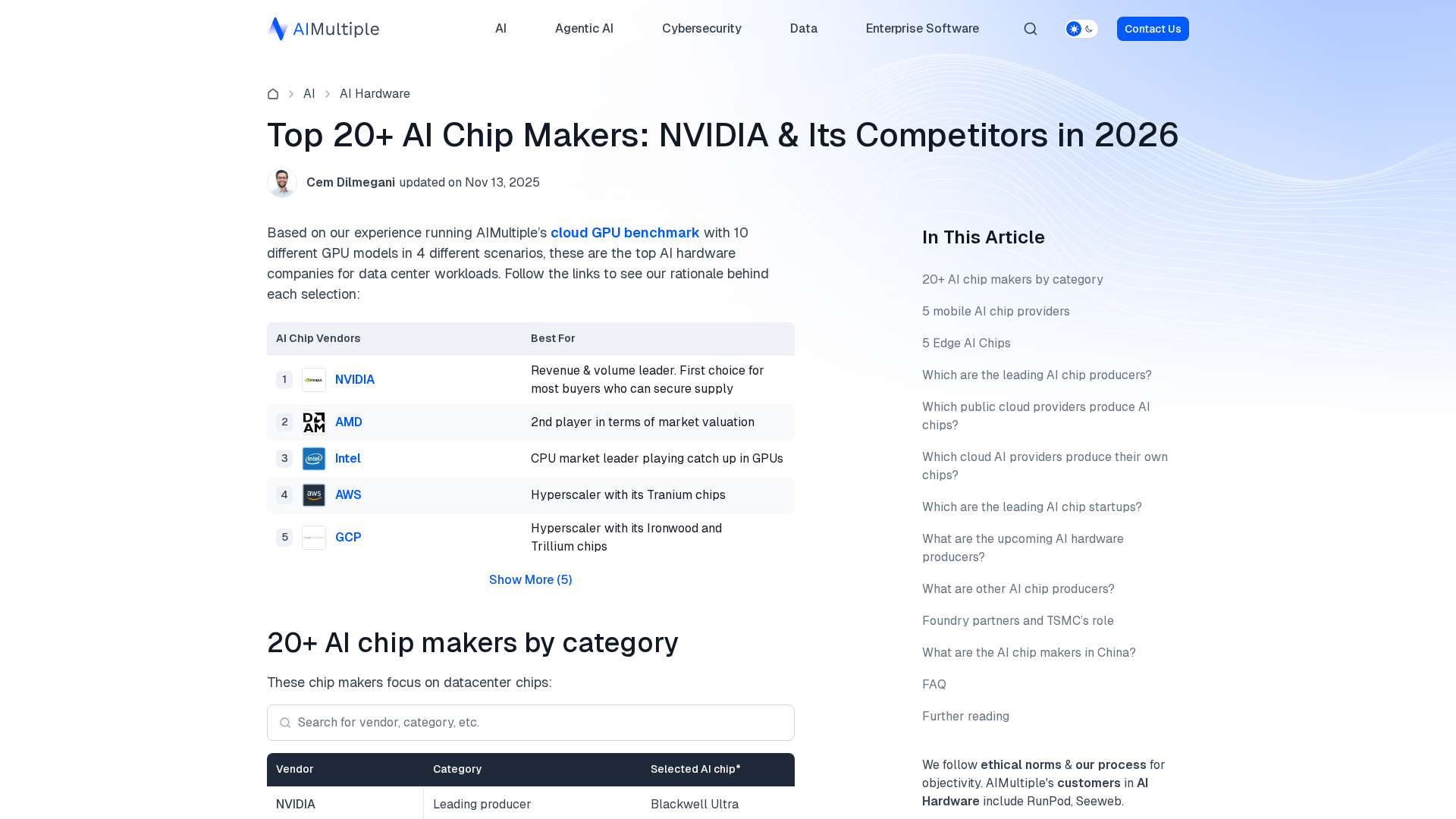

Tool Rankings – Top 3

Energy-efficient AI inference accelerators and software for hyperscale data centers.

Site audit of deci.ai showing NVIDIA takeover after May 2024 acquisition and absence of Deci-branded pricing.

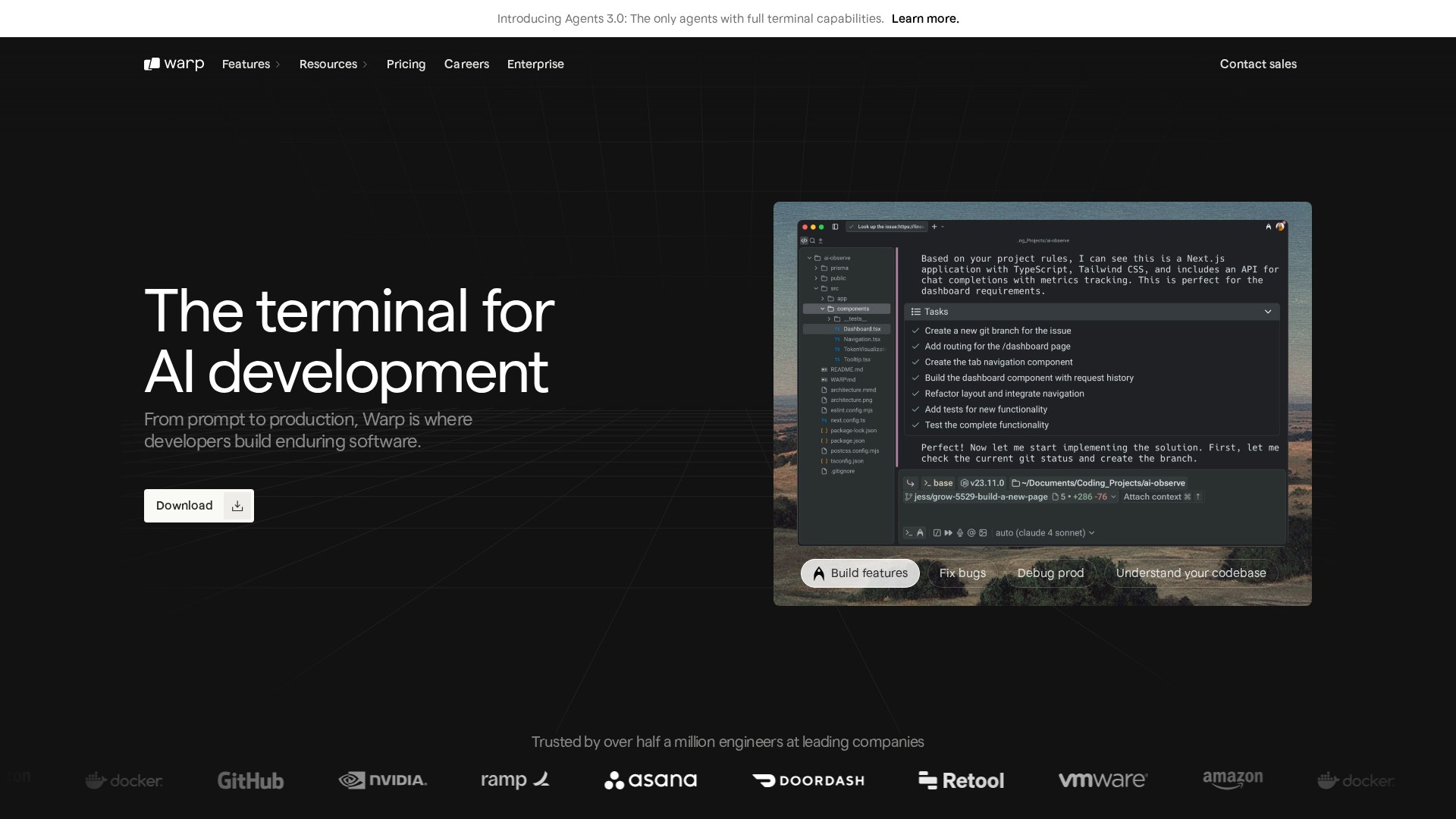

Agentic Development Environment (ADE) — a modern terminal + IDE with built-in AI agents to accelerate developer flows.

Latest Articles (13)

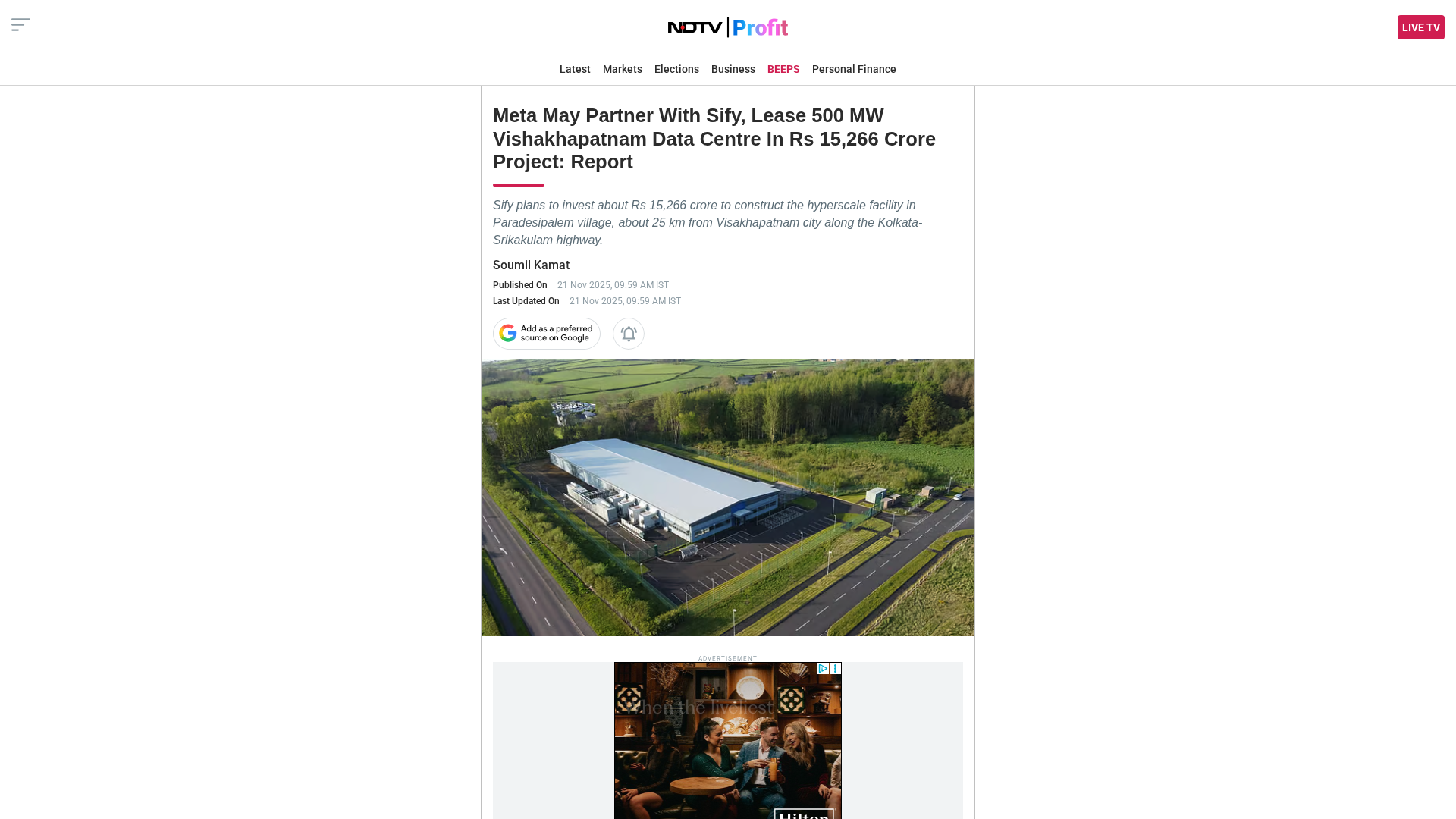

Meta and Sify plan a 500 MW hyperscale data center in Visakhapatnam with the Waterworth subsea cable landing.

Meta may partner with Sify to lease a 500 MW Visakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.

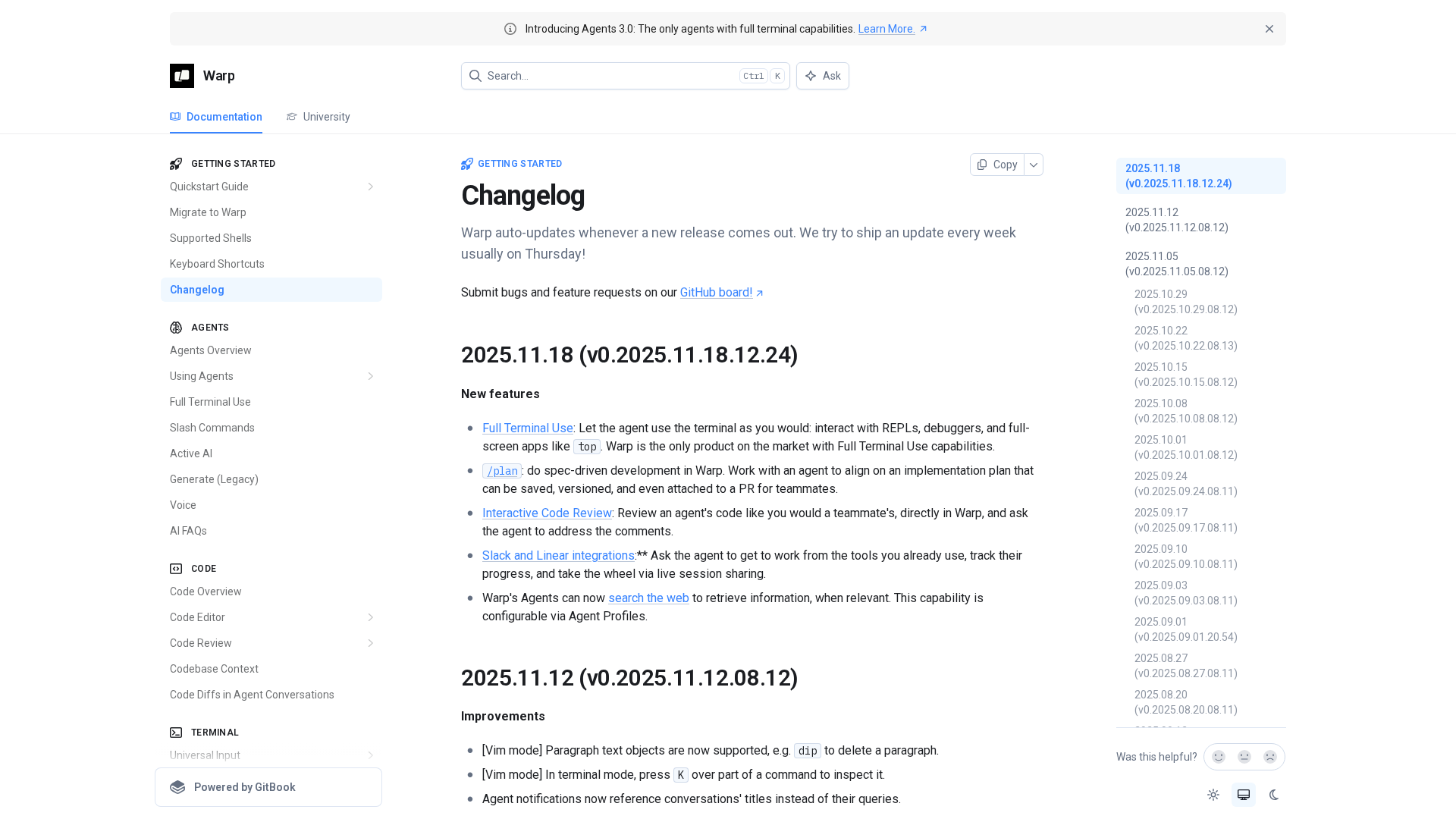

Warp’s weekly changelog highlights new Agent Mode features, improved stability, and cross-platform enhancements.

ProteanTecs expands in Japan with a new office and names Noritaka Kojima as GM and Country Manager.

Warp ADE combines IDE, CLI, and AI agents to code, review, and deploy—all in one secure development environment.