Topic Overview

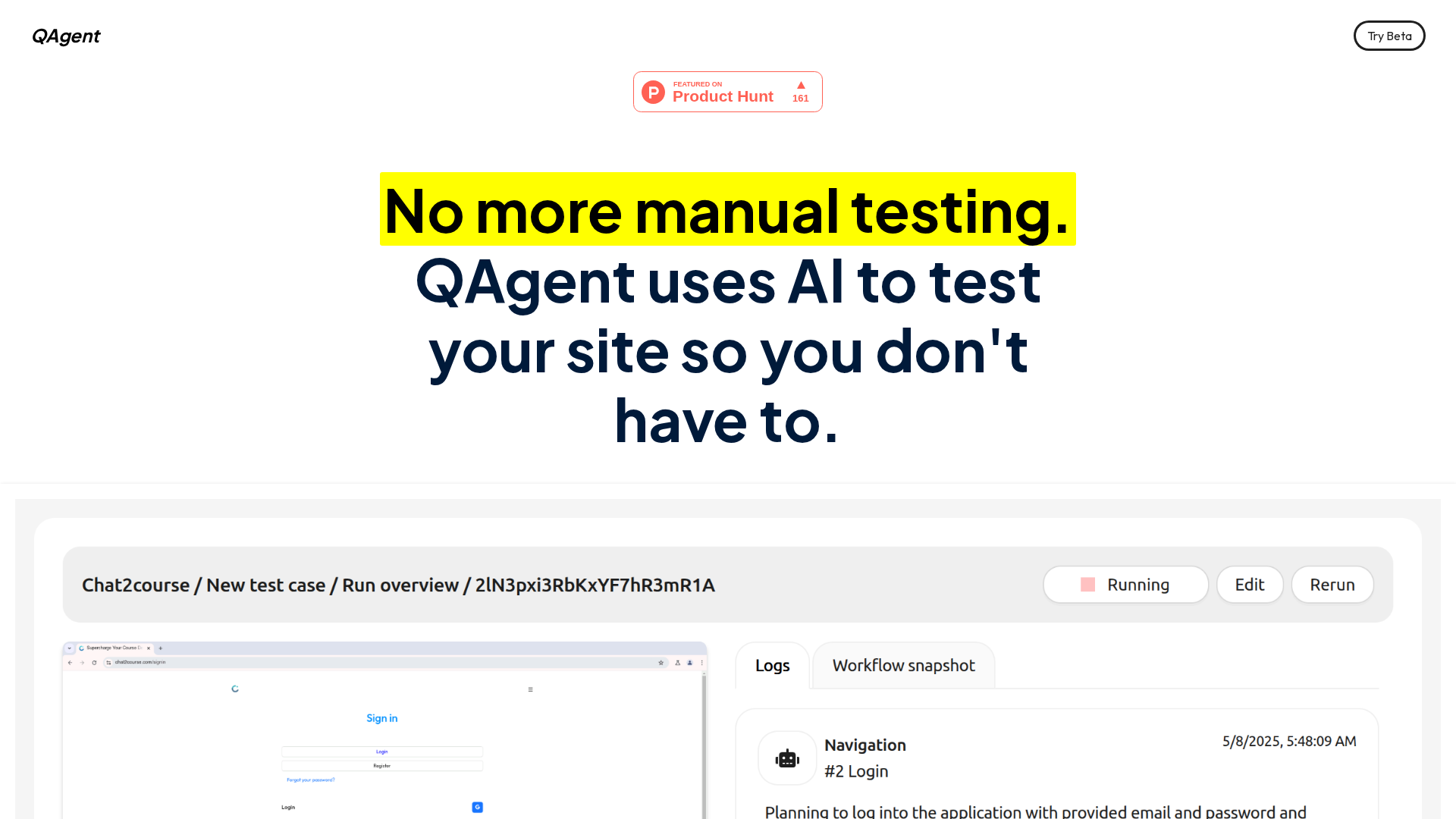

This topic covers the developer SDKs, agent frameworks, and tooling ecosystems used to build, test, and deploy generative AI agents in 2026. It compares vendor SDKs (OpenAI, Anthropic, Google) with third-party engineering platforms that add orchestration, state, and evaluation layers. The topic matters because of growing adoption of multi-agent workflows, enterprise requirements for governance and observability, and the shift toward hybrid hosting (cloud, private model serving, local-first).

Key categories include agent frameworks (LangChain's open-source stacks and LangGraph for stateful orchestration), AI agent marketplaces (connectors and reusable agent components), GenAI test automation (goal-based, adaptive testing as exemplified by QAgent), AI code generation and pair-programming tools (Aider, Tabby), and enterprise assistant platforms (Microsoft 365 Copilot, IBM watsonx Assistant, Kore.ai). Adept represents action-oriented agents that operate inside software interfaces to automate multistep workflows.

In practice, vendor SDKs differ in API primitives, safety controls, latency and hosting options, and model capabilities, while third-party SDKs focus on chaining, tool use, memory and state, evaluation, and deployment pipelines. Trends to note: composition-first development (tools and prompts treated as first-class artifacts), built-in testing and continuous evaluation of agentic behavior, increased emphasis on governance and observability for multi-agent orchestrations, and more self-hosted or hybrid deployments to meet privacy and compliance demands.

For teams choosing an SDK or framework, the priorities are interoperability with existing tool APIs, test automation for agent goals, and enterprise features (access controls, logging, and explainability). This comparison helps engineers and architects select the right mix of vendor SDKs and third-party tooling for reliable, auditable agent deployments.
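To make the third-party concerns named above (tool use, memory/state, and goal-based testing) more concrete, here is a minimal, framework-agnostic sketch in plain Python. It does not use or imitate the API of any SDK mentioned on this page. Every name in it (`AgentState`, `scripted_policy`, `run_agent`, `goal_reached`, and the two toy tools) is hypothetical, and the scripted policy stands in for what would normally be a model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical tool signature: one string argument in, one string result out.
Tool = Callable[[str], str]


@dataclass
class AgentState:
    """State carried across steps: the goal plus a log of (tool, arg, result)."""
    goal: str
    history: List[Tuple[str, str, str]] = field(default_factory=list)


def scripted_policy(state: AgentState) -> Tuple[str, str]:
    """Stand-in for a model call: chooses the next (tool, argument) from state."""
    if not state.history:
        return ("search", state.goal)
    if len(state.history) == 1:
        return ("summarize", state.history[-1][2])
    return ("finish", state.history[-1][2])


def run_agent(
    goal: str,
    tools: Dict[str, Tool],
    policy: Callable[[AgentState], Tuple[str, str]],
    max_steps: int = 5,
) -> AgentState:
    """Loop: ask the policy for an action, run the tool, record the result."""
    state = AgentState(goal=goal)
    for _ in range(max_steps):
        name, arg = policy(state)
        if name == "finish":
            # The final answer is stored as the result of the finish step.
            state.history.append((name, arg, arg))
            break
        result = tools[name](arg)
        state.history.append((name, arg, result))
    return state


# Toy tools; a real deployment would wrap existing tool APIs here.
TOOLS: Dict[str, Tool] = {
    "search": lambda query: f"3 documents found for '{query}'",
    "summarize": lambda text: f"Summary: {text}",
}


def goal_reached(state: AgentState, required_tools: List[str]) -> bool:
    """Goal-based check: asserts on the outcome and tools used, not exact wording."""
    used = [name for name, _, _ in state.history]
    finished = bool(used) and used[-1] == "finish"
    return finished and all(tool in used for tool in required_tools)


final_state = run_agent("agent SDK comparison", TOOLS, scripted_policy)
```

The check in `goal_reached` inspects which tools ran and whether the run finished, which is one simple way to test an agent whose exact output text may vary between runs. The recorded `history` also doubles as a basic audit log, the kind of trace that governance and observability features build on.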

Tool Rankings – Top 6

Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

AI assistant integrated across Microsoft 365 apps to boost productivity, creativity, and data insights.

Enterprise virtual agents and AI assistants built with watsonx LLMs for no-code and developer-driven automation.

Enterprise AI agent platform for building, deploying and orchestrating multi-agent workflows with governance, observabil

Open-source AI pair-programming tool that runs in your terminal and browser, pairing your codebase with LLM copilots to:


Open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first/cloud deployment.

Latest Articles (92)

A comprehensive comparison and buying guide to 14 AI governance tools for 2025, with criteria and vendor-specific strengths.

An AI assistant for enhanced Q&A and automation.

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.

In-depth look at Gemini 3 Pro benchmarks across reasoning, math, multimodal, and agentic capabilities with implications for building AI agents.
