Topic Overview

This topic examines how hardware constraints and deployment choices shape LLM design, performance and operational trade‑offs — contrasting on‑device/edge models with cloud‑hosted systems. On‑device and local‑first approaches (enabled by tools like Tabby and Cline) prioritize low latency, data locality, and offline operation through model compression, quantization and NPU/GPU‑aware runtimes. Cloud‑hosted and enterprise platforms (exemplified by Harvey and large provider stacks) favor larger models, centralized data management, and easier lifecycle governance, with platforms such as Qodo addressing code/test governance across distributed SDLCs. Relevance in late 2025 stems from two converging trends: wider availability of mobile and embedded NPUs that make multi‑bit quantized LLMs practical on edge devices, and continued consolidation and hardware optimization in the cloud (notably the NVIDIA alignment after Deci.ai’s 2024 acquisition), which pushes specialized compiler/runtime toolchains for high‑throughput inference. Decentralized infrastructure projects (e.g., Tensorplex Labs) are introducing alternative deployment topologies that combine staking and resource marketplaces, adding new considerations for trust, latency and cost predictability. Key evaluation dimensions include latency, throughput, energy per token, memory footprint, model accuracy under pruning/quantization, privacy/regulatory requirements, and operational complexity (orchestration, updates, observability). Practical comparisons require hardware‑aware benchmarks (Tensor cores, NPUs, mobile accelerators), optimized runtimes (TensorRT, ONNX/MLIR toolchains), and governance controls for multi‑tenant or decentralized environments. In short, the right deployment depends on workload characteristics (real‑time vs batch), domain constraints (privacy, compliance), and the available hardware/software stack — a decision increasingly shaped by edge‑first tooling, cloud GPU economies, and emerging decentralized platforms.

Tool Rankings – Top 6

Domain-specific AI platform delivering Assistant, Knowledge, Vault, and Workflows for law firms and professionalservices

.avif)

Open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first/cloud deployment.

AI-native IDE and agentic coding platform (Windsurf Editor) with Cascade agents, live previews, and multi-model support.

Open-source, client-side AI coding agent that plans, executes and audits multi-step coding tasks.

Site audit of deci.ai showing NVIDIA takeover after May 2024 acquisition and absence of Deci-branded pricing.

Open-source, decentralized AI infrastructure combining model development with blockchain/DeFi primitives (staking, cross

Latest Articles (48)

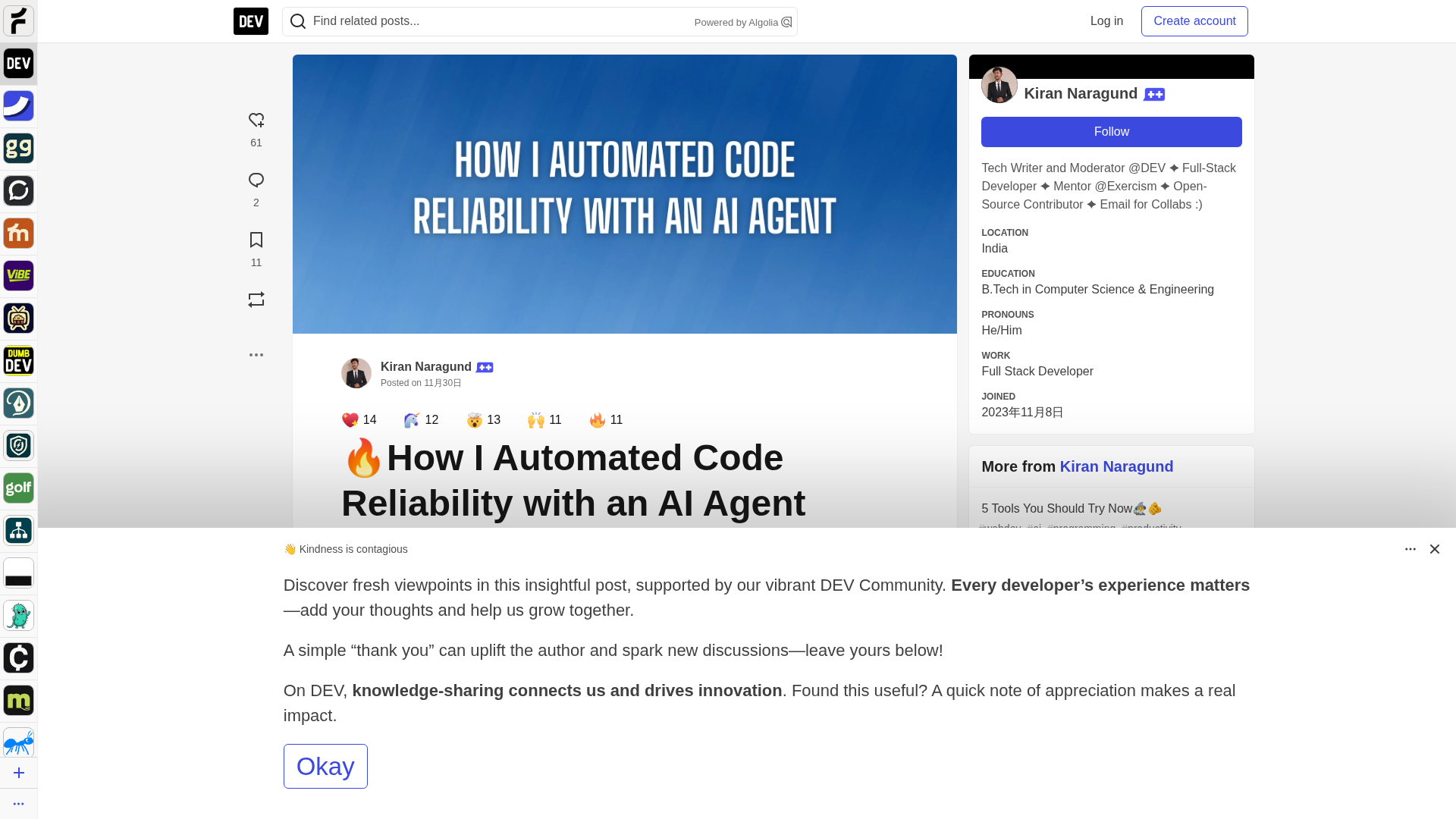

A step-by-step guide to building an AI-powered Reliability Guardian that reviews code locally and in CI with Qodo Command.

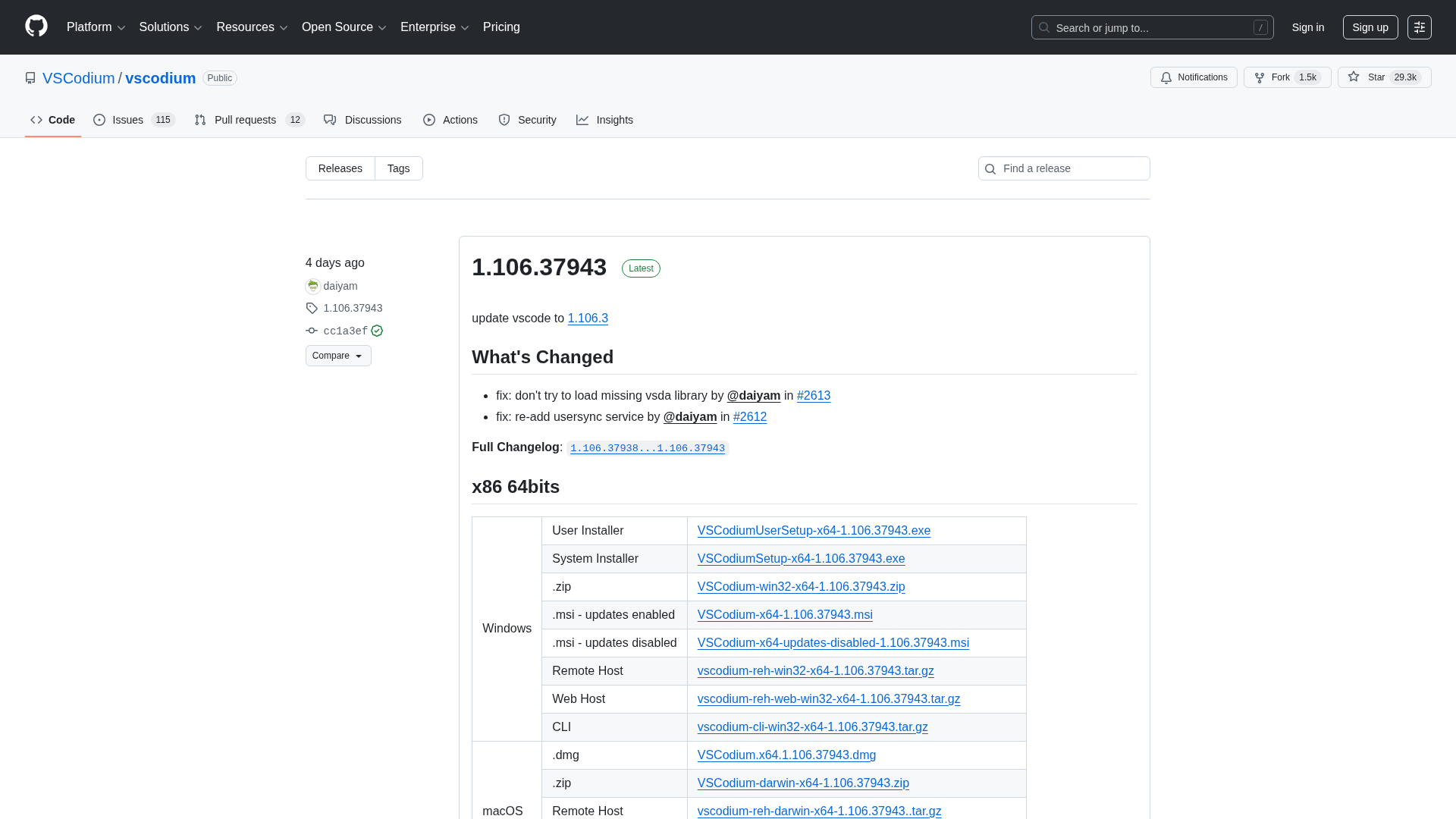

A comprehensive releases page for VSCodium with multi-arch downloads and versioned changelogs across 1.104–1.106 revisions.

A developer chronicles switching to Zed on Linux, prototyping on a phone, and a late-night video correction.

Qodo ranks highest for Codebase Understanding by Gartner, highlighting cross-repo context as essential for scalable AI development.

AWS commits $50B to expand AI/HPC capacity for U.S. government, adding 1.3GW compute across GovCloud regions.