Topic Overview

On-device and local AI inference and optimization toolkits cover the software, runtimes, model formats, and orchestration layers that let machine learning models run with low latency, reduced cloud dependence, and stronger data control. The topic spans edge vision platforms that fit neural networks onto NPUs and mobile SoCs, decentralized infrastructure that coordinates agentic workloads across devices, and AI data platforms that prepare and surface local context for private inference.

As of 2026, demand for local inference is driven by privacy and compliance requirements, latency-sensitive applications, and the maturing capability of smaller, instruction-tuned models. Key building blocks include model quantization and compilation, lightweight runtime stacks (WASM, ONNX/TFLite/MLC-style backends), on-device context plumbing, and observability and orchestration for distributed agents.

Representative tools illustrate these roles: Stable Code provides edge-ready, instruction-tuned code LLMs for private, fast code completion; JetBrains AI Assistant brings context-aware generation and refactorings inside the IDE; EchoComet focuses on assembling and processing code context entirely on the developer’s device to avoid sending sensitive project data to remote servers; MindStudio offers a no-code/low-code visual platform to design, test, deploy, and operate AI agents with enterprise controls; and Xilos targets enterprise orchestration and visibility for agentic AI across connected services. Together these tool types address complementary needs: model and runtime optimization for performance, developer UX and local context handling for accuracy and privacy, and infrastructure for governance and lifecycle management. That combination makes on-device inference a practical option across edge vision, decentralized AI, and data platform use cases.
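Of the building blocks named above, quantization is the easiest to illustrate in a few lines. The sketch below shows symmetric per-tensor int8 quantization in plain Python. The function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical and chosen only for illustration; they are not taken from any of the tools listed here, and production toolkits typically add per-channel scales, calibration data, and hardware-specific kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


# Hypothetical weights, for illustration only.
weights = [0.42, -1.27, 0.05, 0.88]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# Worst-case rounding error is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error no larger than `scale / 2` per value. That trade between memory footprint and accuracy is what makes smaller models practical on NPUs and mobile SoCs.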

Tool Rankings – Top 5

Edge-ready code language models for fast, private, and instruction‑tuned code completion.

In‑IDE AI copilot for context-aware code generation, explanations, and refactorings.

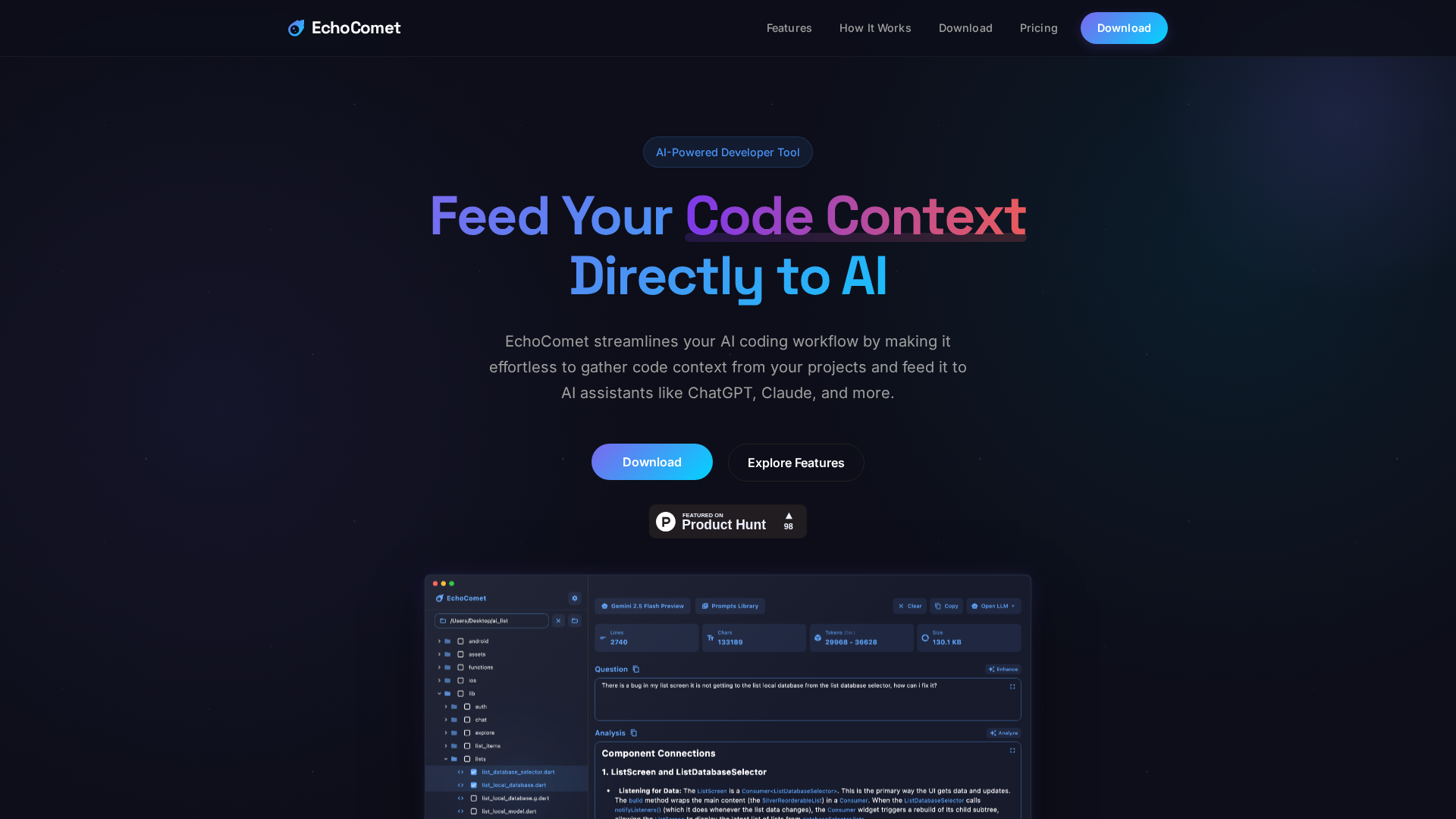

Feed your code context directly to AI

No-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

Intelligent Agentic AI Infrastructure

Latest Articles (23)

EchoComet lets you gather code context locally and feed it to AI with large-context prompts for smarter, private AI assistance.

EchoComet's contact page offers fast support, license recovery, and details on device limits for macOS.

OpenAI’s bypass moment underscores the need for governance that survives inevitable user bypass and hardens system controls.

A call to enable safe AI use at work via sanctioned access, real-time data protections, and frictionless governance.

Explores the human role behind AI automation and how Bell Cyber tackles AI hallucinations in security operations.