Topic Overview

This topic covers the evolving ecosystem of AI inference accelerators and dedicated hardware platforms in the wake of major vendor consolidation (notably the NVIDIA/Groq deal). It examines purpose-built silicon, server designs, and accompanying software stacks for high-throughput, energy-efficient inference. It also covers alternative decentralized and edge-focused approaches that change where and how models run.

Relevance and timing: as of 2026, the market is settling into a new competitive posture following acquisitions and technology mergers. A site audit shows that Deci.ai was folded into NVIDIA after a May 2024 acquisition and that its domain now hosts NVIDIA-branded content. This consolidation is prompting suppliers and customers to reassess the tradeoffs among throughput, power efficiency, software portability, and vendor lock-in. At the same time, growth in multimodal LLMs and edge vision use cases is increasing demand for accelerator diversity, from hyperscale chiplets and SoCs to compact vision inference modules.

Key tools and categories: Rebellions.ai offers energy-efficient inference accelerators (chiplets, SoCs, servers) plus a GPU-class software stack aimed at hyperscalers and high-throughput LLM/multimodal inference. Tensorplex Labs represents the decentralized infrastructure trend: an open, blockchain/DeFi-linked stack for model development, staking, and distributed hosting that targets resilience and alternative monetization. The Deci.ai audit underscores how large incumbents absorb niche tooling and integrate its models and software into larger platforms.

Taken together, the landscape is defined by three converging trends: specialization of inference hardware for efficiency and latency, software stacks that bridge accelerator heterogeneity, and decentralized/edge deployments that redistribute compute away from centralized clouds. Buyers should evaluate power and throughput metrics, software compatibility, and governance/monetization models when selecting platforms.
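Comparing power and throughput across platforms usually comes down to one ratio: sustained throughput divided by average power draw, which for LLM inference gives tokens per joule (1 W = 1 J/s). The following is a minimal Python sketch. It assumes you have already measured both figures on the same model, precision, and batch size for every candidate. The `AcceleratorProfile` class, the `rank_by_efficiency` helper, and all numbers are hypothetical placeholders. They are not data for Rebellions, NVIDIA, or any other vendor.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorProfile:
    """Hypothetical benchmark summary for one inference platform."""
    name: str
    tokens_per_second: float  # sustained generation throughput on a fixed workload
    avg_power_watts: float    # average power draw measured during that same run

    @property
    def tokens_per_joule(self) -> float:
        # 1 W = 1 J/s, so (tokens/s) / (J/s) = tokens/J
        return self.tokens_per_second / self.avg_power_watts


def rank_by_efficiency(profiles):
    """Return platforms sorted from most to least energy-efficient (tokens per joule)."""
    return sorted(profiles, key=lambda p: p.tokens_per_joule, reverse=True)


if __name__ == "__main__":
    # Placeholder figures for illustration only; not measurements of any real product.
    candidates = [
        AcceleratorProfile("platform_a", tokens_per_second=12_000, avg_power_watts=700),
        AcceleratorProfile("platform_b", tokens_per_second=9_000, avg_power_watts=350),
    ]
    for p in rank_by_efficiency(candidates):
        print(f"{p.name}: {p.tokens_per_joule:.1f} tokens/J")
```

With these placeholder numbers, the lower-throughput `platform_b` ranks first (about 25.7 vs. 17.1 tokens/J), which shows why raw throughput alone can mislead. The ratio says nothing about software compatibility or governance, so those criteria still need a separate review.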

Tool Rankings – Top 3

Rebellions.ai: Energy-efficient AI inference accelerators and software for hyperscale data centers.

Tensorplex Labs: Open-source, decentralized AI infrastructure combining model development with blockchain/DeFi primitives (staking, cross

Deci.ai: Site audit of deci.ai showing the NVIDIA takeover after the May 2024 acquisition and the absence of Deci-branded pricing.

Latest Articles (22)

AWS commits $50B to expand AI/HPC capacity for the U.S. government, adding 1.3 GW of compute across GovCloud regions.

How AI agents can automate and secure decentralized identity verification on blockchain-enabled systems.

Passage cuts GPU cloud costs by up to 70% using Akash's open marketplace, enabling immersive Unreal Engine 5 events.

ProteanTecs expands in Japan with a new office and Noritaka Kojima as GM Country Manager.

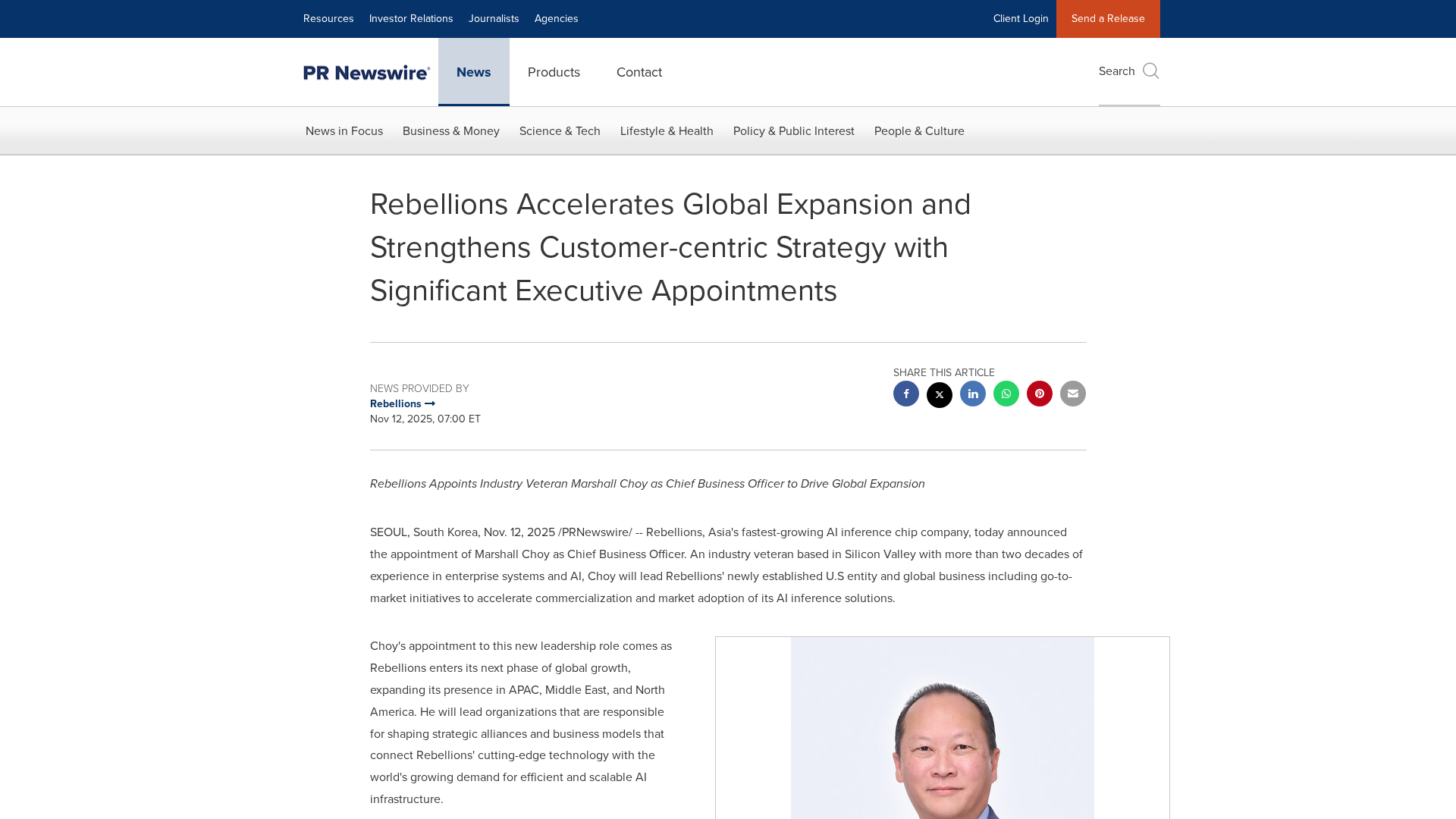

Rebellions appoints Marshall Choy as Chief Business Officer (CBO) to drive global expansion and establish a U.S. market hub.