Topic Overview

Automated AI behavioral evaluation frameworks are toolchains and test suites that exercise, measure, and monitor how large language models and agentic systems behave across safety, reliability, and functional metrics. This topic examines Anthropic’s Bloom approach (as referenced in industry comparisons) alongside alternative evaluation strategies used by engineering platforms and enterprise vendors. Relevance in 2025 stems from broad LLM deployment in customer service, productivity suites, and autonomous agents, plus growing regulatory and procurement requirements for demonstrable safety, reproducibility, and continuous testing. Teams now need automated, CI-integrated checks for hallucination rates, instruction-following, tool-use safety, prompt-injection resilience, latency/scale tradeoffs, and conversational QA. Key players and categories: Anthropic’s Claude family (developer and conversational assistants) illustrates the kinds of models evaluation frameworks target; LangChain provides an open engineering stack and evaluation modules to build, debug, and automate behavioral tests for agentic workflows; Observe.AI and Yellow.ai represent enterprise platforms that combine monitoring, real-time QA, and post-deployment behavioral telemetry for contact centers and CX/EX automation; Microsoft 365 Copilot exemplifies productivity-integrated assistants that require application-level testing and governance. Practically, comparisons focus on test coverage (safety vs. functional), automation and CI/CD support, observability in production, and extensibility to multimodal/agentic behavior. Effective frameworks combine scenario libraries, adversarial and red-team tests, metrics pipelines, and deployment hooks. Choosing between Anthropic Bloom-style tooling and LangChain-based or vendor-integrated alternatives depends on required depth of behavioral specification, integration with existing agent runtimes, and enterprise monitoring needs.

Tool Rankings – Top 5

Anthropic's Claude family: conversational and developer AI assistants for research, writing, code, and analysis.

Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

Enterprise conversation-intelligence and GenAI platform for contact centers: voice agents, real-time assist, auto QA, &洞

Enterprise agentic AI platform for CX and EX automation, building autonomous, human-like agents across channels.

AI assistant integrated across Microsoft 365 apps to boost productivity, creativity, and data insights.

Latest Articles (77)

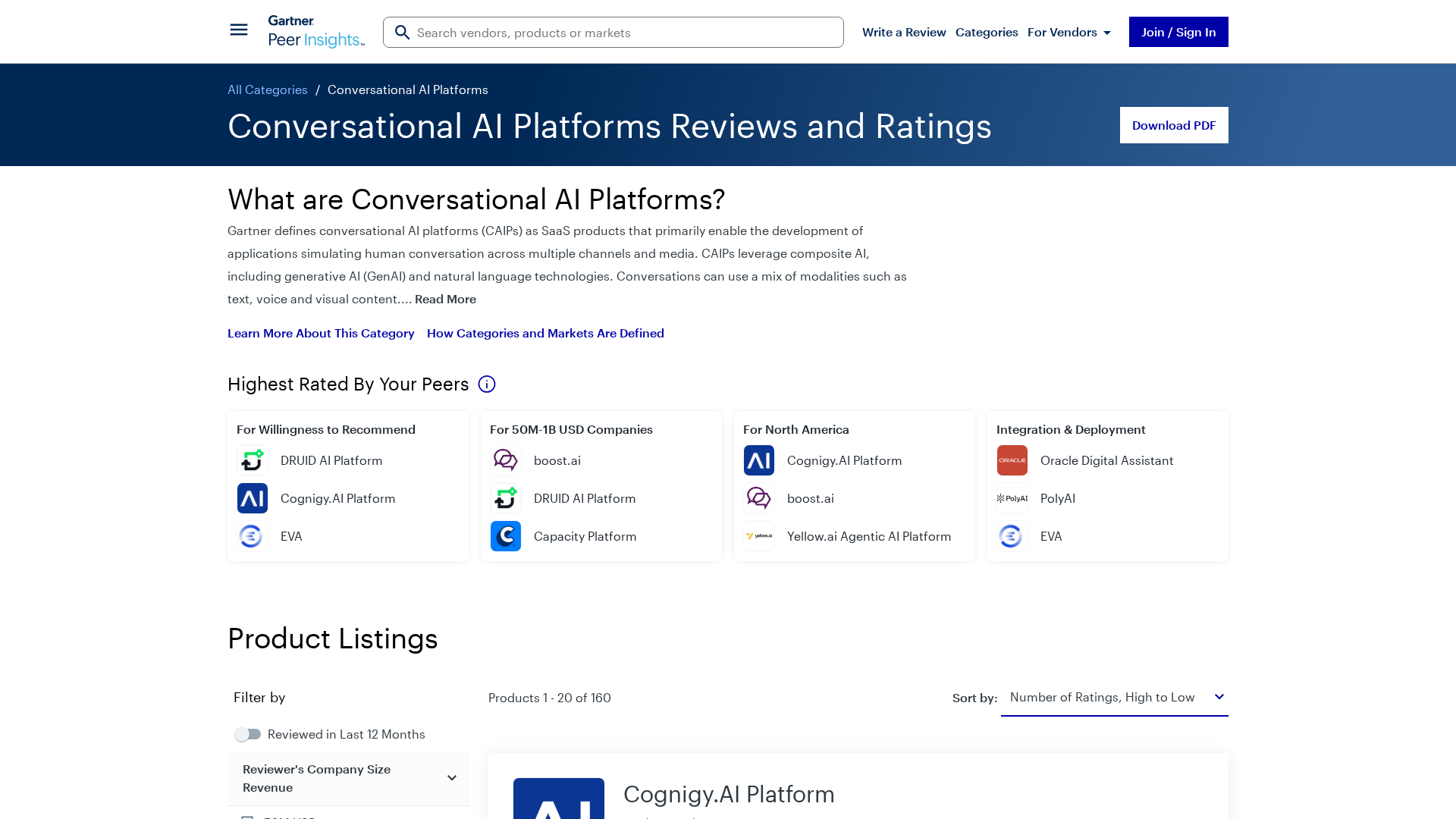

Gartner’s market view on conversational AI platforms, outlining trends, vendors, and buyer guidance.

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.

Cannot access the article content due to an access-denied error, preventing summarization.

A practical, step-by-step guide to fine-tuning large language models with open-source NLP tools.

A quick preview of POE-POE's pros and cons as seen in G2 reviews.