Topic Overview

This topic covers the AI platforms and agent frameworks used to automate materials-science workflows and laboratory operations, from literature retrieval and experiment design to instrument control and closed-loop optimization. As of 2026-02-02, the field is characterized by tighter integration between large language models (LLMs), agent orchestration layers, specialized compute clouds, and automated data-curation pipelines. This integration enables faster iteration on materials hypotheses while improving reproducibility and traceability.

Key platform categories include developer frameworks (e.g., LangChain) for building, observing, and deploying LLM-powered agents; no-code/low-code visual builders (e.g., MindStudio, IBM watsonx Assistant) for assembling multi-agent automations and virtual lab assistants; enterprise LLM and embedding services (e.g., Cohere) for secure text generation and retrieval; open-source instruction models (e.g., nlpxucan/WizardLM) for domain adaptation and local fine-tuning; and infrastructure/acceleration providers (e.g., Together AI) for scalable training and inference. Data platforms such as DatologyAI automate the conversion of raw experimental records into model-ready datasets, while agentic systems like Adept focus on software-level action (interacting with lab management systems and GUI tools). StationOps represents emerging AI-driven DevOps capabilities for deploying these stacks on cloud providers.

Practical use cases include automated protocol retrieval via embeddings, active-learning loops for high-throughput experiments, multi-agent orchestration of planning and execution steps, and automated dataset curation for model training. Current trends emphasize end-to-end pipelines that combine secure enterprise LLMs, reproducible data curation, and agentic interfaces to lab systems, with a focus on pragmatic, auditable automation that does not overstate near-term capabilities.
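To make the "protocol retrieval via embeddings" use case concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not any vendor's API: the `embed` function is a toy bag-of-words stand-in for a real embedding model (such as one served by an enterprise embedding service), and the `PROTOCOLS` entries, function names, and query are all hypothetical. The ranking step, cosine similarity between a query vector and each stored protocol vector, is the part that carries over to a real system.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term-count vector.

    Assumption: a real pipeline would call a learned embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, protocols, top_k=1):
    """Return the names of the top_k protocols most similar to the query."""
    q = embed(query)
    scored = [(cosine(q, embed(text)), name) for name, text in protocols.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Hypothetical protocol library, for illustration only.
PROTOCOLS = {
    "sol-gel": "sol gel synthesis of silica thin films with spin coating",
    "xrd": "powder x ray diffraction measurement and phase identification",
    "anneal": "thermal annealing of thin films in a tube furnace",
}

print(retrieve("how to run x ray diffraction on a powder", PROTOCOLS))
```

With the toy data above, the query shares the most terms with the `xrd` entry, so that protocol ranks first. Swapping `embed` for a learned model would change the vectors but leave the retrieval loop unchanged.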

Tool Rankings – Top 6

Enterprise virtual agents and AI assistants built with watsonx LLMs for no-code and developer-driven automation.

An open-source framework and platform to build, observe, and deploy reliable AI agents.

Enterprise-focused LLM platform offering private, customizable models, embeddings, retrieval, and search.

Open-source family of instruction-following LLMs (WizardLM/WizardCoder/WizardMath) built with Evol-Instruct, focused on

No-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

A full-stack AI acceleration cloud for fast inference, fine-tuning, and scalable GPU training.

Latest Articles (90)

A comprehensive comparison and buying guide to 14 AI governance tools for 2025, with criteria and vendor-specific strengths.

A guided look at StationOps' internal Dev Platform for AWS, which enables governed, self-serve environments at scale.

An AWS-centric internal developer platform comparison between StationOps and Netlify.

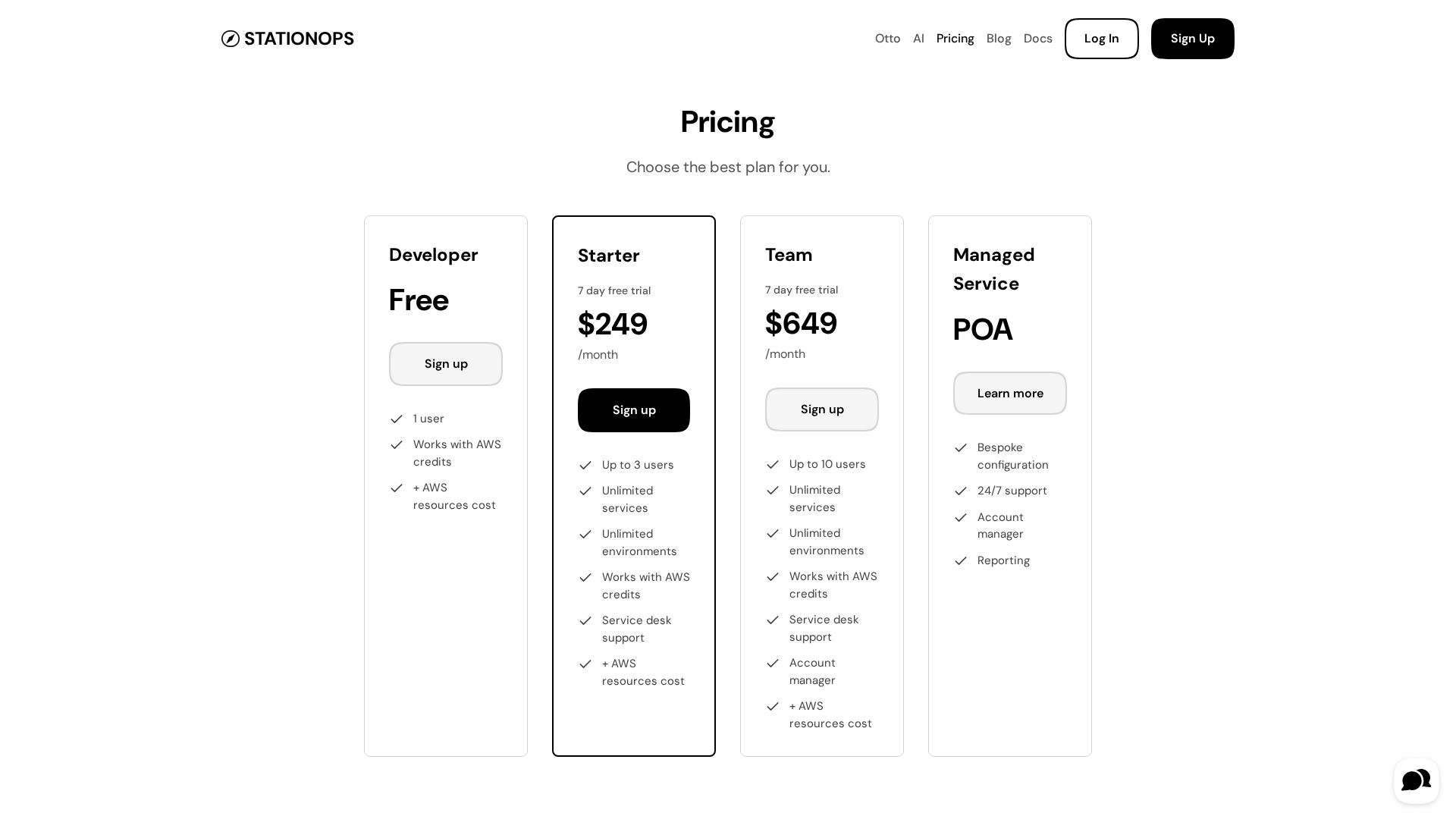

Pricing details for StationOps' AWS Internal Developer Platform, including tiers and features.

A concise look at how an internal developer platform on AWS accelerates delivery with governance and self-service.