Topic Overview

This topic covers vector databases and long-term memory solutions that let LLMs persist, index, and retrieve semantic context across sessions. Modern AI systems use embeddings and vector search as the backbone of "memory": they store documents, notes, and agent experiences as dense vectors, then retrieve the most relevant entries to ground model outputs or drive retrieval-augmented generation (RAG) workflows. As of 2026-01-16, the space emphasizes standardized memory interfaces, hybrid local/cloud architectures, and combinations of graph and vector approaches.

Key tools fall into three groups. Managed vector services (Pinecone) and open-source engines (Milvus, Qdrant, Weaviate) provide scalable nearest-neighbor search. Application databases such as Chroma add document storage and full-text search alongside embeddings. Specialized MCP (Model Context Protocol) memory servers and connectors expose read/write memory APIs to models and agents.

Examples: Qdrant and Chroma have MCP server implementations that serve semantic memory; Cognee blends graph databases with vector search for GraphRAG workflows; mcp-memory-service offers a production hybrid memory store with fast local reads, cloud sync, and a lock-free design; Basic Memory provides a local-first Markdown knowledge graph; Neo4j and Context Portal expose structured graph context via MCP; and Graphlit focuses on ingesting Slack, email, and web content into a searchable project graph.

Factors to weigh when choosing: managed versus self-hosted control, scale and latency, hybrid local/cloud synchronization, graph-augmented retrieval, and MCP compatibility for composability. The right choice depends on whether you prioritize multi-source ingestion and graph reasoning, strict data locality, operational simplicity, or maximum performance on large vector indexes.
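The embed, store, and retrieve loop described above can be sketched in a few lines. This is a minimal illustration, not any particular product's implementation: the `embed` function is a hypothetical stand-in (token hashing into fixed buckets) for a real embedding model, and search is brute-force cosine similarity, whereas engines like Qdrant or Milvus use approximate indexes to scale.

```python
import math
import re
import zlib
from dataclasses import dataclass, field


def embed(text: str, dim: int = 1024) -> list[float]:
    """Hypothetical toy embedding: hash each lowercase token into one of
    `dim` buckets, then L2-normalize. A real system calls an embedding model."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero on empty text
    return [x / norm for x in vec]


@dataclass
class VectorMemory:
    """Brute-force vector memory: keep (text, vector) pairs, rank by cosine similarity."""
    dim: int = 1024
    items: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.items.append((text, embed(text, self.dim)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query, self.dim)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(text, sum(a * b for a, b in zip(q, vec))) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


memory = VectorMemory()
for note in [
    "The user prefers dark mode in the editor",
    "Quarterly revenue grew 12 percent",
    "Deploy the service with Docker Compose",
]:
    memory.add(note)

hits = memory.search("which editor theme does the user like", k=2)
context = "\n".join(text for text, _ in hits)  # text to prepend to the model's prompt
```

The retrieved `context` is what gets injected into the prompt to ground the next generation; persisting `items` (or handing them to a vector database) is what turns this into memory that survives across sessions.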

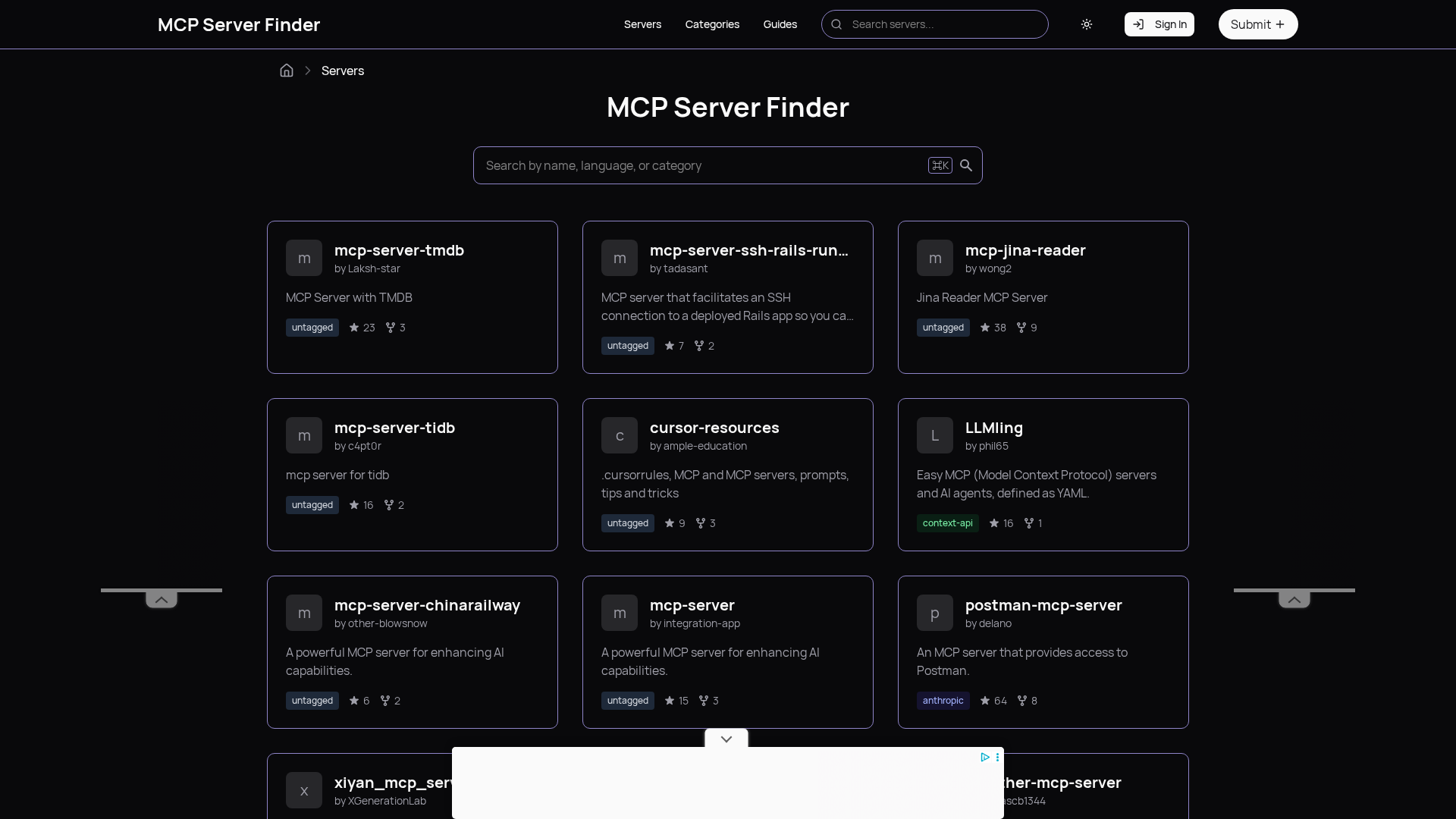

MCP Server Rankings – Top 8

Qdrant: Implements a semantic memory layer on top of the Qdrant vector search engine.

Chroma: Embeddings, vector search, document storage, and full-text search in the open-source AI application database.
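Having both full-text and vector search in one store invites hybrid retrieval: run each search separately, then merge the ranked lists. The sketch below uses reciprocal rank fusion (RRF), a common fusion technique where each document scores the sum of `1 / (k + rank)` over the lists it appears in. It does not use Chroma's API; `keyword_ranking` is a deliberately crude full-text scorer, and `vector_hits` is a hypothetical ranking assumed to come from an embedding search.

```python
import re


def keyword_ranking(docs: dict[str, str], query: str) -> list[str]:
    """Crude full-text ranking: order doc ids by how many distinct query
    terms they contain; documents with no matching term are dropped."""
    terms = set(re.findall(r"\w+", query.lower()))
    scores = {}
    for doc_id, text in docs.items():
        hits = len(terms & set(re.findall(r"\w+", text.lower())))
        if hits:
            scores[doc_id] = hits
    return sorted(scores, key=lambda d: (-scores[d], d))


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    fused: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused.items(), key=lambda kv: (-kv[1], kv[0]))


docs = {
    "a": "Error code E42 appears when the disk quota is exceeded",
    "b": "Storage limits: what happens when you run out of space",
    "c": "How to rotate API keys",
}
query = "E42 disk quota"
text_hits = keyword_ranking(docs, query)  # exact terms like "E42" favor doc "a"
vector_hits = ["b", "a", "c"]             # hypothetical output of an embedding search
fused = reciprocal_rank_fusion([text_hits, vector_hits])
```

Exact identifiers such as error codes are where full-text search beats embeddings, while paraphrases ("run out of space") are where embeddings win; RRF needs only ranks, so the two score scales never have to be calibrated against each other.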

Cognee: A GraphRAG memory server with customizable ingestion, data processing, and search.
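GraphRAG-style retrieval typically combines the two approaches: a similarity search picks seed nodes, and graph traversal then pulls in connected facts the query never mentions. The following is a minimal sketch of that pattern, not Cognee's pipeline; `jaccard` is a hypothetical stand-in for embedding similarity, and the node and edge data are illustrative.

```python
import re
from collections import deque


def jaccard(a: str, b: str) -> float:
    """Token-set overlap; a hypothetical stand-in for embedding similarity."""
    sa = set(re.findall(r"\w+", a.lower()))
    sb = set(re.findall(r"\w+", b.lower()))
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def graph_rag_context(query, nodes, edges, top_k=1, hops=1):
    """1) score every node against the query (the "vector" step),
    2) keep the top_k as seeds, 3) expand along edges up to `hops` away
    with breadth-first search. Returns node ids in discovery order."""
    seeds = sorted(nodes, key=lambda n: jaccard(query, nodes[n]), reverse=True)[:top_k]
    order, visited = list(seeds), set(seeds)
    frontier = deque((s, 0) for s in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue  # do not expand past the hop limit
        for neighbor in edges.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                order.append(neighbor)
                frontier.append((neighbor, depth + 1))
    return order


nodes = {
    "alice": "Alice leads the payments team",
    "payments": "The payments service handles card transactions",
    "ledger": "Ledger database stores transaction history",
    "hr": "HR policy for vacation requests",
}
edges = {"alice": ["payments"], "payments": ["ledger"]}
query = "who leads the payments team"
context_1hop = graph_rag_context(query, nodes, edges, hops=1)
context_2hop = graph_rag_context(query, nodes, edges, hops=2)
```

With two hops the context reaches the ledger node, which shares no words with the query; that reachability through relationships is the main thing graph augmentation adds over a plain top-k vector search.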

mcp-memory-service: A production-ready MCP memory service with zero locks, a hybrid backend, and semantic memory search.
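A hybrid backend generally means reads are served from a fast local copy while writes are synchronized to a shared cloud copy later. The sketch below illustrates that split with a deterministic last-writer-wins merge (a logical clock plus a replica id as tie-breaker), so replicas converge without a central lock. It is an assumption-laden illustration, not mcp-memory-service's actual design: it is single-threaded, does not model concurrency, and the `cloud` dict is a hypothetical stand-in for a remote backend.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Entry:
    value: str
    version: int  # logical (Lamport-style) clock; higher version wins
    origin: str   # replica id; breaks ties between equal versions deterministically


def wins(candidate: Entry, current: Optional[Entry]) -> bool:
    """Last-writer-wins rule: compare (version, origin) tuples."""
    return current is None or (candidate.version, candidate.origin) > (current.version, current.origin)


@dataclass
class HybridStore:
    replica_id: str
    local: Dict[str, Entry] = field(default_factory=dict)
    outbox: List[str] = field(default_factory=list)  # keys written locally, not yet pushed
    clock: int = 0

    def get(self, key: str) -> Optional[str]:
        # Reads touch only the local copy: no network round trip.
        entry = self.local.get(key)
        return entry.value if entry else None

    def put(self, key: str, value: str) -> None:
        self.clock += 1
        self.local[key] = Entry(value, self.clock, self.replica_id)
        self.outbox.append(key)

    def sync(self, remote: Dict[str, Entry]) -> None:
        """Push pending writes that win, then pull anything newer from remote."""
        for key in self.outbox:
            entry = self.local[key]
            if wins(entry, remote.get(key)):
                remote[key] = entry
        self.outbox.clear()
        for key, entry in remote.items():
            if wins(entry, self.local.get(key)):
                self.local[key] = entry
            self.clock = max(self.clock, entry.version)


cloud: Dict[str, Entry] = {}  # hypothetical stand-in for the cloud backend
laptop, desktop = HybridStore("laptop"), HybridStore("desktop")
laptop.put("theme", "dark")
laptop.sync(cloud)
desktop.sync(cloud)
desktop.put("theme", "light")      # concurrent edits on both replicas...
laptop.put("theme", "solarized")
desktop.sync(cloud)
laptop.sync(cloud)
desktop.sync(cloud)                # ...converge after everyone syncs
```

Both edits carry version 2, so the replica id decides the winner; the rule is arbitrary but identical everywhere, which is what guarantees convergence.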

Basic Memory: A local-first MCP server that lets LLMs read and write a local Markdown knowledge graph.
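A Markdown knowledge graph can be as simple as plain files whose links form the edges. The sketch below assumes Obsidian-style `[[wiki links]]` (Basic Memory's actual note format may carry more structure) and builds a note-to-links map plus a backlink lookup, the kind of query a memory server answers when an LLM asks what is related to a topic. The file names and contents are hypothetical.

```python
import re
import tempfile
from pathlib import Path
from typing import Dict, List, Set

# Matches [[Target]], [[Target|alias]] and [[Target#Heading]]; captures "Target".
WIKILINK = re.compile(r"\[\[([^\]|#]+)(?:[|#][^\]]*)?\]\]")


def build_link_graph(notes_dir: Path) -> Dict[str, Set[str]]:
    """Map each note (file name without .md) to the set of note titles it links to."""
    graph: Dict[str, Set[str]] = {}
    for path in sorted(notes_dir.rglob("*.md")):
        text = path.read_text(encoding="utf-8")
        graph[path.stem] = {m.group(1).strip() for m in WIKILINK.finditer(text)}
    return graph


def backlinks(graph: Dict[str, Set[str]], title: str) -> List[str]:
    """Notes that link *to* `title`."""
    return sorted(src for src, targets in graph.items() if title in targets)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "Project Alpha.md").write_text(
        "Uses [[Search Design]] and [[Vector Store|the store]].\n", encoding="utf-8")
    (root / "Search Design.md").write_text(
        "# Search Design\nBacked by [[Vector Store#Indexes]].\n", encoding="utf-8")
    graph = build_link_graph(root)
```

Because the source of truth stays as human-readable files on disk, the same notes remain editable in any text editor, which is the main appeal of a local-first design.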

Neo4j: A Neo4j graph database server (schema inspection plus read and write Cypher queries) and a separate memory server backed by the graph database.
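Splitting Cypher access into read and write paths lets a client grant an LLM query access without write access. The sketch below is a hypothetical keyword heuristic for routing a query to the right path, not the Neo4j server's implementation. It blanks out string literals before scanning, but it is not a Cypher parser and will miss writes performed through `CALL` procedures, so database-side enforcement (read-only transactions or a read-only user) should remain the real safeguard.

```python
import re

# Clauses that modify data or schema. A heuristic, not a Cypher parser.
WRITE_CLAUSE = re.compile(
    r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b|\bLOAD\s+CSV\b", re.IGNORECASE)
# Single- or double-quoted string literals (with backslash escapes).
STRING_LITERAL = re.compile(r"'(?:[^'\\]|\\.)*'|\"(?:[^\"\\]|\\.)*\"")


def is_write_query(cypher: str) -> bool:
    # Blank out literals first so text like 'delete me' is not mistaken for a clause.
    return WRITE_CLAUSE.search(STRING_LITERAL.sub("''", cypher)) is not None


def route(cypher: str, allow_writes: bool) -> str:
    """Return which path should run the query; refuse writes on the read-only path."""
    if is_write_query(cypher):
        if not allow_writes:
            raise PermissionError("write query rejected by read-only tool")
        return "write"
    return "read"


memory_upsert = "MERGE (m:Memory {id: $id}) SET m.text = $text"
memory_lookup = "MATCH (m:Memory) WHERE m.text CONTAINS 'delete me' RETURN m"
```

The `$id` and `$text` placeholders are Cypher query parameters; passing values as parameters rather than splicing them into the string avoids injection when the query text comes from a model.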

Context Portal: A database-backed MCP server for managing structured project context and knowledge graphs.
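Structured project context fits naturally in relational tables, with a link table turning rows into a small knowledge graph. The sketch below uses Python's built-in `sqlite3`; the schema (`decision`, `progress`, `link`) and helper functions are hypothetical illustrations, not Context Portal's actual tables or tools.

```python
import sqlite3

# Hypothetical schema for illustration only.
SCHEMA = """
CREATE TABLE IF NOT EXISTS decision (
    id INTEGER PRIMARY KEY,
    summary TEXT NOT NULL,
    rationale TEXT NOT NULL DEFAULT ''
);
CREATE TABLE IF NOT EXISTS progress (
    id INTEGER PRIMARY KEY,
    description TEXT NOT NULL,
    status TEXT NOT NULL CHECK (status IN ('TODO', 'IN_PROGRESS', 'DONE'))
);
-- Typed edges between items turn the tables into a small knowledge graph.
CREATE TABLE IF NOT EXISTS link (
    src_kind TEXT NOT NULL, src_id INTEGER NOT NULL,
    relation TEXT NOT NULL,
    dst_kind TEXT NOT NULL, dst_id INTEGER NOT NULL,
    PRIMARY KEY (src_kind, src_id, relation, dst_kind, dst_id)
);
"""


def open_context(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def log_decision(conn, summary, rationale=""):
    with conn:  # commits on success, rolls back on error
        return conn.execute(
            "INSERT INTO decision (summary, rationale) VALUES (?, ?)", (summary, rationale)).lastrowid


def log_progress(conn, description, status="TODO"):
    with conn:
        return conn.execute(
            "INSERT INTO progress (description, status) VALUES (?, ?)", (description, status)).lastrowid


def link(conn, src, relation, dst):
    """Record a typed edge, e.g. (("decision", 1), "implemented_by", ("progress", 2))."""
    with conn:
        conn.execute("INSERT OR IGNORE INTO link VALUES (?, ?, ?, ?, ?)",
                     (src[0], src[1], relation, dst[0], dst[1]))


def progress_for_decision(conn, decision_id):
    return conn.execute(
        """SELECT p.description, p.status
           FROM link AS l JOIN progress AS p ON p.id = l.dst_id
           WHERE l.src_kind = 'decision' AND l.src_id = ?
             AND l.relation = 'implemented_by' AND l.dst_kind = 'progress'
           ORDER BY p.id""",
        (decision_id,),
    ).fetchall()


conn = open_context()
d = log_decision(conn, "Store project context in SQLite", "single file, no server to run")
p1 = log_progress(conn, "Write schema migration", "DONE")
p2 = log_progress(conn, "Expose context via MCP tools", "IN_PROGRESS")
link(conn, ("decision", d), "implemented_by", ("progress", p1))
link(conn, ("decision", d), "implemented_by", ("progress", p2))
rows = progress_for_decision(conn, d)
```

Compared with free-form notes, typed tables let an agent answer precise questions ("what is still in progress for this decision?") with a query instead of a similarity search.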

Graphlit: Ingests content from Slack, Gmail, and podcast feeds, plus crawled web pages, into a searchable Graphlit project.
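Multi-source ingestion usually reduces to one pipeline: each connector yields raw items, which are normalized into a common record, chunked, and deduplicated before indexing. The sketch below shows that shape without using Graphlit's API; connectors are represented by in-memory lists, and the URIs and chunk sizes are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Chunk:
    source: str  # connector name, e.g. "slack", "gmail", "web"
    uri: str     # where the original item lives
    index: int   # position of this chunk within the item
    text: str


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def ingest(sources: Dict[str, Iterable[Tuple[str, str]]],
           size: int = 50, overlap: int = 10) -> List[Chunk]:
    """Normalize items from every connector into chunks, skipping content seen before."""
    seen = set()
    chunks: List[Chunk] = []
    for source, items in sources.items():
        for uri, text in items:
            for i, piece in enumerate(chunk_words(text, size, overlap)):
                # Hash case- and whitespace-normalized text so the same message
                # arriving through two channels is stored only once.
                digest = hashlib.sha256(" ".join(piece.lower().split()).encode("utf-8")).hexdigest()
                if digest in seen:
                    continue
                seen.add(digest)
                chunks.append(Chunk(source, uri, i, piece))
    return chunks


sources = {
    "slack": [("slack://C123/p1", "Launch moved to Friday")],
    "gmail": [("gmail://msg/42", "launch moved to  Friday")],  # same content, different channel
    "web": [("https://example.com/changelog", " ".join(f"w{i}" for i in range(100)))],
}
chunks = ingest(sources)
```

Overlapping windows keep sentences that straddle a boundary retrievable from either side; the content hash is what keeps a message forwarded from Slack to email from appearing twice in search results.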