Topic Overview

This topic covers the technical and market choices for obtaining GPU-class compute in 2025: native Nvidia H200/H800 accelerators, engineered or modified hardware workarounds, and cloud-provider instance offerings, plus emergent decentralized and purpose-built inference silicon. Demand for large models and low-latency multimodal inference has kept pressure on H200/H800-class capacity, driving a mix of strategies: hyperscalers are expanding instance types, customers are using tailored server designs or interconnect adaptations to scale GPU clusters, and specialist suppliers are producing energy-efficient AI inference accelerators to reduce operating cost and dependency on monolithic GPUs.

Key tools and roles: Rebellions.ai focuses on chiplet/SoC inference accelerators and a GPU-class software stack to enable high-throughput, energy-efficient hosting for LLMs and multimodal models; OpenPipe provides managed dataset capture, fine-tuning pipelines and optimized inference hosting that abstract hardware differences; developer-facing products such as Warp, Replit and Amazon’s CodeWhisperer (now integrated into Amazon Q Developer) rely on consistent, nearby inference backends to deliver agentic workflows and inline coding assistants.

Why this matters now (2025-12-11): model sizes and production inference volumes continue to grow while energy, latency, cost and supply constraints push operators to diversify compute. That has accelerated adoption of specialized inference chips, creative hardware configurations, and hybrid on-prem/cloud deployments.

Practical considerations (orchestration, cost per token, memory capacity, NVLink/PCIe topology, compliance and ROI) determine whether teams choose native H200/H800 paths, cloud instances, or decentralized/purpose-built alternatives. Understanding these trade-offs and the software layers that smooth them is essential for teams deploying scalable, cost-effective AI services today.
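Two of the practical considerations named above, cost per token and memory capacity, reduce to simple arithmetic. The sketch below is illustrative only: the function names, the 20% overhead allowance, and every input value are assumptions chosen for the example, not vendor figures or measurements from this page.

```python
def cost_per_million_tokens(hourly_cost_usd: float,
                            tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """USD per one million generated tokens for one accelerator or instance.

    hourly_cost_usd   -- rental or amortized cost per hour (hypothetical input)
    tokens_per_second -- sustained generation throughput (hypothetical input)
    utilization       -- fraction of each hour spent serving traffic, in (0, 1]
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost_usd / tokens_per_hour * 1_000_000


def weights_fit(params_billions: float,
                bytes_per_param: float,
                memory_gb: float,
                overhead_fraction: float = 0.2) -> bool:
    """Rough check that model weights plus an overhead allowance fit in memory.

    The overhead allowance (KV cache, activations, runtime buffers) is an
    assumed flat fraction; real overhead depends on batch size and context length.
    """
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billions * bytes_per_param
    return weights_gb * (1 + overhead_fraction) <= memory_gb


if __name__ == "__main__":
    # Hypothetical instance: $3.60/hour sustaining 1,000 tokens/s at full utilization.
    print(cost_per_million_tokens(3.60, 1000))
    # Hypothetical 70B-parameter model at 2 bytes vs 1 byte per parameter
    # on a device with 141 GB of memory.
    print(weights_fit(70, 2, 141), weights_fit(70, 1, 141))
```

Running the same two functions over each candidate (a native GPU, a cloud instance, a purpose-built inference accelerator) with measured throughput and real pricing gives a first-order comparison before orchestration, topology and compliance are considered.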

Tool Rankings – Top 5

Energy-efficient AI inference accelerators and software for hyperscale data centers.

Agentic Development Environment (ADE) — a modern terminal + IDE with built-in AI agents to accelerate developer flows.

Managed platform to collect LLM interaction data, fine-tune models, evaluate them, and host optimized inference.

AI-driven coding assistant (now integrated into Amazon Q Developer) that provides inline code suggestions.

AI-powered online IDE and platform to build, host, and ship apps quickly.

Latest Articles (39)

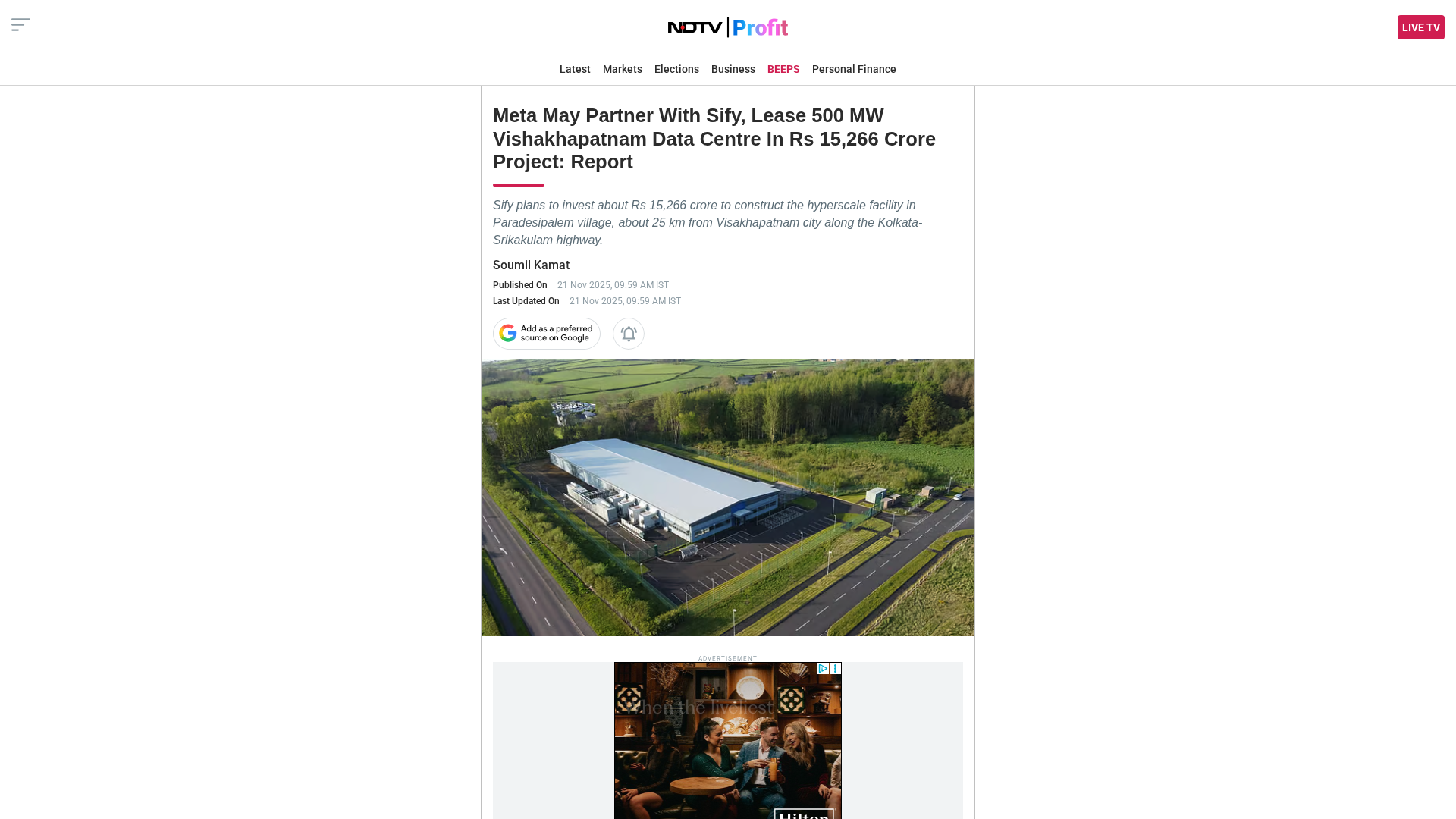

Meta to lease 500 MW of data centre capacity in Visakhapatnam from Sify and land the Waterworth submarine cable there.

Meta plans a 500 MW AI data center in Visakhapatnam with Sify, linked to the Waterworth subsea cable.

Meta and Sify plan a 500 MW hyperscale data center in Visakhapatnam with the Waterworth subsea cable landing.

Meta may partner with Sify to lease a 500 MW Visakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.

A 404/private page on Replit’s Discourse forum with login prompts and a curated list of popular topics.