Topic Overview

This topic examines the landscape of AI inference platforms for production GenAI—comparing Red Hat–centric deployments on AWS inference ASICs (Trainium/Inferentia) with NVIDIA GPU stacks and emerging cloud and on‑prem alternatives. It focuses on the technical tradeoffs organizations face when scaling LLM and multimodal inference: throughput, latency, energy efficiency, software ecosystem, and total cost of ownership. Red Hat environments (RHEL/OpenShift) are frequently used to standardize orchestration and security across hybrid cloud and on‑prem sites, enabling teams to deploy AWS Trainium/Inferentia instances or NVIDIA GPU clusters with consistent tooling. NVIDIA’s mature ecosystem (CUDA, TensorRT, Triton, broad model support) favors maximum compatibility and tooling; AWS silicon prioritizes cost‑optimized, high‑throughput inference when paired with AWS Neuron and cloud services. New entrants and categories broaden choices: Rebellions.ai targets energy‑efficient, GPU‑class inference hardware and software for hyperscale datacenters; Rebellions‑style accelerators lower energy/TCO for persistent high‑volume inference. Decentralized infrastructure projects (Tensorplex Labs) and edge/optimized model families (Stable Code) offer alternatives for private or latency‑sensitive deployments. Operational layers and data tooling matter: OpenPipe centralizes request/response logging, fine‑tuning and hosted inference; Activeloop’s Deep Lake provides multimodal storage, versioning and vector indexes for RAG workflows; developer platforms (Blackbox.ai, Qodo) improve model integration, testing and SDLC governance. Current trends (late‑2025) emphasize inference efficiency (quantization, kernel tuning), hybrid deployment patterns, tighter data-to-model observability, and vendor choice driven by workload profiles rather than one‑size‑fits‑all claims.

Tool Rankings – Top 6

Energy-efficient AI inference accelerators and software for hyperscale data centers.

Managed platform to collect LLM interaction data, fine-tune models, evaluate them, and host optimized inference.

Deep Lake: a multimodal database for AI that stores, versions, streams, and indexes unstructured ML data with vector/RAG

Open-source, decentralized AI infrastructure combining model development with blockchain/DeFi primitives (staking, cross

All-in-one AI coding agent and developer platform offering chat, code generation, debugging, IDE plugins, and enterprise

Quality-first AI coding platform for context-aware code review, test generation, and SDLC governance across multi-repo,팀

Latest Articles (67)

A step-by-step guide to building an AI-powered Reliability Guardian that reviews code locally and in CI with Qodo Command.

A developer chronicles switching to Zed on Linux, prototyping on a phone, and a late-night video correction.

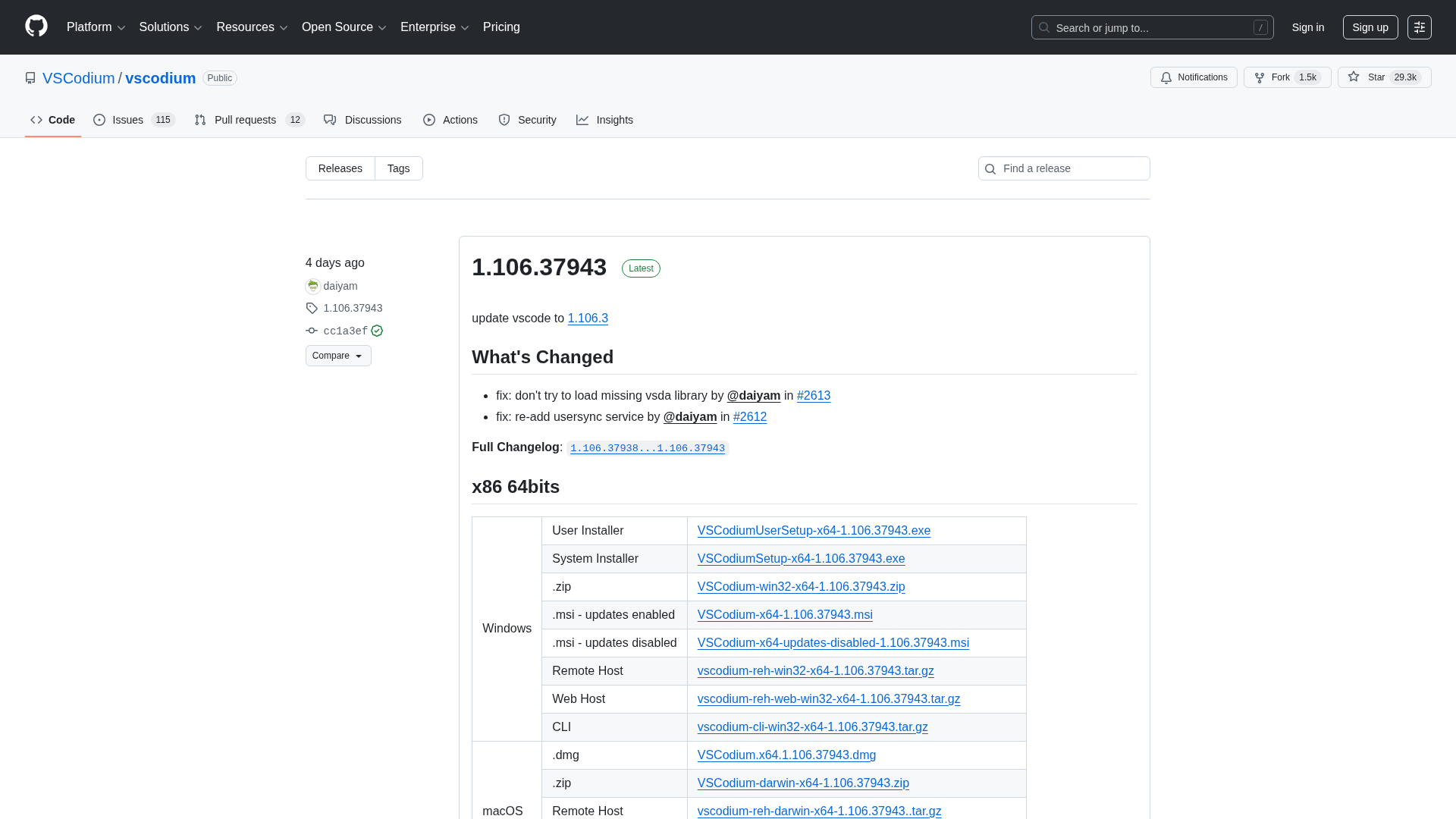

A comprehensive releases page for VSCodium with multi-arch downloads and versioned changelogs across 1.104–1.106 revisions.

Qodo ranks highest for Codebase Understanding by Gartner, highlighting cross-repo context as essential for scalable AI development.

AWS commits $50B to expand AI/HPC capacity for U.S. government, adding 1.3GW compute across GovCloud regions.