Topic Overview

Autonomous & Automotive AI Stacks examines how vehicle makers, silicon vendors and Tier‑1 suppliers assemble the software, hardware and data infrastructure that run advanced driver assistance and autonomous features. By 2026 the space is defined by two competing approaches: vertically integrated OEM stacks (Tesla’s in‑house FSD approach) and platform offerings from suppliers (NVIDIA Rubin, Mobileye and Tier‑1 systems) that combine vehicle‑grade compute, perception stacks and lifecycle tools. The topic is relevant because of rising on‑device compute, regulatory scrutiny of safety, and the need to close the loop from fleet data to model updates.

Key components and supporting tools fall into three groups: on‑vehicle edge AI vision platforms for sensor fusion and inference; large multimodal AI data platforms for labeling, versioning and retrieval; and cloud/edge orchestration for training, validation and deployment. Practical tooling examples include NVIDIA Run:ai for Kubernetes‑native GPU orchestration across on‑prem and multi‑cloud training fleets; Activeloop Deep Lake as a multimodal database for storing, versioning and streaming images, video and embeddings; Google Vertex AI for managed model lifecycle (training, deployment, monitoring); and LlamaIndex and RAG patterns for operational document agents and diagnostics.

Developer productivity and code automation are supported by agentic environments and assistants such as Warp’s ADE, MindStudio’s no‑/low‑code agent builder, and CodeGeeX for coding assistance.

Taken together, these stacks require integrated data ops, safety validation, and continuous deployment pipelines. Evaluations today center on four areas: latency and reliability for edge inference, data bandwidth and labeling strategies, GPU utilization for large model training, and governance tooling that meets automotive safety and regulatory requirements.
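One of the evaluation criteria named above, latency and reliability for edge inference, can be made concrete with a small measurement sketch. The Python below is illustrative only: the function names (`percentile`, `summarize_latency`), the sample latencies and the 33 ms deadline are assumptions chosen for the example, not values from any vendor stack. It summarizes per-frame inference latencies as tail percentiles and a deadline miss rate.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile: the smallest sample such that at least
    q percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < q <= 100:
        raise ValueError("q must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(q / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def summarize_latency(latencies_ms, deadline_ms):
    """Summarize per-frame inference latencies (milliseconds).

    deadline_ms is a hypothetical per-frame budget; a frame counts as a
    miss when its latency is strictly greater than the budget.
    """
    misses = sum(1 for value in latencies_ms if value > deadline_ms)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p99_ms": percentile(latencies_ms, 99),
        "max_ms": max(latencies_ms),
        "deadline_miss_rate": misses / len(latencies_ms),
    }


# Made-up latencies for ten frames, with a single slow outlier.
example_latencies_ms = [10, 12, 11, 13, 50, 12, 11, 10, 12, 11]
summary = summarize_latency(example_latencies_ms, deadline_ms=33)
print(summary)
```

Reporting a tail percentile and a miss rate alongside the median is a common choice for real-time workloads, since a rare slow frame can matter more than the average; which percentiles and deadlines apply in practice depends on the specific vehicle function.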

Tool Rankings – Top 6

Kubernetes-native GPU orchestration and optimization platform that pools GPUs across on‑prem, cloud and multi‑cloud to improve

Deep Lake: a multimodal database for AI that stores, versions, streams, and indexes unstructured ML data with vector/RAG

Agentic Development Environment (ADE) — a modern terminal + IDE with built-in AI agents to accelerate developer flows.

Unified, fully-managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.

Developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across enterprises.

No-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

Latest Articles (45)

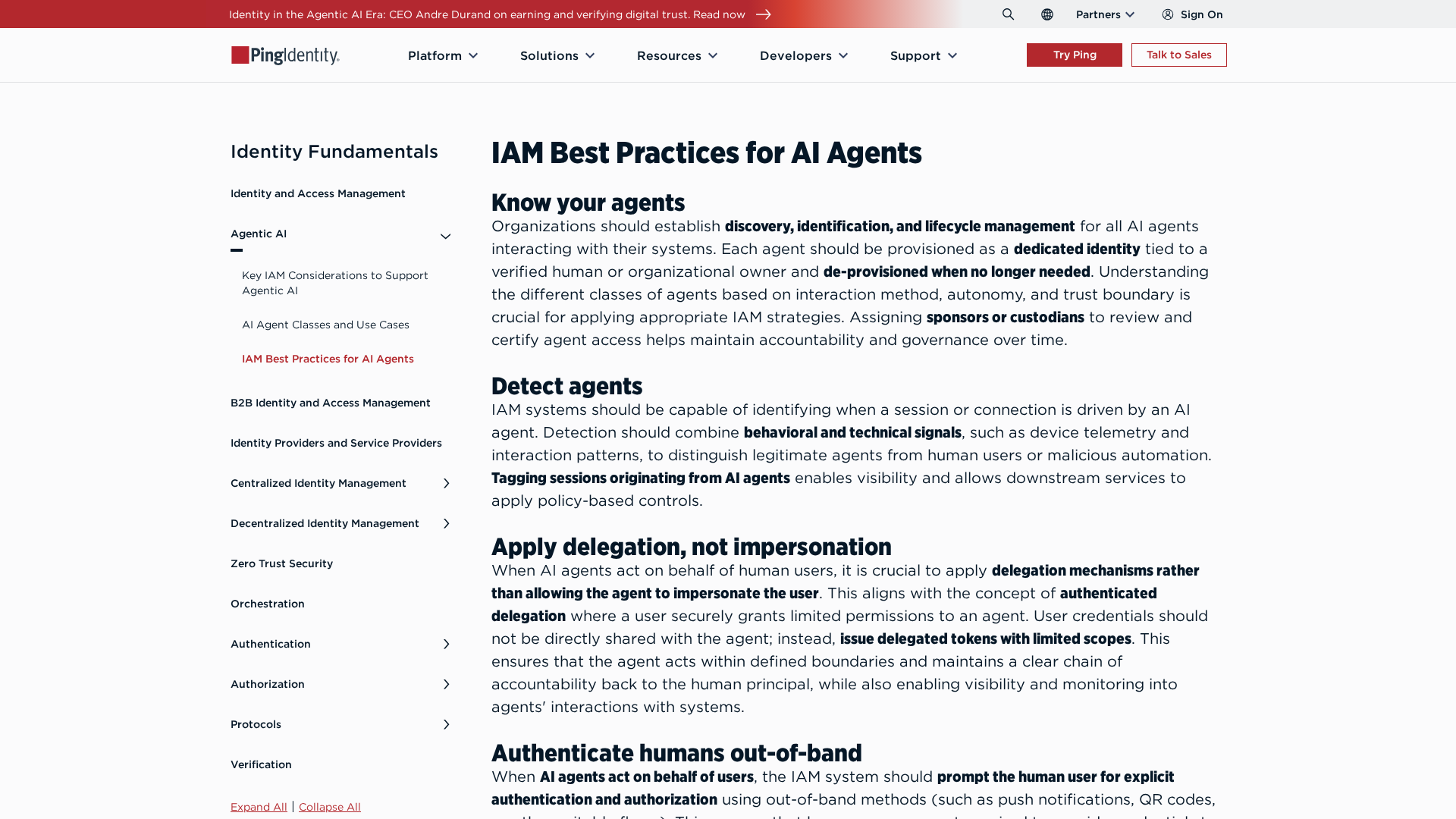

Best-practices for securing AI agents with identity management, delegated access, least privilege, and human oversight.

A foundational Core overhaul that speeds up development, simplifies authentication with JWT, and accelerates governance for Akash's decentralized cloud.

Meta and Sify plan a 500 MW hyperscale data center in Visakhapatnam with the Waterworth subsea cable landing.

Saudi xAI-HUMAIN launches a government-enterprise AI layer with large-scale GPU deployment and multi-year sovereignty milestones.

Meta may partner with Sify to lease a 500 MW Vishakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.