Topic Overview

Autonomous & Connected Vehicle AI Platforms cover the software, compute and data infrastructure that production automakers and suppliers use to run perception, decision‑making, in‑vehicle assistants and fleet services. As OEMs move beyond lab prototypes, platforms that combine edge vision processing, robust data management and lifecycle tooling are becoming essential. NVIDIA Rubin and similar OEM collaborations are examples of integrated vehicle AI stacks that pair in‑car compute with partner ecosystems for simulation, validation and over‑the‑air updates. Key trends in 2026 include consolidation of compute across edge and cloud, data‑centric ML pipelines for multimodal sensor streams, and tighter integration between OEMs and platform vendors to meet safety and regulatory demands.

Tool categories reflect these needs: Run:ai for Kubernetes‑native GPU orchestration across on‑prem, cloud and edge; Vertex AI for end‑to‑end model training, deployment and monitoring; Activeloop Deep Lake for versioned, searchable multimodal datasets and vector indexing; and OpenPipe for capturing LLM interaction logs, fine‑tuning and managed inference. Developer and agent tools such as MindStudio, Warp and LlamaIndex accelerate building in‑vehicle agents, retrieval‑augmented systems and production workflows while reducing integration friction.

Together these components address the full ML lifecycle: sensor data capture, scalable annotation and storage, model training and fine‑tuning, fleet rollout and continuous evaluation. For OEMs and tier‑1 suppliers the practical challenge is integrating these platforms under safety, latency and connectivity constraints, which means balancing on‑device inference with cloud‑assisted updates and fleet learning. This intersection of edge vision platforms and AI data platforms defines how vehicle autonomy and connected services are operationalized today.

Tool Rankings – Top 6

Run:ai: Kubernetes-native GPU orchestration and optimization platform that pools GPUs across on‑prem, cloud and multi‑cloud to improve

Vertex AI: unified, fully-managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.

Deep Lake: a multimodal database for AI that stores, versions, streams, and indexes unstructured ML data with vector/RAG

MindStudio: no-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

Warp: Agentic Development Environment (ADE), a modern terminal + IDE with built-in AI agents to accelerate developer flows.

LlamaIndex: developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across enterprises.

Latest Articles (56)

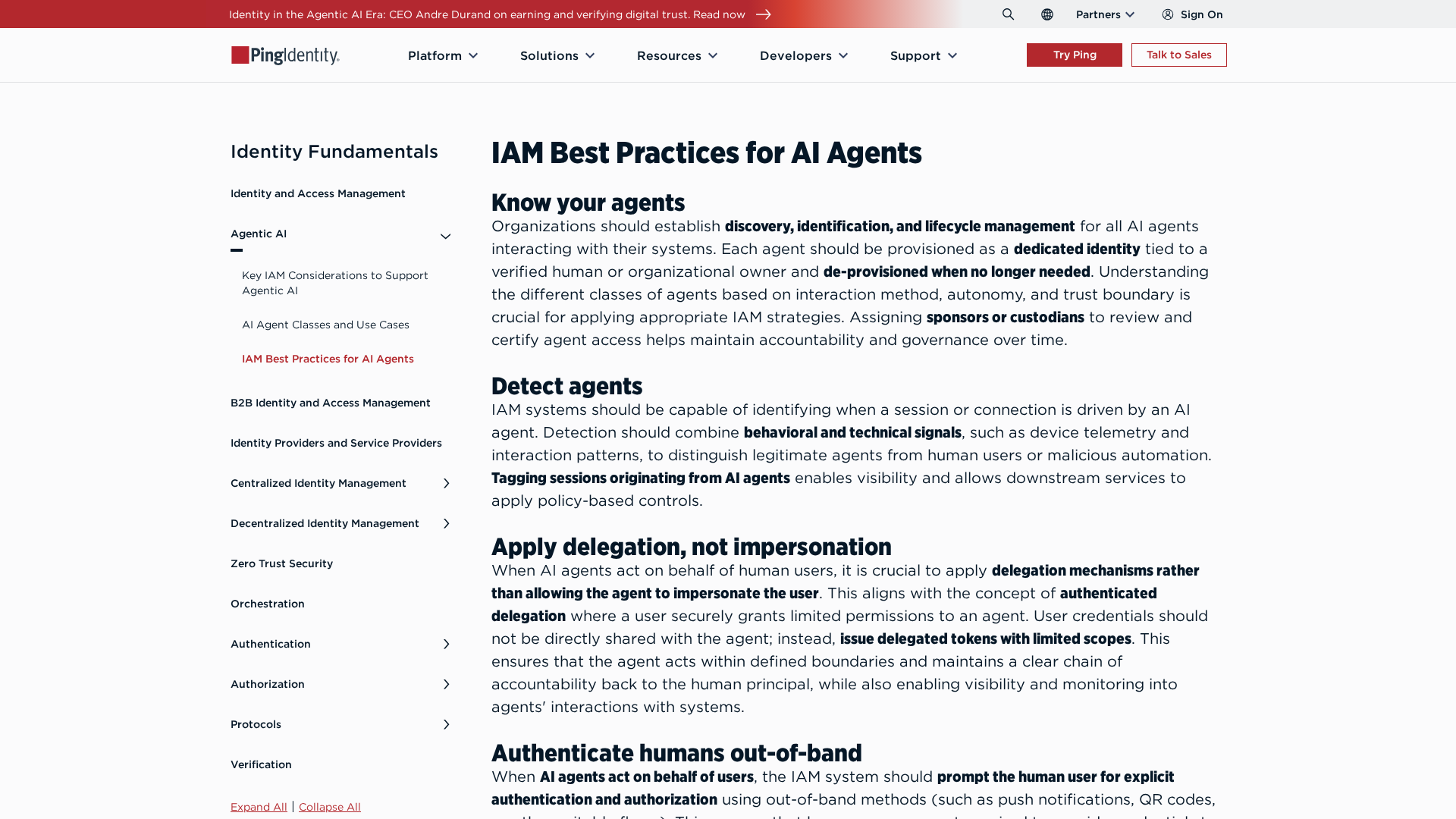

Best practices for securing AI agents with identity management, delegated access, least privilege, and human oversight.

A foundational Core overhaul that speeds up development, simplifies authentication with JWT, and accelerates governance for Akash's decentralized cloud.

Meta to lease 500 MW Visakhapatnam data centre capacity from Sify and land Waterworth submarine cable.

Meta plans a 500 MW AI data center in Visakhapatnam with Sify, linked to the Waterworth subsea cable.

Meta may partner with Sify to lease a 500 MW Visakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.