Topic Overview

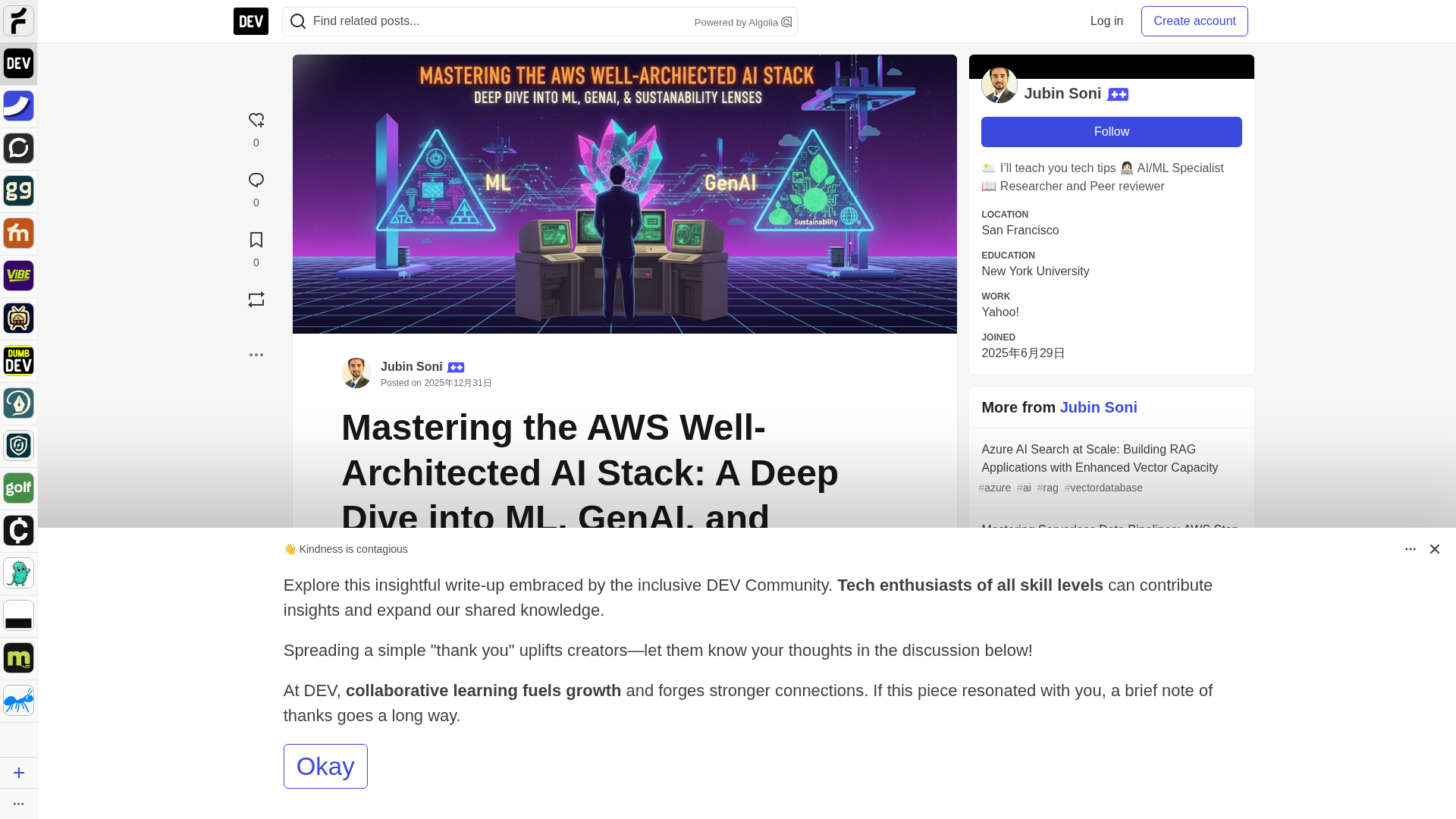

Cost-Optimized GenAI Tooling covers the patterns, infrastructure, and toolchains teams use to minimize inference and training spend while keeping latency, privacy, and developer velocity acceptable. By 2026, production GenAI has moved from experimental pilots to steady, high-volume services, making hardware selection (AWS Trainium/Inferentia and comparable accelerators), model choice (specialized or distilled variants like Code Llama for code tasks), and runtime optimizations central to operational budgets.

This topic spans AI tool marketplaces (discovering cost- and performance-profiled models and runtime images), decentralized AI infrastructure (self-hosted agents and private model serving via Tabby/Tabnine-style deployments), GenAI test automation (end-to-end cost-aware evaluation), AI data platforms (efficient data pipelines and caching to reduce repeated inference), and AI code generation tools (GitHub Copilot, Replit, Cline, GPTConsole) that shape the tradeoff between developer productivity and compute spend. Engineering frameworks such as LangChain are critical for orchestrating stateful agent flows and routing work to cheaper backends; IBM watsonx Assistant and Anthropic’s Claude illustrate enterprise-grade assistant stacks where multi-model routing and fallback policies reduce expensive calls.

Practical levers include model selection and distillation, quantization and compilation for Trainium/Inferentia, batching and request shaping, spot/ephemeral instance strategies, and telemetry-driven routing implemented by cost-optimization platforms (e.g., Unicorne-style orchestration). Integrating test automation and observability into the toolchain ensures cost regressions are caught early. Overall, the focus is on building repeatable, vendor-agnostic toolchains that balance cost, compliance, and developer ergonomics for scalable GenAI services.
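To make the routing, fallback, and caching levers concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not any vendor's API: the `Backend` and `CostAwareRouter` names, the per-1k-token prices, the integer difficulty score, and the whitespace-based token estimate are all hypothetical stand-ins for whatever a real deployment would measure.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Backend:
    """A model endpoint with a (hypothetical) price and capability ceiling."""
    name: str
    cost_per_1k_tokens: float              # assumed price, arbitrary currency
    max_difficulty: int                    # hardest task this backend is trusted with
    call: Callable[[str], Optional[str]]   # returns None to signal a failed call


def estimate_tokens(prompt: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return max(1, len(prompt.split()))


class CostAwareRouter:
    """Try the cheapest capable backend first, fall back upward, cache answers."""

    def __init__(self, backends: List[Backend]) -> None:
        # Cheapest first, so the loop in route() prefers low-cost backends.
        self.backends = sorted(backends, key=lambda b: b.cost_per_1k_tokens)
        self.cache: Dict[Tuple[str, int], Tuple[str, str]] = {}
        self.spend = 0.0  # running estimate of prompt-side spend

    def route(self, prompt: str, difficulty: int) -> Tuple[str, str]:
        key = (prompt, difficulty)
        if key in self.cache:
            # Repeated request: no inference call, no extra spend.
            return self.cache[key]
        for backend in self.backends:
            if backend.max_difficulty < difficulty:
                continue  # too weak for this task, skip to a stronger model
            answer = backend.call(prompt)
            # Failed calls are still billed in this sketch.
            self.spend += estimate_tokens(prompt) / 1000 * backend.cost_per_1k_tokens
            if answer is not None:
                self.cache[key] = (backend.name, answer)
                return self.cache[key]
        raise RuntimeError("no backend could serve the request")
```

In use, a router built from a cheap distilled model and an expensive general model would send easy prompts to the former and only reach the latter for hard tasks or after a failure. The `spend` counter is the kind of telemetry a test suite could assert on to catch cost regressions.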

Tool Rankings – Top 6

Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

Enterprise virtual agents and AI assistants built with watsonx LLMs for no-code and developer-driven automation.

Code-specialized Llama family from Meta optimized for code generation, completion, and code-aware natural-language tasks.

An AI pair programmer that gives code completions, chat help, and autonomous agent workflows across editors, the terminal

Enterprise-focused AI coding assistant emphasizing private/self-hosted deployments, governance, and context-aware code.

Anthropic's Claude family: conversational and developer AI assistants for research, writing, code, and analysis.

Latest Articles (89)

A comprehensive comparison and buying guide to 14 AI governance tools for 2025, with criteria and vendor-specific strengths.

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.

Adobe nears a $19 billion deal to acquire Semrush, expanding its marketing software capabilities, according to WSJ reports.

Wolters Kluwer expands UpToDate Expert AI with UpToDate Lexidrug to bolster drug information and medication decision support.