Topic Overview

This topic examines the leading enterprise and edge large language models in 2025, notably Mistral 3, Claude Opus 4.5, and Amazon Nova, and how organizations select, deploy, test, and govern them across AI data platforms, model marketplaces, GenAI test automation, and governance tooling. As of 2025-12-06, decision makers balance three pressures: latency and privacy demands that push inference to the edge and on‑prem, enterprise requirements for safety/compliance and fine‑tuning, and operational needs for scalable cloud integration and monitoring.

Mistral 3 represents the open/portable end of the spectrum (strong for self‑hosting and edge quantization), Claude Opus 4.5 emphasizes assistant-oriented safety and instruction tuning for enterprise workflows, and Amazon Nova targets deep cloud integration and managed ops. Across categories, AI data platforms ingest and curate training/operational data for fine‑tuning; AI tool marketplaces simplify model discovery and procurement; GenAI test automation validates prompts, guardrails, and behaviors pre‑deployment; and AI governance tools provide auditing, policy enforcement, and lineage tracking.

Tooling ecosystems reflect these needs: developer‑centric platforms and coding agents (Cline, Tabby, Windsurf, Blackbox.ai) focus on multi‑model workflows, local-first deployment, and agent orchestration; domain platforms (Harvey, IBM watsonx Assistant, Microsoft 365 Copilot, Observe.AI, Skit.ai) embed LLMs into vertical processes with compliance features; and low‑code/agent builders (MindStudio, Flowpoint) accelerate safe productionization.

The practical trend is hybrid, composable stacks that mix hosted models for throughput with quantized edge models for latency and privacy, backed by automated testing and governance to control hallucinations, bias, and data leakage. This overview helps enterprise architects weigh model attributes, integration patterns, and supporting platforms when choosing LLMs for production and edge deployments.

Tool Rankings – Top 6

Open-source, client-side AI coding agent that plans, executes and audits multi-step coding tasks.

Open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first/cloud deployment.

AI-native IDE and agentic coding platform (Windsurf Editor) with Cascade agents, live previews, and multi-model support.

Domain-specific AI platform delivering Assistant, Knowledge, Vault, and Workflows for law firms and professional services.

Enterprise virtual agents and AI assistants built with watsonx LLMs for no-code and developer-driven automation.

AI assistant integrated across Microsoft 365 apps to boost productivity, creativity, and data insights.

Latest Articles (79)

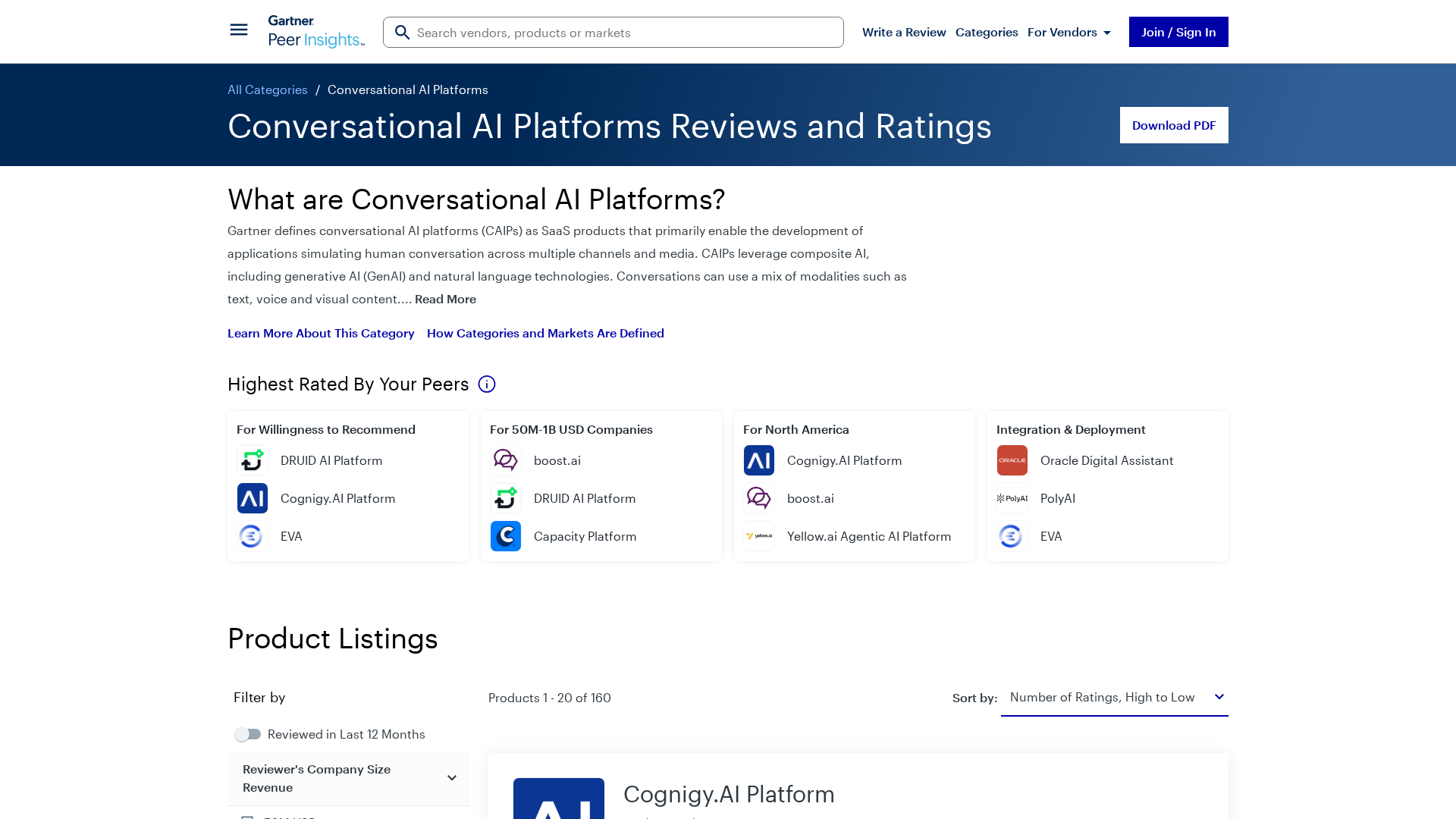

Gartner’s market view on conversational AI platforms, outlining trends, vendors, and buyer guidance.

A comprehensive comparison and buying guide to 14 AI governance tools for 2025, with criteria and vendor-specific strengths.

Adobe nears a $1.9 billion deal to acquire Semrush, expanding its marketing software capabilities, according to WSJ reports.

Wolters Kluwer expands UpToDate Expert AI with UpToDate Lexidrug to bolster drug information and medication decision support.