Topic Overview

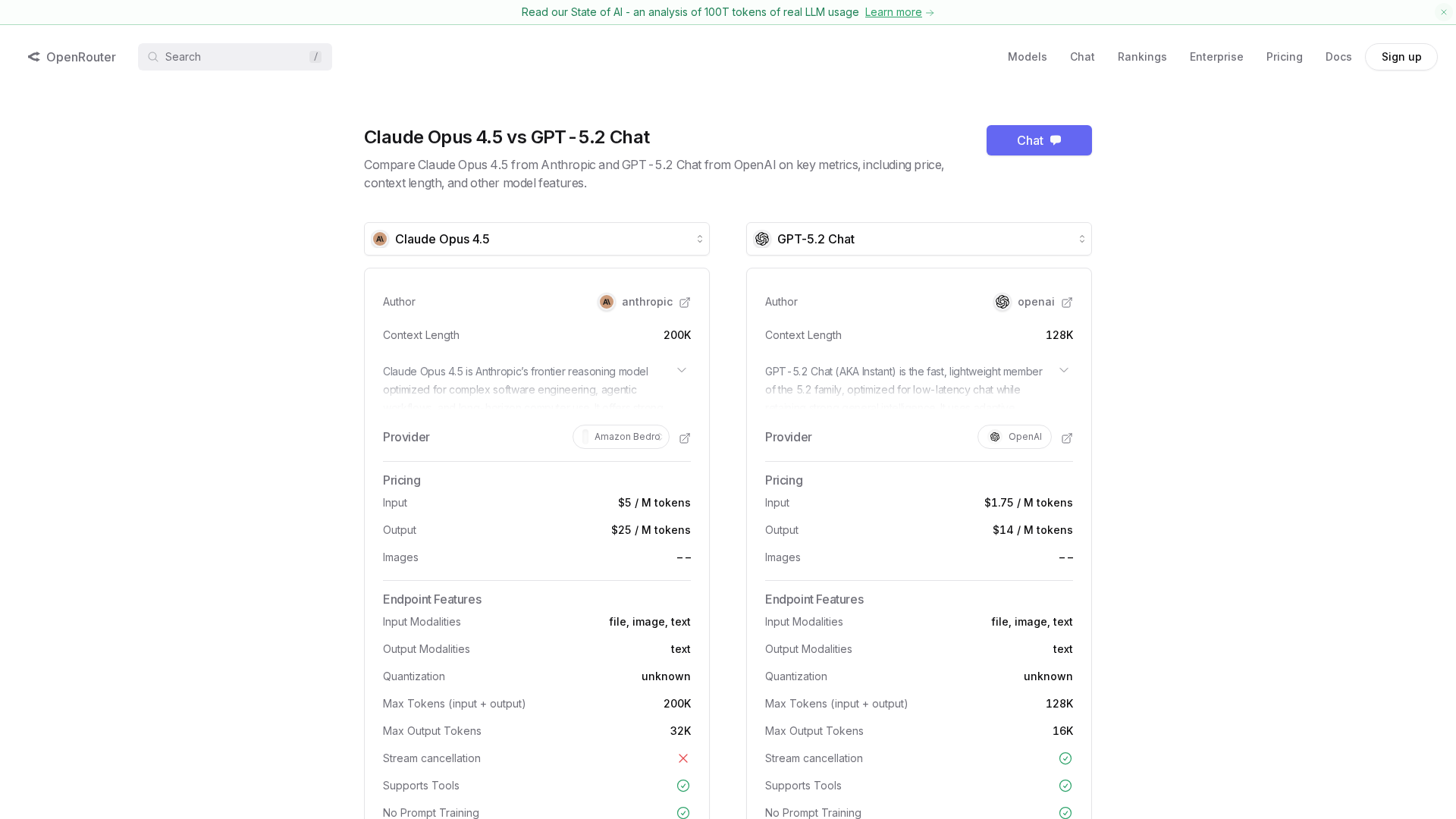

This topic compares two contemporary large language model releases, GPT-5.2 and Claude Opus 4.5, for technical, mathematical and scientific use cases: symbolic reasoning, numerical accuracy, reproducibility, and tool integration. No related articles were supplied, so the overview draws on available product and platform descriptions and on observable 2025 trends. Teams now prioritize stepwise reasoning, callable toolchains (solvers, calculators, code execution), retrieval-augmented generation for source grounding, and clear outputs that support verification and reproducible workflows.

Key considerations when choosing between these models include math and formal-reasoning fidelity, support for external tools and APIs, context-window size for long technical documents, and model behavior under chain-of-thought or constrained prompting.

Supporting tools and platforms play complementary roles. Enterprise copilots and content platforms (e.g., Jasper, Writesonic, Copy.ai, Rytr) focus on marketing and writer productivity. QuillBot offers paraphrasing, grammar and summarization utilities that are useful for editing technical prose. Broad-access services (e.g., ChatGPT-facing sites) often gate features behind JS/cookie flows and tiered pricing, which affects availability for heavy technical workloads.

The practical guidance here is empirical: run task-specific benchmarks (proof checking, symbolic algebra, numerics, code generation plus execution), verify outputs with external solvers, and prefer models with robust tool integrations and audit logs for scientific reproducibility. This comparison framework helps technical teams select and validate whichever LLM, GPT-5.2 or Claude Opus 4.5, best meets the accuracy, traceability, and integration needs of their scientific and mathematical workflows.
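As one way to picture the "benchmark, then verify externally" guidance, here is a minimal Python sketch of a numerics check. It assumes the model's answers have already been parsed into floats; the task names, the `Task` and `Result` classes, the `verify` helper, and the sample answers are all hypothetical illustrations, not output from GPT-5.2 or Claude Opus 4.5 and not part of any vendor API. Each claimed value is compared against a reference computed independently with the standard library.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


def simpson(f: Callable[[float], float], a: float, b: float, n: int = 100) -> float:
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))
    even = sum(f(a + i * h) for i in range(2, n, 2))
    return (f(a) + f(b) + 4 * odd + 2 * even) * h / 3


@dataclass
class Task:
    name: str
    reference: Callable[[], float]  # independent computation of the expected value
    rel_tol: float = 1e-9           # relative tolerance for accepting a claimed answer


@dataclass
class Result:
    name: str
    claimed: Optional[float]
    expected: float
    passed: bool


def verify(tasks: List[Task], claimed: Dict[str, float]) -> List[Result]:
    """Compare each claimed answer with its independently computed reference."""
    results = []
    for task in tasks:
        expected = task.reference()
        answer = claimed.get(task.name)  # None if the model gave no answer
        passed = answer is not None and math.isclose(
            answer, expected, rel_tol=task.rel_tol
        )
        results.append(Result(task.name, answer, expected, passed))
    return results


# Hypothetical benchmark tasks, each with a reference that does not depend on the model.
TASKS = [
    Task("integral_x2_0_3", lambda: simpson(lambda x: x * x, 0.0, 3.0)),
    Task("sqrt_2", lambda: math.sqrt(2.0)),
    Task("basel_partial_1000", lambda: math.fsum(1.0 / k**2 for k in range(1, 1001))),
]

# Hypothetical model outputs, already parsed to floats; not real results from any model.
claimed_answers = {
    "integral_x2_0_3": 9.0,
    "sqrt_2": 1.41421356237,
    "basel_partial_1000": 1.6439,  # deliberately too coarse for the tolerance
}

results = verify(TASKS, claimed_answers)
for r in results:
    print(f"{r.name}: claimed={r.claimed} expected={r.expected:.12g} passed={r.passed}")
```

Storing the list of `Result` records alongside the prompt and model version gives a simple audit trail. The same pattern extends to symbolic tasks by swapping the numeric reference for a call to an external solver.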

Tool Rankings – Top 6

Summary of a site scrape of https://chatgpt.com, noting extracted content, JS/cookie gating, and inferred pricing tiers.

AI content-automation platform for marketing teams to produce on‑brand content at scale.

AI-powered writing assistant for paraphrasing, grammar, citations, summarization, AI detection, and audio/image tools.

All-in-one AI marketing and content platform with 80+ writing tools, SEO automation and AI Search Visibility (GEO).

Rytr — AI writing assistant for short-form (and some long-form) content with templates, tones, Chrome extension, and an

AI-native GTM platform unifying workflows, agents, and content tools for sales and marketing.
