Topic Overview

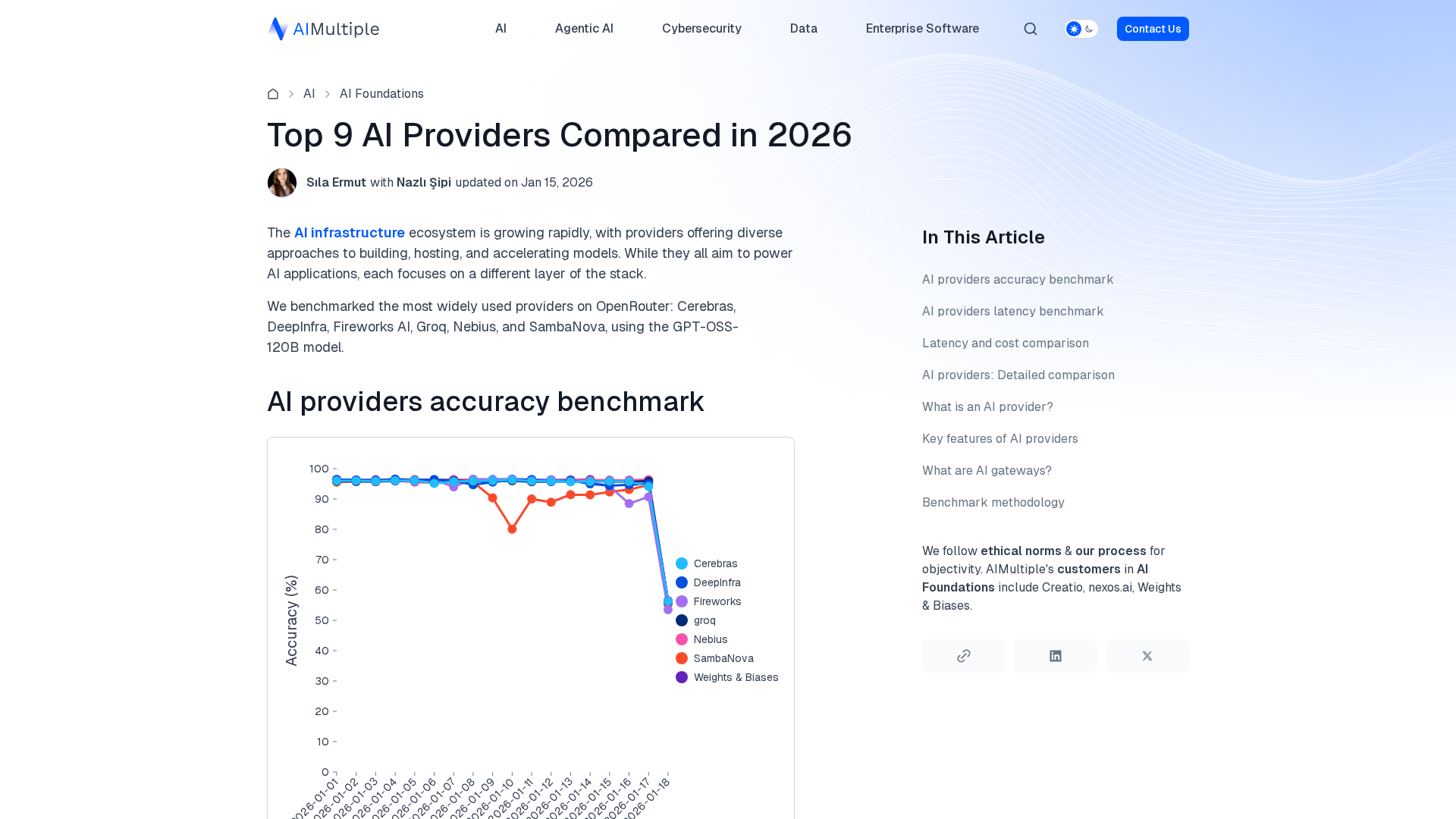

AI inference platforms and “inference‑as‑a‑service” describe the systems that run trained models in production: hosted APIs, managed cloud runtimes, model marketplaces, and self‑hosted stacks that prioritize latency, privacy, and governance. This topic covers three groups. The first is hosted providers (e.g., Baseten, Replicate, and the major clouds). The second is integrated ML platforms (Vertex AI). The third is specialized deployments, which range from model marketplaces that make models discoverable and runnable to decentralized or on‑prem stacks for private inference.

The topic matters in 2026 because of three converging trends: growing demand for real‑time and agentic workloads, tighter enterprise requirements around data governance and cost predictability, and an expanding ecosystem of open models and marketplaces.

Providers like Replicate and Baseten simplify running third‑party models through APIs and deployment tooling. Vertex AI offers an end‑to‑end managed suite for model discovery, training, fine‑tuning, deployment, and monitoring. Tools such as Tabby and Tabnine show the move toward hybrid and self‑hosted assistants, with model serving for privacy‑sensitive code workflows. No‑code/low‑code platforms like StackAI and infrastructure players such as Xilos point to the need for orchestration and visibility when agentic systems call external services.

Key considerations when comparing offerings include:

- latency and geographic coverage
- cost and pricing model (per‑token vs. per‑compute)
- model provenance and licensing
- observability and policy enforcement
- ease of integration with data platforms and developer tooling

The landscape favors flexible stacks. These combine hosted inference for scale with self‑hosted or edge deployments for compliance and performance. Marketplaces and governance layers then manage the model lifecycle and risk.
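The per‑token vs. per‑compute trade-off is easier to see with numbers. The Python sketch below makes several assumptions. All prices and throughput figures are hypothetical and illustrative. They are not any provider's actual rates. The class and function names (`PerTokenPricing`, `PerComputePricing`, `cheaper_option`) are also made up for illustration and do not come from any vendor API. Per‑token billing is assumed to scale linearly with volume. Per‑compute billing is assumed to charge for whole GPUs running all month (no scale-to-zero), each sustaining a fixed token throughput.

```python
import math
from dataclasses import dataclass

# NOTE: all rates and throughput numbers used with these classes are
# hypothetical placeholders, not real provider pricing.


@dataclass
class PerTokenPricing:
    """Pay-per-use pricing: cost scales linearly with tokens processed."""
    usd_per_million_tokens: float  # hypothetical blended input/output rate

    def monthly_cost(self, tokens_per_month: float) -> float:
        return tokens_per_month / 1_000_000 * self.usd_per_million_tokens


@dataclass
class PerComputePricing:
    """Dedicated-capacity pricing: pay for GPU-hours whether or not they are busy."""
    usd_per_gpu_hour: float     # hypothetical instance rate
    tokens_per_gpu_hour: float  # assumed sustained throughput of one GPU
    min_gpus: int = 1           # always-on floor (assumes no scale-to-zero)
    hours_per_month: float = 730.0

    def monthly_cost(self, tokens_per_month: float) -> float:
        # Provision enough whole GPUs to cover the volume, never below the floor.
        capacity_per_gpu = self.tokens_per_gpu_hour * self.hours_per_month
        gpus = max(self.min_gpus, math.ceil(tokens_per_month / capacity_per_gpu))
        return gpus * self.usd_per_gpu_hour * self.hours_per_month


def cheaper_option(tokens_per_month: float,
                   per_token: PerTokenPricing,
                   per_compute: PerComputePricing) -> str:
    """Return which pricing model costs less at a given monthly volume."""
    token_cost = per_token.monthly_cost(tokens_per_month)
    compute_cost = per_compute.monthly_cost(tokens_per_month)
    return "per-token" if token_cost <= compute_cost else "per-compute"


if __name__ == "__main__":
    per_token = PerTokenPricing(usd_per_million_tokens=2.0)
    per_compute = PerComputePricing(usd_per_gpu_hour=2.5, tokens_per_gpu_hour=3_000_000)
    for volume in (100_000_000, 1_000_000_000):
        print(f"{volume:>13,} tokens: "
              f"per-token ${per_token.monthly_cost(volume):,.0f}, "
              f"per-compute ${per_compute.monthly_cost(volume):,.0f}, "
              f"cheaper: {cheaper_option(volume, per_token, per_compute)}")
```

With these illustrative numbers, per‑token billing is cheaper at 100 million tokens per month ($200 vs. $1,825). At 1 billion tokens, the dedicated GPU is cheaper ($2,000 vs. $1,825). The crossover is near 912.5 million tokens. A real comparison would also need to model autoscaling, cold starts, and separate input/output token prices, which this sketch ignores.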

Tool Rankings – Top 5

Vertex AI: Unified, fully managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.


Tabby: Open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first or cloud deployment.

Tabnine: Enterprise-focused AI coding assistant emphasizing private/self-hosted deployments, governance, and context-aware code.

StackAI: End-to-end no-code/low-code enterprise platform for building, deploying, and governing AI agents that automate work on…

Xilos: Intelligent Agentic AI Infrastructure

Latest Articles (27)

OpenAI’s bypass moment underscores the need for governance that survives inevitable user bypass and hardens system controls.

A call to enable safe AI use at work via sanctioned access, real-time data protections, and frictionless governance.

A real-world look at AI in SOCs, debunking myths and highlighting the human role behind automation with Bell Cyber experts.

Explores the human role behind AI automation and how Bell Cyber tackles AI hallucinations in security operations.

Identity won’t secure agentic AI; you need runtime visibility and action-based policy.