Topic Overview

AI-powered brain–computer interface (BCI) platforms and SDKs provide the software backbone for converting neural signals into actionable outputs, integrating models, developer tools, and operational controls. As of 2026, the space centers on low-latency neural decoding, multimodal fusion (neural + audio/video + context), on-device inference, and privacy-preserving pipelines that link sensors to cloud services and agentic control loops.

Key components include model training and deployment platforms (e.g., Vertex AI for end-to-end model workflows), multimodal generative and perception models (e.g., Google Gemini) for interpreting complex neural and contextual inputs, and enterprise LLM services (e.g., Cohere, Claude) for personalization, command interpretation, and conversational feedback. Retrieval and knowledge augmentation layers (LlamaIndex) enable RAG-style personalization and long-term user models; no-/low-code agent design tools (MindStudio) speed prototyping of closed-loop interactions; developer ergonomics and embedded agents (Warp) accelerate integration into production code; and multi-agent orchestration frameworks (CrewAI) coordinate parallel control, monitoring, and safety agents.

Trends driving adoption include improved model efficiency that enables edge inference, standardized SDKs and data formats for sensor and neurodata interoperability, heightened regulatory and privacy requirements that demand on-device or private-cloud options, and the use of agent frameworks to manage real-time safety, adaptation, and explainability.

For practitioners, the landscape now emphasizes modular stacks (neural preprocessing, real-time decoders, retrieval-augmented personalization, agent orchestration, and observability), so teams can assemble compliant, latency-sensitive BCI applications without reinventing core infrastructure.
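The modular stack described above can be illustrated with a minimal sketch. Everything here is a hypothetical placeholder rather than any vendor's SDK: the function names (`preprocess`, `decode`, `safety_gate`), the single-channel window format, the amplitude threshold, and the confidence cutoff are all assumptions chosen for illustration. The toy decoder stands in for a trained model. The sketch only shows how preprocessing, decoding, a safety check, and latency observability might be kept as separate, swappable stages.

```python
import time
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple


def preprocess(window: Sequence[float]) -> float:
    """Remove the DC offset and return mean absolute amplitude as one feature."""
    mean = sum(window) / len(window)
    return sum(abs(x - mean) for x in window) / len(window)


def decode(feature: float, threshold: float = 0.5) -> Tuple[str, float]:
    """Toy threshold decoder (assumption): returns (command, confidence in [0, 1])."""
    command = "select" if feature >= threshold else "rest"
    confidence = min(1.0, abs(feature - threshold) / threshold)
    return command, confidence


def safety_gate(command: str, confidence: float, min_confidence: float = 0.6) -> str:
    """Fall back to the neutral command when the decoder is not confident enough."""
    return command if confidence >= min_confidence else "rest"


@dataclass
class Pipeline:
    """Chains the stages and records per-step latency for observability."""
    latencies_ms: List[float] = field(default_factory=list)

    def step(self, window: Sequence[float]) -> str:
        start = time.perf_counter()
        feature = preprocess(window)
        command, confidence = decode(feature)
        result = safety_gate(command, confidence)
        self.latencies_ms.append((time.perf_counter() - start) * 1000.0)
        return result


if __name__ == "__main__":
    pipeline = Pipeline()
    print(pipeline.step([1.0, -1.0, 1.0, -1.0]))  # strong signal
    print(pipeline.step([0.6, -0.6, 0.6, -0.6]))  # near threshold, gated
    print(f"worst-case latency: {max(pipeline.latencies_ms):.3f} ms")
```

Keeping each stage behind a plain function boundary is one way to let a team replace the toy decoder with an on-device model, or add a retrieval or agent layer after the safety gate, without touching the rest of the loop.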

Tool Rankings – Top 6

Vertex AI: Unified, fully managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.

Google Gemini: Google’s multimodal family of generative AI models and APIs for developers and enterprises.

Cohere: Enterprise-focused LLM platform offering private, customizable models, embeddings, retrieval, and search.

Claude: Anthropic's family of conversational and developer AI assistants for research, writing, code, and analysis.

LlamaIndex: Developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across enterprises.

MindStudio: No-code/low-code visual platform to design, test, deploy, and operate AI agents rapidly, with enterprise controls and a

Latest Articles (72)

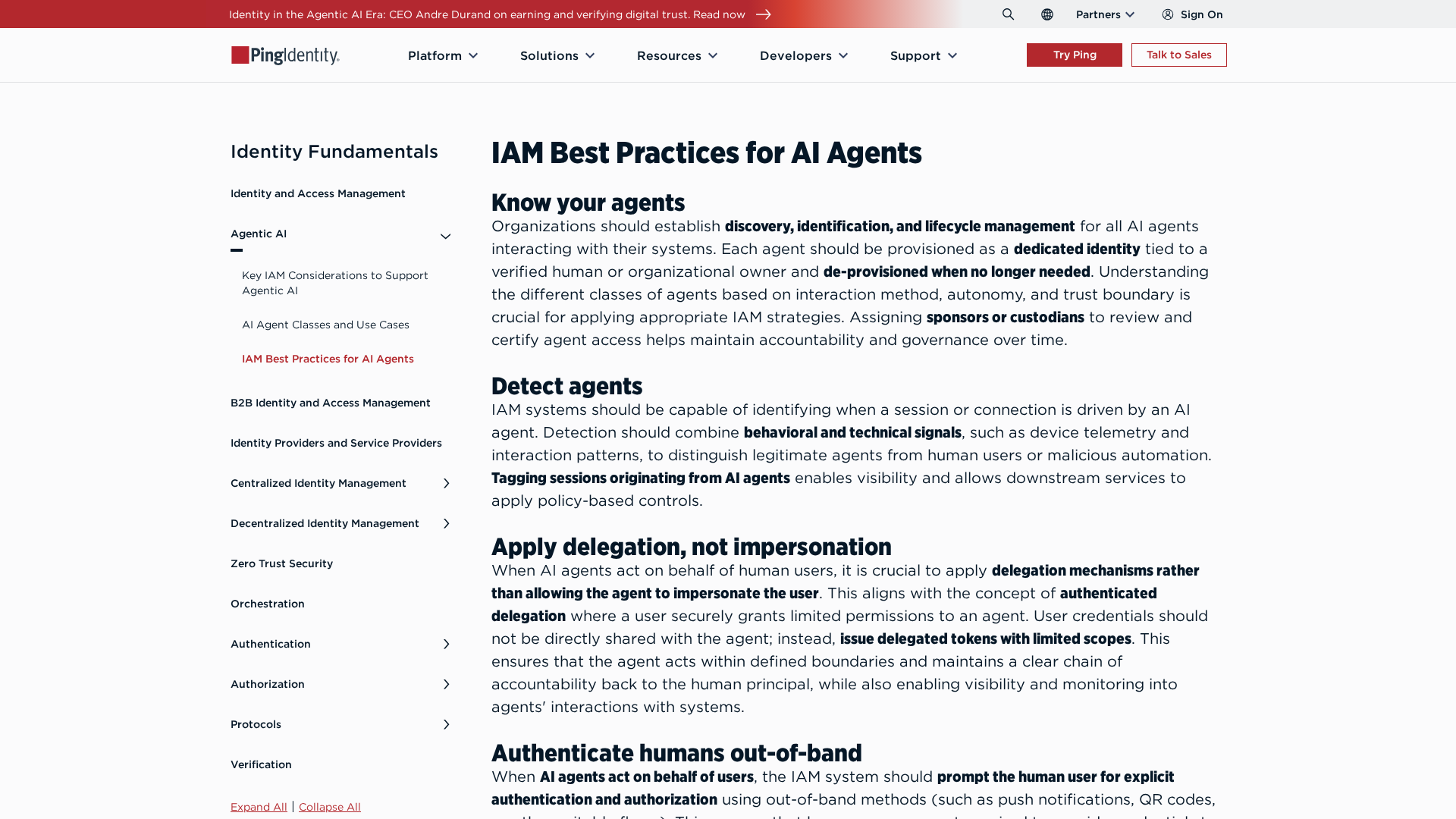

Best practices for securing AI agents with identity management, delegated access, least privilege, and human oversight.

A practical, step-by-step guide to fine-tuning large language models with open-source NLP tools.

Meta may partner with Sify to lease a 500 MW Visakhapatnam data center in a Rs 15,266 crore project linked to the Waterworth subsea cable.

Meta and Sify plan a 500 MW hyperscale data center in Visakhapatnam with the Waterworth subsea cable landing.

OpenAI rolls out global group chats in ChatGPT, supporting up to 20 participants in shared AI-powered conversations.