Topic Overview

This topic examines enterprise-grade GenAI development suites, notably Amazon’s Nova toolkit and comparable developer offerings from Anthropic and Google. It looks at how they map onto four practical categories: AI tool marketplaces, agent frameworks, AI data platforms, and GenAI test automation. As organizations move from experiments to production AI in 2025, they must choose between vendor-managed toolchains and open-source, composable stacks that prioritize governance, observability, and model lifecycle control.

Each category plays a distinct role. Agent frameworks (LangChain, Kore.ai, AutoGPT/AgentGPT) provide orchestration, state management, and multi-agent workflows. AI data platforms (OpenPipe, LlamaIndex) collect interaction logs, curate training datasets, and enable RAG and document-agent pipelines. Marketplaces and managed agent services (Agentverse, Synthreo/BotX, Yellow.ai) simplify deployment and connector management. GenAI test and quality tooling (Qodo/Codium, LangChain’s evaluation tools, OpenPipe’s evaluation hooks) drives automated validation, traceability, and SDLC governance. Code models and assistants (GitHub Copilot, StarCoder, Code Llama, Tabby) support developer productivity, domain platforms (Harvey for legal, Vogent for voice) cover specialized use cases, and security and compliance layers (Simbian, enterprise observability) are increasingly essential.

Enterprises choosing between a cloud-native toolkit such as Amazon Nova and the Anthropic or Google ecosystems should weigh integration with existing infrastructure, hybrid hosting, model choice and fine-tuning workflows, data governance, and test automation maturity. The dominant trend in 2025 is toward hybrid, interoperable stacks that combine vendor toolkits for scale with open-source frameworks for flexibility and auditability. As a result, governance, reproducible evaluation, and connector ecosystems have become the decisive selection criteria.

Tool Rankings – Top 6

Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

Enterprise AI agent platform for building, deploying and orchestrating multi-agent workflows with governance and observability.

Managed platform to collect LLM interaction data, fine-tune models, evaluate them, and host optimized inference.

Developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across enterprises.

Open-source, client-side AI coding agent that plans, executes and audits multi-step coding tasks.

Platform to build, deploy and run autonomous AI agents and automation workflows (self-hosted or cloud-hosted).

Latest Articles (164)

A comprehensive LangChain releases roundup detailing Core 1.2.6 and related updates across xAI, OpenAI, Classic, and tests.

In-depth look at Gemini 3 Pro benchmarks across reasoning, math, multimodal, and agentic capabilities with implications for building AI agents.

A step-by-step guide to building an AI-powered Reliability Guardian that reviews code locally and in CI with Qodo Command.

A developer chronicles switching to Zed on Linux, prototyping on a phone, and a late-night video correction.

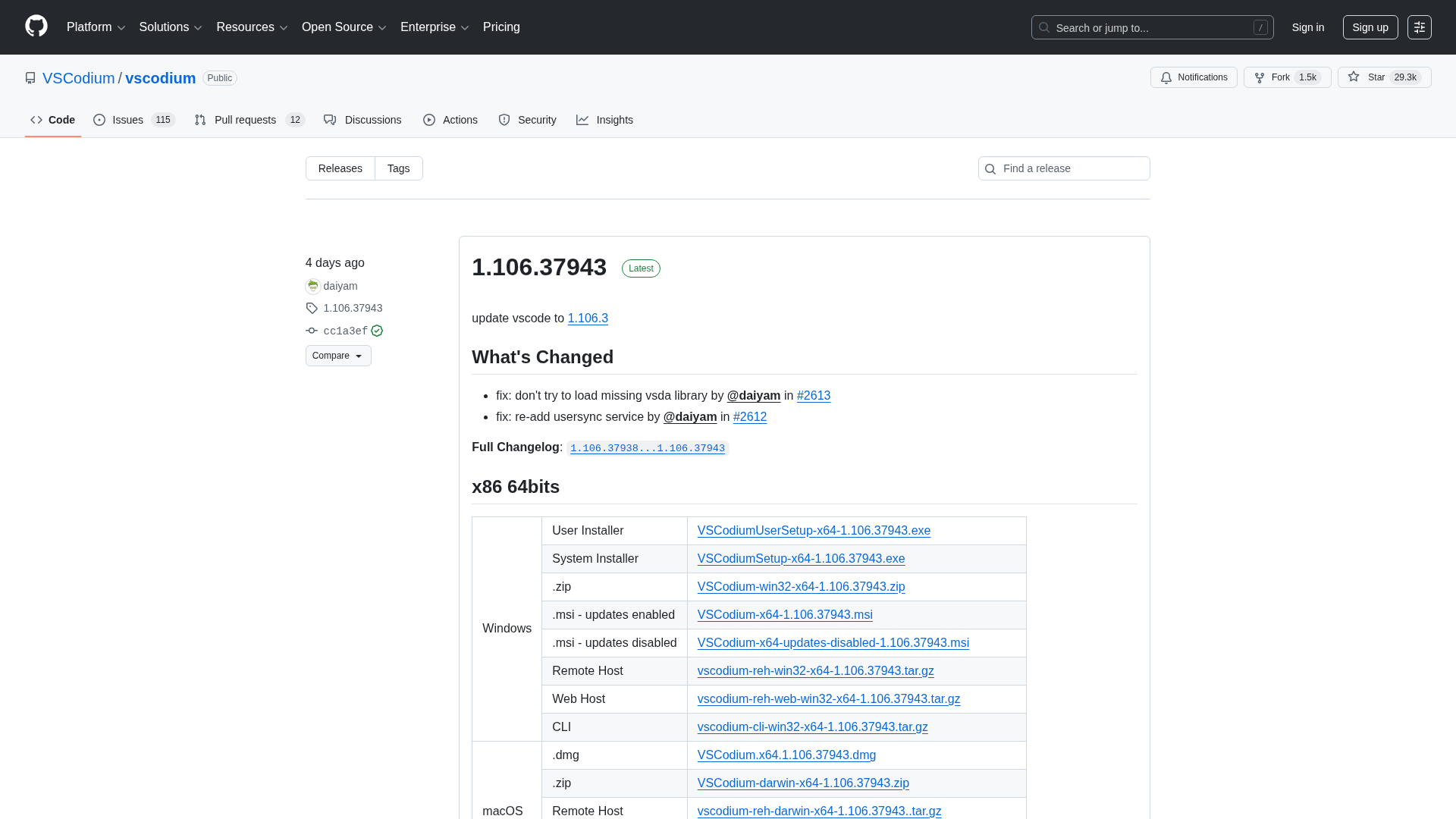

A comprehensive releases page for VSCodium with multi-arch downloads and versioned changelogs across 1.104–1.106 revisions.