Topic Overview

This topic covers how enterprises run large-scale generative AI (GenAI) workloads on specialized accelerators, namely AWS Trainium and Inferentia and wafer-scale systems such as Cerebras. It also covers how that hardware choice intersects with test automation, data platforms, and security and governance practices. As of 2026-01-21, organizations are prioritizing accelerator-backed deployments for three reasons: to reduce training cost, to scale high-throughput inference, and to meet the low-latency requirements of agentic and multimodal applications.

Operationalizing these platforms requires a full stack. It includes cloud-managed ML platforms (e.g., Vertex AI) that unify training, fine-tuning, deployment, and monitoring, and developer frameworks (LangChain) for assembling and testing agent workflows. It also includes GenAI test automation (KaneAI) that converts intents into repeatable validation and regression tests, plus governance and vendor-oversight tools (Monitaur) that enforce policies, monitor drift, and manage third-party models. Enterprise agent platforms (Kore.ai, Yellow.ai) and infrastructure providers (Xilos) handle orchestration and observability for multi-agent deployments. Conversational and developer models (the Claude family) illustrate the kinds of models these accelerators typically serve.

In practice, deploying on Trainium, Inferentia, or Cerebras often requires model compilation, runtime tuning, and integrated data pipelines. It also shifts some responsibilities from generic GPU stacks to accelerator-specific tooling. That raises the need for automated testing, dataset and version controls in AI data platforms, and continuous governance to satisfy security, privacy, and regulatory constraints. The most effective architectures combine accelerator-aware MLOps, end-to-end validation (GenAI test automation), and policy-centric governance to deliver performant, auditable GenAI services at enterprise scale.
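The compile-then-validate step is one place where automated testing pays off. After a model is compiled for an accelerator, its outputs can be checked against a reference run of the same inputs, such as a GPU run. The following is a minimal sketch, assuming both outputs are available as flat lists of floats. The function name `parity_report` and its tolerance defaults are hypothetical and are not part of any Neuron or Cerebras SDK. Appropriate tolerances depend on the numeric precision the compiled model uses.

```python
import math
from typing import Dict, List, Sequence, Union


def parity_report(
    reference: Sequence[float],
    candidate: Sequence[float],
    rel_tol: float = 1e-3,  # hypothetical default; tune per model and precision
    abs_tol: float = 1e-5,  # hypothetical default; guards comparisons near zero
) -> Dict[str, Union[bool, List[int], float]]:
    """Compare reference outputs (e.g., from a GPU run) with outputs from the
    accelerator-compiled model for the same inputs, element by element."""
    if len(reference) != len(candidate):
        raise ValueError("reference and candidate outputs differ in length")

    # Indices where the compiled model drifts beyond the allowed tolerance.
    mismatches = [
        i
        for i, (r, c) in enumerate(zip(reference, candidate))
        if not math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
    ]
    # Largest absolute deviation, useful for tracking drift across releases.
    max_abs_err = max(
        (abs(r - c) for r, c in zip(reference, candidate)), default=0.0
    )
    return {
        "passed": not mismatches,
        "mismatches": mismatches,
        "max_abs_err": max_abs_err,
    }


if __name__ == "__main__":
    ref = [0.1, 0.25, 0.65]
    print(parity_report(ref, [0.1001, 0.25, 0.6499], rel_tol=1e-2))
    print(parity_report(ref, [0.1, 0.30, 0.65], rel_tol=1e-2))
```

In a regression suite, a check like this would run on a fixed set of inputs after every recompilation. The build would fail when `passed` is false, and `max_abs_err` would be recorded so that gradual drift remains auditable.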

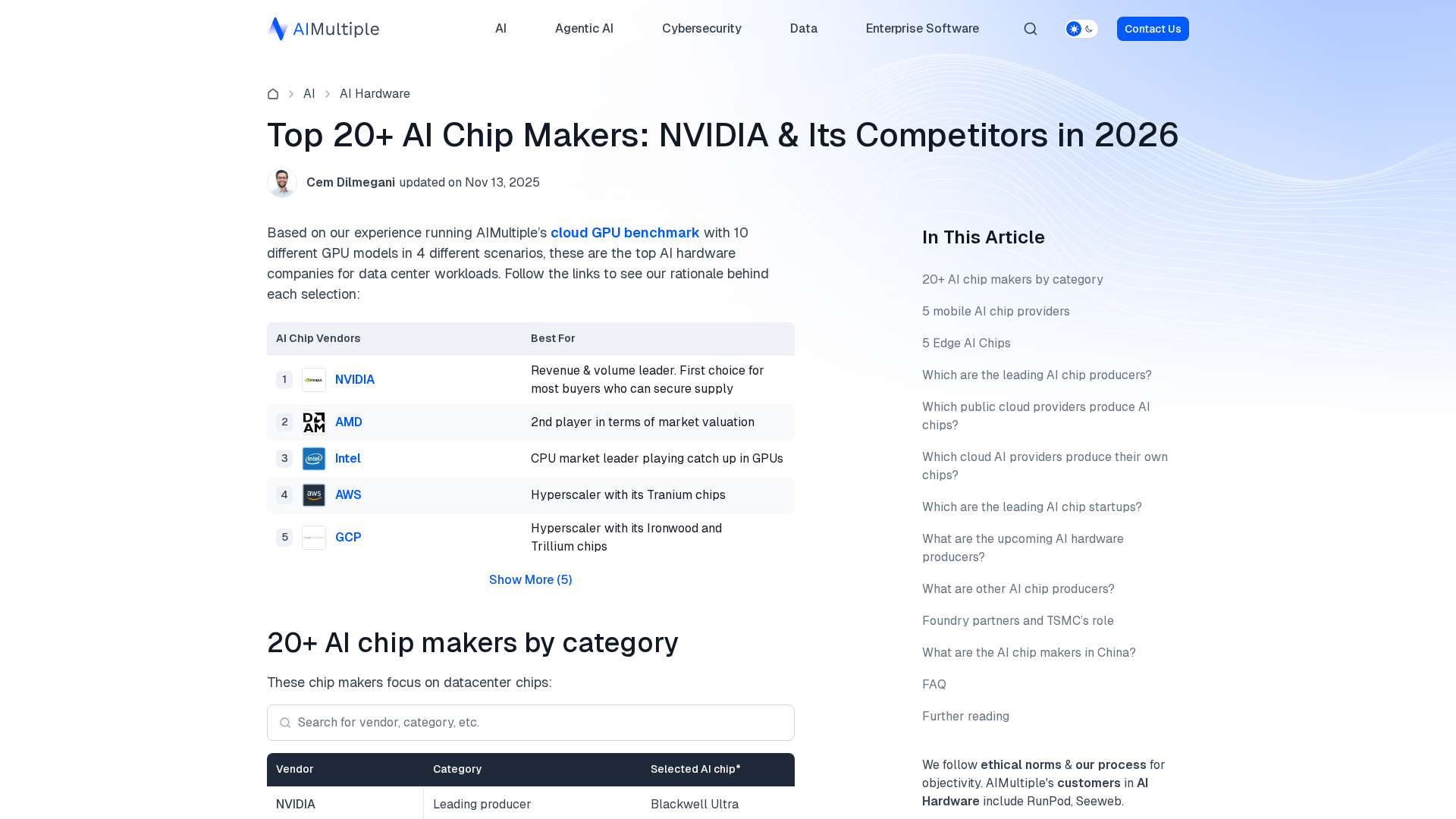

Tool Rankings – Top 6

Unified, fully-managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.

An open-source framework and platform to build, observe, and deploy reliable AI agents.

Insurance-focused enterprise AI governance platform centralizing policy, monitoring, validation, vendor governance and …

Enterprise AI agent platform for building, deploying and orchestrating multi-agent workflows with governance, observability …

Anthropic's Claude family: conversational and developer AI assistants for research, writing, code, and analysis.

KaneAI is a GenAI-native testing agent that plans, writes, and evolves end-to-end tests using natural language.

Latest Articles (85)

OpenAI’s bypass moment underscores the need for governance that survives inevitable user bypass and hardens system controls.

A call to enable safe AI use at work via sanctioned access, real-time data protections, and frictionless governance.

A real-world look at AI in SOCs, debunking myths and highlighting the human role behind automation with Bell Cyber experts.

Explores the human role behind AI automation and how Bell Cyber tackles AI hallucinations in security operations.

Identity won’t secure agentic AI; you need runtime visibility and action-based policy.