Topic Overview

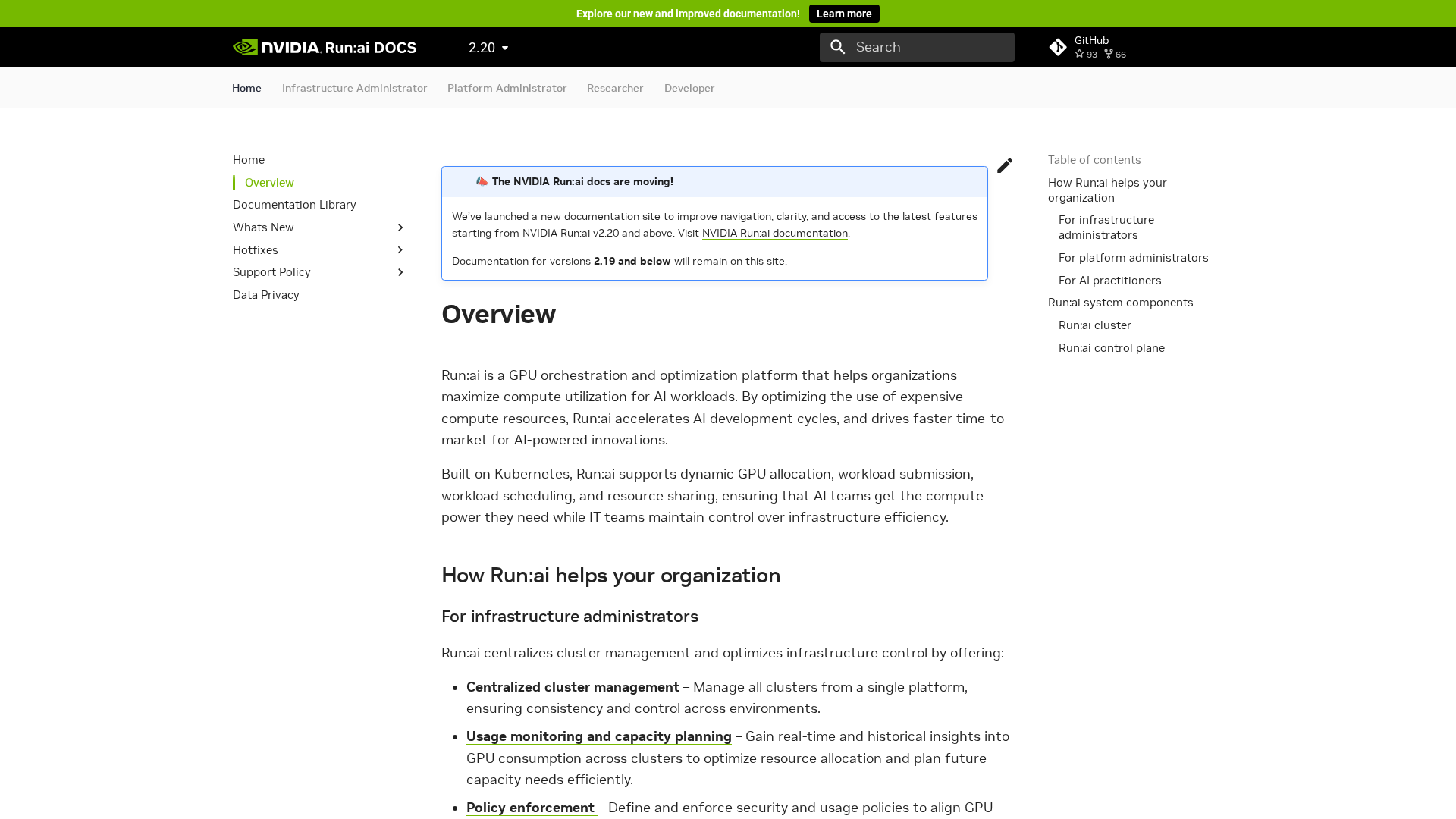

This topic covers the tooling and platform layer that helps teams control the rising operational cost of generative AI while streamlining reliable deployments. As organizations move from experimentation to production, inference, storage and orchestration costs—especially for GPU-backed models—become primary constraints. Cost-optimization and deployment tools address those constraints by combining GPU orchestration, model selection, data-platform integrations, deployment automation, and observability. Key players illustrate these roles: Run:ai provides Kubernetes-native GPU pooling and scheduling to increase utilization; Vertex AI offers an end-to-end managed cloud stack for training, fine-tuning and serving models; LangChain supplies engineering frameworks (including stateful constructs like LangGraph) to build, test and deploy agentic LLM applications; Cohere and Mistral AI offer enterprise-tuned models and production runtimes emphasizing efficiency, privacy and governance. Integrations between data platforms and model providers—e.g., Snowflake connecting to Anthropic-style models—shift inference closer to the data and enable cost-aware, data‑centric ML workflows. Emerging cost tooling (represented here by “Unicorne” and specialist cloud-cost platforms) focuses on rate limiting, model routing, spot/idle GPU scheduling, and billing transparency across multi-cloud and hybrid environments. Practically, this category intersects AI Tool Marketplaces (for model procurement and billing), AI Data Platforms (for vector search and in‑place inference), GenAI Test Automation (to validate cost/performance under load), and AI Governance Tools (for SLOs, access controls and auditability). For 2026 deployments, success depends on combining model efficiency (quantization, smaller distilled models), orchestration (GPU pooling, autoscaling), and tight data-to-model integration to minimize end‑to‑end cost without sacrificing reliability or compliance.

Tool Rankings – Top 5

Engineering platform and open-source frameworks to build, test, and deploy reliable AI agents.

Unified, fully-managed Google Cloud platform for building, training, deploying, and monitoring ML and GenAI models.

Kubernetes-native GPU orchestration and optimization platform that pools GPUs across on‑prem, cloud and multi‑cloud to提高

Enterprise-focused provider of open/efficient models and an AI production platform emphasizing privacy, governance, and

Enterprise-focused LLM platform offering private, customizable models, embeddings, retrieval, and search.

Latest Articles (48)

A comprehensive LangChain releases roundup detailing Core 1.2.6 and interconnected updates across XAI, OpenAI, Classic, and tests.

Cannot access the article content due to an access-denied error, preventing summarization.

A quick preview of POE-POE's pros and cons as seen in G2 reviews.

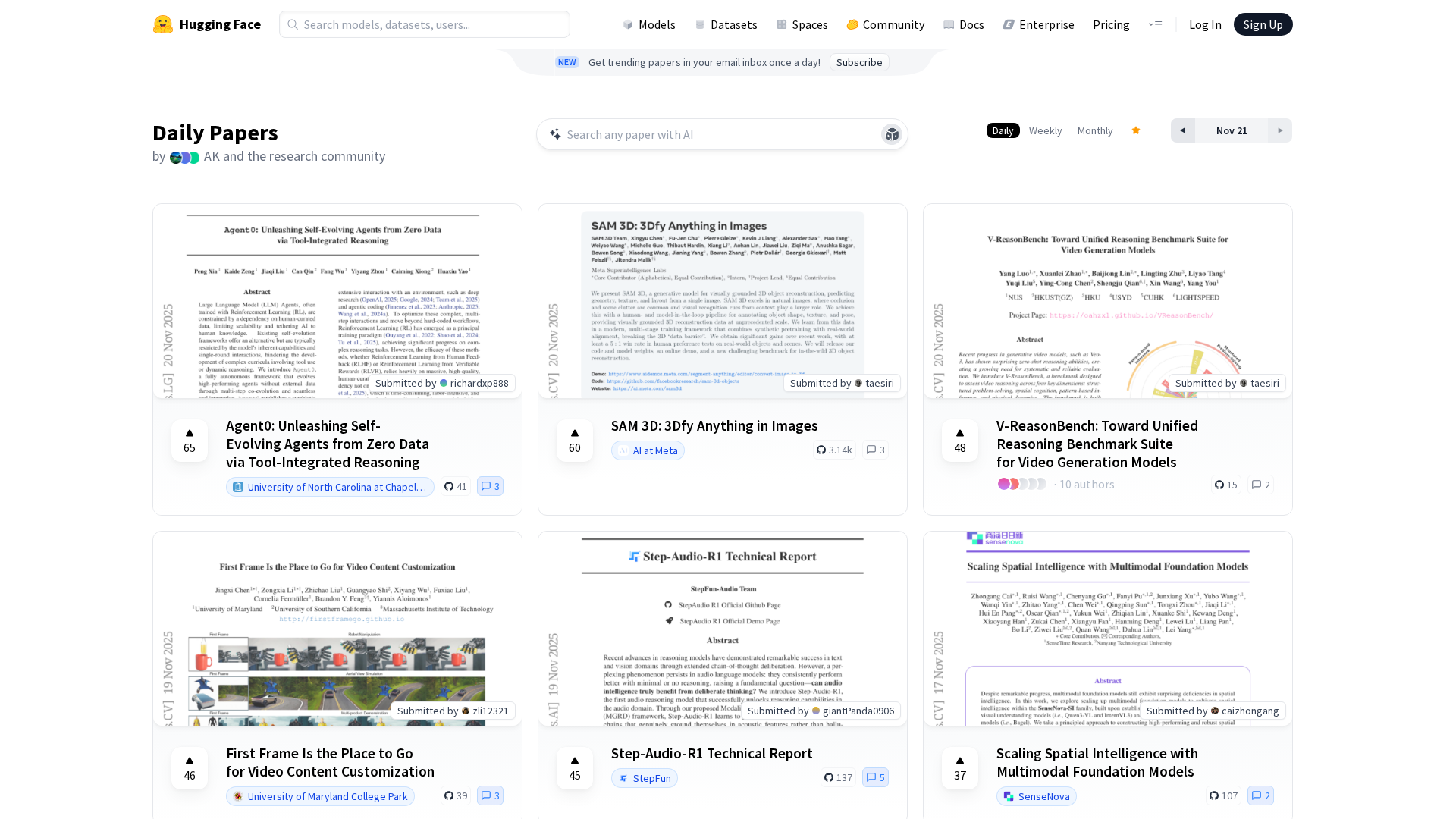

Get daily, curated trending ML papers delivered straight to your inbox.

Saudi xAI-HUMAIN launches a government-enterprise AI layer with large-scale GPU deployment and multi-year sovereignty milestones.