Topic Overview

This topic examines the growing ecosystem of hyperscale GPU and AI data-center providers, from specialist entrants (HUMAIN/xAI) and hyperscalers (Meta, Sify) to the major cloud rivals, and how they interlock with orchestration, data and developer tooling. As of 2025-11-22, demand for large-scale GPU capacity and low-latency model serving is driving investment in purpose-built facilities, software-defined routing and rights-cleared data pipelines.

Two technical trends stand out. The first is GPU pooling and multi-cloud orchestration: Run:ai provides Kubernetes-native GPU orchestration that pools GPUs across on-prem and cloud, while FlexAI routes workloads to optimal compute across heterogeneous hardware. The second is integrated data platforms for model training and governance: OpenPipe manages LLM interaction data and supports fine-tuning, evaluation and hosting while emphasizing rights-cleared datasets. These platforms reflect a shift from raw capacity to managed, compliance-aware AI stacks.

Developer productivity and automation matter too. Agentic tools such as Warp's Agentic Development Environment and Adept's ACT-1 accelerate iterative model development and deployment and automate complex workflows inside software interfaces, reducing friction between engineers and hyperscale infrastructure.

The result is a layered market where providers compete on physical scale, specialized hardware and the software glue that pools, routes and governs compute and data. Rights-cleared data platforms and orchestration tools are increasingly central to cost control, compliance and reproducibility. For enterprises and AI teams, the practical implication is that procurement decisions now require joint evaluation of data-governance capabilities, orchestration flexibility and integrations with developer tooling, not just raw GPU availability.

Tool Rankings – Top 5

Run:ai – Kubernetes-native GPU orchestration and optimization platform that pools GPUs across on-prem, cloud and multi-cloud to improve …

FlexAI – Software-defined, hardware-agnostic AI infrastructure platform that routes workloads to optimal compute across cloud and …

OpenPipe – Managed platform to collect LLM interaction data, fine-tune models, evaluate them, and host optimized inference.

Warp – Agentic Development Environment (ADE): a modern terminal and IDE with built-in AI agents to accelerate developer flows.

Adept – Agentic AI (ACT-1) that observes and acts inside software interfaces to automate multistep workflows for enterprises.

Latest Articles (59)

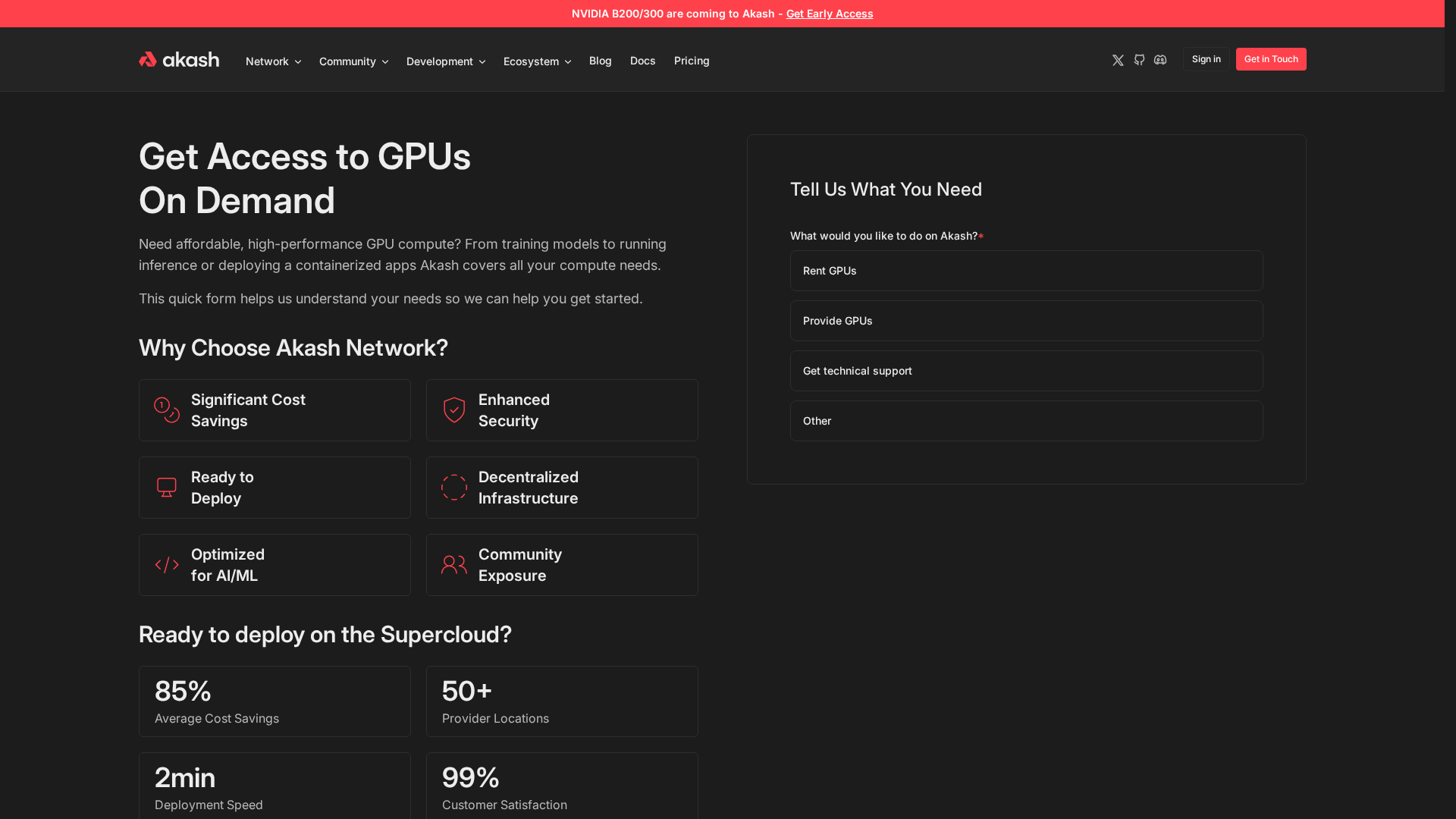

A deep dive into Akash Network, the decentralized cloud marketplace challenging traditional cloud models.

Analyzes data centers' AI-driven demand, energy and water needs, policy barriers, and routes to equitable digital infrastructure.

Nokia unveils Autonomous Networks Fabric to accelerate AI-powered, zero-touch network automation.

HUMAIN teams with xAI to develop next-generation AI compute power, aiming to accelerate AI workloads.

Affordable, on-demand GPUs for ML training, inference, and containerized apps via Akash Network.