Topic Overview

This topic surveys the landscape of cloud GPU and AI infrastructure providers in 2026. It covers hyperscalers (AWS, GCP, Azure), specialist GPU hosts such as CoreWeave, and emerging decentralized-infrastructure and AI data platform patterns. Demand for large-scale model training, low-latency inference, and governed RAG deployments has produced a mix of options: hyperscalers for scale and ecosystem integration, specialist hosts for tailored GPU choices and competitive pricing, and decentralized or self-hosted stacks for data locality and compliance.

Operational tooling and developer frameworks sit on top of this infrastructure. StationOps provides an AWS-focused AI DevOps workflow layer that automates provisioning and runtime configuration. LangChain and LlamaIndex are foundational for building, testing, and deploying LLM-powered agents and retrieval-augmented generation (RAG) pipelines. These are key capabilities for AI data platforms that ingest, index, and serve enterprise content. Autonomous-agent platforms such as AutoGPT and AgentGPT illustrate use cases that require continuous orchestration and cost-aware GPU scheduling. For developer productivity, Tabby, CodeGeeX, and Tabnine represent coding-assistant approaches that range from open-source, local-first deployments to enterprise-focused, privately hosted solutions.

The key trade-offs in 2026 remain cost versus performance, hardware specialization (H100/A100 versus newer accelerators), latency and data colocation, and governance of sensitive data. The most important infrastructure decisions center on three things: the workload profile (training, large-scale inference, or agent orchestration), integration with AI data platforms for RAG and observability, and the option to self-host or use decentralized networks where compliance or vendor lock-in is a concern. This synthesis helps teams map provider choice to technical, operational, and regulatory needs when deploying production AI at scale.
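The provider trade-offs described above can be sketched as a simple rule of thumb. This is a minimal, hypothetical illustration: the `Requirements` fields, the function name `suggest_provider_category`, the rule ordering, and the category labels are all assumptions made for this example. They are not a published methodology or part of any vendor's tooling.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical workload constraints; field names are illustrative only."""
    strict_data_locality: bool = False  # compliance or data-residency rules
    avoid_lock_in: bool = False         # concern about vendor lock-in
    needs_ecosystem: bool = False       # wants tight integration with managed cloud services
    needs_specific_gpu: bool = False    # wants a particular accelerator model
    price_sensitive: bool = False       # GPU cost outweighs integration convenience


def suggest_provider_category(req: Requirements) -> str:
    """Map requirements to a provider category using the trade-offs in the overview.

    The rule order (compliance first, then ecosystem, then GPU choice or price)
    is an assumption made for this sketch.
    """
    # Data locality, compliance, or lock-in concerns favor self-hosted/decentralized stacks.
    if req.strict_data_locality or req.avoid_lock_in:
        return "self-hosted / decentralized"
    # Ecosystem integration is the main draw of hyperscalers.
    if req.needs_ecosystem:
        return "hyperscaler"
    # Tailored GPU choices and competitive pricing favor specialist hosts.
    if req.needs_specific_gpu or req.price_sensitive:
        return "specialist GPU host"
    # With no stronger signal, default to a hyperscaler for scale.
    return "hyperscaler"


if __name__ == "__main__":
    # A price-sensitive team with no compliance constraints.
    print(suggest_provider_category(Requirements(price_sensitive=True)))
```

For example, `Requirements(price_sensitive=True)` maps to "specialist GPU host". The sketch deliberately leaves out the workload profile (training, inference, or agent orchestration). A real evaluation would weigh it alongside these flags rather than rely on a fixed rule order.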

Tool Rankings – Top 6

StationOps: The AI DevOps Engineer for AWS.

LangChain: An open-source framework and platform to build, observe, and deploy reliable AI agents.

AutoGPT: A platform to build, deploy, and run autonomous AI agents and automation workflows, either self-hosted or cloud-hosted.

AgentGPT: A browser-based platform to create and deploy autonomous AI agents from simple, user-defined goals.

LlamaIndex: A developer-focused platform to build AI document agents, orchestrate workflows, and scale RAG across the enterprise.


Tabby: An open-source, self-hosted AI coding assistant with IDE extensions, model serving, and local-first or cloud deployment.

Latest Articles (49)

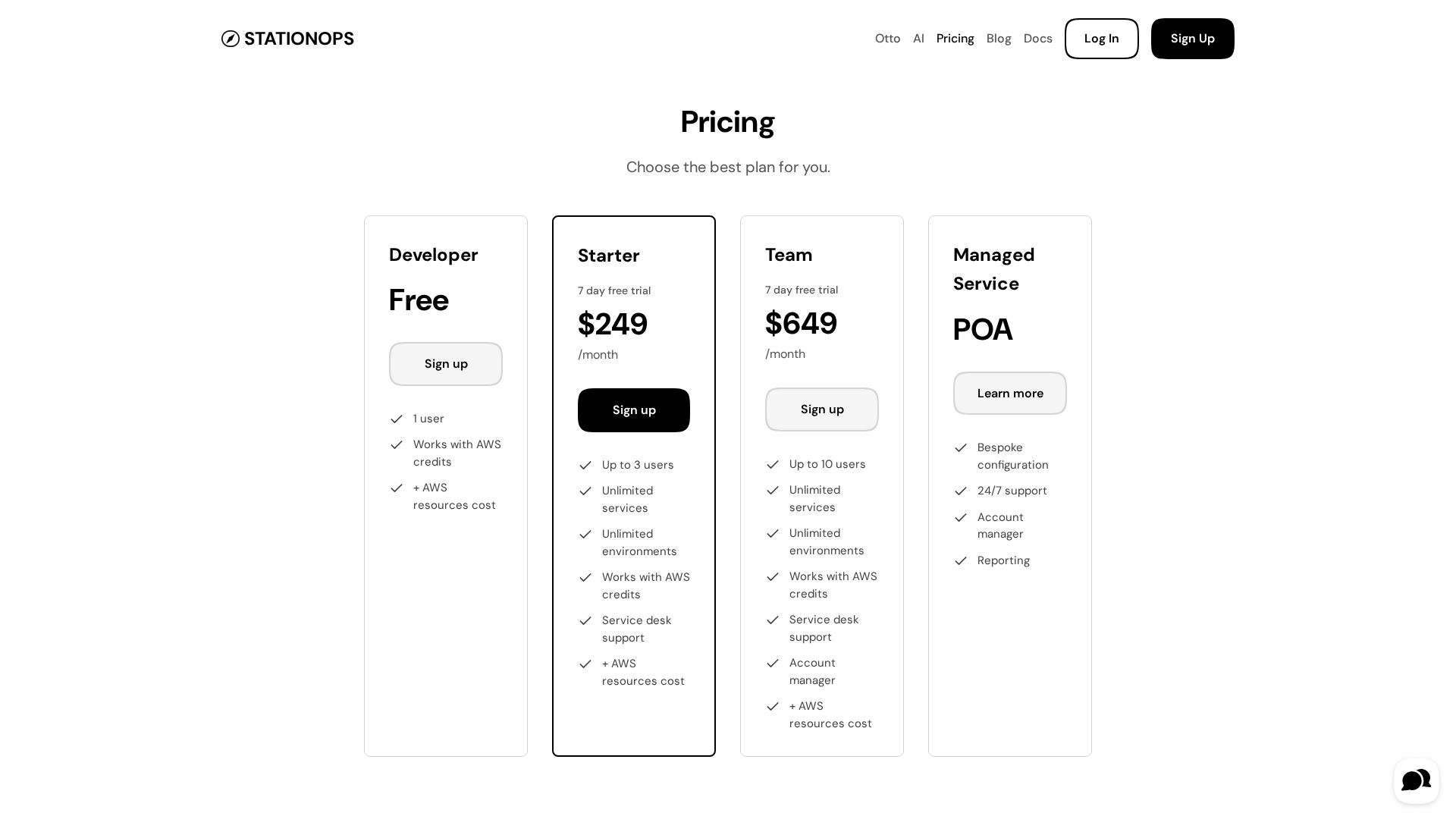

Pricing details for StationOps' AWS Internal Developer Platform, including tiers and features.

An AWS-centric comparison of StationOps and Netlify as internal developer platforms.

A guided look at StationOps' internal developer platform for AWS, which enables governed, self-serve environments at scale.

A concise look at how an internal developer platform on AWS accelerates delivery with governance and self-service.

A managed internal developer platform for AWS that simplifies provisioning, deployment, and governance to accelerate software delivery.